Code Quality

Nov 26, 2025

How Automated Code Quality Analysis Reduces Technical Debt

Sonali Sood

Founding GTM, CodeAnt AI

Technical debt is the silent tax on every feature you ship. It's the accumulated cost of shortcuts, quick fixes, and "we'll clean this up later" decisions that slow your team down more with each passing sprint.

Automated code quality analysis changes the equation by catching these issues before they compound. This guide covers how automated analysis detects and prevents technical debt, the types of debt it catches, and how to integrate it into your workflow for measurable improvements in code health.

What is Technical Debt

Automated code quality analysis reduces technical debt by using tools to find issues like code smells, security flaws, and complexity in real time. These tools enable early fixes through automated code reviews, refactoring suggestions, and quality gates in CI/CD pipelines. The result? Small problems get caught before they grow into costly debt, and code stays clean, maintainable, and aligned with your team's standards.

Technical debt is the accumulated cost of shortcuts and deferred improvements in your codebase. Think of it like financial debt: you borrow time now by shipping "good enough" code, but you pay interest later when that code becomes harder to understand or modify.

The metaphor holds up well. A small shortcut today might save a few hours, but six months later, that same shortcut could cost days of debugging. The longer debt sits unaddressed, the more expensive it becomes to pay down.

Why Technical Debt Builds Up in Software Projects

Even well-intentioned teams accumulate technical debt. It's rarely a conscious choice to write bad code—it's usually the result of competing pressures and imperfect processes.

Deadline pressure and shortcuts

Tight timelines force developers to ship code that works but isn't ideal. The plan is always to "fix it later," but later rarely comes. New features take priority, and those quick fixes become permanent fixtures.

Inconsistent code reviews

Manual reviews vary wildly depending on who's reviewing and how much time they have. A senior engineer with a light workload might catch subtle issues, while an overloaded teammate might approve changes with a quick glance.

Missing or unenforced standards

Without documented coding standards—or with standards that exist only on paper—teams end up with fragmented codebases. One developer prefers tabs, another uses spaces. One module follows clean architecture, another is a tangled mess.

Outdated dependencies and legacy code

Aging libraries and inherited codebases are debt magnets. Teams often avoid updating dependencies because "it works," but those outdated packages accumulate security vulnerabilities and compatibility issues that eventually demand attention.

How Automated Code Quality Analysis Detects Technical Debt

Automated analysis tools scan your codebase continuously, surfacing debt that would otherwise go unnoticed. They catch issues that humans miss—not because humans aren't capable, but because humans get tired, distracted, and busy.

Scanning for code complexity and maintainability

Automated tools measure how difficult code is to understand and modify. They look at metrics like cyclomatic complexity (the number of linearly independent paths through a piece of code) and maintainability index (a composite score derived from Halstead volume, cyclomatic complexity, and lines of code).

When these metrics exceed thresholds, the tool flags the code for review. You might not notice that a function has grown to 15 nested conditionals, but the scanner will.
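To make the threshold idea concrete, here is a minimal sketch of a complexity check for Python source, built on the standard-library `ast` module. It approximates cyclomatic complexity as one plus the number of decision points. The function names and the default threshold of 10 are assumptions for illustration, not the behavior of any particular tool.

```python
import ast

# Node types that each add one decision point (one extra path through the code).
_BRANCH_NODES = (
    ast.If, ast.For, ast.AsyncFor, ast.While,
    ast.ExceptHandler, ast.IfExp, ast.comprehension,
)


def cyclomatic_complexity(func: ast.AST) -> int:
    """Approximate McCabe complexity: 1 + number of decision points."""
    score = 1
    for node in ast.walk(func):
        if isinstance(node, _BRANCH_NODES):
            score += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two short-circuit branches.
            score += len(node.values) - 1
    return score


def flag_complex_functions(source: str, threshold: int = 10) -> list[tuple[str, int]]:
    """Return (name, score) for every function whose score exceeds the threshold."""
    flagged = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = cyclomatic_complexity(node)
            if score > threshold:
                flagged.append((node.name, score))
    return flagged
```

Production analyzers count more constructs and handle more languages, but the principle is the same: compute a score per function and flag anything above an agreed limit.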

Identifying duplication and code smells

Copy-paste programming is one of the fastest ways to accumulate debt. Automated tools detect duplicated logic across your codebase and flag common anti-patterns—those recurring code structures that signal deeper design problems.

Code smells like overly long methods, excessive parameters, or tightly coupled classes often indicate areas where refactoring would pay dividends.
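One simple way to picture duplicate detection is hashing sliding windows of normalized lines and reporting any hash that appears more than once. The sketch below assumes a hypothetical `find_duplicate_blocks` helper and a four-line window. Real tools usually compare tokens or syntax trees, so they also catch copies with renamed variables.

```python
import hashlib
from collections import defaultdict


def find_duplicate_blocks(files: dict[str, str], window: int = 4) -> dict[str, list[tuple[str, int]]]:
    """Map the hash of each repeated `window`-line block to its (file, line) locations."""
    seen = defaultdict(list)
    for path, text in files.items():
        # Normalize: strip indentation and drop blank lines, keeping original line numbers.
        lines = [
            (number, line.strip())
            for number, line in enumerate(text.splitlines(), start=1)
            if line.strip()
        ]
        for start in range(len(lines) - window + 1):
            chunk = lines[start:start + window]
            joined = "\n".join(content for _, content in chunk)
            digest = hashlib.sha256(joined.encode("utf-8")).hexdigest()
            seen[digest].append((path, chunk[0][0]))
    # Keep only blocks that occur in more than one place.
    return {digest: locations for digest, locations in seen.items() if len(locations) > 1}
```

A larger window reduces noise from trivially similar lines, while a smaller one catches shorter copied fragments.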

Detecting security vulnerabilities and secrets

Security debt is particularly dangerous because it carries risk beyond just maintenance costs. Automated scanners find hardcoded credentials, SQL injection vulnerabilities, insecure configurations, and other security-related issues.

These tools catch the API key someone accidentally committed or the input validation that got skipped during a rushed release.
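As a rough illustration of how secret detection works, the sketch below scans text line by line with two regular expressions. One targets the widely documented `AKIA` prefix of AWS access key IDs. The other is a generic, illustrative pattern for quoted values assigned to credential-like names. Both patterns and the `scan_for_secrets` name are assumptions for this example. Dedicated scanners ship far larger rule sets and add entropy checks.

```python
import re

# Illustrative patterns only; real scanners maintain much larger rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_credential": re.compile(
        r"(?i)(api[_-]?key|secret|password|token)\b\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_for_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a pattern."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings
```

Regex rules like these produce false positives, so teams typically pair them with an allowlist for test fixtures and placeholder values.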

Flagging dependency risks and misconfigurations

Your code doesn't exist in isolation—it depends on dozens or hundreds of external packages. Automated analysis checks for outdated or vulnerable dependencies and infrastructure misconfigurations that could cause problems in production.
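A dependency check boils down to comparing the versions you have pinned against the versions known to be safe. The sketch below assumes a requirements-style list of `name==version` pins and a hypothetical `min_safe` mapping standing in for an advisory feed. It handles only plain numeric versions. Real scanners query vulnerability databases and understand full version specifiers.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn '1.10.2' into (1, 10, 2) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def find_vulnerable_pins(requirements: str, min_safe: dict[str, str]) -> list[str]:
    """Flag pinned packages that are older than the minimum safe version."""
    flagged = []
    for raw in requirements.splitlines():
        line = raw.split("#", 1)[0].strip()  # ignore comments
        if "==" not in line:
            continue  # this sketch only checks exact pins
        name, version = (part.strip() for part in line.split("==", 1))
        safe = min_safe.get(name.lower())
        if safe and parse_version(version) < parse_version(safe):
            flagged.append(f"{name}=={version} (upgrade to >= {safe})")
    return flagged
```

Comparing integer tuples rather than strings matters here: as strings, "1.10.0" sorts before "1.9.0", which would produce wrong results.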

Types of Technical Debt that Automated Analysis Catches

Technical debt comes in several forms, and automated tools can identify most of them. Understanding these categories helps you prioritize what to address first.

Security debt

Unpatched vulnerabilities, exposed secrets, and insecure configurations that create risk. Security debt carries the highest urgency because it can lead to breaches.

Code quality debt

Complex functions, duplicated logic, and poor naming conventions that slow comprehension. Code quality debt is the most common form and the easiest to accumulate.

Architectural debt

Tightly coupled modules, circular dependencies, and violations of design principles. Architectural debt is harder to fix because changes ripple across the system.

Documentation debt

Missing or outdated comments, README files, and API documentation. While often overlooked, documentation debt slows onboarding and increases the risk of misunderstanding.

Dependency debt

Outdated packages, deprecated libraries, and untracked third-party components. Dependency debt grows silently until a security advisory or breaking change forces action.

| Debt Type | Description | Example |
| --- | --- | --- |
| Security debt | Vulnerabilities left unaddressed | Hardcoded API keys |
| Code quality debt | Hard-to-maintain code patterns | Duplicated business logic |
| Architectural debt | Structural design issues | Circular module dependencies |
| Documentation debt | Missing or stale documentation | Outdated API docs |
| Dependency debt | Unmanaged external libraries | Deprecated npm packages |

Benefits of Automating Code Quality Analysis

Automation transforms code quality from a sporadic concern into a continuous practice. The benefits compound over time as teams build healthier habits.

Objective and consistent reviews

Every commit gets evaluated against the same standards. There's no variation based on reviewer mood, workload, or experience level. The rules apply equally to the intern's first PR and the principal engineer's refactor.

Faster developer feedback

Issues surface immediately in pull requests rather than weeks later during debugging. When developers get feedback while the code is still fresh in their minds, fixes are faster and less frustrating.

Lower manual review burden

Human reviewers can focus on what humans do best: evaluating business logic, questioning architectural decisions, and mentoring junior developers. They don't have to spend time checking for consistent formatting or catching obvious security issues.

Clear visibility into code health

Dashboards and reports give leadership a real-time view of codebase quality trends. Instead of vague concerns about "tech debt," teams can point to specific metrics and track improvement over time.

Integrating Automated Analysis into Your CI/CD Workflow

The most effective analysis runs on its own, without developers needing to remember to trigger it. Integration into existing workflows is key.

Pull request reviews and pre-merge checks

Analysis runs automatically when developers open PRs. Critical issues can block merges entirely, while warnings appear as comments for the developer to address. Problems get caught before they reach the main branch.
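The block-or-comment behavior described above can be sketched as a small gate function. The `Finding` shape, the severity labels, and the `evaluate_gate` name are assumptions for illustration. In a real pipeline the findings would come from your analysis tool and the comments would be posted through your Git host.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    path: str
    line: int
    severity: str  # assumed labels: "critical" or "warning"
    message: str


# Severities that should fail the check and block the merge.
BLOCKING = {"critical"}


def evaluate_gate(findings: list[Finding]) -> tuple[int, list[str]]:
    """Return (exit_code, review_comments) for a pull request."""
    blockers = [f for f in findings if f.severity in BLOCKING]
    comments = [
        f"{f.path}:{f.line} [{f.severity}] {f.message}"
        for f in findings
        if f.severity not in BLOCKING
    ]
    # A non-zero exit code is what makes the CI job fail.
    return (1 if blockers else 0), comments
```

A CI step would pass the returned code to `sys.exit`, so a single critical finding fails the job while warnings only surface as comments.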

Pipeline integration for continuous scanning

Beyond PR checks, scheduled scans monitor the entire codebase for regressions and drift. Even code that hasn't changed recently gets evaluated against current standards and security databases.

Enforcing organization-specific coding standards

Generic rules aren't enough—teams can configure custom rulesets to match their conventions and compliance requirements. Platforms like CodeAnt AI allow this customization while maintaining consistency across projects.
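What a custom rule looks like depends entirely on the platform, so treat the following as a generic sketch rather than any product's rule format. It enforces one hypothetical team convention, snake_case function names in Python, using the standard-library `ast` module.

```python
import ast
import re

# Hypothetical team convention: function names must be snake_case.
SNAKE_CASE = re.compile(r"^_*[a-z][a-z0-9_]*$")


def check_function_names(source: str) -> list[str]:
    """Return one violation message per function that breaks the convention."""
    violations = []
    for node in ast.walk(ast.parse(source)):
        is_function = isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
        if is_function and not SNAKE_CASE.match(node.name):
            violations.append(
                f"line {node.lineno}: function '{node.name}' is not snake_case"
            )
    return violations
```

Encoding a convention as a check like this is what moves a standard from "exists on paper" to "enforced on every commit."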

How Continuous Analysis Prevents New Technical Debt

Detecting existing debt is valuable, but preventing new debt from accumulating is even more powerful. Continuous analysis shifts the focus from cleanup to prevention.

Real-time detection at the pull request stage

Catching issues before code merges stops new debt from entering the main branch. It's far easier to fix a problem in a PR than to track it down months later when it's causing production issues.

Automated fix suggestions and recommendations

AI-powered tools can suggest or auto-apply fixes, reducing friction for developers. Instead of just flagging "this function is too complex," the tool might propose a specific refactoring approach.

Ongoing monitoring across the entire codebase

Scheduled scans catch regressions and drift even in unchanged files. Security vulnerabilities in dependencies can appear at any time, and continuous monitoring ensures you know about them quickly.

Tracking Technical Debt Reduction with Code Health Metrics

What gets measured gets managed. Tracking the right metrics helps teams demonstrate progress and justify continued investment in code health.

Measuring complexity and maintainability trends

Track how average complexity scores and maintainability indexes change sprint over sprint. Falling complexity and a rising maintainability index indicate that refactoring efforts are paying off.
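Here is a minimal sketch of that kind of trend tracking, assuming you already export per-function complexity scores for each sprint. The `sprint_trend` name and the input shape are hypothetical.

```python
from statistics import mean


def sprint_trend(scores_by_sprint: dict[str, list[int]]) -> list[tuple[str, float, float]]:
    """Return (sprint, average_score, change_vs_previous_sprint) in the order given."""
    rows = []
    previous = None
    for sprint, scores in scores_by_sprint.items():
        average = mean(scores)
        # The first sprint has nothing to compare against, so its change is zero.
        delta = 0.0 if previous is None else average - previous
        rows.append((sprint, round(average, 2), round(delta, 2)))
        previous = average
    return rows
```

A negative change means average complexity dropped relative to the previous sprint. Averages can hide a few very complex functions, so it helps to track the worst offenders alongside the mean.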

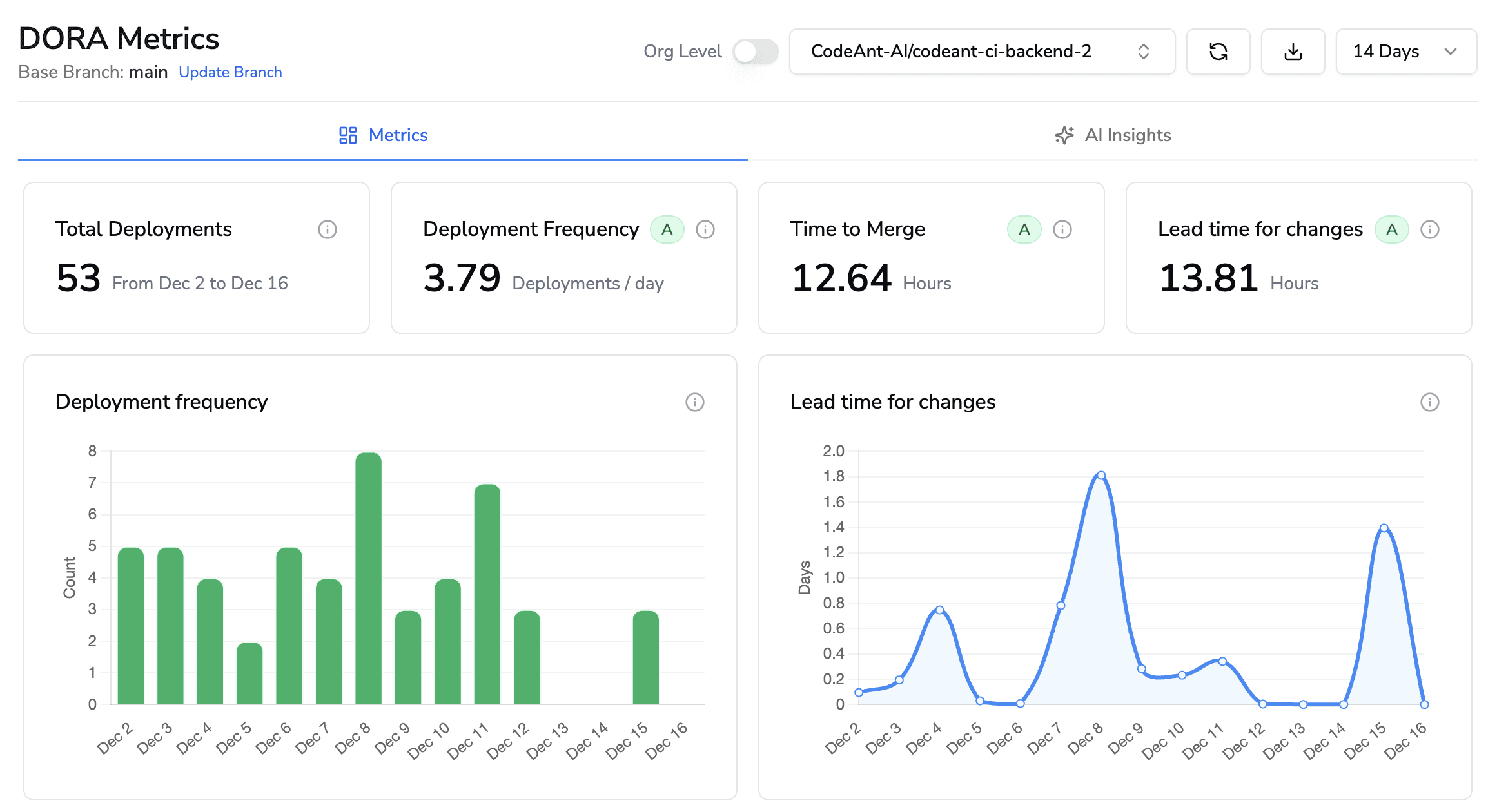

Using DORA metrics to monitor release reliability

DORA metrics (deployment frequency, lead time for changes, change failure rate, and time to restore service) connect code health to business outcomes. Teams with less technical debt typically ship more frequently with fewer failures.
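For a sense of how these four numbers are derived, the sketch below computes them from a list of deployment records. The `Deployment` fields and the `dora_summary` name are assumptions. In practice the timestamps come from your version control, CI/CD, and incident tooling, and teams differ on exactly how they define each metric.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass(frozen=True)
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None  # set once a failed deploy is recovered


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_summary(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Summarize the four DORA metrics over a reporting period."""
    if not deploys or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    failures = [d for d in deploys if d.failed]
    restore = [_hours(d.restored_at - d.deployed_at) for d in failures if d.restored_at]
    lead = [_hours(d.deployed_at - d.committed_at) for d in deploys]
    return {
        "deploys_per_day": len(deploys) / period_days,
        "median_lead_time_hours": median(lead),
        "change_failure_rate": len(failures) / len(deploys),
        "mean_time_to_restore_hours": sum(restore) / len(restore) if restore else 0.0,
    }
```

The median is used for lead time so that one unusually slow change does not dominate the figure.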

Reporting code health to engineering leadership

Dashboards and trend reports help communicate technical debt status to stakeholders. CodeAnt AI provides unified visibility for this purpose, making it easier to have data-driven conversations about code quality investments.

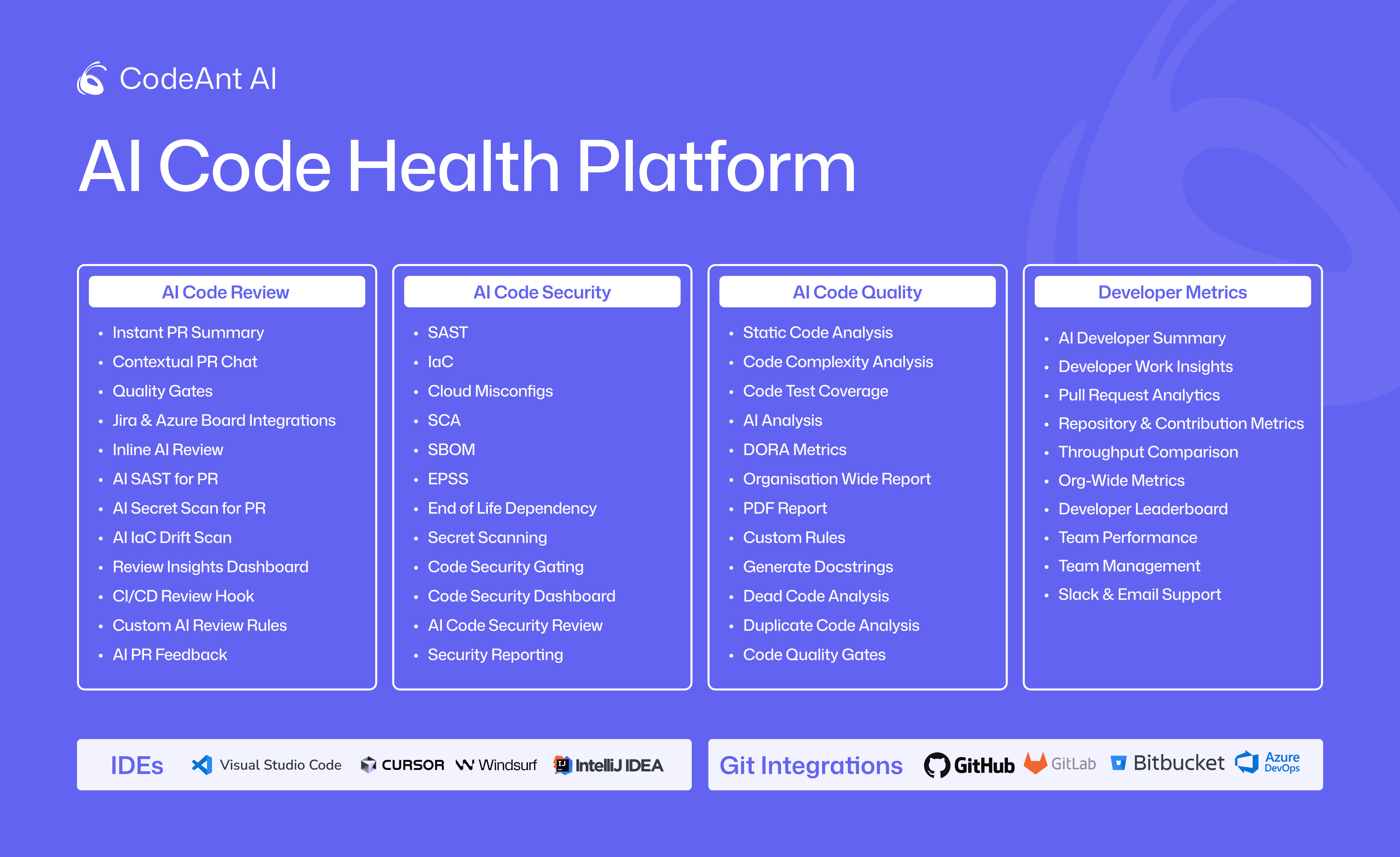

Unifying Code Quality, Security, and Reviews in One Platform

Juggling multiple point solutions creates its own overhead. A unified platform brings together PR reviews, security scanning, quality metrics, and developer productivity insights in a single view.

Single source of truth: one dashboard for all code health signals

Reduced tool fatigue: no juggling separate security, quality, and review tools

Consistent enforcement: same standards applied across reviews, scans, and metrics

CodeAnt AI takes this unified approach, combining automated code review, security scanning, and quality tracking into one platform that understands your codebase and helps your engineers move faster with confidence.

Start a free 14-day trial of CodeAnt AI and see unified code quality analysis across your entire development lifecycle.