Dec 13, 2025

The Truth About AI Code Review Accuracy in 2026

Sonali Sood

Founding GTM, CodeAnt AI

Eighty-four percent of developers now use AI in their workflow. Only a third trust the accuracy of what it produces. That gap tells you everything about where AI code review stands in 2026.

The frustration is real: AI suggestions that look right but break something else, security scans that miss obvious vulnerabilities while flagging non-issues, and the nagging question of whether you're saving time or just creating new problems. This guide breaks down where AI code review actually delivers, where it falls short, and how to configure your tools for results you can rely on.

Why Developers Still Question AI Code Review Accuracy

Can you trust AI code review? The short answer: Yes, AI is a powerful tool, but it requires human oversight. Trust in AI accuracy is actually declining even as usage rises. The main reason? AI suggestions are often "almost right, but not quite," which leads to frustrating debugging sessions for subtle errors that wouldn't exist if you'd written the code yourself.

Here's the disconnect. Vendors promise AI catches everything. Then you watch it flag a perfectly fine function while missing an obvious security hole two files over. That gap between marketing and reality erodes confidence fast.

When developers talk about "accuracy" in code review, they mean several things at once:

Correct suggestions: Does the AI recommend changes that actually improve the code?

Relevant findings: Are flagged issues real problems in your specific codebase?

Low false positives: Can you trust alerts without checking each one manually?

What AI Code Review Gets Right

AI code review delivers consistent results in specific, well-defined areas. Pattern matching and repetitive analysis play to AI's strengths, and frankly, they're the tasks that exhaust human reviewers anyway.

Security Vulnerabilities and Common Exploits

AI excels at detecting known vulnerability patterns. SQL injection, cross-site scripting (XSS), and hardcoded secrets have recognizable signatures. Static application security testing (SAST), automated analysis that scans source code for security flaws, benefits enormously from AI's ability to process entire codebases in minutes rather than days.
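
Here is what a "recognizable signature" can look like in practice. The sketch below is a minimal example using Python's standard-library `sqlite3` module. The table, data, and function names are hypothetical. The first function builds SQL by string interpolation, which is the pattern SAST rules are designed to flag. The second uses a parameterized query, which is the usual fix.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # Vulnerable: user input is interpolated straight into the SQL text.
    # String-built queries like this are a classic signature SAST rules flag.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # Fixed: the "?" placeholder makes the driver treat input as data, not SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


# Hypothetical in-memory table for demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "' OR '1'='1"
print(find_user_unsafe(conn, payload))  # the injected OR clause returns every row
print(find_user_safe(conn, payload))    # no user is literally named the payload
```

The fix does not sanitize the input. It keeps the input out of the SQL grammar entirely.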

Code Style and Formatting Consistency

Enforcing linting rules, naming conventions, and formatting standards across large codebases is tedious for humans. AI handles this without fatigue, applying the same rules to the thousandth file as it did to the first. No Monday morning slowness, no Friday afternoon shortcuts.

Detecting Code Smells and Anti-Patterns

Code smells are indicators of deeper problems: long methods, duplicate code, overly complex functions. AI's pattern recognition identifies maintainability issues reliably, flagging technical debt before it compounds into something expensive.
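
One of these smells, the overly long function, can be expressed as a simple rule using Python's standard `ast` module. This sketch is a deterministic heuristic rather than an AI model. The 30-line threshold is an arbitrary assumption that you would tune for your own codebase. The example only illustrates the kind of structural check involved.

```python
import ast

MAX_FUNCTION_LINES = 30  # assumed threshold; tune per codebase


def find_long_functions(source: str, max_lines: int = MAX_FUNCTION_LINES) -> list:
    """Return (function_name, line_count) for each function longer than max_lines."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # end_lineno is available on AST nodes in Python 3.8+.
            length = node.end_lineno - node.lineno + 1
            if length > max_lines:
                findings.append((node.name, length))
    return findings


sample = "def short():\n    return 1\n\ndef long_one():\n" + "    x = 1\n" * 40 + "    return x\n"
print(find_long_functions(sample))  # [('long_one', 42)]
```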

Identifying Dependency Risks

Outdated packages, known CVEs in dependencies, and license compliance issues all follow patterns AI tracks well. When your project pulls in dozens of third-party libraries, automated scanning catches risks that manual review would miss entirely.
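
The sketch below shows the shape of such a check: it compares pinned versions against advisory data and licenses against a policy. Everything in it is hypothetical, including the package names, the advisory table, and the allowed-license set. A real scanner would query a vulnerability database and use a full version parser rather than the naive one here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dependency:
    name: str
    version: str
    license: str


# Hypothetical advisory data: package name -> first version containing the fix.
# A real scanner would pull this from a vulnerability database instead.
ADVISORIES = {"example-http": "2.4.0", "example-yaml": "6.0.1"}

# Hypothetical license policy for the organization.
ALLOWED_LICENSES = {"MIT", "Apache-2.0", "BSD-3-Clause"}


def parse_version(version: str) -> tuple:
    # Naive: handles plain "X.Y.Z" only; real tools need a full version parser.
    return tuple(int(part) for part in version.split("."))


def audit(deps: list) -> list:
    """Return (package, problem) pairs for vulnerable or disallowed dependencies."""
    findings = []
    for dep in deps:
        fixed_in = ADVISORIES.get(dep.name)
        if fixed_in and parse_version(dep.version) < parse_version(fixed_in):
            findings.append((dep.name, f"known vulnerability; upgrade to >= {fixed_in}"))
        if dep.license not in ALLOWED_LICENSES:
            findings.append((dep.name, f"license not allowed: {dep.license}"))
    return findings


deps = [
    Dependency("example-http", "2.3.9", "MIT"),     # below the fixed version
    Dependency("example-yaml", "6.0.1", "MIT"),     # already patched
    Dependency("example-gpl-lib", "1.0.0", "GPL-3.0"),
]
for package, problem in audit(deps):
    print(package, problem)
```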

Where AI Code Review Still Falls Short

Being honest about limitations helps you use AI tools more effectively. Some review tasks genuinely require human judgment, and pretending otherwise wastes everyone's time.

Business Logic and Domain-Specific Context

AI lacks understanding of your product's unique requirements. It can't validate whether code actually solves the intended business problem or fits your user workflows. A function might be technically correct yet completely wrong for your domain. Only someone who understands the business can catch that.

Architectural Decision Validation

Design patterns, system boundaries, and long-term scalability implications require experience and context AI doesn't possess. Will this approach scale? Does it fit your existing architecture? Human reviewers answer questions like this; AI doesn't even know to ask them.

False Positives That Cause Alert Fatigue

Alert fatigue, the desensitization that sets in after too many irrelevant warnings, erodes trust faster than missed issues do. When developers start ignoring AI suggestions because most are noise, the tool becomes counterproductive, and you can end up worse off than with no tool at all.

Edge Cases and Complex Control Flow

Deeply nested logic, multi-step state changes, and rare execution paths challenge AI's analysis. Ironically, edge cases often hide the most critical bugs, yet they're precisely where AI struggles most.

AI Code Review Marketing Claims vs Reality

Setting realistic expectations helps you get actual value from AI tools rather than disappointment.

| Marketing Claim | Reality |
| --- | --- |
| "Catches all security issues" | Catches known patterns; misses novel or context-dependent vulnerabilities |
| "Replaces human reviewers" | Augments reviewers; requires human oversight for complex decisions |
| "Zero configuration needed" | Accuracy improves significantly with organization-specific tuning |
| "Instant, accurate feedback" | Speed is real; accuracy depends on code context and tool configuration |

Is AI-Reviewed Code Actually Secure?

Security is the highest-stakes accuracy question. False confidence here carries real risk, so let's be specific about what AI can and can't do.

What Security Issues AI Catches Reliably

AI handles injection flaws, authentication weaknesses, exposed secrets, and insecure configurations well. Known vulnerability patterns with clear signatures get flagged consistently.
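
Exposed secrets are a good example of a "clear signature." The following is a minimal, regex-based sketch of a secret scanner. The two rules are a deliberately small, hypothetical set; production secret scanners ship far more patterns and add checks such as entropy. The AWS key in the sample is the placeholder AWS uses in its own documentation, not a real credential.

```python
import re

# Deliberately small, hypothetical rule set; production secret scanners ship many more.
SECRET_PATTERNS = {
    # Long-term AWS access key IDs begin with "AKIA" plus 16 uppercase letters or digits.
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A quoted literal of 8+ characters assigned to a secret-sounding name.
    "hardcoded-credential": re.compile(
        r"(?i)\b(api_key|secret|password|token)\s*=\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_for_secrets(text: str) -> list:
    """Return (line_number, rule_name) for each line matching a secret pattern."""
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((line_no, rule_name))
    return hits


sample = (
    'region = "us-east-1"\n'
    'aws_key = "AKIAIOSFODNN7EXAMPLE"\n'  # AWS's documented example key
    'password = "hunter2hunter2"\n'
)
print(scan_for_secrets(sample))  # [(2, 'aws-access-key-id'), (3, 'hardcoded-credential')]
```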

Blind Spots in AI Security Analysis

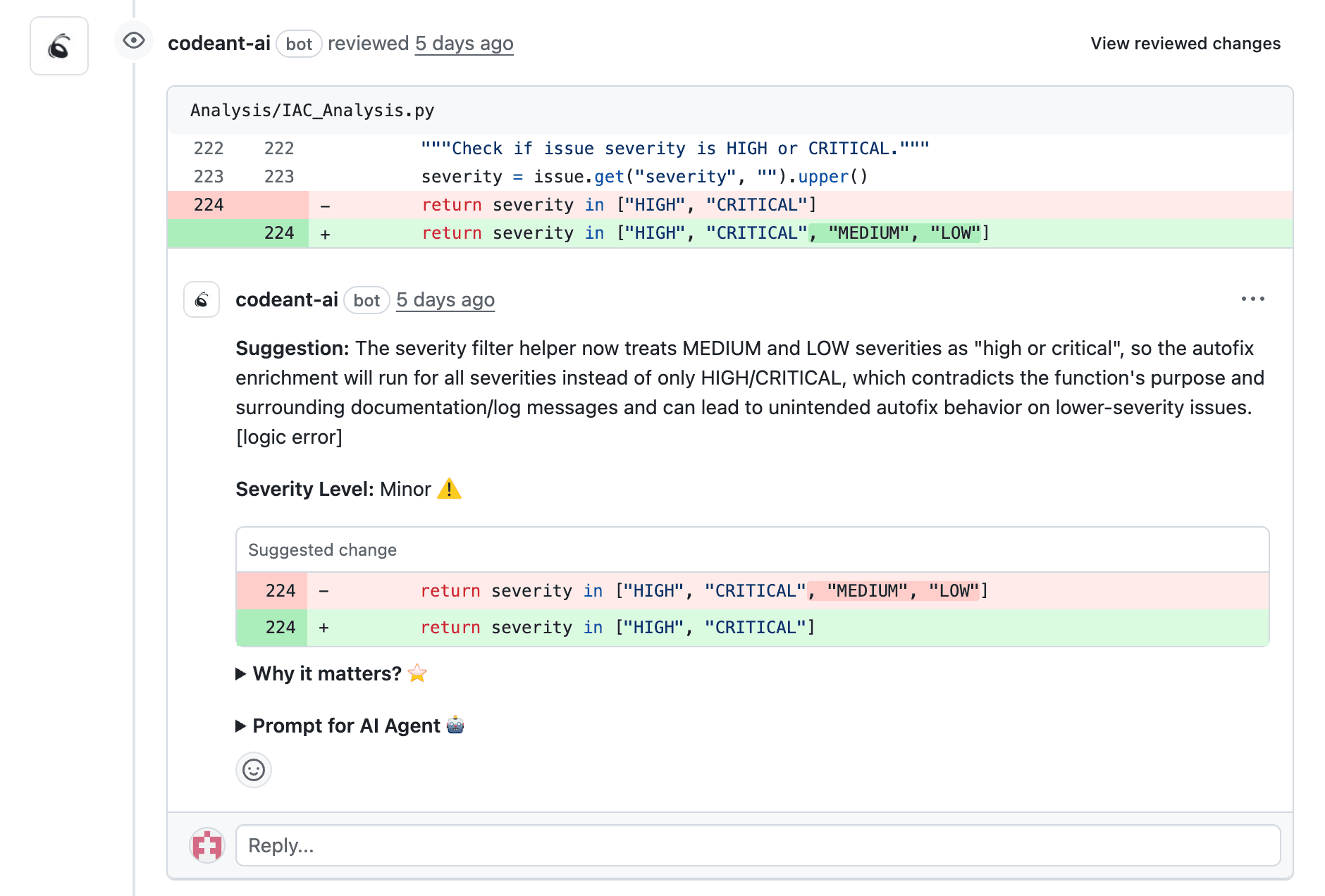

Business logic flaws, authorization bypass scenarios, and race conditions require understanding intent. AI lacks that understanding. Context-dependent security issues often slip through because AI doesn't know what your application is supposed to do. CodeAnt AI is designed to go beyond these limitations: because our platform is 100% context-aware, it doesn't just scan code, it understands it. It reviews pull requests in real time, suggests actionable fixes, and reduces manual review effort by up to 80%.
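
To see why authorization bypass is hard to catch by pattern alone, consider this hypothetical Python sketch. Neither function contains injection, secrets, or unsafe calls, and both check that a user is logged in. Even so, the first lets any logged-in user read any invoice. Catching the bug depends on knowing the business rule that users may only see their own invoices. The `Invoice` type and the in-memory store are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Invoice:
    id: int
    owner_id: int
    amount: float


# Hypothetical in-memory store standing in for a database.
INVOICES = {
    1: Invoice(id=1, owner_id=100, amount=250.0),
    2: Invoice(id=2, owner_id=200, amount=99.0),
}


def get_invoice_unchecked(current_user_id: Optional[int], invoice_id: int) -> Optional[Invoice]:
    # Authentication is checked, so the code looks careful...
    if current_user_id is None:
        raise PermissionError("login required")
    # ...but authorization is not: any logged-in user can read any invoice.
    return INVOICES.get(invoice_id)


def get_invoice_checked(current_user_id: Optional[int], invoice_id: int) -> Optional[Invoice]:
    if current_user_id is None:
        raise PermissionError("login required")
    invoice = INVOICES.get(invoice_id)
    # Enforces the business rule: users may only read their own invoices.
    if invoice is None or invoice.owner_id != current_user_id:
        return None
    return invoice


print(get_invoice_unchecked(100, 2))  # leaks user 200's invoice to user 100
print(get_invoice_checked(100, 2))    # None
```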

The Risk of False Confidence in AI Findings

A clean AI report doesn't mean your code is secure. AI provides one layer in defense-in-depth, not a complete security validation. Treating it otherwise creates dangerous blind spots that attackers can exploit.

How to Validate AI Code Review Suggestions

Practical verification steps help you capture AI's value while avoiding its pitfalls.

1. Cross-Reference Against Static Analysis Results

Combining AI suggestions with traditional static analysis tools provides validation through multiple sources. Agreement across tools increases confidence; disagreement flags items for closer human review.
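
In its simplest form, this cross-check is set arithmetic. The sketch below assumes that both tools' output has already been normalized into hypothetical `(file, line, category)` triples. In practice, tools report in different formats and may disagree on exact line numbers, so real matching is usually fuzzier than exact equality.

```python
from typing import NamedTuple


class Finding(NamedTuple):
    file: str
    line: int
    category: str  # hypothetical category labels, e.g. "sql-injection"


def cross_reference(ai_findings: list, sast_findings: list) -> dict:
    """Split findings into those both tools agree on and those only one tool reported."""
    ai, sast = set(ai_findings), set(sast_findings)
    return {
        "confirmed": sorted(ai & sast),     # agreement: higher confidence
        "needs_review": sorted(ai ^ sast),  # disagreement: route to a human
    }


ai_results = [Finding("app/db.py", 42, "sql-injection"), Finding("app/views.py", 10, "xss")]
sast_results = [Finding("app/db.py", 42, "sql-injection"), Finding("app/config.py", 3, "hardcoded-secret")]
result = cross_reference(ai_results, sast_results)
print(result["confirmed"])
print(result["needs_review"])
```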

2. Apply the Two-Minute Rule for Quick Validation

If you can't verify an AI suggestion within two minutes, flag it for deeper human review. Don't blindly accept or reject. Escalate appropriately and move on.

3. Track Accuracy Metrics Over Time

Log accepted versus rejected AI suggestions. Over weeks, you'll build data on tool reliability for your specific codebase. That data guides configuration adjustments and helps you calibrate trust.
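
The metric itself is easy to compute once you have a log. The sketch below assumes a hypothetical log of `(rule_id, accepted)` pairs, for example exported from your review tool or recorded by hand. It calculates a per-rule acceptance rate, which is more actionable than a single overall number.

```python
from collections import defaultdict


def acceptance_rates(log) -> dict:
    """log: iterable of (rule_id, accepted) pairs. Returns {rule_id: acceptance rate}."""
    totals = defaultdict(int)
    accepted = defaultdict(int)
    for rule_id, was_accepted in log:
        totals[rule_id] += 1
        if was_accepted:
            accepted[rule_id] += 1
    return {rule_id: accepted[rule_id] / totals[rule_id] for rule_id in totals}


# Hypothetical review log collected over a few weeks.
review_log = [
    ("sql-injection", True),
    ("sql-injection", True),
    ("naming-style", False),
    ("naming-style", True),
    ("naming-style", False),
    ("naming-style", False),
]
print(acceptance_rates(review_log))  # {'sql-injection': 1.0, 'naming-style': 0.25}
```

A consistently low rate for one rule is a signal to tune or disable that rule, not to distrust the whole tool.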

4. Establish Team Review Protocols for AI Findings

Create team standards for when AI suggestions require additional scrutiny. Security findings and architectural changes typically warrant extra human attention regardless of what AI says.
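
Writing a protocol like this as an explicit rule helps keep it consistent across reviewers. The sketch below is hypothetical. Its category names and the 0.9 threshold are placeholder policy choices. It also assumes your tool exposes a 0-to-1 confidence score, which many tools do not.

```python
# Hypothetical team policy: categories that always get a human reviewer.
ALWAYS_ESCALATE = {"security", "architecture"}


def needs_extra_scrutiny(category: str, ai_confidence: float, threshold: float = 0.9) -> bool:
    """Return True if a finding should get additional human review."""
    if category in ALWAYS_ESCALATE:
        # Escalate regardless of how confident the AI claims to be.
        return True
    # Assumes a 0-1 confidence score; lower-confidence findings get a second look.
    return ai_confidence < threshold


print(needs_extra_scrutiny("security", 0.99))  # True
print(needs_extra_scrutiny("style", 0.95))     # False
```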

How to Configure AI Tools for Better Accuracy

Proper setup dramatically improves AI review reliability. Configuration is an investment that pays ongoing dividends, not a one-time chore.

Training AI on Your Codebase

AI tools learn from your repository's patterns, commit history, and existing code standards. The more context they have, the more relevant their suggestions become. First-week accuracy rarely matches third-month accuracy.

Setting Organization-Specific Rules and Standards

Custom rule configuration enforces your team's coding standards, security policies, and compliance requirements. Platforms like CodeAnt AI allow organization-specific rule enforcement that reflects how your team actually works, not generic best practices that may not apply.
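
Here is one kind of organization-specific rule, sketched with Python's standard `ast` module: a team-level ban on certain calls. The banned list is a hypothetical policy. The sketch is a plain static check, not how any particular platform implements custom rules. It only recognizes direct names and `module.function` calls, so aliased imports such as `from pickle import loads` would slip past it.

```python
import ast

# Hypothetical organization policy: calls this team has banned outright.
BANNED_CALLS = {"eval", "exec", "pickle.loads"}


def call_name(node: ast.Call):
    """Best-effort dotted name for a call such as eval(...) or pickle.loads(...)."""
    func = node.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute) and isinstance(func.value, ast.Name):
        return f"{func.value.id}.{func.attr}"
    return None


def check_banned_calls(source: str) -> list:
    """Return (call_name, line_number) for every banned call in the source."""
    violations = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call):
            name = call_name(node)
            if name in BANNED_CALLS:
                violations.append((name, node.lineno))
    return violations


# The source is only parsed, never executed, so undefined names are fine here.
sample = "import pickle\ndata = pickle.loads(blob)\nresult = eval(expr)\nprint(result)\n"
print(check_banned_calls(sample))  # [('pickle.loads', 2), ('eval', 3)]
```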

Tuning Sensitivity to Reduce False Positives

Threshold adjustments and rule severity settings balance thoroughness against noise. Start stricter, then relax rules that generate too many false positives for your context. Finding the right balance takes iteration.
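
The "start stricter, then relax" approach can be expressed as a simple decision rule. The sketch below assumes hypothetical per-rule counts of true and false positives taken from human triage. Both the 50% false-positive cutoff and the 20-sample minimum are placeholder values. A rule stays strict until enough data exists to judge it fairly.

```python
def tune_rules(stats: dict, max_fp_rate: float = 0.5, min_samples: int = 20) -> dict:
    """stats maps rule_id -> (true_positives, false_positives) from human triage.

    Returns rule_id -> "keep" or "relax". Rules start strict ("keep") and are
    only relaxed once there is enough data to judge them.
    """
    decisions = {}
    for rule_id, (true_pos, false_pos) in stats.items():
        total = true_pos + false_pos
        if total < min_samples:
            decisions[rule_id] = "keep"  # too little data; stay strict for now
        elif false_pos / total > max_fp_rate:
            decisions[rule_id] = "relax"  # e.g. lower its severity or narrow its scope
        else:
            decisions[rule_id] = "keep"
    return decisions


# Hypothetical triage counts after a few weeks of use.
rule_stats = {
    "hardcoded-secret": (45, 5),
    "magic-number": (6, 34),
    "new-rule": (2, 3),
}
print(tune_rules(rule_stats))  # {'hardcoded-secret': 'keep', 'magic-number': 'relax', 'new-rule': 'keep'}
```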

How Human and AI Code Review Work Together

The optimal model combines AI's speed and consistency with human judgment and context. Neither alone matches what both achieve together.

AI as First-Pass Filter for Pull Requests

AI catches obvious issues before human reviewers see the code. This reduces reviewer fatigue on routine catches, preserving human attention for complex decisions that actually require thought.

Human Review for Context-Dependent Decisions

Humans focus on business logic correctness, architectural fit, code readability, and team conventions. AI handles the mechanical checks; humans handle the judgment calls.

Feedback Loops That Improve AI Over Time

Accepting and rejecting AI suggestions creates a learning cycle. Adding custom rules based on repeated false positives refines the tool's relevance to your organization. The tool gets smarter as you use it.

What Accuracy Skeptics Get Wrong About AI Code Review

Some developers dismiss AI code review entirely based on misconceptions. Addressing common objections helps teams capture real value:

"AI can't understand my code": Modern AI analyzes syntax, semantics, and patterns effectively. It struggles with intent, not comprehension

"False positives mean the tool is useless": All review methods produce false positives. The question is whether true positives outweigh the noise

"I still have to review everything anyway": AI shifts review focus from routine catches to high-value decisions

"AI review is just glorified linting": AI goes beyond style to detect security flaws, complexity issues, and potential bugs

Where AI Code Review Accuracy Is Heading

AI accuracy is improving along several dimensions. The trajectory matters even if current tools have limitations.

Organization-Specific Learning at Scale

AI platforms are getting better at learning each organization's unique patterns, standards, and preferences. Personalization improves accuracy over time without requiring constant manual configuration.

Tighter Integration with Developer Workflows

Deeper IDE and CI/CD integration enables AI to provide suggestions at the right moment with better context. Timing and placement affect how useful suggestions feel to developers, and integration is improving rapidly.

Building a Code Review Process You Can Trust

Establishing reliable AI-assisted review workflows requires intentional design, not just tool adoption.

Start with clear accuracy expectations: AI augments human review; it doesn't replace judgment

Invest in configuration: Organization-specific rules and training dramatically improve relevance

Measure and iterate: Track AI accuracy for your codebase and adjust settings based on results

Combine AI with static analysis: Unified platforms like CodeAnt AI bring AI review, SAST, and quality metrics into a single view

Ready to see AI code review that actually fits your workflow? Book your 1:1 with our experts today.