
Dec 18, 2025

How to Calculate AI Code Review ROI Before You Commit Budget

Sonali Sood

Founding GTM, CodeAnt AI

Budget conversations around AI tools often stall at the same question: "How do we know this will actually pay off?" For engineering leaders evaluating AI code review, the pressure to justify every dollar makes this question unavoidable.

The answer lies in running the numbers before you commit, not after. This guide walks through the metrics, costs, and calculations that turn vague promises into concrete ROI projections you can defend to leadership.

Why Calculating ROI Before You Buy Protects Your Budget

AI code review tools can speed up your developers, cut down on manual checks, and catch bugs earlier. But here's the thing: success depends on setting clear goals upfront, measuring actual productivity gains, and accounting for costs you might not see coming—like integration time and team training.

Without running the numbers first, you risk overspending on features nobody uses. Or worse, you can't prove the tool worked when your VP asks for results next quarter.

Here's what tends to go wrong when teams skip ROI analysis:

Bundled features you'll never touch: Many tools pack in capabilities that sound great but sit unused

Hidden costs beyond licensing: Integration, onboarding, and ongoing maintenance add up fast

No baseline to prove improvement: If you don't measure your current state, you can't show progress

What Is an AI Code Review ROI Calculator

An ROI calculator is a framework (or sometimes an actual tool) that estimates your potential savings versus costs before you commit a budget. Engineering leaders use it to compare what they're spending on manual reviews today against what they'd spend with AI assistance.

Think of it as a sanity check. You plug in your team's numbers, and the calculator tells you whether the investment makes financial sense—or whether you're about to waste money.

Most ROI calculators look at four inputs:

Current review time per pull request: Your baseline before any automation

Developer cost per hour: Fully loaded salary divided by working hours

Projected time savings: How much faster reviews become with AI

Tool subscription cost: Monthly or annual licensing fees

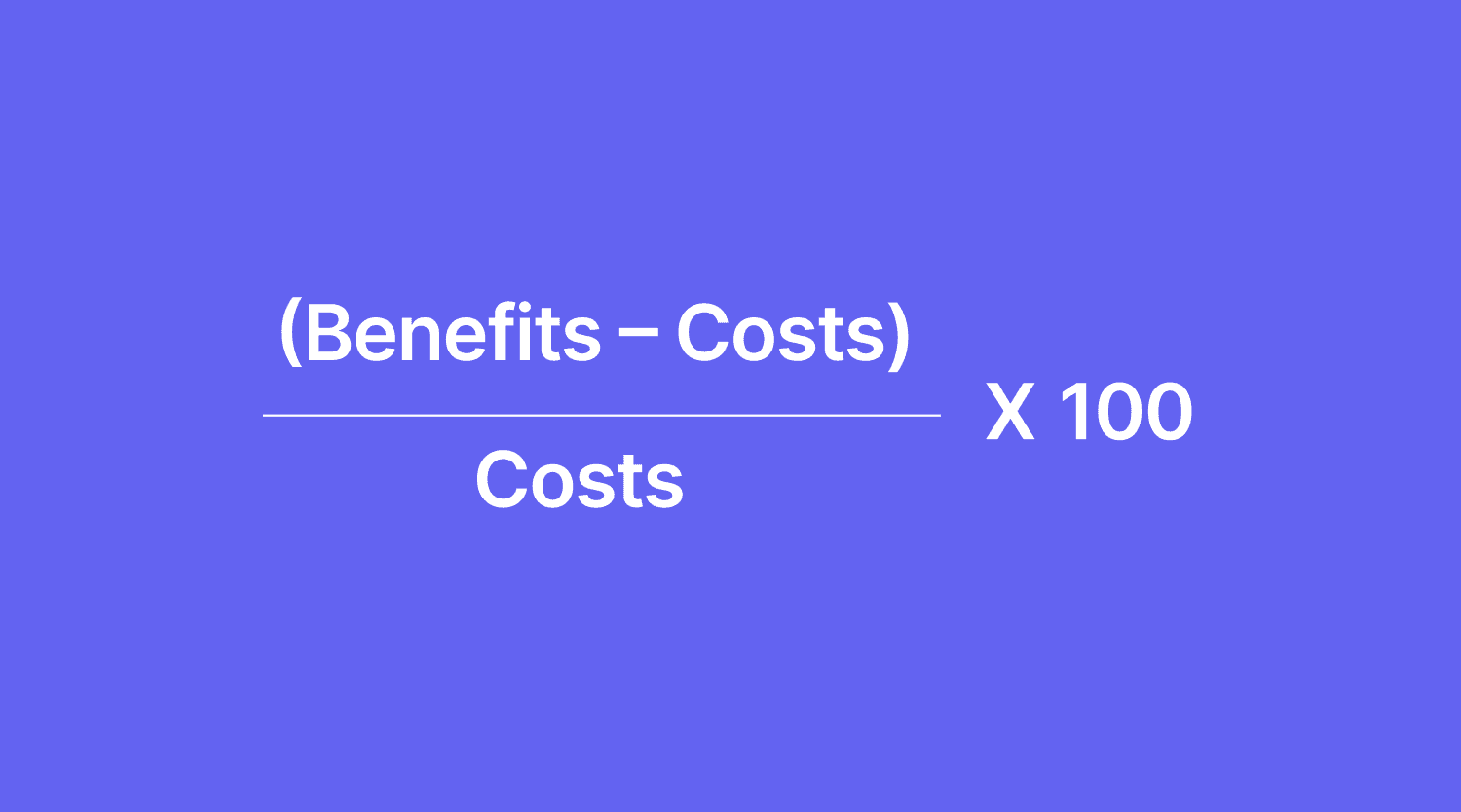

The formula is simple: (Benefits – Costs) / Costs × 100. The hard part is getting accurate numbers for both sides.
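The formula can be sketched in Python using the four inputs listed above. This is a minimal monthly version built on stated assumptions. The class and field names are hypothetical. It also adds one input that the list leaves implicit, monthly PR volume, because per-PR review time only becomes a cost once you know how many PRs you review. Benefits here count only time savings, and costs count only the subscription. Hidden costs and quality benefits would need to be added on each side.

```python
from dataclasses import dataclass


@dataclass
class RoiInputs:
    # Hypothetical field names for the four inputs, plus PR volume (an assumption).
    review_hours_per_pr: float    # baseline review time per pull request
    hourly_cost: float            # fully loaded developer cost per hour
    time_savings_fraction: float  # projected reduction in review time, e.g. 0.25 for 25%
    monthly_tool_cost: float      # subscription cost per month
    prs_per_month: int            # assumed monthly PR volume (not in the original list)


def monthly_roi_percent(inputs: RoiInputs) -> float:
    """ROI = (Benefits - Costs) / Costs x 100, computed on a monthly basis."""
    if inputs.monthly_tool_cost <= 0:
        raise ValueError("monthly_tool_cost must be positive")
    # Benefit: the share of today's review labor cost the tool is projected to save.
    baseline_review_cost = inputs.review_hours_per_pr * inputs.prs_per_month * inputs.hourly_cost
    benefits = baseline_review_cost * inputs.time_savings_fraction
    costs = inputs.monthly_tool_cost
    return (benefits - costs) / costs * 100


# Illustrative numbers only: 400 PRs/month, 1 hour each, $100/hour, 25% savings, $2,000/month tool.
example = RoiInputs(
    review_hours_per_pr=1.0,
    hourly_cost=100.0,
    time_savings_fraction=0.25,
    monthly_tool_cost=2000.0,
    prs_per_month=400,
)
print(f"{monthly_roi_percent(example):.0f}%")  # prints "400%"
```

Conservative savings fractions are worth trying first. A positive result that only appears at optimistic inputs is a warning sign.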

Key Metrics to Measure AI Code Review ROI

Vague claims like "we're faster now" won't satisfy finance or leadership. You want concrete numbers that tie directly to budget impact.

Review Cycle Time Reduction

Review cycle time is how long a pull request sits between creation and merge. Shorter cycles mean faster delivery and less context-switching for developers. If your average PR waits two days for review, cutting that to one day compounds across every merge your team makes.
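Measuring this baseline can be as simple as pairing each merged PR's creation and merge timestamps, which Git hosting platforms generally expose. The sketch below assumes you have already exported those timestamps into (created, merged) pairs. The function name is hypothetical. It uses the median rather than the mean so that a handful of long-stalled PRs don't skew the baseline.

```python
from datetime import datetime, timedelta
from statistics import median


def median_cycle_time_hours(prs: list[tuple[datetime, datetime]]) -> float:
    """Median hours between PR creation and merge; each tuple is (created_at, merged_at)."""
    if not prs:
        raise ValueError("need at least one merged PR")
    # Dividing one timedelta by another yields a float number of hours.
    durations = [(merged - created) / timedelta(hours=1) for created, merged in prs]
    return median(durations)


# Illustrative sample: PRs that took 48, 24, and 12 hours to merge.
sample = [
    (datetime(2025, 1, 6, 9), datetime(2025, 1, 8, 9)),
    (datetime(2025, 1, 6, 9), datetime(2025, 1, 7, 9)),
    (datetime(2025, 1, 6, 9), datetime(2025, 1, 6, 21)),
]
print(median_cycle_time_hours(sample))  # prints 24.0
```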

Developer Hours Saved Per Sprint

Track time spent on code reviews before and after AI adoption. This metric connects directly to dollars: if developers spend 20% less time reviewing, the hours they get back can go into building features.

Bug Escape Rate and Defect Density

Bug escape rate measures how many defects reach production. Catching issues earlier is cheaper than fixing them after release, when you're dealing with incident response, customer complaints, and emergency patches.
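Both metrics reduce to simple ratios once you tag where each defect was found. The sketch below is minimal and assumes your issue tracker can separate production defects from those caught before release. The function names are hypothetical. Defect density uses the common defects-per-thousand-lines (KLOC) convention, because the article doesn't fix a denominator.

```python
def bug_escape_rate(production_defects: int, pre_release_defects: int) -> float:
    """Fraction of all found defects that were first found in production."""
    total = production_defects + pre_release_defects
    return production_defects / total if total else 0.0


def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


# Illustrative quarter: 6 defects escaped to production, 54 were caught in review or QA.
print(bug_escape_rate(6, 54))       # 0.1, i.e. 10% escaped
print(defect_density(30, 120_000))  # 0.25 defects per KLOC
```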

Security Vulnerability Detection Rate

AI tools flag security issues earlier in development. Finding a vulnerability during code review costs a fraction of what it costs to remediate after a breach—not to mention the compliance headaches.

Technical Debt Reduction Over Time

Technical debt is the cost of rework you create when you choose quick fixes over better solutions. AI code review enforces standards that prevent debt from piling up, which keeps future development faster and cheaper.

| Metric | What It Measures | Budget Impact |
| --- | --- | --- |
| Review cycle time | PR creation to merge duration | Faster releases, reduced delays |
| Developer hours saved | Time spent reviewing code | Direct labor cost reduction |
| Bug escape rate | Defects reaching production | Lower incident response costs |
| Vulnerability detection | Security issues caught pre-merge | Avoided breach and compliance costs |
| Technical debt | Code maintainability over time | Reduced refactoring spend |

The True Costs of AI Code Review Tools

Subscription fees are just the starting point. Cost-conscious teams often focus on licensing and miss the additional expenses that can add 10–20% to the total cost.

Licensing and Subscription Pricing Models

Common pricing structures include per-seat, per-repository, or usage-based models. Per-seat works well for smaller teams but gets expensive at scale. Usage-based offers flexibility but makes budgeting harder to predict.

Integration and Onboarding Investment

Connecting tools with your existing CI/CD pipelines, GitHub, GitLab, or Azure DevOps takes time. Some platforms offer native integrations that reduce setup friction. Others require custom configuration that eats into your projected savings.

Ongoing Maintenance and Team Training

Teams need time to learn new tools and adjust workflows. Factor in ongoing administration, configuration updates, and the productivity dip during the learning curve.
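Combining these cost items gives a first-year total cost of ownership. The sketch below simplifies on purpose. It prices all one-time labor (integration plus per-developer training) and all recurring admin time at a single fully loaded hourly rate. It leaves out the learning-curve productivity dip, which is hard to estimate before a trial. The function name and every input value are illustrative.

```python
def first_year_tco(
    annual_license: float,
    integration_hours: float,
    training_hours_per_dev: float,
    developers: int,
    admin_hours_per_month: float,
    hourly_cost: float,
) -> float:
    """License fees plus the labor cost of integration, training, and ongoing admin."""
    one_time_labor = (integration_hours + training_hours_per_dev * developers) * hourly_cost
    recurring_labor = admin_hours_per_month * 12 * hourly_cost
    return annual_license + one_time_labor + recurring_labor


# Illustrative inputs: $60,000/year license, 24 h integration, 1 h training for each of
# 50 developers, 2 h/month of admin, $100/hour fully loaded.
total = first_year_tco(60_000, 24, 1, 50, 2, 100)
hidden_share = (total - 60_000) / 60_000
print(total, f"{hidden_share:.0%}")  # prints 69800 16%
```

With these placeholder inputs, the non-license costs come to about 16% of licensing. Your own numbers may land well outside that range.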

The Hidden Cost of Managing Multiple Tools

Using separate tools for security, quality, and code review creates overhead. Each tool requires its own login, dashboard, and mental context switch. A unified platform approach reduces tool sprawl and the associated costs of managing multiple vendors.

How to Translate Time Savings Into Dollar Value

Converting saved developer hours into financial impact requires calculating the "fully loaded cost"—salary plus benefits and overhead. This number is typically 1.25x to 1.4x the base salary.

Here's how the calculation works:

Calculate fully loaded hourly rate: Include salary, benefits, and overhead

Estimate hours saved per developer per week: Based on review time reduction

Multiply across team size and time period: Project monthly or annual savings

Subtract tool costs: Net savings equals gross savings minus total tool costs, including integration, training, and maintenance, not just subscription fees

For a 50-person team saving 3 hours per developer per week at a fully loaded rate of $100/hour, that's $15,000 weekly, or $780,000 annually. Even after subtracting tool costs, the ROI becomes clear.
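The calculation steps and the worked example can be expressed as two small functions. This sketch relies on several assumed defaults:

* 2,080 working hours a year (40 hours × 52 weeks) to convert a salary into an hourly rate.
* A 1.3x load factor, taken from the middle of the 1.25x–1.4x range.
* 52 weeks per year, to match the arithmetic in the example.

Real calendars with holidays and leave have fewer working weeks, which lowers the result. The function names are hypothetical.

```python
WORK_HOURS_PER_YEAR = 2080  # assumption: 40 hours x 52 weeks


def fully_loaded_hourly_rate(base_salary: float, load_factor: float = 1.3) -> float:
    """Base salary scaled by benefits/overhead (typically 1.25x-1.4x), per working hour."""
    return base_salary * load_factor / WORK_HOURS_PER_YEAR


def annual_net_savings(
    team_size: int,
    hours_saved_per_dev_per_week: float,
    hourly_rate: float,
    annual_tool_cost: float,
    weeks_per_year: int = 52,
) -> float:
    """Gross labor savings across the team for a year, minus total tool costs."""
    gross = team_size * hours_saved_per_dev_per_week * hourly_rate * weeks_per_year
    return gross - annual_tool_cost


# The worked example: 50 developers, 3 hours/week each, $100/hour, before tool costs.
print(annual_net_savings(50, 3, 100, 0))  # prints 780000
```

For reference, under these assumptions a $160,000 base salary with a 1.3x load factor works out to $100/hour.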

Qualitative Benefits That Strengthen Your Business Case

Some ROI factors are harder to quantify but still matter when you're building a budget request. Leadership often cares about long-term team health as much as short-term savings.

Developer Satisfaction and Retention Impact

Removing tedious review tasks improves the developer experience. Higher satisfaction reduces turnover, and replacing a senior developer can cost 50–200% of their annual salary in recruiting and ramp-up time.

Code Consistency and Knowledge Sharing

AI-enforced standards create more uniform codebases. Consistent code reduces onboarding time for new team members and makes it easier for anyone to work on any part of the codebase.

Compliance Readiness and Audit Cost Reduction

Automated enforcement simplifies audit preparation. Platforms with built-in compliance checks reduce the manual work of gathering evidence and demonstrating adherence to standards like SOC 2 or ISO 27001.

Why AI Code Review Investments Fail to Deliver ROI

Not every AI code review investment pays off. Understanding common failure modes helps you avoid them.

No Clear Success Metrics Defined Upfront

Without baseline measurements, teams can't prove improvement. If you don't know your current review cycle time, you can't demonstrate that it got better. Define metrics before implementation.

Poor Adoption and Change Management

Tools fail when developers resist or ignore them. Successful adoption requires training, leadership support, and addressing developer concerns about AI-generated feedback.

Misaligned Expectations with Leadership

Overpromising ROI creates credibility problems. If you tell leadership you'll see 50% time savings in month one, and reality is 20% savings by month three, you've undermined trust—even though 20% is still valuable.

How to Evaluate AI Code Review Tools Before Purchasing

A practical evaluation framework helps cost-conscious buyers compare options objectively. Don't rely on vendor demos alone.

Accuracy and False Positive Rates

Too many false positives waste developer time and erode trust. Ask vendors for false positive rates and test with your own codebase during trials.
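During a trial, you can measure this yourself by having reviewers label each AI finding as they triage it. The sketch below is minimal and assumes a hypothetical two-label scheme (`"valid"` and `"false_positive"`). Strictly speaking, the share of flagged findings that turn out wrong is the false discovery rate (1 − precision). That is the number a developer actually experiences when reading review comments.

```python
def false_positive_share(triage_labels: list[str]) -> float:
    """Fraction of AI findings that reviewers labeled 'false_positive' during a trial."""
    if not triage_labels:
        return 0.0
    return triage_labels.count("false_positive") / len(triage_labels)


# Illustrative trial: reviewers triaged 10 findings and rejected 3 of them.
labels = ["valid"] * 7 + ["false_positive"] * 3
print(false_positive_share(labels))  # prints 0.3
```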

Integration with Your Existing CI/CD Workflow

Seamless integration reduces setup costs and friction. Check for native support with your Git provider and pipeline tools. If integration requires custom scripting, factor that time into your cost calculations.

Security and Compliance Capabilities

Built-in Static Application Security Testing (SAST), secret detection, and compliance enforcement add significant value. Unified platforms can reduce or remove the need for separate security tools.

Scalability for Teams Over 100 Developers

Cost-conscious enterprise teams want tools that scale without per-seat costs becoming prohibitive. Evaluate pricing at your target team size, not just your current headcount.
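One way to do this is to model each pricing structure side by side at several headcounts. The sketch below uses placeholder prices and two assumed ratios: developers per repository and PRs per developer per month. None of these are real vendor prices, so replace them with actual quotes, including any volume discounts. The function name is hypothetical.

```python
# Placeholder prices and ratios for illustration only; replace with real quotes.
PRICE_PER_SEAT_MONTH = 30.0
PRICE_PER_REPO_MONTH = 150.0
PRICE_PER_PR = 4.0
DEVS_PER_REPO = 5        # assumption: one active repository per five developers
PRS_PER_DEV_MONTH = 8    # assumption: average PR volume per developer


def annual_cost(model: str, developers: int) -> float:
    """Estimated yearly licensing cost for one pricing model at a given team size."""
    if model == "per_seat":
        monthly = developers * PRICE_PER_SEAT_MONTH
    elif model == "per_repo":
        repos = max(1, developers // DEVS_PER_REPO)
        monthly = repos * PRICE_PER_REPO_MONTH
    elif model == "usage":
        monthly = developers * PRS_PER_DEV_MONTH * PRICE_PER_PR
    else:
        raise ValueError(f"unknown pricing model: {model}")
    return monthly * 12


# Compare models at today's headcount and at the size you expect to reach.
for team in (20, 100, 300):
    costs = {m: annual_cost(m, team) for m in ("per_seat", "per_repo", "usage")}
    print(team, costs)
```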

How to Build a Business Case for AI Code Review Investment

Building a compelling budget request requires more than enthusiasm. Here's a step-by-step process for presenting ROI to leadership.

1. Quantify Your Current Review Bottlenecks

Measure your current state: average review time, PR backlog size, and developer time allocation. This establishes the baseline for improvement and gives you concrete numbers to reference.

2. Project Time and Cost Savings with AI

Estimate future state improvements based on tool capabilities. Use conservative projections to maintain credibility. It's better to under-promise and over-deliver.

3. Include Risk Reduction and Security Benefits

Quantify avoided costs: security incidents, compliance failures, and production bugs. A single security breach can cost millions; preventing one justifies significant tool investment.
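One common way to put a number on avoided costs is annualized loss expectancy, borrowed from security risk analysis: expected incidents per year multiplied by the cost of one incident. The sketch below is minimal and assumes you can estimate what fraction of each risk the tool prevents. Every rate, cost, and reduction shown is an illustrative placeholder, not a benchmark. The function name is hypothetical.

```python
def expected_avoided_cost(risks: list[tuple[float, float, float]]) -> float:
    """Sum of (incidents per year x cost per incident x fraction the tool prevents)."""
    return sum(rate * cost * prevented for rate, cost, prevented in risks)


# Illustrative placeholders, not benchmarks:
risks = [
    (0.05, 2_000_000, 0.20),  # breach: 5% chance per year, $2M impact, 20% of risk removed
    (12, 5_000, 0.25),        # escaped production bugs: 12/year, $5k each, 25% prevented
]
print(f"${expected_avoided_cost(risks):,.0f} per year")  # prints "$35,000 per year"
```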

4. Present a Realistic Break-Even Timeline

Calculate when cumulative savings exceed cumulative costs. Most teams see break-even within 3–6 months. Realistic timelines build leadership confidence.
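Break-even is the first month in which cumulative savings cover cumulative costs. The sketch below is minimal and assumes three things: an upfront setup cost, a flat monthly subscription, and savings that ramp up as the team gets past the learning curve. The function name and numbers are illustrative.

```python
from typing import Optional


def break_even_month(
    monthly_savings: list[float], upfront_cost: float, monthly_tool_cost: float
) -> Optional[int]:
    """First month (1-based) where cumulative net savings reach zero, or None if never."""
    cumulative = -upfront_cost
    for month, savings in enumerate(monthly_savings, start=1):
        cumulative += savings - monthly_tool_cost
        if cumulative >= 0:
            return month
    return None


# Illustrative ramp: savings grow over the first months as adoption picks up.
ramp = [2_000, 5_000, 8_000, 10_000, 10_000, 10_000]
print(break_even_month(ramp, upfront_cost=10_000, monthly_tool_cost=3_000))  # prints 4
```

Modeling the ramp explicitly, instead of assuming full savings from month one, keeps the timeline you present to leadership realistic.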

Common AI Code Review ROI Pitfalls and How to Avoid Them

Here's a quick reference for avoiding the most common mistakes:

Starting without a baseline: Measure current state before implementing any tool

Ignoring adoption tracking: Monitor whether developers actually use the tool

Focusing only on time savings: Include quality and security improvements in ROI calculations

Choosing based on price alone: The cheapest tool may cost more if it lacks key capabilities

Delaying metric tracking: Start measuring ROI from day one, not months later

How to Maximize ROI After Adopting AI Code Review

ROI optimization continues after purchase. Teams that actively manage adoption see better returns than teams that set it and forget it.

Track ROI Metrics from Day One

Immediate measurement demonstrates value and catches issues early. Use dashboards to visualize progress and share results with stakeholders regularly.

Scale Adoption Beyond Initial Teams

Expanding usage across the organization multiplies ROI. Start with a pilot team, prove value, then roll out broadly.

Iterate Based on Developer Feedback

Gathering input from developers improves adoption and surfaces configuration improvements. Tools work best when tuned to your codebase and team preferences.

Start Measuring Your Code Health ROI Today

Calculating ROI before committing a budget protects cost-conscious teams from wasted spend and helps justify investments to leadership. The math isn't complicated, but it does require measuring your current state, projecting realistic improvements, and accounting for total cost of ownership.

CodeAnt AI provides a unified code health platform for code review, security, and quality, helping teams consolidate tools and maximize ROI. To learn more about the product, book a 1:1 with our experts today!