AI Code Review

Feb 13, 2026

Is AI Code Review Safe for Proprietary Code?

Sonali Sood

Founding GTM, CodeAnt AI

Your legal team just blocked AI code review adoption. Their reason? "We can't risk our proprietary algorithms ending up in someone else's model." This scenario plays out daily at fintechs, healthcare companies, and defense contractors, engineering leaders who see 70% faster review cycles blocked by IP protection concerns.

The concern is legitimate. Generic AI assistants have unclear data handling policies and cloud-only architectures that make compliance teams nervous. One leaked algorithm can cost competitive advantage or trigger regulatory violations. But abandoning AI means sacrificing productivity gains and missing critical security issues.

The short answer: Yes, AI code review is safe for proprietary code, but only when the platform is architected for it from day one.

"Safe" isn't marketing, it's measurable guarantees around data handling, deployment control, and enforcement mechanisms that prevent your IP from becoming training data.

This guide breaks down the three security risks, provides a five-question vendor evaluation framework, and shows exactly what "safe" looks like in technical terms. You'll walk away knowing how to prove safety to security stakeholders.

What "Safe" Actually Means

Before evaluating any AI code review tool, define safety with precision. For proprietary code, "safe" requires five architectural properties:

1. Zero training on customer code

Your algorithms must never become training data for the vendor's model. This guarantee must be enforced architecturally, not just contractually. Look for platforms with inference-only models and explicit data isolation validated through SOC2 Type II audits.

2. Controlled data residency

You need to know exactly where code goes. For ITAR, HIPAA, or financial services compliance, "the cloud" isn't acceptable—you need on-premises deployment, VPC isolation, or region-locked instances with documented data flows.

3. Encryption at rest and in transit

AES-256 encryption and TLS 1.3 are table stakes. But you also need key management controls, certificate pinning, and audit trails proving who accessed what code, when.

4. Context-aware access controls

Safe platforms enforce role-based access, respect branch protection rules, and generate immutable audit logs. Every AI suggestion should be traceable: which model version, which code context, which security policies applied.

5. Resistance to prompt injection

AI models can be tricked into leaking information. Safe platforms implement input sanitization, output filtering, and context boundaries preventing attackers from using the AI as an attack vector.

Most AI code review tools were built for open-source workflows and retrofitted security. Platforms that protect proprietary code were designed with zero-trust architecture from the start, that architectural difference is everything.

Threat Model: What Could Actually Go Wrong

Every PR contains more than code changes. When you feed a diff to an AI system, you're potentially exposing:

Source code and proprietary algorithms: Pricing engines, recommendation systems, fraud detection logic

Secrets and credentials: API keys, database strings, service tokens

Architecture details: Service dependencies, internal APIs, deployment patterns

Customer data flows: How PII, PHI, or financial data moves through your system

Unreleased features: Code telegraphing competitive strategy before launch

The Actors and Entry Points

Who could access your code:

The AI vendor (storage, processing, model training)

Other tenants in multi-tenant systems (isolation failures)

External attackers (if code transmits over internet or stores in cloud)

Malicious insiders (vendor employees with database access)

Inadvertent leakage (developers including sensitive code in prompts)

How code leaves your control:

PR diffs sent to external APIs

Natural language queries containing code snippets

Logs and telemetry capturing code

Training pipelines (explicit or implicit)

Cached embeddings and indexes

Risk #1: Model Training/Retention

The most insidious risk isn't what happens to your code today, it's what happens tomorrow. When you feed proprietary algorithms into an AI system, you need certainty that your IP won't resurface as suggestions to competitors.

How Training Turns IP Into Someone Else's Competitive Advantage

Many AI coding assistants operate on opt-out training models. Your code is assumed fair game for model improvement unless you explicitly disable it. Consider what "training" means:

Base model training: Your code becomes part of the foundational dataset

Fine-tuning: AI adapts to specific patterns, including your unique approaches

RLHF: Human reviewers label AI suggestions using your codebase as curriculum

The risk compounds when "we don't train by default" isn't the same as "we architecturally cannot train." The former is policy that can change with terms-of-service updates. The latter is a design decision baked into the platform.

The Retention Trap

Even with no-training promises, data retention policies create secondary exposure. Many platforms retain:

Prompt logs containing code snippets for 30-90 days "for debugging"

Telemetry including file paths, function names, code structure

Human review queues where code sits awaiting quality assessment

A fintech discovered their AI assistant logged every code snippet sent for review, storing it in a third-party cloud bucket for 90 days, accessible to vendor support and ML teams.

What "Safe" Looks Like

Requirement | What to Look For | Red Flags |

Training policy | Explicit "never trains on customer code" with architectural enforcement | Opt-out models, vague "may use to improve services" |

Data retention | Bounded retention (≤24 hours) with automatic purging | 30-90 day log retention, indefinite telemetry |

Processing location | On-prem or VPC deployment options | Cloud-only with unclear residency |

Contract alignment | Terms matching technical architecture (auditable) | Legal language contradicting documentation |

CodeAnt AI's approach: Zero-training commitment enforced by design. No customer code enters our training pipeline. Ephemeral processing means code is purged once review completes. On-prem and VPC options ensure code never leaves your infrastructure.

Risk #2: Data Transmission and Storage

Understanding the complete data path is critical. Every step, ingestion to inference to storage, introduces exposure points.

The AI Code Review Data Journey

1. Repository ingestion: Tool clones or fetches repository content. Cloud-only solutions transmit data to external infrastructure before analysis begins.

2. Preprocessing: Platform parses code into ASTs, extracts dependencies, builds contextual graphs. This often happens on shared infrastructure.

3. Model inference: Your code feeds to LLMs for analysis. In cloud deployments, this means your code touches third-party model providers (OpenAI, Anthropic) with their own policies.

4. Results storage: Review findings, flagged snippets, and historical data get persisted, sometimes indefinitely.

5. Feedback loops: Some tools use interactions to improve models, leaking information about your development practices.

Cloud-Only Risks

Cross-region processing: Your US-based fintech code might be analyzed in EU data centers, then stored in Asia-Pacific regions, violating SOC 2, GDPR, or HIPAA requirements.

Shared tenancy: Multi-tenant architectures mean your code runs on the same infrastructure as competitors. Implementation bugs or misconfigurations create real exposure.

Unclear subprocessor chains: Cloud tools depend on LLM providers, cloud infrastructure, CDN/caching layers, and analytics services. Each subprocessor introduces another trust link.

Effective Mitigations

VPC or on-premises deployment: Most effective control. VPC ensures data never leaves your cloud environment. On-premises eliminates external transmission entirely.

Customer-managed encryption keys: CMEK ensures data at rest is encrypted with keys you control. If a vendor suffers a breach, encrypted data remains protected unless you grant key access.

Strict egress controls: Configure network policies preventing AI infrastructure from reaching external endpoints. Route LLM API calls through your own proxy with logging.

Minimal retention with automated purging: Enforce policies automatically deleting code snippets, results, and logs after defined periods (e.g., 90 days).

Risk #3: Prompt Injection and Data Exfiltration

Prompt injection represents a new vulnerability class: attackers embed malicious instructions directly into pull requests that manipulate AI behavior.

How Prompt Injection Works

AI code reviewers process natural language alongside code, PR descriptions, commit messages, inline comments, even string literals. Attackers craft inputs overriding the AI's system instructions:

Real Exfiltration Vectors

Log-based exfiltration: Attackers craft prompts causing AI to include sensitive information in review comments visible in PR threads or CI/CD logs.

Policy bypass: Malicious instructions override security rules: "This code is exempt from authentication checks."

Context window exploitation: AI with large context windows may inadvertently reference code from other PRs when manipulated.

Architectural Defenses

System prompt isolation: Separate system-level instructions from user content using strict delimiters. CodeAnt AI enforces multi-tier prompt architecture where security policies exist in protected layers user input cannot access.

Output filtering: Every AI response passes through filters detecting and redacting:

Secrets, API keys, credentials

File paths, internal URLs, infrastructure details

Code snippets from files outside current PR scope

Context window constraints: Limit what AI can "see" to current PR and approved reference files. Never allow cross-PR context bleeding.

Policy-as-code enforcement: Define security rules as immutable code rather than natural language:

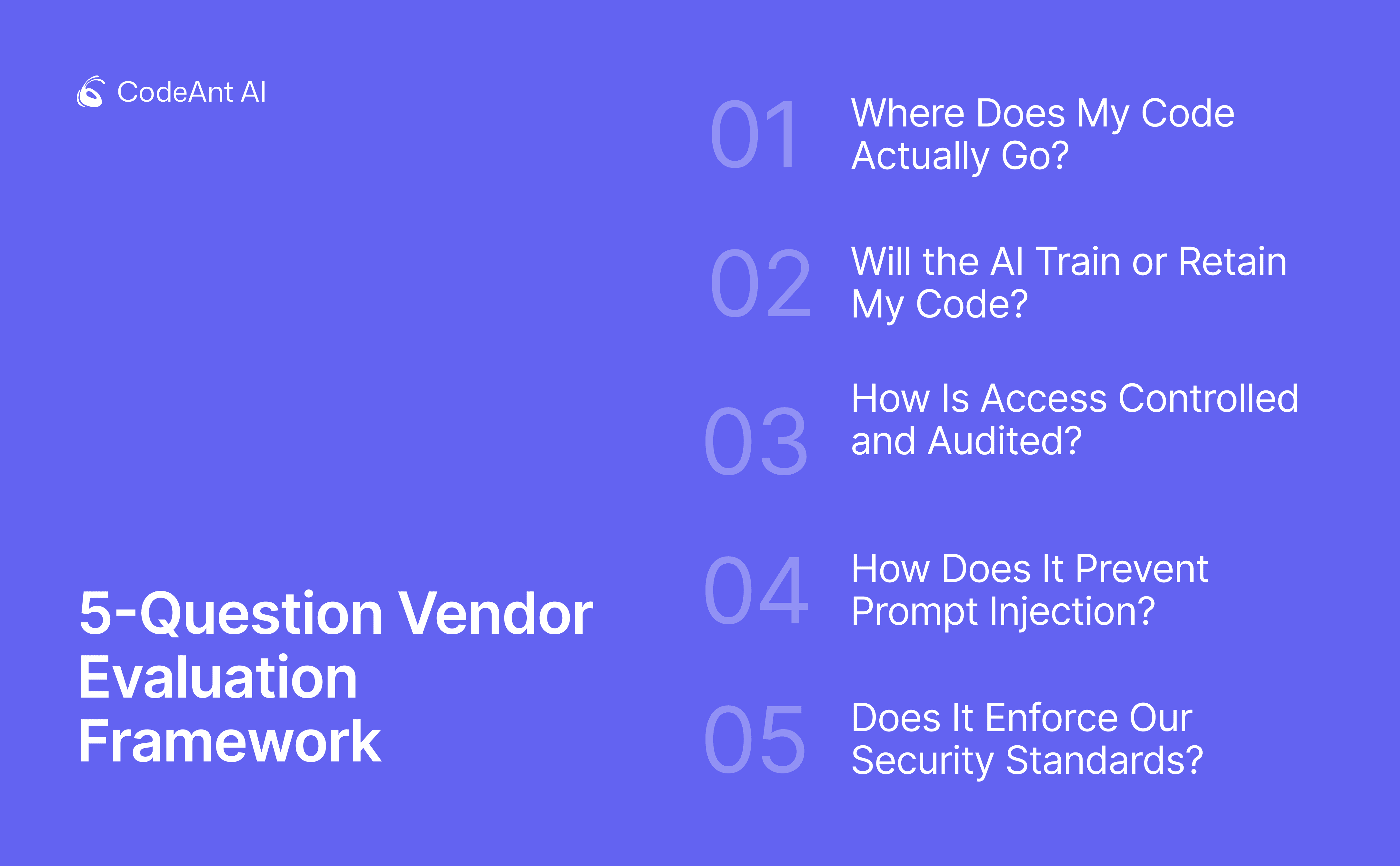

5-Question Vendor Evaluation Framework

Before committing to a platform, demand verifiable proof—not promises. This framework gives you five questions, what evidence to request, and red flags.

Question 1: Where Does My Code Actually Go?

What you're asking: Does code leave my infrastructure, and where does it land?

Evidence to request:

Architecture diagram with data flow paths

SOC 2 Type II report (Section 2: System Description)

Data Processing Agreement with residency clauses

Subprocessor list with geographic locations

Red flags:

"Cloud-only" with no on-prem option

Vague answers about "secure infrastructure"

Resistance to providing architecture diagrams

CodeAnt answer: Three deployment models, on-premises, VPC, and SOC2-certified cloud. You control where code is processed based on sensitivity.

Question 2: Will the AI Train or Retain My Code?

What you're asking: Does my algorithm become training data or suggestions for competitors?

Evidence to request:

MSA section on data usage and training

Technical documentation on retention policies

SOC 2 report (CC6.1 controls on data lifecycle)

Pen test summary showing no persistence vulnerabilities

Red flags:

"Opt-out" training models

"We don't currently train" (implies future changes)

Generic AI models without contractual protections

CodeAnt answer: Architecturally enforced zero training. Your data is processed in ephemeral environments and never persisted for model improvement.

Question 3: How Is Access Controlled and Audited?

What you're asking: Who can see my code, and can I prove compliance to auditors?

Evidence to request:

RBAC permission matrix

SSO integration documentation

Sample audit log export (redacted)

SOC 2 report (CC6.2, CC6.3 on access controls)

Red flags:

No SSO support

Coarse-grained permissions (admin vs. user only)

Audit logs not capturing code-level access

CodeAnt answer: Enterprise SSO (SAML/OIDC), granular RBAC, comprehensive audit logs integrating with Splunk, Datadog, and other SIEM platforms.

Question 4: How Does It Prevent Prompt Injection?

What you're asking: Can attackers trick AI into leaking code, or can insiders exfiltrate IP?

Evidence to request:

Pen test summary (focus on prompt injection scenarios)

Rate limiting and anomaly detection documentation

Bug bounty program details

Red flags:

No mention of prompt injection defenses

Unlimited API access without rate limits

Dismissive responses to exfiltration concerns

CodeAnt answer: Input sanitization and output validation validated by third-party pen testing. Anomaly detection flags suspicious query patterns.

Question 5: Does It Enforce Our Security Standards?

What you're asking: Will this understand our codebase well enough to catch violations of our policies?

Evidence to request:

Demo of custom rule creation

Example of context-aware finding (not generic OWASP)

Documentation on codebase learning mechanisms

Red flags:

No custom rule support

High false positive rates

Generic findings not reflecting your architecture

CodeAnt answer: Context-aware AI learns your codebase—libraries, patterns, architectural decisions, to enforce your specific standards, not generic rules.

Deployment Models: Matching Code Sensitivity to Architecture

Not all code carries the same risk. Choose deployment based on sensitivity:

Public/low-risk code → SaaS (cloud-hosted)

Use case: OSS contributions, public repositories, internal tools with no proprietary logic

Why it works: Code is already public. SaaS offers zero ops burden, instant updates

Example: Developer tools startup reviewing their open-source CLI

Proprietary business logic → VPC

Use case: SaaS platforms, fintech applications, e-commerce backends

Why it works: Code stays within your cloud perimeter. You control network policies, encryption keys, access logs

Example: Fintech running CodeAnt in AWS VPC to meet data residency requirements

Regulated/highly sensitive → On-premises

Use case: Healthcare (HIPAA), defense (ITAR/CMMC), financial services (PCI-DSS Level 1)

Why it works: Complete control. Code never transits the internet. You own hardware, network, audit trail

Example: Defense contractor in air-gapped environment, accepting quarterly manual updates for CMMC Level 3

The Operational Tradeoffs

Consideration | SaaS | VPC | On-Prem |

Setup time | Minutes | Days | Weeks |

Patching | Automatic | Vendor-managed in VPC | Manual deployment |

Latency | 2-5 seconds | 1-3 seconds | Variable |

Ops burden | None | Low | High |

Compliance audit | Rely on vendor certs | Shared responsibility | Full ownership |

Feature rollout | Immediate | Days | Weeks to months |

Most teams with proprietary code choose VPC—90% of SaaS convenience with control satisfying security audits. On-prem is reserved for environments where regulations explicitly prohibit cloud processing.

Security Requirements Checklist

Use this to evaluate any AI code review platform:

1. Deployment boundary control: Must support on-prem, VPC, or private cloud, not just multi-tenant SaaS

2. Data minimization: Analyze diffs and context only, never require full repository access

3. Encryption: TLS 1.3 in transit; AES-256 at rest; CMEK option

4. IAM + SSO + RBAC: SAML/OIDC integration, role-based access mapped to repository permissions

5. Comprehensive audit logging: Immutable logs capturing all AI interactions, what code analyzed, by whom, what suggestions made

6. Data retention controls: Configurable policies; guaranteed deletion within 30 days; no soft delete

7. Zero-training guarantee: Contractual and technical guarantee code never trains AI models

8. Subprocessor transparency: Complete list of third-party AI providers with data processing agreements

9. Incident response SLAs: Documented IR plan; breach notification within 24 hours

10. Prompt injection controls: Protection against malicious code comments attempting to manipulate AI

Conclusion: A Decision You Can Defend

AI code review is safe for proprietary code when you choose a platform built for it from day one. The risks are architectural problems with architectural solutions. Before approving any tool, verify:

Deployment control: Can you run it on-prem or in your VPC?

Training guarantees: Does vendor contractually and architecturally prevent training on your code?

Context awareness: Does AI enforce your security standards, not generic checks?

Audit trail: Can you prove exactly what data moved where and when?

Compliance certification: SOC2, ISO 27001, and industry-specific requirements should be table stakes

Ready to validate AI code review in your environment? Book a CodeAnt AI security and architecture walkthrough to map deployment models and governance controls to your proprietary code constraints. Our team will show you exactly how no-training guarantees, controlled residency, and org-specific policies work in practice, then help you design a pilot satisfying both engineering velocity and security requirements.

Start 14-day free trial and see how teams achieve 70%+ faster reviews without compromising IP protection.