AI Code Review

Feb 13, 2026

Does AI Code Review Leak Source Code?

Sonali Sood

Founding GTM, CodeAnt AI

Your CISO just asked the question that stops every AI tool procurement cold: "Does this leak our code?" It's not paranoia, it's due diligence. Your codebase contains proprietary algorithms, customer data handling logic, and security implementations that can't end up in someone else's training dataset or get exposed through a misconfigured cloud bucket.

The uncomfortable truth? Most AI code review tools can leak your code, even when vendors promise they won't. The difference isn't in privacy policies, it's in architecture. Tools that retain code for 30-90 days, send snippets to third-party LLMs, or store analysis results in cloud databases create leak surfaces that encryption alone can't eliminate.

This article maps exactly where AI code review tools handle your source code, identifies the real leak risks in the data pipeline, and provides a vendor evaluation framework to distinguish between platforms that promise not to leak and those engineered so they can't.

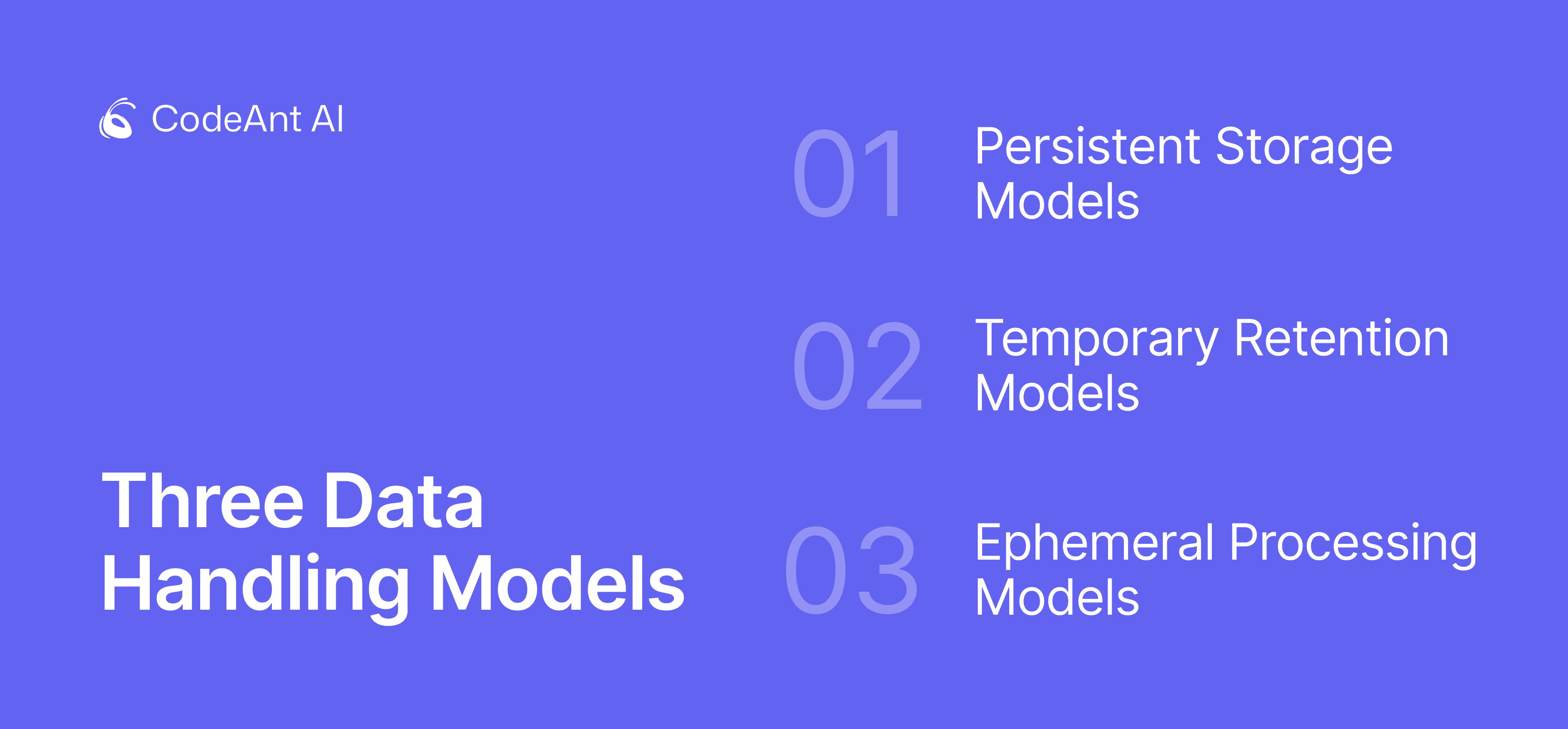

The Short Answer: Three Data Handling Models

AI code review tools fall into three architectural categories with radically different leak risk profiles:

1. Persistent Storage Models

Some tools retain code indefinitely to train proprietary models. Code snippets are stored in cloud buckets, indexed for training pipelines, and kept in backups. If your code is training data, it's permanently part of the model's knowledge base, and vulnerable to every attack vector that targets stored data.

Risk profile: Highest exposure. Code persists across your entire relationship with the vendor and potentially beyond.

2. Temporary Retention Models

The current industry standard. Tools retain code for 30-90 days in cloud storage for "conversation history," audit logs, or model context. The code is encrypted at rest, access is logged, and retention is time-limited. This model is SOC 2 compliant, but the code still exists in a database somewhere.

Risk profile: Medium exposure. A 30-90 day window where your code sits in vendor infrastructure, encrypted but accessible to authorized users (and potential attackers).

3. Ephemeral Processing Models

Code is analyzed in isolated, stateless containers, results are returned, and the code is immediately discarded. No databases, no persistent storage, no retention period. The code exists in memory for milliseconds during analysis, then vanishes.

Risk profile: Minimal exposure. Nothing persists to leak, making breaches structurally impossible rather than policy-prohibited.

The critical distinction: Tools in category 2 are policy-compliant but still create attack surfaces. Tools in category 3 are architecturally incapable of leaking because retention is impossible by design.

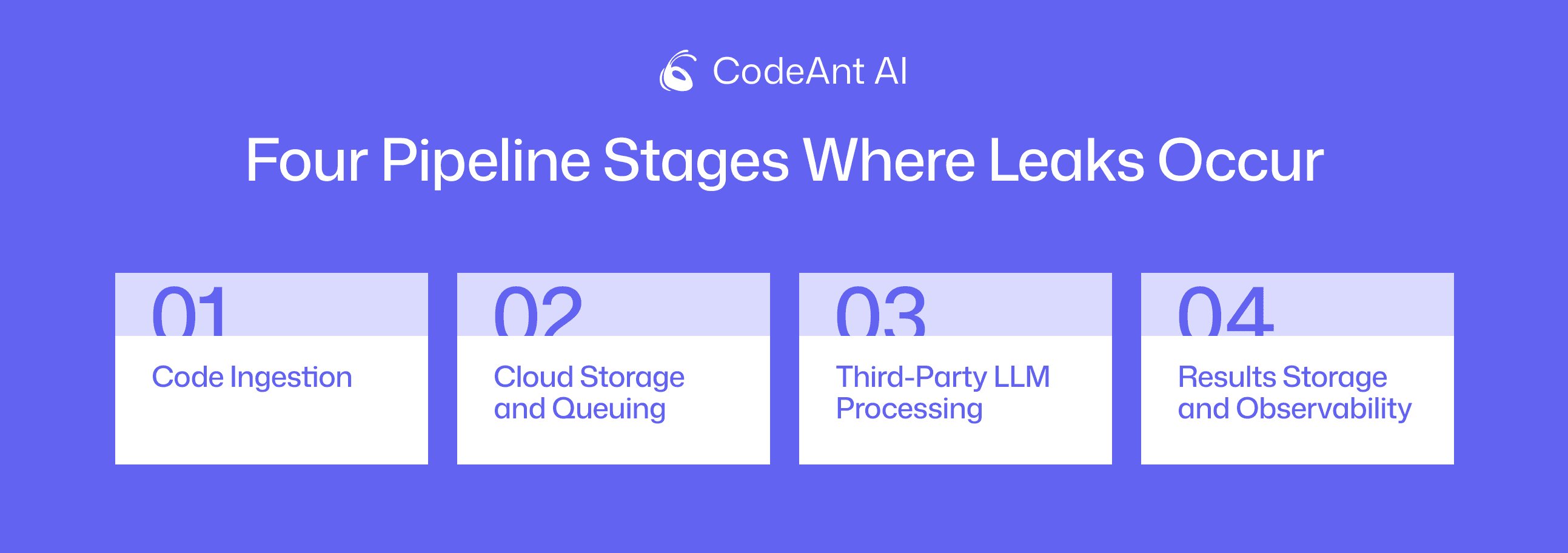

The Four Pipeline Stages Where Leaks Occur

Understanding where leaks happen requires mapping the full data flow. Most engineering leaders focus on "does the vendor train on my code?" but that's only one of four critical stages:

Stage 1: Code Ingestion

When a pull request triggers review, your code travels from your Git provider to the AI platform. Exposure points include:

Webhook payload logging: Full diffs logged at API gateways for debugging, retained for 30-90 days

Overprivileged access tokens: Tools requesting

repo:*scope instead of minimalpull_request:readRepository mirroring: Some platforms clone entire repos to vendor storage "for faster analysis"

What to verify: Review OAuth scopes, confirm webhook endpoints use signature validation, check whether the tool processes diffs in-stream or clones repositories.

Stage 2: Cloud Storage and Queuing

After ingestion, most platforms temporarily store your code while waiting for model capacity:

Object storage buckets (S3, GCS, Azure Blob): Misconfigured permissions are the #1 cause of source code leaks

Message queues: Code persists in queues until consumed; dead-letter queues can retain failed jobs indefinitely

Database staging tables: Creates indexed, queryable copies of your source

Even with AES-256 encryption, this data is accessible to anyone with cloud IAM permissions—platform engineers, support staff, or attackers who compromise credentials.

Stage 3: Third-Party LLM Processing

The analysis often happens outside the vendor's infrastructure entirely:

External API calls: Code sent to OpenAI, Anthropic, or Google endpoints crosses organizational boundaries

Model provider retention: Even with zero-training policies, providers may retain inputs for 30 days for abuse monitoring

Prompt logging: LLM platforms log prompts for rate limiting and compliance

This is the hidden risk most teams miss. Even if your vendor has zero retention, the LLM provider they call doesn't.

Stage 4: Results Storage and Observability

After analysis, the platform returns findings—but the pipeline doesn't end there:

Conversation history: Full review threads stored to enable follow-up questions

Audit logs: Compliance logging that includes code snippets for forensic analysis

Analytics telemetry: Aggregated metrics that may include hashed or tokenized code samples

Key insight: The AI model itself is stateless and ephemeral. The infrastructure around the model creates leak risk.

Real Leak Scenarios Engineering Teams Face

Prompt Injection Attacks

The IDEsaster research demonstrated that malicious instructions embedded in code comments can manipulate AI tools to exfiltrate sensitive snippets or recommend vulnerable patterns. Tools that retain conversation history create a permanent record of both the attack payload and any leaked data.

Ephemeral processing advantage: Stateless architectures process each review in isolated containers. The attack surface exists only during analysis, no payload persistence means no long-term exposure.

Misconfigured Cloud Storage

A 2023 incident at a code analysis vendor exposed 90 days of analyzed code through an S3 bucket with overly permissive IAM policies. The data was encrypted, but encryption keys were accessible to the same misconfigured role.

Zero-storage advantage: No cloud buckets means no misconfiguration risk. You can't leak what you don't store.

Over-Permissive Service Accounts

AI review tools typically require GitHub App installations or service accounts. A fintech customer granted repo:* scope instead of scoped pull_request:read. When the vendor's infrastructure was compromised via a supply-chain attack, attackers cloned 200+ private repositories.

Least-privilege advantage: Tools needing only PR diff access, not full repo clones, minimize damage from credential compromise.

Insider Threats

A Fortune 500 customer discovered their previous AI review vendor's support team had database-level access to "debug customer issues," meaning vendor engineers could theoretically query any customer's stored code.

Zero-storage advantage: If there's no database of customer code, there's nothing for employees to access, even with elevated privileges.

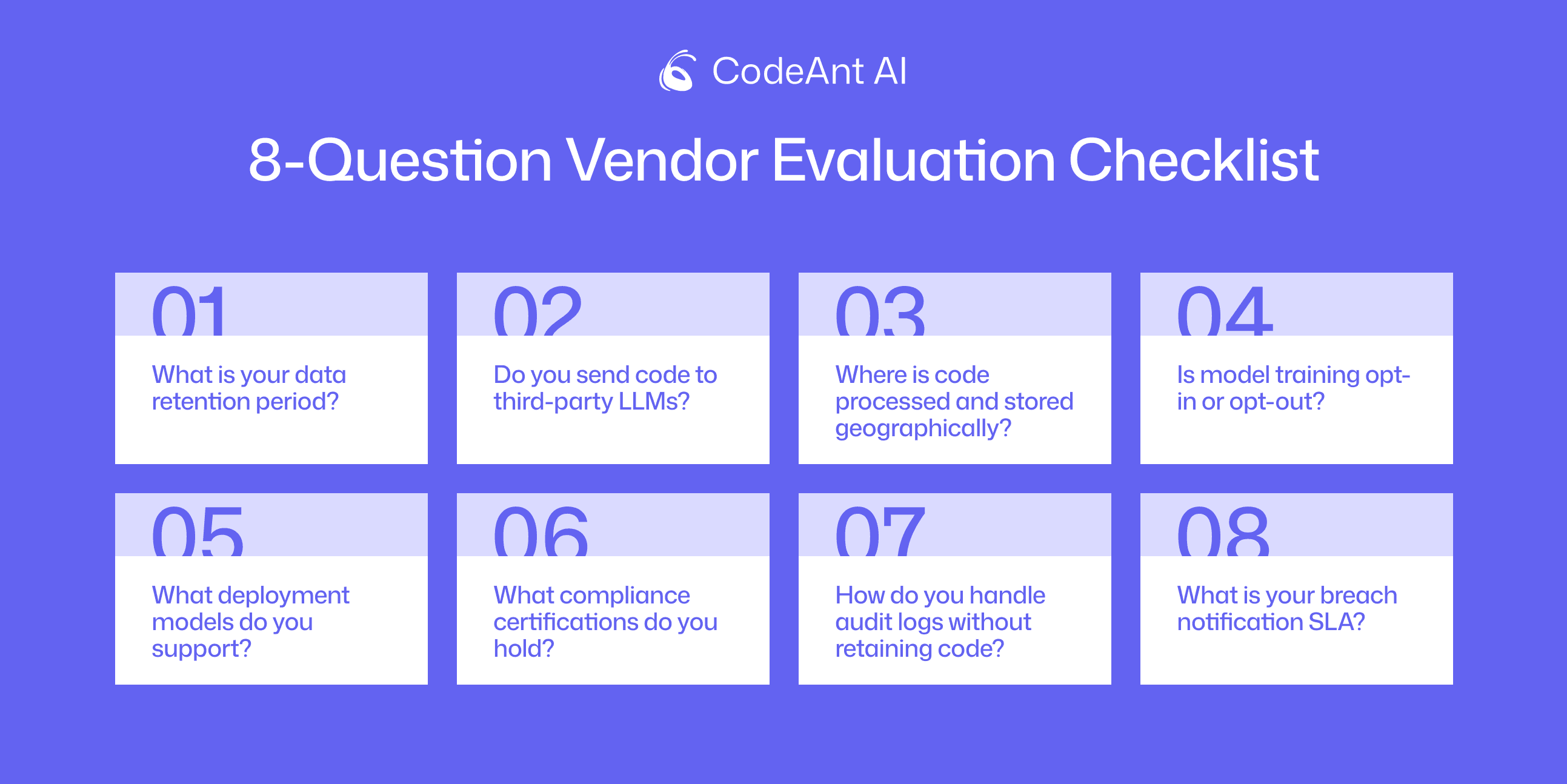

The 8-Question Vendor Evaluation Checklist

Ask these questions in writing, with links to documentation. If vendors can't provide clear answers, that's your red flag.

1. What is your data retention period?

Look for: Specific timeframes ("zero retention," "30 days," "indefinite"). If not "zero," ask where it's stored, who has access, and what triggers deletion.

Red flag: "As long as necessary" or any answer without a maximum retention window.

2. Do you send code to third-party LLMs?

Look for: Clear yes/no, and if yes, which providers, under what terms, and whether you can bring your own model.

Red flag: "We use industry-leading AI providers" without naming them, or "enterprise agreements protect your data" without explaining data flow.

3. Where is code processed and stored geographically?

Look for: Specific regions ("US-East-1," "EU-West-1") and whether you can enforce data residency.

Red flag: "Global cloud infrastructure" without letting you specify or restrict locations.

4. Is model training opt-in or opt-out?

Look for: Opt-in by default (explicit consent required), with audit logs proving data wasn't included in training.

Red flag: Opt-out policies (included unless you take action) or "we don't train by default" (the word "default" is doing heavy lifting).

5. What deployment models do you support?

Look for: Self-hosted, VPC, or air-gapped options that keep code in your infrastructure.

Red flag: SaaS-only with no on-prem option, or "enterprise plan required" without transparent pricing.

6. What compliance certifications do you hold?

Look for: SOC 2 Type II, ISO 27001, GDPR compliance, HIPAA readiness. Ask for actual audit reports, not just badges.

Red flag: "Compliance in progress" or "we follow industry best practices" without third-party validation.

7. How do you handle audit logs without retaining code?

Look for: Metadata-only logging (who reviewed what, when, which files) without storing code content.

Red flag: "We log all activity for security" without clarifying whether logs include code snippets.

8. What is your breach notification SLA?

Look for: Specific timeframe ("within 72 hours") and clear statement of what data could be compromised.

Red flag: "We will notify customers promptly" without addressing the blast radius of a breach.

Question | Red Flag Answer | Best-Practice Answer |

Retention period | "As long as necessary" | "Zero retention—ephemeral processing" |

Third-party LLMs | "Enterprise agreements protect data" | "Self-hosted or BYOM available" |

Geographic storage | "Global infrastructure" | "Customer-controlled regions" |

Training policy | "Opt-out available" | "Never used for training—architecturally impossible" |

Deployment options | "Cloud-only" | "Self-hosted, VPC, air-gapped supported" |

Compliance certs | "In progress" | "SOC 2 Type II, ISO 27001 in scope" |

Audit logging | "Full activity logs" | "Metadata-only, no code content" |

Breach SLA | "Per applicable law" | "24-hour notification, forensic support" |

Policy-Based vs. Architecture-Based Security

SOC 2, ISO 27001, and GDPR compliance are essential, but they verify that a vendor has controls in place, not that their architecture minimizes risk. A tool can be fully compliant while retaining code for 90 days, sending it to third-party LLMs, and storing it in cloud databases.

Compliance validates the process. Architecture determines risk.

What "Encrypted at Rest" Really Protects

Encryption at rest (AES-256) protects stored data from unauthorized access if an attacker gains physical access to storage media. It does not protect against:

Authorized access by vendor employees (they have the decryption keys)

Application-layer breaches (SQL injection, API vulnerabilities)

Cloud provider access (AWS/GCP/Azure admins can access encrypted data)

Insider threats (malicious employees with system access)

Subpoenas and legal requests (encrypted data can be decrypted and handed over)

The stronger approach: No data at rest means encryption is unnecessary. If code is processed ephemerally and never persists, there's nothing to encrypt, and nothing to leak.

Why "We Don't Train on Your Code" Isn't Enough

This promise addresses one leak vector (model training) while leaving others intact. Even with no-training policies, code still:

Sits in cloud storage for 30-90 days

Passes through third-party LLM APIs subject to their data handling

Exists in audit logs and conversation histories

Remains accessible to vendor employees with database access

The architectural difference: Zero-retention platforms don't need "we won't train" policies because training is impossible, there's no data to train on.

How Zero-Retention Architecture Works

CodeAnt AI's ephemeral processing model demonstrates how architectural security eliminates leak risk:

Stateless Execution Flow

Code ingestion → PR diff pulled directly from Git provider via OAuth

In-memory analysis → Code loaded into sandboxed container, analyzed by AI models

Result generation → Review comments and findings compiled

Immediate disposal → Container destroyed, all code data purged from memory

Metadata logging → Only audit trail metadata persisted (timestamp, user ID, repo name)

The key: There's no database storing code, no S3 bucket caching diffs, no training pipeline ingesting commits. Code literally doesn't exist in the infrastructure 30 seconds after analysis completes.

Deployment Options for Strict Requirements

VPC Deployment: Run CodeAnt entirely within your AWS, Azure, or GCP environment. Code never transits public networks.

On-Premises: Deploy on your hardware behind corporate firewalls. Ideal for healthcare (HIPAA), defense (ITAR), and financial services (PCI DSS).

Bring-Your-Own-Model (BYOM): Use your internally hosted LLMs instead of third-party APIs. Eliminates external dependencies entirely.

Audit Logs Without Code Retention

Security teams assume compliance requires storing code for audit trails. CodeAnt proves otherwise with metadata-only logging:

What we log:

User ID, repository name, PR number, timestamp

Finding severity, rule triggered, analysis duration

Policy decision (approved/flagged/blocked)

What we never log:

Source code content or diffs

File names or directory structures

Code snippets from findings

This satisfies SOC 2, ISO 27001, and HIPAA requirements without creating data retention risks.

Implementation: A Security-First Pilot

Phase 1: Scope Selection (Days 1-7)

Start with 2-3 low-sensitivity repositories with active development but no customer data or proprietary algorithms. Establish baseline metrics:

Average PR review cycle time

Defect escape rate (bugs found in production within 30 days)

Security findings per 1,000 lines of code

Manual review hours per week

Phase 2: Configure Guardrails (Days 8-10)

Before analyzing any code:

Disable any "conversation history" or retention features

Verify ephemeral processing (in-memory only, no disk writes)

Set organizational policies (restrict to feature branches, exclude sensitive paths)

Require manual approval for high-severity findings

Phase 3: Parallel Validation (Days 11-25)

Run AI review alongside existing human review, don't replace it yet:

Human reviewers see AI findings first and decide whether to surface them

Track agreement rates: What percentage of AI-flagged issues do humans confirm?

Measure time savings: How much faster do reviews complete?

Log false positives to refine exclusion rules

Phase 4: Measure and Expand (Days 26-30)

Compare pilot metrics to baseline. If metrics improve by 20%+ and false positives stay below 15%, expand to 5-10 more repos and enable auto-commenting for low-severity findings.

The Bottom Line: Architecture Beats Policy

If your AI code review tool retains code anywhere, sends snippets to third-party LLMs, or writes source into logs, leakage risk exists, even with SOC 2 and strong encryption. The difference between vendors isn't in their privacy promises; it's in whether their architecture makes leaks structurally impossible.

Policy-based security: "We promise not to leak your code" (trust required)

Architecture-based security: "We can't leak your code because we never store it" (trust unnecessary)

Three Immediate Next Steps

Map your current tool's data flow against the four pipeline stages: ingestion, analysis, storage, and output. Identify every point where code persists.

Run the 8-question vendor checklist to audit retention policies, third-party LLM usage, and logging practices.

Pilot a zero-retention approach starting with low-sensitivity repos. Test whether ephemeral analysis delivers the quality improvements you need without the architectural leak surface.

CodeAnt AI processes code in isolated, ephemeral sandboxes with zero retention, no third-party LLM dependencies, and no persistent storage. You get faster reviews, automated security scanning, and quality enforcement, without creating leak surfaces that compliance can't close.

Start your free trial and see how zero-retention architecture changes what's possible for teams shipping code that can't afford to leak.