AI Code Review

Feb 12, 2026

How Teams Handle Sensitive Code with AI Tools Securely

Sonali Sood

Founding GTM, CodeAnt AI

You install GitHub Copilot to ship faster. Two weeks later, your security team finds AWS credentials in a suggested code snippet. The AI was just doing its job, learning from your codebase, but now production keys are sitting in training data, and you're scrambling to rotate everything.

This isn't hypothetical. Engineering teams adopting AI coding assistants face a trust paradox: these tools need deep codebase access to be useful, but unrestricted access means credentials, PII, and proprietary logic get indexed by models you don't control. Most teams respond with manual fixes (.cursorignore files, privacy modes, organization-wide exclusions), but these approaches share a fatal flaw: they rely on developers to predict what's sensitive and maintain rules that break the moment your codebase evolves.

This guide breaks down why manual configuration fails at scale, what modern AI-native security looks like, and how platforms like CodeAnt AI eliminate the configuration nightmare entirely. You'll learn the three-layer approach that protects credentials automatically, adapts to your organization's patterns, and gives you audit-ready visibility, without adding a single .ignore file to your repo.

The Trust Paradox: Why AI Security Is Different

AI coding assistants deliver real value: faster code reviews, automated refactoring, context-aware completions. But these capabilities require deep codebase access: reading authentication logic, database schemas, and API integrations. This creates exposure that traditional security models weren't designed to handle.

What actually happens when AI tools access your code:

Credentials get indexed: That .env.example file? The AI sees it alongside your actual .env and can't always distinguish production from placeholder

PII appears in suggestions: Test fixtures containing real customer data become training examples

Proprietary logic gets embedded: Your competitive-advantage algorithms influence AI suggestions across the team

Compliance boundaries blur: HIPAA-regulated data in one file can influence suggestions in completely different parts of the codebase

The traditional security model assumes code leaves your environment at deployment. AI tools break that assumption: code leaves during development, often before it's reviewed or tested.

What "sensitive code" actually means

Protecting sensitive code requires understanding four distinct categories:

1. Credentials and secrets

API keys, database passwords, OAuth tokens, private keys

Often appear in files with generic names: config.py, utils.js, constants.ts

Example: Stripe key in src/lib/payment-helpers.ts bypasses .env exclusions

2. Personally Identifiable Information (PII)

Customer emails, phone numbers, addresses in test fixtures

Sample data in seed files, migration scripts

Regulatory exposure: GDPR, CCPA violations if PII appears in AI training data

3. Proprietary business logic

Pricing algorithms, recommendation engines, fraud detection rules

Trade secrets in seemingly innocuous utility functions

Competitive risk: AI suggestions could leak your differentiation

4. Compliance-regulated data

HIPAA-protected health information, SOC 2-scoped authentication flows

PCI DSS payment processing logic

Audit requirement: prove sensitive data never entered AI context

These patterns don't follow predictable naming conventions. A file called helpers.js might contain your most sensitive IP. Filename-based exclusions are fundamentally insufficient.
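To make the gap concrete, here is a minimal sketch in Python. The exclusion patterns and the repository listing are hypothetical, and the matching is a simplification of how real ignore files behave, but it shows how name-based rules skip exactly the kind of files described above.

```python
from fnmatch import fnmatch
from pathlib import PurePosixPath

# Hypothetical exclusion patterns, similar in spirit to an ignore file.
EXCLUDE_PATTERNS = [".env", ".env.*", "*.pem", "secrets/*"]


def is_excluded(path: str) -> bool:
    """Return True if the full path or its basename matches any pattern."""
    name = PurePosixPath(path).name
    return any(fnmatch(path, p) or fnmatch(name, p) for p in EXCLUDE_PATTERNS)


# Hypothetical repository: path -> whether the file actually holds a secret.
REPO = {
    ".env": True,
    "secrets/prod.pem": True,
    "src/lib/payment-helpers.ts": True,  # Stripe key hardcoded in a helper
    "utils.py": True,                    # AWS key in a generic module
    "README.md": False,
}

# Files that contain secrets but are NOT covered by any exclusion rule.
missed = sorted(
    path for path, has_secret in REPO.items()
    if has_secret and not is_excluded(path)
)
print(missed)
```

The two files left in missed both hold secrets, and neither filename gives a pattern anything to match.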

Why Current Approaches Fail at Scale

File-level exclusions: The 40% solution

Most teams start by blocking entire files or directories by name or path.

Where it breaks:

Generic filenames: AWS keys in utils.py or Stripe API keys in config.js won't match your patterns

Test data with real PII: test_fixtures.py contains actual customer emails but the filename doesn't signal "sensitive"

Maintenance burden: Every new service adds new patterns. At 100+ developers, you're managing dozens of tool-specific ignore files that drift out of sync

Real cost: 8-12 hours initial setup, 2-3 hours monthly maintenance per repo. At scale, configuration becomes a full-time job.

Privacy modes: What they actually do

Enabling "privacy mode" or opting out of training sounds reassuring but addresses the wrong threat:

What privacy mode does:

Prevents your code from training future models

Stops your data from appearing in other users' suggestions

What it doesn't do:

Prevent the AI from reading sensitive files during code generation

Stop credentials from appearing in suggestions (the AI still sees them in context)

Provide audit trails

Privacy mode prevents training, not exposure. The primary risk remains unaddressed.

Organization-wide policies: Better but still brittle

Centralized policy management (GitHub Copilot's content exclusions, for example) represents the most sophisticated manual approach.

Why it still breaks:

Pattern maintenance becomes full-time work: Every new third-party service adds credential formats. Every compliance requirement adds PII patterns.

False negatives from pattern drift: Your AWS pattern catches AKIA... but misses new IAM role formats.

No semantic understanding: Regex matches strings, not meaning. Can't distinguish real API keys from code comments explaining key format.

Shadow AI tools bypass policies: Your GitHub Copilot policy is airtight, but 30% of developers use Cursor with zero controls.

On a 50-person team, you're managing 40-60 patterns. On a 200-person team, that grows to 200+ patterns across 8-10 tools with different syntaxes and no unified visibility.
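Both regex failure modes are easy to reproduce. The sketch below assumes a typical pattern for long-term AWS access key IDs (the AKIA prefix followed by 16 uppercase letters or digits) and three hypothetical lines of code. The first key is the example value from AWS's own documentation; the third is a made-up value using the ASIA prefix that AWS assigns to temporary credentials.

```python
import re

# Assumed pattern: matches long-term AWS access key IDs only.
AWS_KEY_RE = re.compile(r"\bAKIA[0-9A-Z]{16}\b")

lines = [
    'aws_key = "AKIAIOSFODNN7EXAMPLE"',                   # hardcoded key
    "# Access key IDs look like AKIAIOSFODNN7EXAMPLE",    # comment about the format
    'temp_key = "ASIAIOSFODNN7EXAMPLE"',                  # hypothetical temporary key
]

# One boolean per line: did the regex flag it?
flags = [bool(AWS_KEY_RE.search(line)) for line in lines]
print(flags)
```

The regex flags the comment exactly as it flags the hardcoded key, and it says nothing about the third line. Fixing either case means adding or tuning another pattern by hand.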

The AI-Native Approach: Three Layers of Automated Protection

CodeAnt represents a fundamental shift from manual configuration to AI-powered context awareness. Instead of requiring developers to enumerate sensitive patterns, CodeAnt's AI analyzes code semantics to identify and protect credentials, PII, and proprietary logic automatically.

Layer 1: Pre-processing detection and redaction

Before any AI model sees your code, CodeAnt's engine scans every file using semantic analysis:

How it works:

Pattern recognition beyond regex: Identifies sk_live_4eC39HqLyjWDarjtT1zdp7dc as a Stripe key regardless of filename

Context-aware classification: Distinguishes production tokens from documentation examples

Multi-format credential detection: AWS keys, GCP service accounts, database connection strings, OAuth tokens across 40+ formats

PII identification: SSNs, credit cards, emails in test fixtures, phone numbers in sample data

Real-time redaction: AI models still get the surrounding codebase context they need for accurate reviews and suggestions, but detected sensitive values are replaced before they enter the processing pipeline.
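The general idea of redacting before a model sees the code can be sketched in a few lines. This is not CodeAnt's engine: it is a simplified stand-in that uses three illustrative regexes where the product description talks about semantic analysis, and the function and label names are hypothetical.

```python
import re

# Illustrative detectors only; a real system would cover far more formats.
PATTERNS = {
    "STRIPE_KEY": re.compile(r"sk_live_[0-9a-zA-Z]{24,}"),
    "AWS_ACCESS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
}


def redact(source):
    """Replace detected values with placeholders; return text and finding labels."""
    findings = []
    for label, pattern in PATTERNS.items():
        matches = pattern.findall(source)
        findings.extend([label] * len(matches))
        source = pattern.sub(f"<REDACTED:{label}>", source)
    return source, findings


snippet = (
    'stripe.api_key = "sk_live_4eC39HqLyjWDarjtT1zdp7dc"\n'
    'admin = "jane.doe@example.com"\n'
)
safe_text, found = redact(snippet)
print(safe_text)
print(found)
```

Only safe_text would be passed on to a model. The structure of the code survives, so a reviewer (human or AI) can still see that a Stripe key is being assigned, just not its value.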

Layer 2: Adaptive policies that learn your organization

Static rules break because every organization has unique sensitive patterns. CodeAnt's AI learns these automatically:

Automatic evolution:

Organization-specific pattern learning: After analyzing your first 10 PRs, CodeAnt identifies internal credential formats (e.g., ACME_API_KEY_v2_) and adds them to protection rules

Tech stack awareness: Detects framework-specific secrets (Django SECRET_KEY, Rails secret_key_base) based on dependencies

Custom PII detection: Learns that your customer_tax_id or internal_employee_code fields contain sensitive data

Proprietary logic identification: Flags trade-secret algorithms based on code complexity, uniqueness, and access patterns

Unlike manual .cursorignore files requiring updates across every developer's machine, CodeAnt's policies update centrally and apply instantly.

Layer 3: Immutable audit trail and real-time visibility

Developer transparency:

Inline protection annotations showing what was redacted and why

Pre-commit warnings for new credential types

False positive feedback loop for continuous improvement

Security team audit:

| Audit Feature | What It Tracks | Compliance Value |
| --- | --- | --- |
| Detection log | Every sensitive pattern with file path and line number | Proves no credentials exposed to AI (SOC 2, ISO 27001) |
| Redaction history | Immutable record of what was stripped | Demonstrates defense-in-depth |
| Policy changes | Automatic and manual updates with timestamps | Shows security posture evolution |
| Access patterns | Which developers triggered sensitive file reviews | Identifies insider risk |

Real-time dashboard:

Credential exposure prevented: 847 credentials redacted across 1,203 PRs this month

New patterns detected: 12 organization-specific formats learned automatically

Policy coverage: 99.4% of codebase protected

Compliance status: Zero sensitive data exposure incidents in last 90 days
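What makes a log "immutable" in practice is usually tamper evidence. One common technique is a hash chain, where each entry stores the hash of the one before it. The sketch below illustrates that technique in general terms; it is an assumption about how such a trail could be built, not a description of CodeAnt's storage, and the event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(prev_hash, event):
    """Hash the previous entry's hash together with this event's content."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event, chaining it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)})


def verify(log):
    """Return True only if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"file": "utils.py", "line": 12, "type": "AWS_ACCESS_KEY"})
append_entry(audit_log, {"file": "config.js", "line": 3, "type": "STRIPE_KEY"})
print(verify(audit_log))
```

Editing any earlier entry changes its hash, which breaks every link after it, so an auditor can check the whole history by recomputing the chain.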

Implementation: From Manual Rules to Automated Protection

Week 1: Discovery and baseline

Inventory your landscape:

Catalog approved AI tools (GitHub Copilot, Cursor, Claude Code) and identify shadow AI usage

Map repository access across tools

Audit existing exclusion rules (most teams discover inconsistencies immediately)

Establish your sensitive data taxonomy:

Credentials: API keys, OAuth tokens, database passwords, SSH keys

PII: Customer data in test fixtures, seed data, mock objects

Proprietary logic: Pricing algorithms, fraud detection, recommendation engines

Compliance-regulated data: HIPAA, PCI DSS, SOC 2-scoped secrets

CodeAnt accelerates discovery: Connect your GitHub/GitLab organization and get an instant baseline report showing exactly what's exposed today.

Week 2-3: Pilot on high-risk repository

Selection criteria:

High-risk repo (payment processing, authentication, customer data)

Active team of 5-10 developers using AI assistants

Clear success metrics: credential exposure incidents, PR review time, developer satisfaction

Onboarding in 10 minutes:

Install CodeAnt AI extension or enable GitHub App

Connect pilot repository via OAuth

Protection starts immediately with pre-processing redaction

Developers see real-time feedback on what's protected

Governance checkpoint: Define policy owners (security team for org-wide patterns, engineering leads for proprietary logic, DevOps for CI/CD integration).

Week 4: Integrate with PR review and CI

PR review integration:

CodeAnt automatically comments on PRs when sensitive patterns are detected:

Recommended actions:

1. Move AWS credentials to environment variables

2. Replace real customer emails with faker-generated test data

CI/CD pipeline:
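In CI, the same detection becomes a gate that fails the build. The following is a minimal sketch rather than CodeAnt's integration: it assumes a hypothetical mapping of changed file paths to their contents and two illustrative regexes, and it leaves out how the changed files are collected from your CI system.

```python
import re
import sys

# Illustrative patterns: AWS long-term access key IDs and Stripe live keys.
SECRET_RE = re.compile(r"AKIA[0-9A-Z]{16}|sk_live_[0-9a-zA-Z]{24,}")


def find_secrets(files):
    """Return (path, line_number) for every line that looks like a secret."""
    findings = []
    for path, text in files.items():
        for number, line in enumerate(text.splitlines(), start=1):
            if SECRET_RE.search(line):
                findings.append((path, number))
    return findings


def gate(files):
    """Report findings on stderr and return a process exit code."""
    findings = find_secrets(files)
    for path, number in findings:
        print(f"{path}:{number}: possible secret", file=sys.stderr)
    return 1 if findings else 0


# Hypothetical changed files in a pull request.
changed_files = {
    "app/settings.py": "DEBUG = False\nAWS_KEY = 'AKIAIOSFODNN7EXAMPLE'\n",
    "app/views.py": "def index():\n    return 'ok'\n",
}
exit_code = gate(changed_files)
```

A CI step would finish with sys.exit(exit_code), so a non-zero result blocks the merge until the credential is moved to an environment variable or secret manager.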

Week 5-8: Expand org-wide with shadow AI detection

Phased rollout:

| Week | Scope | Focus |
| --- | --- | --- |
| 5 | Core platform (20-30 devs) | High-risk services, payment, auth |
| 6 | Product engineering (50-70 devs) | Customer-facing features, PII-heavy code |
| 7 | Infrastructure/DevOps (10-15 devs) | IaC repos, Terraform, Kubernetes |
| 8 | Full organization (100+ devs) | All repositories |

Shadow AI detection:

Recognizes ChatGPT-style patterns, Claude Code formatting

Flags large code blocks without PR review

Retroactively scans for exposed credentials

Measuring Impact: Security + Productivity

Security posture metrics

Secret exposure incidents

Baseline: Run 90-day historical scan of merged PRs

Target: 95%+ reduction in secrets reaching main branch within 30 days

Why it matters: Each prevented leak eliminates credential rotation overhead

Time-to-remediate

Baseline: Average response time for last 10 credential exposure incidents

Target: Reduce from 48-72 hours to 2-4 hours

Why it matters: Shrinks window of exposure, satisfies auditors

Policy coverage

Baseline: Most teams have 30-40% of repos with consistent security scanning

Target: 100% coverage within 60 days

Why it matters: Eliminates blind spots, satisfies SOC 2/ISO 27001
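When you compare a 90-day baseline against a 30-day target window, normalize both to the same period before computing the reduction. A small sketch of that arithmetic, using hypothetical incident counts:

```python
def rate_per_30_days(count, window_days):
    """Scale an incident count to a 30-day rate so windows are comparable."""
    if window_days <= 0:
        raise ValueError("window_days must be positive")
    return count * 30 / window_days


def percent_reduction(baseline, current):
    """Percentage drop from baseline to current."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - current) / baseline


# Hypothetical numbers: 60 secrets reached main during the 90-day baseline
# scan, and 1 reached main in the first 30 days after rollout.
baseline_rate = rate_per_30_days(60, 90)
current_rate = rate_per_30_days(1, 30)
reduction = percent_reduction(baseline_rate, current_rate)
print(f"{reduction:.1f}% reduction, target met: {reduction >= 95}")
```

With these numbers the baseline is 20 incidents per 30 days, so a single incident in the following month is a 95% reduction and just meets the target.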

Developer productivity metrics

PR review cycle time

Baseline: Median time over past 30 days, isolating security review duration

Target: 40-60% reduction in security-related delays

Why it matters: Features ship sooner, less context switching

Manual ignore rule changes

Baseline: Count commits modifying security config files over past quarter

Target: 80%+ reduction

Why it matters: Frees senior engineers for architecture work

Audit preparation time

Baseline: Hours spent on last SOC 2/ISO 27001/security questionnaire

Target: 70%+ reduction through automated audit trails

Why it matters: Less disruption to engineering roadmap

Reporting to stakeholders

For security leadership:

"We've eliminated 94% of credential leaks in 90 days, with 100% of repos now under automated security checks and SOC 2 evidence generated in 4 hours instead of 3 days."

For engineering leadership:

"PR review time dropped 45%, shipping features 2-3 days faster per sprint. Engineers spend 80% less time on security configurations, redirecting 15+ hours monthly to feature development."

For compliance teams:

"Automated audit trail shows 100% coverage across all code changes. Every detected secret includes timestamp, detection method, and remediation status—ready for audit review."

Minimum Viable Controls (Before Buying Anything)

If you're not ready for an AI-native platform, implement these baseline controls:

1. Automated secret detection at commit time

Install pre-commit hooks with detect-secrets, gitleaks, or truffleHog

Enforce secret managers for all credentials

Rotate credentials on 90-day schedule (30-day for developer tokens)
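If you want a feel for what a commit-time scanner looks for beyond fixed patterns, entropy is one widely used heuristic: random-looking strings score higher than ordinary identifiers. The sketch below is a simplified illustration with an assumed token pattern and threshold, not the implementation of any of the tools named above, and it leaves out the git wiring a real hook needs.

```python
import math
import re
from collections import Counter

# Assumed definition of a candidate token: 20+ characters from a key-like alphabet.
TOKEN_RE = re.compile(r"[A-Za-z0-9+/_=-]{20,}")


def shannon_entropy(text):
    """Bits of entropy per character, based on character frequencies."""
    total = len(text)
    return sum(-(n / total) * math.log2(n / total) for n in Counter(text).values())


def suspicious_tokens(line, threshold=4.0):
    """Return long tokens whose entropy meets or exceeds the threshold."""
    return [t for t in TOKEN_RE.findall(line) if shannon_entropy(t) >= threshold]


print(suspicious_tokens('stripe_key = "sk_live_4eC39HqLyjWDarjtT1zdp7dc"'))
print(suspicious_tokens('padding = "' + "a" * 30 + '"'))
```

The Stripe-style key is flagged while the repeated-character string is not. The threshold is a tuning knob: set it too low and long identifiers start triggering false positives, which is part of the ongoing maintenance these baseline controls carry.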

2. Environment isolation

Separate dev/staging/production credentials completely

Disable repository-wide indexing by default

Use read-only repository access for AI tools

Implement network-level restrictions for AI API calls

3. Approved tool list and usage policies

Document vetted tools with security review

Define usage policies per tool

Monitor for unapproved tool usage

Provide quarterly training on secure AI usage

4. Incident response playbook

Define what constitutes an AI security incident

Document immediate response steps (credential revocation within 15 minutes)

Assign security team ownership

Test the playbook quarterly

Reality check: These controls establish baseline security but require 8-12 hours of initial setup plus ongoing maintenance. They work; they're just not scalable.

Conclusion: Security That Scales With Your Team

Most teams start with file exclusions and privacy modes—reasonable first steps. But as codebases grow and AI tools multiply, manual configuration becomes the bottleneck. Filename-based rules miss 60%+ of sensitive data, static policies drift out of sync, and maintaining .ignore files across tools becomes a second job nobody wants.

The path forward requires:

Content-aware detection – Security that understands what code does, not just what it's named

Automatic redaction – Sensitive patterns stripped before AI models see them, with no manual maintenance

Continuous auditability – Immutable logs proving what was detected, when, and why

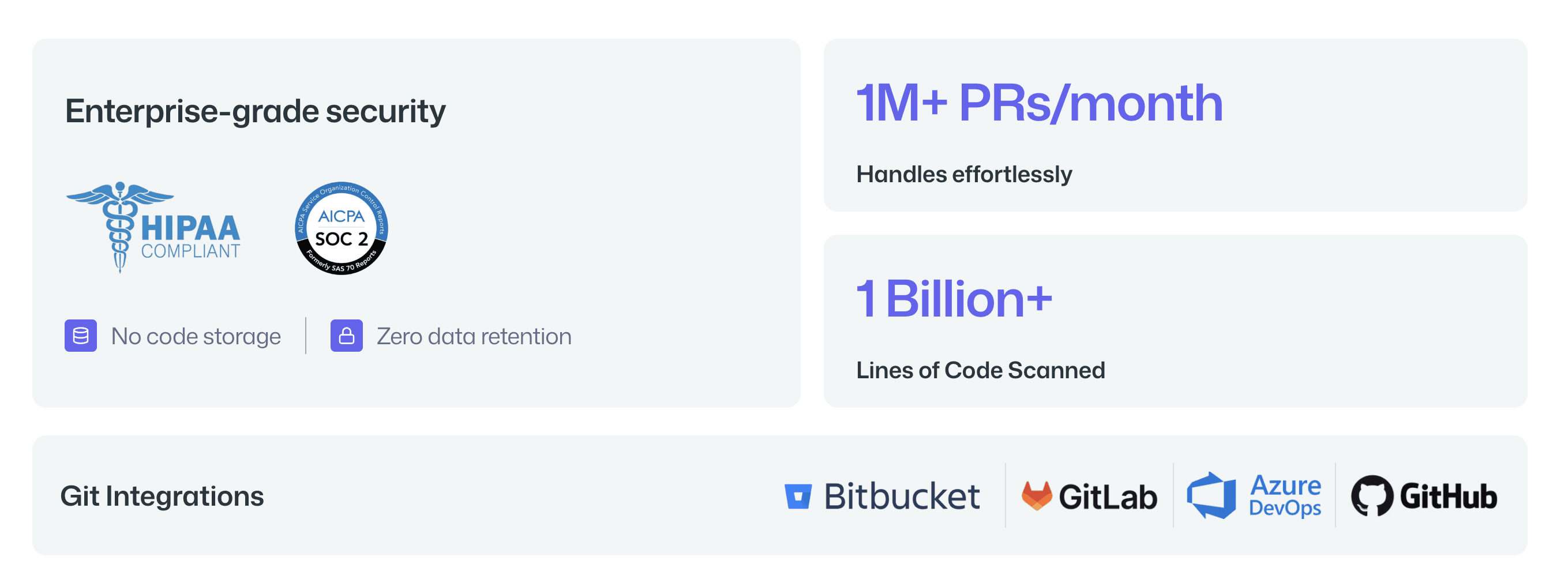

CodeAnt AI eliminates the configuration nightmare entirely. Our AI-native security layer analyzes your code semantically, redacts sensitive patterns in real-time, and provides full audit trails—with zero manual setup. Teams go from installation to protection in under 10 minutes, and security improves continuously as our models learn your organization's patterns.

Stop managing .ignore files and start protecting your code automatically. Start your 14-day free trial or book a 1:1 demo to see how CodeAnt AI reduces risk without slowing your developers down.