Dec 17, 2025

How AI Reduces Code Review Overhead for Teams

Amartya Jha

Founder & CEO, CodeAnt AI

Code reviews eat up 20-30% of engineering time at most organizations, and a surprising amount of that goes toward catching issues that never required human judgment in the first place. Style violations, common bug patterns, and security misconfigurations all follow predictable rules that AI can enforce automatically.

AI shifts the balance between self-review and peer review by handling mechanical checks so developers can focus on architecture, business logic, and mentorship. This article covers how AI changes what each review type focuses on, what AI can and can't replace, and practical strategies for combining AI automation with human judgment.

Why Self-Review and Peer Review Both Matter for Code Quality

AI changes the balance between self-review and peer review by acting as a third reviewer. Before AI, developers split their attention between catching syntax errors and evaluating design decisions. Now, AI absorbs repetitive work, so both self-review and peer review can focus on what machines can't evaluate.

Self-review happens when a developer examines their own code before requesting feedback. It catches obvious mistakes like typos, forgotten edge cases, and style violations. Peer review follows: a teammate evaluates the code for correctness, maintainability, and alignment with team standards.

Both matter, but for different reasons:

Self-review builds personal accountability and catches issues the developer already knows to look for

Peer review catches blind spots, spreads knowledge across the team, and validates design choices

The tension? Both consume developer time. And as teams scale, review bottlenecks slow everything down.

How AI Shifts the Balance Between Self-Review and Peer Review

AI doesn't replace either review type. Instead, it rebalances what each one focuses on. Think of AI as a tireless first-pass reviewer that handles mechanical checks so humans can concentrate on higher-order concerns.

AI Catches Issues Earlier in the Development Cycle

AI provides instant feedback during or even before PR submission. It flags bugs, style violations, and security vulnerabilities before peer reviewers ever see the code.

The result? Developers submit cleaner PRs. Peer reviewers start from a higher baseline instead of wading through formatting issues.

Peer Reviewers Focus on Architecture and Business Logic

With AI handling syntax, style, and common patterns, human reviewers redirect attention to what AI can't evaluate: design decisions, business requirements, and long-term maintainability. A peer reviewer's time becomes more valuable when they're not spending it on nitpicks.

Self-Review Becomes More Thorough with AI Assistance

Developers use AI feedback to improve their own code before requesting peer review. When AI flags a potential null pointer exception or a security risk, the developer fixes it immediately. This raises the quality bar of every submitted PR and reduces back-and-forth cycles.

What AI Can and Cannot Replace in Code Reviews

Setting realistic expectations matters here. AI excels at pattern recognition and rule enforcement, but it lacks context about your business, your users, and your team's unwritten conventions.

| Review Task | AI Capability | Human Requirement |
| --- | --- | --- |
| Syntax and style enforcement | High | Low |
| Security vulnerability detection | High | Medium (context needed) |
| Business logic validation | Low | High |
| Architecture decisions | Low | High |
| Knowledge transfer and mentorship | None | High |

Review Tasks AI Handles Effectively

AI performs well on tasks with clear rules: style enforcement, common bug patterns like null pointer risks and resource leaks, security scanning for SQL injection and hardcoded secrets, and duplication detection for copy-pasted code blocks.

Review Tasks That Still Require Human Judgment

Some decisions depend on context that AI doesn't have. Architectural fit, business logic, edge cases the developer didn't consider, and mentorship opportunities all require human evaluation.

When AI Assists Rather Than Decides

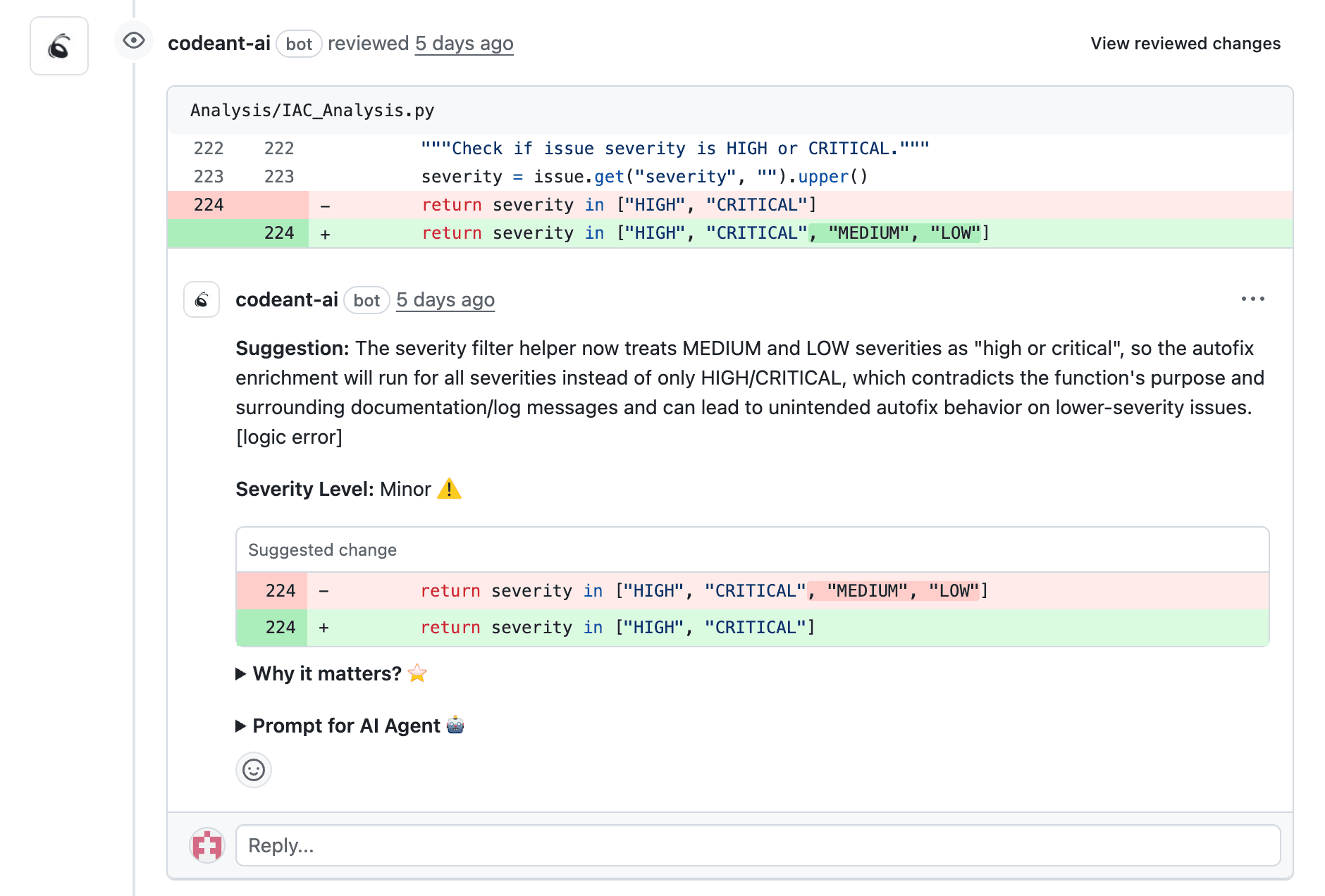

The "trust but verify" principle applies. AI suggestions are recommendations, not mandates. Humans retain final approval authority. AI might flag a function as too complex, but only a human knows whether that complexity is justified by the problem being solved.

How AI Enhances Developer Self-Review Before Pull Request Submission

AI transforms self-review from a manual checklist into an automated feedback loop. Platforms like CodeAnt AI provide this feedback automatically on every PR, before peer reviewers get involved.

Automated Detection of Bugs and Anti-Patterns

AI flags null pointer risks, likely infinite loops, resource leaks, and known anti-patterns. Developers fix these issues before peer review begins, which removes entire categories of feedback from the review thread.

Security Vulnerability Scanning During Development

Static application security testing (SAST) scans code for hardcoded secrets, SQL injection, XSS, and misconfigurations in real time. CodeAnt AI includes SAST as part of its unified platform, so security scanning happens alongside quality checks rather than as a separate step.
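To make the idea concrete, here is a minimal sketch of rule-based scanning in Python. It is not how CodeAnt AI or any particular SAST engine works; the two regular expressions and the rule names are hypothetical, and real tools rely on much larger rule sets plus data-flow analysis.

```python
import re

# Hypothetical, deliberately tiny rule set for illustration only.
RULES = {
    # A secret-looking name assigned a quoted literal, e.g. db_password = "..."
    "hardcoded-secret": re.compile(
        r"""(?i)(password|passwd|secret|api_key|token)\s*=\s*["'][^"']+["']"""
    ),
    # A SQL string literal joined to a value with + or % inside execute(...)
    "sql-string-concat": re.compile(
        r"""(?i)execute\(\s*["'].*["']\s*(\+|%)"""
    ),
}


def scan(source: str) -> list[tuple[int, str]]:
    """Return (line number, rule id) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for rule_id, pattern in RULES.items():
            if pattern.search(line):
                findings.append((lineno, rule_id))
    return findings
```

Pattern matching like this produces false positives and misses anything spread across lines, which is one reason the table above still lists human context as a requirement for security findings.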

Code Style and Standards Enforcement

AI enforces organization-specific style guides automatically. No more "add a space here" or "use camelCase" feedback cluttering the conversation.

Complexity and Maintainability Feedback

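Cyclomatic complexity counts the linearly independent paths through a function: a function with no branches scores 1, and each decision point (an if, a loop, a boolean operator) adds one. Higher numbers mean more test cases are needed to cover the code. AI identifies overly complex functions that would benefit from refactoring before review.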
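For readers who want to see the counting in practice, the sketch below approximates cyclomatic complexity for Python functions using the standard library `ast` module. The set of node types treated as decision points is a simplification chosen for this example, and branches inside nested functions are also counted toward the enclosing function.

```python
import ast

# Node types that each add one decision point (a simplified set).
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler,
                 ast.IfExp, ast.comprehension)


def cyclomatic_complexity(source: str) -> dict[str, int]:
    """Return an approximate complexity score per function in `source`."""
    scores = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = 1  # a function with no branches has exactly one path
            for child in ast.walk(node):
                if isinstance(child, _BRANCH_NODES):
                    score += 1
                elif isinstance(child, ast.BoolOp):
                    # `a and b and c` adds two short-circuit branches
                    score += len(child.values) - 1
            scores[node.name] = score
    return scores


SAMPLE = '''
def classify(n):
    if n < 0:
        return "negative"
    for d in range(2, n):
        if n % d == 0 and d > 1:
            return "composite"
    return "other"
'''

print(cyclomatic_complexity(SAMPLE))  # {'classify': 5}
```

The score of 5 comes from the base path plus two `if` statements, one `for` loop, and one `and`. A team might flag functions above a threshold of its own choosing.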

How AI Supports Peer Reviewers Without Replacing Human Judgment

A common concern: does AI diminish peer review's value? Actually, it elevates it. When AI handles routine checks, peer reviewers focus on what matters most.

Pre-Filtering Routine Issues Before Human Review

By the time a peer reviewer sees the code, AI has already flagged and helped fix routine issues. Reviewers start from a higher baseline rather than wading through style violations to find the real problems.

Providing Context and Change Summaries for Faster Review

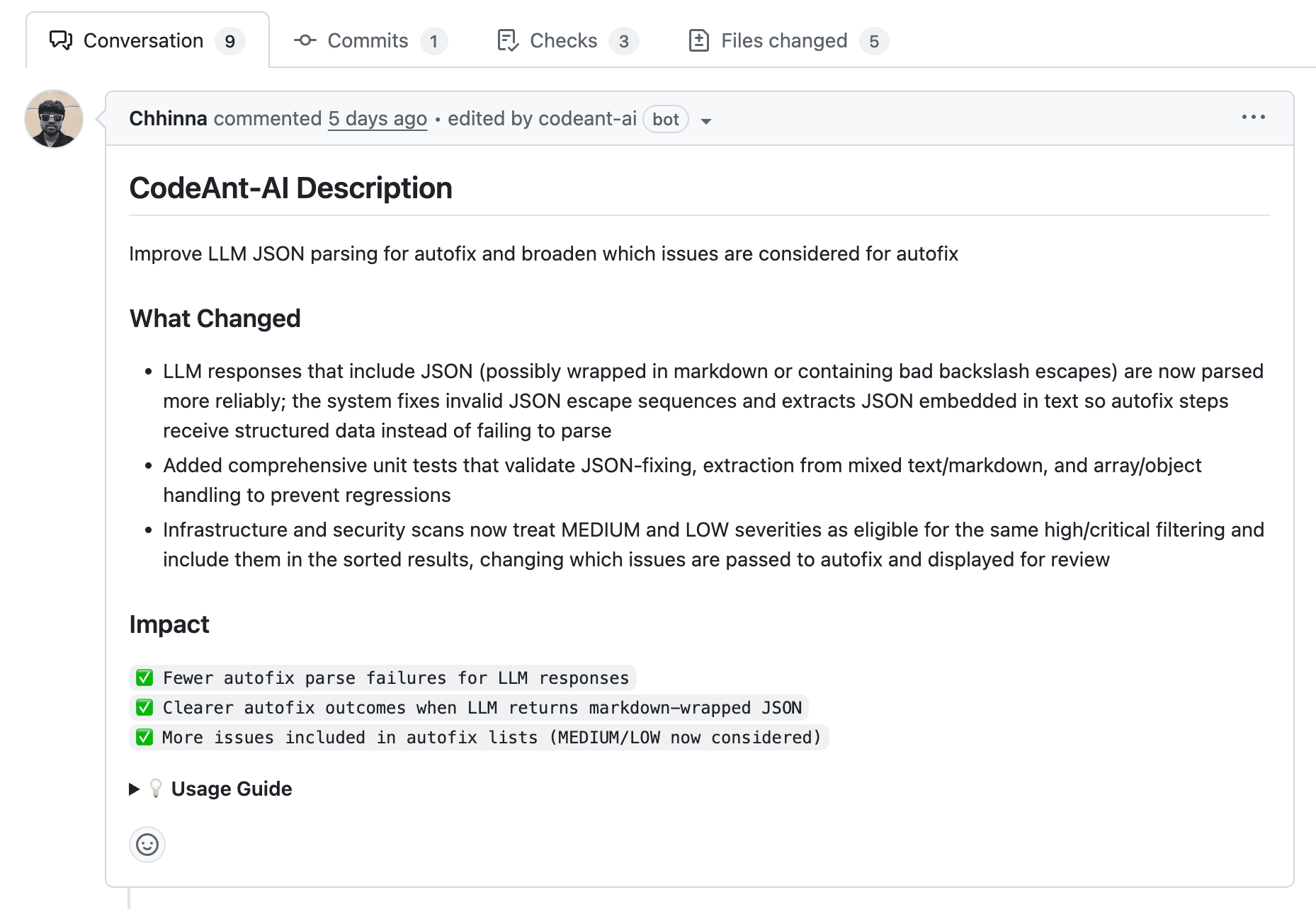

AI generates PR summaries explaining what changed and why. Reviewers understand context faster without reading every line. CodeAnt AI's PR summarization capability helps reviewers grasp the intent before diving into details.

Suggesting Fixes for Reviewers to Approve or Override

AI doesn't just flag problems. It suggests solutions. Reviewers evaluate suggestions rather than writing fixes themselves, which speeds up the feedback loop significantly.

Preserving Knowledge Transfer and Mentorship Opportunities

Peer review isn't just about catching bugs. It's about teaching. AI handles mechanical checks while humans handle coaching and knowledge sharing. Senior developers can focus their review comments on design principles and growth opportunities instead of formatting corrections.

Best Practices for Combining AI Review Automation with Human Review

How do you implement this balance on your team? Here's a practical checklist.

1. Define Clear Boundaries Between AI and Human Responsibilities

Document which checks AI handles (style, security, complexity) versus which require human approval (architecture, business logic). Create a shared team agreement so everyone knows what to expect.

2. Use AI as a First-Pass Filter Before Peer Review

Configure AI checks to run before human reviewers are assigned to the PR, and assign reviewers only after those checks pass. This reduces reviewer fatigue and ensures humans focus on substantive issues.
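One way to picture this gate is as a small function that decides whether a PR is ready for a human. The sketch below is illustrative only: `CheckResult`, `ready_for_human_review`, and the check names are hypothetical and not part of any specific CI system or of CodeAnt AI.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str      # e.g. "style", "security", "complexity" (hypothetical names)
    passed: bool


def ready_for_human_review(
    results: list[CheckResult], required: set[str]
) -> tuple[bool, list[str]]:
    """Return (ready, blocking checks). A required check that is missing
    from `results` counts as blocking, the same as a failed one."""
    by_name = {r.name: r.passed for r in results}
    blocking = sorted(name for name in required if not by_name.get(name, False))
    return (not blocking, blocking)


results = [CheckResult("style", True), CheckResult("security", False)]
print(ready_for_human_review(results, {"style", "security", "complexity"}))
# (False, ['complexity', 'security'])
```

Treating a missing check as a failure is a design choice: it keeps a misconfigured pipeline from quietly sending unchecked code to reviewers.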

3. Establish Override Protocols for AI Suggestions

Not every AI suggestion is correct. Define when and how developers can override AI recommendations with justification.

4. Keep Pull Requests Small for AI and Human Efficiency

Smaller PRs improve both AI accuracy and human review quality. Set team norms for PR size limits. A common target is 200-400 changed lines of code per PR.
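A size norm is easy to check mechanically. The sketch below sums the output of `git diff --numstat`, which prints one tab-separated row per file (lines added, lines deleted, path). Counting added plus deleted lines and using 200 and 400 as soft and hard limits are assumptions made for this example; the verdict labels are hypothetical too.

```python
def changed_lines(numstat: str) -> int:
    """Sum added and deleted lines from `git diff --numstat` output."""
    total = 0
    for row in numstat.strip().splitlines():
        added, deleted, _path = row.split("\t", 2)
        if added == "-":  # binary files are reported as "-<TAB>-<TAB>path"
            continue
        total += int(added) + int(deleted)
    return total


def size_verdict(total: int, soft_limit: int = 200, hard_limit: int = 400) -> str:
    """Classify a PR by size. Labels and default limits are illustrative."""
    if total <= soft_limit:
        return "ok"
    if total <= hard_limit:
        return "large"
    return "split"


numstat = "120\t30\tsrc/app.py\n-\t-\tassets/logo.png\n200\t75\tsrc/db.py\n"
total = changed_lines(numstat)
print(total, size_verdict(total))  # 425 split
```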

5. Maintain Review Rotations for Knowledge Distribution

Even with AI assistance, rotate human reviewers to spread codebase knowledge across the team. This prevents knowledge silos and builds team resilience.

Key Metrics to Track AI-Assisted Code Review Effectiveness

Engineering leaders want to measure impact. Here are the metrics that reveal whether AI is actually improving your review process:

Review cycle time: Time from PR open to merge. Shorter cycles suggest that AI is reducing friction.

Defect detection rate by source: The share of issues caught by AI versus by human reviewers. This helps calibrate the balance between the two.

Reviewer workload: Time spent per PR. AI reduces time on routine checks, freeing reviewers for higher-value work.

Code quality trends: Maintainability scores, complexity, and duplication over time.

Developer satisfaction: Survey results on the review experience. Effective AI integration improves satisfaction by reducing friction.

Platforms like CodeAnt AI track these metrics automatically, giving engineering leaders visibility into review health without manual data collection.
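If you want to compute the first two metrics by hand before adopting a platform, a rough sketch might look like the following. The input shapes (pairs of opened and merged timestamps, and a list of source labels such as "ai" or "human") are assumptions for this example, not an export format from any tool.

```python
from collections import Counter
from datetime import datetime
from statistics import median


def median_cycle_hours(prs: list[tuple[datetime, datetime]]) -> float:
    """Median hours from PR open to merge. Expects at least one PR."""
    hours = [(merged - opened).total_seconds() / 3600 for opened, merged in prs]
    return median(hours)


def detection_share(sources: list[str]) -> dict[str, float]:
    """Fraction of logged issues attributed to each source label."""
    counts = Counter(sources)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {source: count / total for source, count in counts.items()}


prs = [
    (datetime(2025, 1, 1, 9), datetime(2025, 1, 1, 15)),   # 6 hours
    (datetime(2025, 1, 2, 9), datetime(2025, 1, 3, 9)),    # 24 hours
    (datetime(2025, 1, 4, 10), datetime(2025, 1, 4, 12)),  # 2 hours
]
print(median_cycle_hours(prs))                          # 6.0
print(detection_share(["ai", "ai", "ai", "human"]))     # {'ai': 0.75, 'human': 0.25}
```

The median is used instead of the mean so that one long-running PR does not dominate the number.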

Moving Toward Smarter Code Reviews with AI and Human Collaboration

AI doesn't replace self-review or peer review. It rebalances both. The best teams use AI to handle mechanical checks while humans focus on judgment, mentorship, and design. Self-review becomes more thorough when AI provides instant feedback. Peer review becomes more valuable when reviewers focus on architecture and business logic instead of style nitpicks.
