
Dec 19, 2025

10 Code Review Best Practices to Formalize Your Team Culture in 2026

Sonali Sood

Founding GTM, CodeAnt AI

Code review culture isn't something you bolt on later; it's the foundation that determines whether your team ships clean code or drowns in tech debt. Without shared norms and clear expectations, reviews become bottlenecks, feedback turns inconsistent, and knowledge stays locked in a few engineers' heads.

This guide covers 10 practices that help engineering teams formalize their code review process, from defining guidelines and assigning ownership to using AI automation and tracking metrics that reveal what's actually working.

Why Formalizing Code Review Culture Matters for Engineering Teams

For engineering teams formalizing code reviews in 2026, best practices center on AI integration, actionable feedback, small PRs, defined processes, and a collaborative culture. The most effective teams use automation for style and security checks and reserve human review for logic, architecture, and mentorship. They track metrics and review SLAs to keep speed and quality in balance.

Without a formalized review culture, teams hit predictable walls.

Here's what typically breaks down:

Bottlenecks: PRs wait in limbo without clear ownership or turnaround expectations

Inconsistent feedback: One reviewer catches security issues while another rubber-stamps everything

Knowledge silos: Only certain engineers understand critical systems

Technical debt accumulation: Issues slip through without shared quality gates

Formalizing your review culture means documenting expectations, assigning ownership, and building rituals that scale with your team.

The Code Review Standard Every Team Should Follow

The guiding principle is simple: every change that improves the overall health of the codebase gets approved, even if it's not perfect. Reviewers aren't gatekeepers hunting for flaws. They're collaborators helping code get better.

If a PR moves the codebase forward and doesn't introduce regressions, it's ready to merge. Perfection isn't the bar; progress is.

That said, not all feedback carries equal weight. Blocking issues stop the merge—security vulnerabilities, broken functionality, architectural violations. Minor suggestions like style preferences or alternative approaches are conversations, not blockers. Distinguishing between the two keeps reviews moving.

10 Code Review Best Practices to Build Team Culture

Each practice below addresses a specific gap that slows teams down or creates friction. Together, they help teams move from ad-hoc reviews to a consistent, scalable process.

1. Define clear review guidelines and expectations

Document what "approval-ready" looks like for your team. Include scope limits, testing requirements, and documentation standards. Store guidelines in a CONTRIBUTING.md file or team wiki where everyone can reference them.

When expectations are explicit, reviewers spend less time debating subjective preferences. Authors know exactly what to deliver before requesting review.
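To make "approval-ready" checkable rather than aspirational, some teams add a small CI step that verifies the PR description follows the team template. The sketch below assumes a hypothetical template with `Summary`, `Testing`, and `Risk` headings; the function name and section list are illustrative, not part of any particular tool.

```python
import re

# Hypothetical headings a team might require in every PR description.
REQUIRED_SECTIONS = ("Summary", "Testing", "Risk")


def missing_sections(pr_body: str, required: tuple[str, ...] = REQUIRED_SECTIONS) -> list[str]:
    """Return the required headings that are absent from the PR description."""
    # Collect markdown heading lines such as "## Testing" (levels 1 to 6).
    found = {
        match.group(1).strip().lower()
        for match in re.finditer(r"^#{1,6}[ \t]+(.+)$", pr_body, flags=re.MULTILINE)
    }
    return [name for name in required if name.lower() not in found]


# A CI step could fail the build whenever this list is non-empty.
print(missing_sections("## Summary\nAdds retry logic.\n\n## Testing\nUnit tests added.\n"))  # ['Risk']
```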

2. Assign code owners for accountability

CODEOWNERS files automatically assign reviewers based on which files change. This removes ambiguity about who reviews what and ensures domain experts see relevant PRs.

Ownership tends to speed up turnaround. When someone's name is attached to an area of the codebase, they are more likely to respond quickly and review thoroughly.
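For illustration, here is a simplified sketch of how owner lookup works: rules are read top to bottom and, as in GitHub's CODEOWNERS, the last matching pattern wins. The pattern matching is deliberately simplified (root-anchored directory prefixes, basename globs, and `fnmatchcase` for everything else) and does not reproduce full gitignore semantics. The team and user handles are hypothetical.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

Rule = tuple[str, list[str]]


def parse_codeowners(text: str) -> list[Rule]:
    """Parse 'pattern owner1 owner2' lines, skipping blanks and comments."""
    rules: list[Rule] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        pattern, *owners = line.split()
        rules.append((pattern, owners))
    return rules


def pattern_matches(pattern: str, path: str) -> bool:
    """Simplified matching; real CODEOWNERS follows most gitignore rules."""
    if pattern.endswith("/"):
        # Directory rule: everything under that directory (anchored at repo root here).
        return path.startswith(pattern.lstrip("/"))
    if "/" not in pattern:
        # Bare globs like "*.sql" match the file name at any depth.
        return fnmatchcase(PurePosixPath(path).name, pattern)
    return fnmatchcase(path, pattern.lstrip("/"))


def owners_for(path: str, rules: list[Rule]) -> list[str]:
    """Return the owners of the last matching rule."""
    owners: list[str] = []
    for pattern, rule_owners in rules:
        if pattern_matches(pattern, path):
            owners = rule_owners  # later matches override earlier ones
    return owners


CODEOWNERS = """
# Hypothetical teams and users
*            @acme/platform
*.sql        @acme/data
/payments/   @acme/payments @alice
"""

rules = parse_codeowners(CODEOWNERS)
print(owners_for("payments/schema.sql", rules))  # ['@acme/payments', '@alice']
print(owners_for("db/migrate.sql", rules))       # ['@acme/data']
```

Ordering matters: broad defaults go first and more specific rules further down, so specialists override the catch-all owner.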

3. Keep pull requests small and focused

Smaller PRs get reviewed faster and more accurately. Aim for single-purpose changes—one feature, one bug fix, one refactor. Large PRs overwhelm reviewers and hide issues in the noise.
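One way to keep this norm visible is a CI check on diff size. This sketch assumes the pipeline captures the output of `git diff --numstat` against the target branch (tab-separated added count, deleted count, path); the 400-line limit is a hypothetical threshold, not a recommendation from this guide.

```python
MAX_CHANGED_LINES = 400  # hypothetical team threshold


def changed_lines(numstat: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat.splitlines():
        parts = line.split("\t", 2)  # added, deleted, path
        if len(parts) != 3:
            continue
        added, deleted, _path = parts
        if added == "-" or deleted == "-":
            continue  # binary files report "-" instead of line counts
        total += int(added) + int(deleted)
    return total


def is_too_large(numstat: str, limit: int = MAX_CHANGED_LINES) -> bool:
    return changed_lines(numstat) > limit


# Example output of: git diff --numstat origin/main...HEAD
sample = "120\t30\tsrc/billing.py\n-\t-\tdocs/diagram.png\n45\t0\ttests/test_billing.py\n"
print(changed_lines(sample), is_too_large(sample))  # 195 False
```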

If a feature requires significant changes, break it into stacked PRs or use feature flags to merge incrementally.
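A minimal feature-flag sketch, assuming flags are read from environment variables named `FEATURE_<NAME>` (a hypothetical convention; many teams use a dedicated flag service instead). Unfinished code can merge in small PRs while the default path stays unchanged until the flag is turned on.

```python
import os
from collections.abc import Mapping


def flag_enabled(name: str, env: Mapping[str, str] = os.environ) -> bool:
    """True if FEATURE_<NAME> holds a truthy value (hypothetical naming convention)."""
    value = env.get(f"FEATURE_{name.upper()}", "")
    return value.strip().lower() in {"1", "true", "yes", "on"}


def legacy_total(prices: list[float]) -> float:
    return round(sum(prices), 2)


def new_total(prices: list[float]) -> float:
    # Work in progress, merged across several small PRs; e.g. a new 10% promo rule.
    return round(sum(prices) * 0.9, 2)


def checkout_total(prices: list[float], env: Mapping[str, str] = os.environ) -> float:
    # The flag defaults to off, so merged-but-unfinished code stays dormant.
    if flag_enabled("new_checkout", env):
        return new_total(prices)
    return legacy_total(prices)


print(checkout_total([10.0, 5.0], env={}))                                  # 15.0
print(checkout_total([10.0, 5.0], env={"FEATURE_NEW_CHECKOUT": "true"}))  # 13.5
```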

4. Set review turnaround time targets

Agree on expected response windows as a team. A 24-hour SLA for first review is common. Predictable turnaround reduces context-switching and keeps developers unblocked.

Track this metric and discuss it in retros. If turnaround times slip, it's usually a sign of unclear ownership or overloaded reviewers.
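Tracking the SLA only needs two timestamps per PR: when it was opened and when the first review arrived. The sketch below assumes a 24-hour target measured in wall-clock time (no business-hours adjustment) and counts PRs still waiting past the window as breaches. Where the timestamps come from (your Git host's API, an export) is left open.

```python
from datetime import datetime, timedelta
from typing import Optional

FIRST_REVIEW_SLA = timedelta(hours=24)  # team-agreed target (assumed)


def first_review_delay(opened_at: datetime, review_times: list[datetime]) -> Optional[timedelta]:
    """Time from PR opened to the earliest review, or None if nobody has reviewed yet."""
    if not review_times:
        return None
    return min(review_times) - opened_at


def breaches_sla(
    opened_at: datetime,
    review_times: list[datetime],
    now: datetime,
    sla: timedelta = FIRST_REVIEW_SLA,
) -> bool:
    delay = first_review_delay(opened_at, review_times)
    if delay is None:
        # Still waiting: it becomes a breach once the window has elapsed.
        return now - opened_at > sla
    return delay > sla


opened = datetime(2025, 12, 1, 9, 0)
print(breaches_sla(opened, [datetime(2025, 12, 2, 10, 0)], now=datetime(2025, 12, 3)))  # True (25 hours)
```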

5. Automate style and formatting checks

Linters and formatters running in CI eliminate subjective style debates from human reviews. Tools like Prettier, ESLint, or language-specific formatters enforce consistency automatically.

When style is automated, reviewers focus on what matters: logic, architecture, and correctness.
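In CI, this usually amounts to running each tool in check mode and failing the build on a nonzero exit code. A minimal sketch: the commands in `CHECKS` are placeholders for a JavaScript project using Prettier and ESLint, so substitute your own toolchain.

```python
import subprocess
import sys
from collections.abc import Mapping, Sequence

# Placeholder commands for a JavaScript project; replace with your own tools.
CHECKS: dict[str, list[str]] = {
    "prettier": ["npx", "prettier", "--check", "."],
    "eslint": ["npx", "eslint", "."],
}


def run_checks(checks: Mapping[str, Sequence[str]]) -> list[str]:
    """Run each check and return the names of those that failed."""
    failed: list[str] = []
    for name, cmd in checks.items():
        try:
            result = subprocess.run(list(cmd), capture_output=True, text=True)
        except FileNotFoundError:
            # The tool isn't installed on this runner; treat it as a failure.
            print(f"[{name}] tool not found: {cmd[0]}", file=sys.stderr)
            failed.append(name)
            continue
        if result.returncode != 0:
            print(f"[{name}] failed:\n{result.stdout}{result.stderr}", file=sys.stderr)
            failed.append(name)
    return failed


# In a CI entry point: sys.exit(1 if run_checks(CHECKS) else 0)
```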

6. Use AI to catch bugs and security issues early

AI-powered code review tools flag vulnerabilities, anti-patterns, and complexity before human reviewers see the PR. This pre-screening catches issues that humans often miss, especially security gaps.

Static application security testing (SAST) scans source code for known vulnerability patterns. CodeAnt AI integrates SAST directly into pull requests, surfacing security issues alongside quality feedback in a single view.
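To show what "known vulnerability patterns" means in practice, here is a toy check built on Python's `ast` module. It flags calls to `eval`/`exec` and any call that passes `shell=True`. Real SAST tools go much further (data-flow and taint analysis, large rule sets), so treat this as an illustration of the idea, not a substitute for one, and not a description of how CodeAnt AI works internally.

```python
import ast

RISKY_FUNCTIONS = {"eval", "exec"}


def find_risky_calls(source: str) -> list[tuple[int, str]]:
    """Return (line, finding) pairs for a few well-known risky call patterns."""
    findings: list[tuple[int, str]] = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        # Direct calls such as eval(user_input) or exec(code).
        if isinstance(node.func, ast.Name) and node.func.id in RISKY_FUNCTIONS:
            findings.append((node.lineno, node.func.id))
        # Any call passing shell=True, e.g. subprocess.run(cmd, shell=True).
        for kw in node.keywords:
            if kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True:
                findings.append((node.lineno, "shell=True"))
    return sorted(findings)  # ast.walk is breadth-first, so sort by line


snippet = "import subprocess\nx = eval(input())\nsubprocess.run('ls', shell=True)\n"
print(find_risky_calls(snippet))  # [(2, 'eval'), (3, 'shell=True')]
```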

7. Write actionable and respectful comments

Vague feedback wastes everyone's time. Compare:

Unhelpful: "This is confusing."

Helpful: "This loop may cause an N+1 query—consider batching the lookup."

Tone matters too. Frame feedback as questions when you're uncertain. Assume the author had good reasons for their approach.
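The helpful comment above points at a concrete, fixable problem. This sketch reproduces it with an in-memory SQLite database (the schema and data are made up): the first function issues one query per post, while the batched version fetches every needed author in a single `IN (...)` query. A small wrapper counts queries so the difference is measurable.

```python
import sqlite3


class CountingConnection:
    """Wraps a sqlite3 connection and counts executed queries."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.queries = 0

    def execute(self, sql: str, params=()):
        self.queries += 1
        return self.conn.execute(sql, params)


def make_db() -> CountingConnection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE posts (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT);
        INSERT INTO authors VALUES (1, 'Ada'), (2, 'Linus');
        INSERT INTO posts VALUES (1, 1, 'Intro'), (2, 2, 'Kernels'), (3, 1, 'Engines');
        """
    )
    return CountingConnection(conn)


def titles_n_plus_one(db: CountingConnection) -> list[tuple[str, str]]:
    posts = db.execute("SELECT title, author_id FROM posts ORDER BY id").fetchall()
    result = []
    for title, author_id in posts:
        # One extra query per post: N posts means N + 1 queries in total.
        (name,) = db.execute("SELECT name FROM authors WHERE id = ?", (author_id,)).fetchone()
        result.append((title, name))
    return result


def titles_batched(db: CountingConnection) -> list[tuple[str, str]]:
    posts = db.execute("SELECT title, author_id FROM posts ORDER BY id").fetchall()
    if not posts:
        return []
    ids = sorted({author_id for _, author_id in posts})
    placeholders = ", ".join("?" for _ in ids)
    # One lookup for all authors, however many posts there are.
    rows = db.execute(f"SELECT id, name FROM authors WHERE id IN ({placeholders})", ids).fetchall()
    names = dict(rows)
    return [(title, names[author_id]) for title, author_id in posts]


slow, fast = make_db(), make_db()
assert titles_n_plus_one(slow) == titles_batched(fast)
print(slow.queries, fast.queries)  # 4 2
```

In many codebases a `JOIN` is the simplest fix; batching is shown here because it is what the example comment suggests.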

8. Require tests with every code change

Tests aren't optional—they're a gating requirement. Define coverage expectations for unit tests and integration tests. Reviewing untested code is guesswork. Reviewing tested code is verification.
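Coverage tools are the usual gate, but a cheap complementary check is to flag PRs that change source files without touching any tests. The path conventions below (`tests/` directories, `test_*.py` and `*_test.py` files) are assumptions for a Python repo, and a positive result is a prompt for the reviewer, not proof of missing coverage.

```python
from pathlib import PurePosixPath


def is_test_file(path: str) -> bool:
    """Hypothetical convention: tests live under tests/ or are named test_*.py / *_test.py."""
    p = PurePosixPath(path)
    return "tests" in p.parts or p.name.startswith("test_") or p.name.endswith("_test.py")


def is_source_file(path: str) -> bool:
    return path.endswith(".py") and not is_test_file(path)


def missing_tests(changed_paths: list[str]) -> bool:
    """True when Python source changed but no test file changed in the same PR."""
    changed_source = any(is_source_file(p) for p in changed_paths)
    changed_tests = any(is_test_file(p) for p in changed_paths)
    return changed_source and not changed_tests


print(missing_tests(["src/billing.py", "README.md"]))  # True
```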

9. Rotate reviewers to share knowledge across the team

Spreading review responsibility prevents single points of failure. It also accelerates onboarding—junior engineers learn faster when they review code alongside senior teammates.

Pair new reviewers with experienced ones. Rotate assignments so everyone builds familiarity with different parts of the codebase.
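A simple round-robin keeps the load even. In this sketch (the reviewer names are hypothetical), the author of a PR is skipped but keeps their place in the queue, so they are first in line for the next PR they did not write. Pairing a newer reviewer with an experienced one could be layered on top, for example by assigning two consecutive picks.

```python
class ReviewerRotation:
    """Round-robin reviewer assignment that never assigns authors to their own PRs."""

    def __init__(self, reviewers: list[str]):
        if len(reviewers) < 2:
            raise ValueError("need at least two reviewers to rotate")
        self._queue = list(reviewers)

    def next_reviewer(self, author: str) -> str:
        for i, candidate in enumerate(self._queue):
            if candidate != author:
                # Move the chosen reviewer to the back; a skipped author keeps their place.
                self._queue.append(self._queue.pop(i))
                return candidate
        raise ValueError("no eligible reviewer")  # only reachable with duplicate entries


rotation = ReviewerRotation(["ana", "ben", "chen"])
print([rotation.next_reviewer(a) for a in ["ana", "dev", "dev", "ben"]])  # ['ben', 'ana', 'chen', 'ana']
```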

10. Track and review your code review metrics

You can't improve what you don't measure. Track turnaround time, comment quality, and defect escape rate. Use this data to identify bottlenecks and adjust your process.

Review your metrics in team retros. Celebrate improvements and address regressions before they become habits.
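Defect escape rate can be computed from labels most issue trackers already have. This sketch assumes each defect records where it was first found (the `review`/`qa`/`production` labels are hypothetical) and whether a post-incident review judged it catchable in code review. It expresses the rate as catchable production defects over all recorded defects, which is one reasonable formulation rather than a standard one.

```python
from dataclasses import dataclass


@dataclass
class Defect:
    key: str
    found_in: str  # hypothetical labels: "review", "qa", or "production"
    catchable_in_review: bool = True  # assumed to be judged after the fact


def defect_escape_rate(defects: list[Defect]) -> float:
    """Share of recorded defects that reached production although review could have caught them."""
    if not defects:
        return 0.0
    escaped = sum(1 for d in defects if d.found_in == "production" and d.catchable_in_review)
    return escaped / len(defects)


sprint = [
    Defect("BUG-1", "review"),
    Defect("BUG-2", "review"),
    Defect("BUG-3", "qa"),
    Defect("BUG-4", "production"),
    Defect("BUG-5", "production", catchable_in_review=False),
]
print(f"{defect_escape_rate(sprint):.0%}")  # 20%
```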

What Reviewers Look For in Every Pull Request

Beyond "does it work," reviewers evaluate several dimensions of code quality. A checklist mindset helps ensure nothing gets missed.

| Review Area | What to Check |
| --- | --- |
| Design & architecture | Fits existing patterns, appropriate abstraction level |
| Functionality | Meets requirements, handles edge cases |
| Complexity | Readable, maintainable, not over-engineered |
| Security | No vulnerabilities, secrets, or compliance gaps |
| Tests | Adequate coverage, meaningful assertions |
| Readability | Clear naming, logical structure |

Design and architecture fit

Does the change align with existing system design? Is the abstraction level appropriate for the problem? Architectural mismatches create long-term maintenance headaches.

Functionality and requirements alignment

Does the code do what the ticket or spec requires? Walk through the happy path and the failure paths. Check that edge cases are handled.

Code complexity and maintainability

Is the code easy to understand for the next engineer? Flag over-engineering or unnecessary cleverness. Simple code is maintainable code.

Security vulnerabilities and compliance

Check for injection risks, hardcoded secrets, and compliance with organizational security policies. AI tools can automate much of this check; CodeAnt AI, for example, scans every PR for vulnerabilities and secrets.
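As a rough illustration of automated secret detection, here is a line-by-line regex scan. It checks for the AWS access key ID format (`AKIA` followed by 16 uppercase letters or digits) and for string literals assigned to names like `password`. Production scanners use far larger rule sets plus entropy checks, so this sketches the approach rather than providing a usable scanner.

```python
import re

# Two illustrative rules; real scanners ship many more, plus entropy checks.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_password": re.compile(r"""(?i)\b(password|passwd|secret)\s*=\s*['"][^'"]+['"]"""),
}


def scan_for_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line number, rule name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


# AKIAIOSFODNN7EXAMPLE is the placeholder key used in AWS documentation.
added_lines = 'db_host = "localhost"\npassword = "hunter2"\naws_key = "AKIAIOSFODNN7EXAMPLE"\n'
print(scan_for_secrets(added_lines))  # [(2, 'hardcoded_password'), (3, 'aws_access_key_id')]
```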

Test coverage and quality

Are tests present, meaningful, and covering critical paths? High coverage numbers mean nothing if the tests don't assert meaningful behavior.
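To make the point concrete: both tests below execute every line of `apply_discount`, so both count toward coverage, but only the second would notice a regression. The function and tests are hypothetical and written in pytest style (plain `assert` inside `test_*` functions).

```python
def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount, rounded to cents."""
    return round(price * (1 - percent / 100), 2)


def test_discount_runs():
    # Executes every line of apply_discount, so coverage counts it, but checks nothing.
    apply_discount(100.0, 10)


def test_discount_value():
    # Pins down the behavior a regression would break.
    assert apply_discount(100.0, 10) == 90.0
    assert apply_discount(19.99, 0) == 19.99
```

If someone changed `/ 100` to `/ 10`, `test_discount_runs` would still pass and the coverage number would not move; `test_discount_value` would fail.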

Naming conventions and readability

Are variables, functions, and classes named clearly? Does the code follow team style guides? Good naming reduces the need for comments.

How to Write Code Review Comments That Improve Code

The communication skill of reviewing matters as much as technical accuracy. How you say something determines whether the author learns or gets defensive.

Lead with respect and curiosity

Frame feedback as questions when uncertain: "What was the reasoning behind this approach?" Assume the author had context you lack.

Be specific and explain the why

Point to the exact line and explain the concern. Vague comments like "this is wrong" don't help anyone.

Use "Nit:" for minor suggestions

Prefix small, non-blocking suggestions with "Nit:" so authors know they're optional. This keeps the conversation focused on what actually blocks the merge.
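Consistent prefixes also make comments machine-readable. This sketch treats `Nit:` as non-blocking and, as an assumption beyond this guide, adds hypothetical `Blocking:` and `Praise:` prefixes so a bot or dashboard could count comment types and tell whether anything still blocks the merge.

```python
from collections import Counter

# "Nit:" comes from this guide; the other two prefixes are hypothetical additions.
PREFIXES = {"nit:": "suggestion", "praise:": "praise", "blocking:": "blocking"}


def classify_comment(body: str) -> str:
    text = body.lstrip().lower()
    for prefix, label in PREFIXES.items():
        if text.startswith(prefix):
            return label
    return "unlabeled"


def summarize(comments: list[str]) -> Counter:
    """Count comments per type, e.g. for a per-PR review-depth metric."""
    return Counter(classify_comment(c) for c in comments)


def merge_blocked(comments: list[str]) -> bool:
    return any(classify_comment(c) == "blocking" for c in comments)


comments = [
    "Nit: rename tmp to retry_count",
    "Blocking: this query is not parameterized",
    "Praise: nice refactor here",
    "Why a list here instead of a set?",
]
print(dict(summarize(comments)), merge_blocked(comments))
```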

Acknowledge what works well

Positive feedback reinforces good patterns and builds trust. A quick "nice refactor here" goes a long way toward making reviews feel collaborative rather than adversarial.

How to Handle Pushback and Disagreement in Reviews

Conflict is normal in code review. What matters is how teams resolve it constructively.

Re-read the author's explanation before responding—they may have context you lack. Use data and examples to support your point rather than relying on opinion. Link to documentation, past incidents, or code examples.

Not every battle is worth fighting. Yield on minor preferences. Escalate to a tech lead only for architectural disagreements that affect the whole system. Above all, keep discussions focused on code, not people.

How AI and Automation Strengthen Code Review Culture

Automation handles repetitive checks so human reviewers focus on design and logic. AI acts as a co-reviewer, not a replacement.

Automate repetitive feedback with AI code review

AI catches common issues, such as unused imports, code smells, and formatting violations, before human review. CodeAnt AI provides line-by-line feedback on every PR, so reviewers spend less manual effort on these routine issues.

Catch security and quality issues before merge

Automated SAST and dependency scanning flag vulnerabilities early. CodeAnt AI integrates security checks into every PR, so issues surface before they reach production.

Reduce reviewer cognitive load

When AI handles the mundane, reviewers can focus on architecture, logic, and mentorship. This makes reviews faster and more valuable.

Enforce standards consistently across teams

AI applies the same rules every time—no variance based on reviewer mood or workload. CodeAnt AI supports organization-specific rule configuration, so your standards are enforced automatically.

Code Review Metrics That Measure Culture Health

Measuring the review process reveals where to improve.

Review turnaround time: Time from PR opened to first meaningful review

Review depth and comment quality: Comments per PR, categorized by type (blocking, suggestion, praise)

Defect escape rate: Bugs found in production that review could have caught

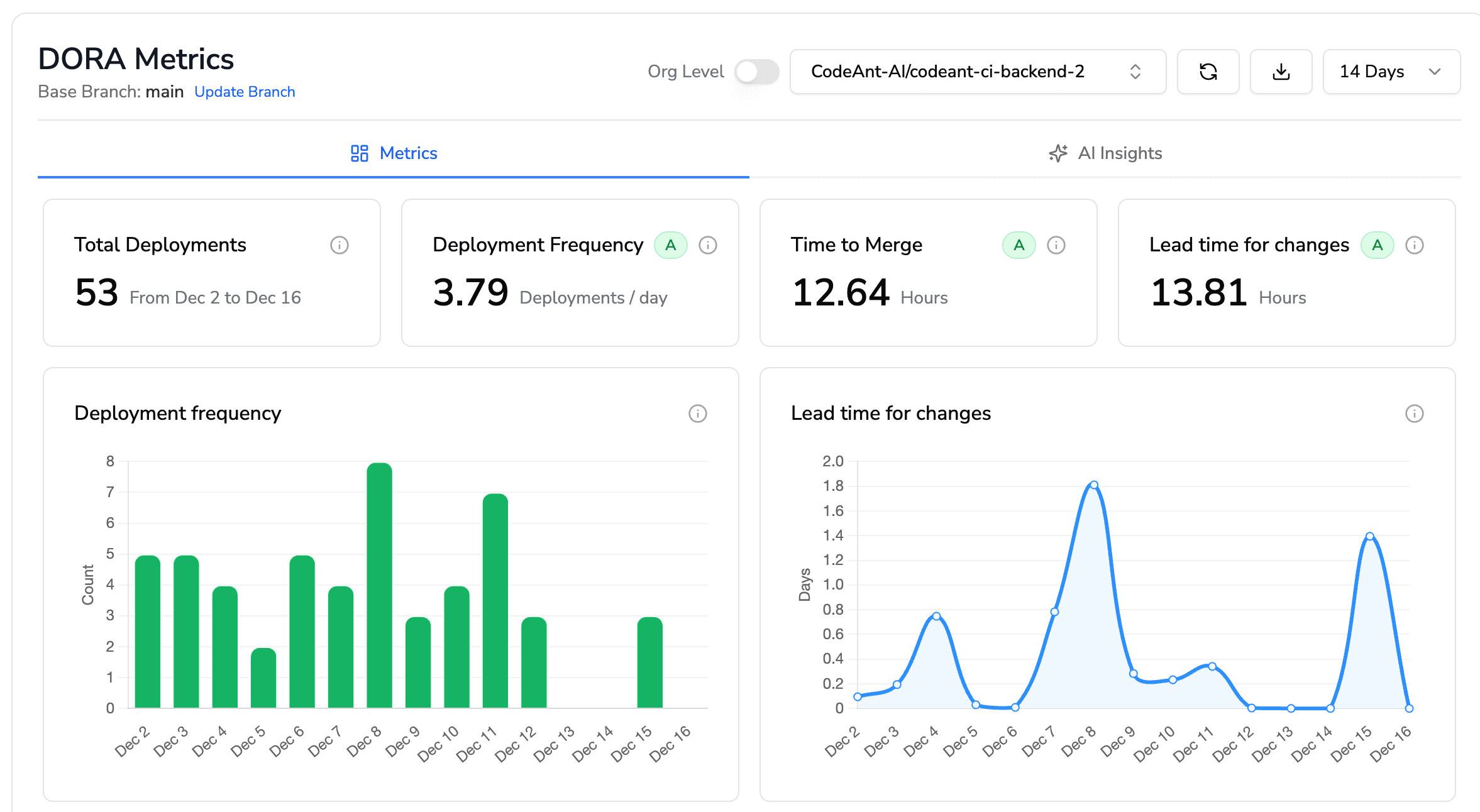

How DORA metrics connect to code review

DORA metrics measure engineering performance: deployment frequency, lead time, change failure rate, and mean time to recovery. Code review directly impacts lead time and change failure rate.

Faster, higher-quality reviews reduce lead time. Thorough reviews catch issues before production, lowering change failure rate. Tracking DORA metrics connects review culture to business outcomes.
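Two of the DORA metrics are straightforward to compute from deployment records. The sketch assumes each record holds the commit time, the deployment time, and whether the deployment caused a failure in production (the `Deployment` type is hypothetical). Lead time for changes is reported as a median, and change failure rate as failed deployments over total deployments.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    committed_at: datetime  # when the change was committed
    deployed_at: datetime   # when it reached production
    caused_failure: bool    # needed a rollback, hotfix, or incident response


def median_lead_time(deploys: list[Deployment]) -> timedelta:
    if not deploys:
        raise ValueError("no deployments")
    seconds = [(d.deployed_at - d.committed_at).total_seconds() for d in deploys]
    return timedelta(seconds=median(seconds))


def change_failure_rate(deploys: list[Deployment]) -> float:
    if not deploys:
        raise ValueError("no deployments")
    return sum(d.caused_failure for d in deploys) / len(deploys)


t = datetime(2025, 12, 1, 9, 0)
history = [
    Deployment(t, t + timedelta(hours=4), False),
    Deployment(t, t + timedelta(hours=10), True),
    Deployment(t, t + timedelta(hours=30), False),
]
print(median_lead_time(history), f"{change_failure_rate(history):.0%}")  # 10:00:00 33%
```

Slow reviews show up directly in lead time, while review misses tend to show up in change failure rate, which is why the two are worth watching together.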

How to Train and Onboard New Reviewers at Scale

Ramping up new team members as effective reviewers requires structure. Without it, junior engineers either rubber-stamp PRs or get overwhelmed.

Shadow reviews: New reviewers observe senior engineers reviewing PRs

Paired reviews: New and experienced reviewers collaborate on the same PR

Graduated ownership: Start with smaller, lower-risk PRs before critical systems

Feedback loops: Senior reviewers provide feedback on the new reviewer's comments

With this structure, new reviewers can typically contribute meaningfully within a few weeks.

Build a Code Review Culture That Scales With Your Team

Formalizing review culture today pays dividends as your team grows. Clear standards, human collaboration, and AI automation work together to keep velocity high without sacrificing quality.

The teams that scale successfully don't rely on heroic individual reviewers. They build systems—documented expectations, automated checks, and metrics that reveal bottlenecks before they become crises.

CodeAnt AI brings together automated code review, security scanning, and quality metrics in one platform. Instead of juggling multiple tools, your team gets a single view of code health across the development lifecycle.

Ready to formalize your review culture? Book your 1:1 with our experts today!