Dec 15, 2025

AI Code Review: Self-Review and Peer Review Combined

Amartya Jha

Founder & CEO, CodeAnt AI

You just spent three hours reviewing your own pull request. You're confident it's clean. Then a teammate spots a security vulnerability in the first five minutes.

Self-review and peer review both have blind spots—but they're different blind spots. AI changes the equation by catching what humans miss at each stage, whether you're reviewing your own code or examining a colleague's changes.

This guide breaks down how AI enhances both approaches, when to rely on each, and how to combine them into a workflow that actually scales.

What Is the Difference Between Self-Review and Peer Review in Code Development

AI enhances code review by automating routine checks, flagging security vulnerabilities, and providing instant feedback. This frees human reviewers to focus on complex logic and architectural decisions that require judgment.

Self-review happens when you check your own code before submitting a pull request. You're looking for obvious mistakes, cleaning up formatting, and making sure the code does what you intended. Peer review comes next, when another developer examines your changes after you've submitted them.

| Aspect | Self-Review | Peer Review |
| --- | --- | --- |
| Who performs it | The original author | Another team member |
| When it happens | Before PR submission | After PR submission |
| Primary purpose | Catch obvious errors, clean up code | Validate logic, share knowledge |
| Common limitation | Blind spots from familiarity | Time delays, reviewer availability |

Both approaches serve different purposes. Self-review catches the low-hanging fruit early, while peer review brings fresh perspective and diverse expertise to your code.

Why Developers Struggle With Code Review Today

Blind spots in self-review

You've been staring at the same code for hours. Your brain fills in gaps and glosses over mistakes because it knows what you meant to write. This familiarity bias makes catching your own security issues, edge cases, and style inconsistencies surprisingly difficult.

Even experienced developers miss problems in their own code. It's not a skill issue; it's how human attention works.

Bottlenecks in peer review

Senior developers often become review bottlenecks. PRs sit waiting for days while experienced engineers juggle their own work with review requests. Meanwhile, context-switching between coding and reviewing slows everyone down.

The math doesn't work either. If your team has two senior engineers and ten developers submitting PRs daily, those two reviewers become a chokepoint fast.
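
To make that arithmetic concrete, here is a back-of-the-envelope sketch. Every number in it is an assumption for illustration: one PR per developer per day, 45 minutes per review, and two hours of review time per senior engineer per day. The function names are hypothetical, and your team's figures will differ.

```python
def review_capacity_per_day(reviewers, minutes_available_each, minutes_per_review):
    """Number of PRs the reviewers can get through in one day."""
    return reviewers * minutes_available_each / minutes_per_review


def backlog_after(days, prs_per_day, capacity_per_day):
    """Unreviewed PRs after `days`, assuming steady arrivals and steady capacity."""
    return max(0.0, (prs_per_day - capacity_per_day) * days)


# Assumed numbers, for illustration only.
capacity = review_capacity_per_day(
    reviewers=2, minutes_available_each=120, minutes_per_review=45
)
backlog = backlog_after(days=5, prs_per_day=10, capacity_per_day=capacity)
print(f"capacity: {capacity:.1f} PRs/day, backlog after 5 days: {backlog:.0f} PRs")
```

Under those assumptions, two reviewers clear about 5 PRs a day against 10 arriving, so roughly 23 PRs are waiting by the end of a five-day week.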

Inconsistent feedback quality across teams

Different reviewers focus on different things. One catches security issues; another only comments on formatting. A third might approve everything quickly just to clear the queue.

Without a standardized baseline, code quality varies wildly depending on who reviews your PR. This inconsistency creates gaps that bugs and vulnerabilities slip through.

How AI Enhances Developer Self-Review

Think of AI as your first-pass reviewer. It catches issues before any human sees your code, so you can fix problems while the context is still fresh in your mind.

Catching syntax and style issues before commit

AI flags formatting problems, naming convention violations, and style inconsistencies instantly. You fix them before opening a PR, so peer reviewers never waste time on trivial feedback.

This isn't about being lazy. It's about respecting your colleagues' time and keeping review conversations focused on what actually matters.

Identifying security vulnerabilities early

Static Application Security Testing (SAST) tools scan your code for common vulnerabilities, exposed secrets, and dependency risks during self-review. SAST analyzes source code without running it, looking for patterns that indicate security problems.
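
To show what "analyzing source code without running it" can look like, here is a deliberately tiny sketch built on Python's standard `ast` module. It is not how CodeAnt AI or any production SAST tool works. The two rules (calls to `eval`/`exec`, and string literals assigned to secret-looking names) and the names `RISKY_CALLS`, `SECRET_HINTS`, and `scan_source` are assumptions chosen for illustration.

```python
import ast

# Illustrative rules only; real SAST tools ship far larger rule sets.
RISKY_CALLS = {"eval", "exec"}
SECRET_HINTS = ("password", "secret", "api_key", "token")


def scan_source(source: str) -> list[tuple[int, str]]:
    """Parse the code (never execute it) and return (line, message) findings."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Rule 1: direct calls to eval() or exec().
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append((node.lineno, f"call to {node.func.id}()"))
        # Rule 2: a string literal assigned to a secret-looking variable name.
        elif (
            isinstance(node, ast.Assign)
            and isinstance(node.value, ast.Constant)
            and isinstance(node.value.value, str)
        ):
            for target in node.targets:
                if isinstance(target, ast.Name) and any(
                    hint in target.id.lower() for hint in SECRET_HINTS
                ):
                    findings.append(
                        (node.lineno, f"hardcoded string assigned to {target.id}")
                    )
    return sorted(findings)


SAMPLE = 'API_KEY = "abc123"\nresult = eval(user_input)\n'
for line, message in scan_source(SAMPLE):
    print(f"line {line}: {message}")
```

The sample never runs, so the undefined `user_input` is harmless: the scanner only inspects the parsed syntax tree.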

CodeAnt AI's security scanning runs automatically on every commit. You find out about issues in minutes, not days later when a peer reviewer happens to notice.

Reducing cognitive load with automated suggestions

AI doesn't just flag problems. It suggests fixes. Instead of researching how to resolve an issue, you review the suggestion and apply it with a click.

This frees mental energy for complex logic rather than routine corrections. You spend your brainpower on the hard problems, not on remembering whether your team uses camelCase or snake_case.

Enforcing coding standards automatically

AI checks your code against organization-specific rules without you memorizing every standard. Common enforcement areas include:

Naming conventions: Variable and function naming rules

Architecture patterns: File structure and module organization

Documentation requirements: Comment and docstring standards
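
As a rough sketch of how two of these areas could be checked mechanically, the snippet below uses Python's standard `ast` and `re` modules to flag function names that aren't snake_case and public functions that lack a docstring. The specific rules and the name `check_standards` are assumptions for illustration; your organization's standards, and the way an AI reviewer encodes them, will differ.

```python
import ast
import re

# Assumed convention: snake_case, with optional leading underscores.
SNAKE_CASE = re.compile(r"^_{0,2}[a-z][a-z0-9_]*$")


def check_standards(source: str) -> list[tuple[int, str]]:
    """Return (line, message) pairs for naming and docstring violations."""
    issues = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Naming convention: function names must be snake_case.
            if not SNAKE_CASE.match(node.name):
                issues.append((node.lineno, f"function '{node.name}' is not snake_case"))
            # Documentation requirement: public functions need a docstring.
            if not node.name.startswith("_") and ast.get_docstring(node) is None:
                issues.append((node.lineno, f"function '{node.name}' has no docstring"))
    return sorted(issues)


SAMPLE = '''
def loadUser(user_id):
    return user_id

def save_user(user):
    """Persist a user."""
    return user
'''
for line, message in check_standards(SAMPLE):
    print(f"line {line}: {message}")
```

Here `loadUser` trips both rules, while `save_user` passes cleanly.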

How AI Improves Peer Code Review

AI and peer review work together. AI handles routine checks so human reviewers focus on decisions that require judgment and experience.

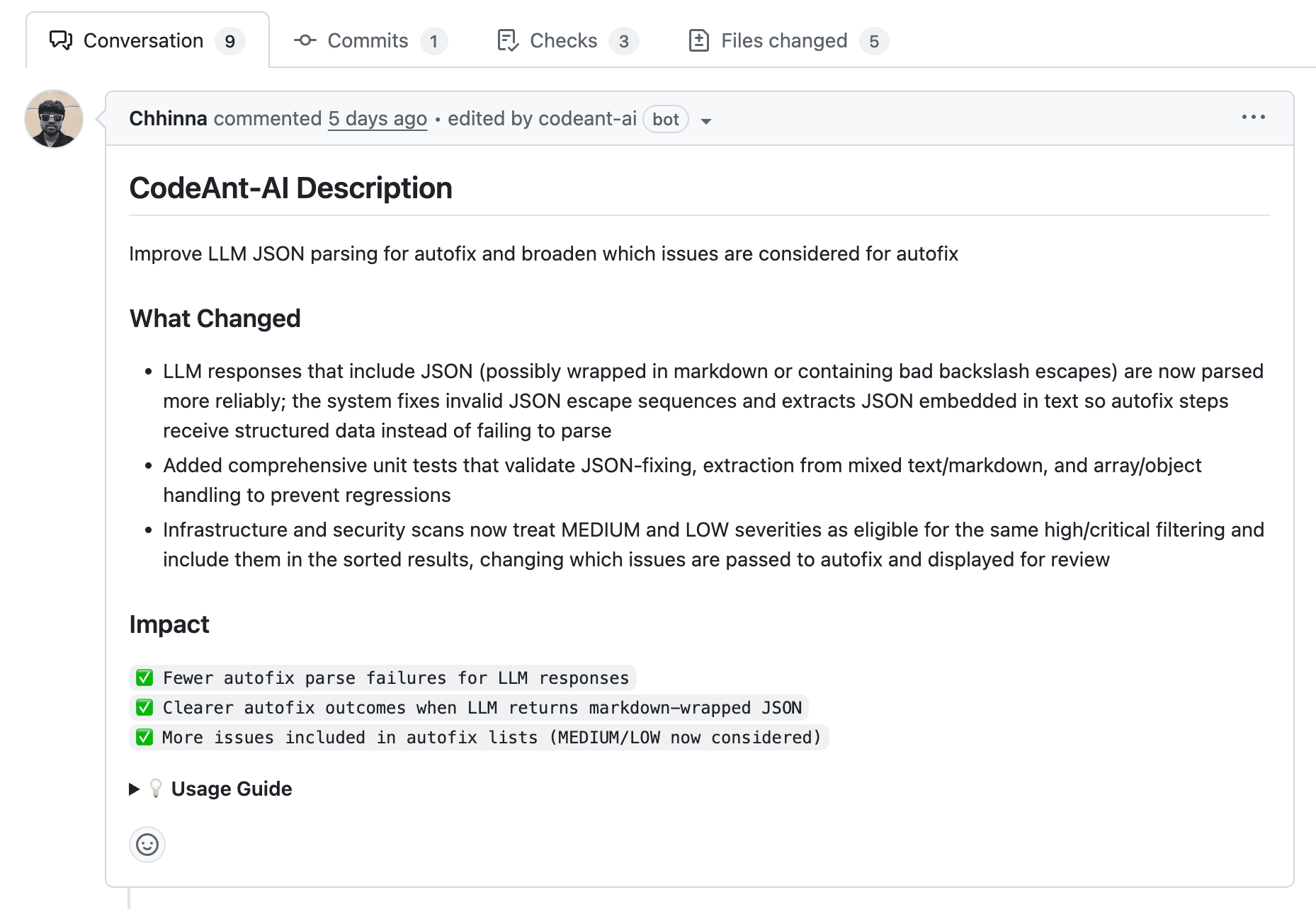

Summarizing pull request changes

AI generates plain-language summaries of what changed in a PR. Reviewers understand context faster without reading every line first.

CodeAnt AI's PR summarization feature gives reviewers a quick overview before diving into details. Instead of spending ten minutes figuring out what a PR does, reviewers start with a clear picture of the changes.

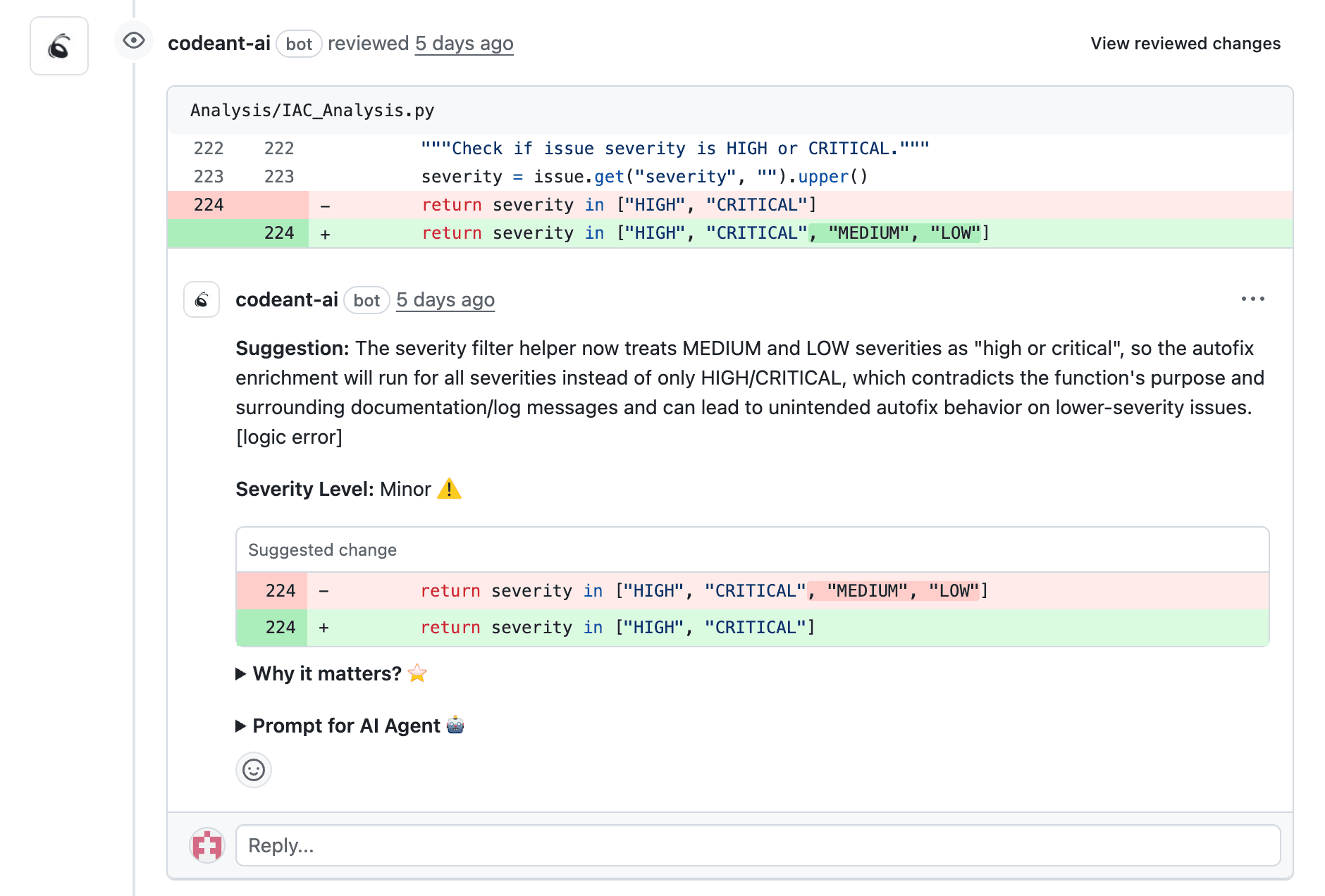

Flagging high-risk code for human attention

AI identifies security-sensitive areas, complex logic, and architectural changes. This directs reviewer attention where human judgment matters most.

Not all code changes carry equal risk. A one-line typo fix doesn't require the same scrutiny as a change to authentication logic. AI helps reviewers prioritize accordingly.
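
One simple way to picture this prioritization is a path-based rule of thumb. The sketch below is an assumption-heavy stand-in for what an AI reviewer does with far richer signals: the glob patterns, the three risk levels, and the function names are all hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical patterns; tune these to your own repository layout.
HIGH_RISK_PATTERNS = ("*auth*", "*payment*", "*migrations/*")
LOW_RISK_PATTERNS = ("*.md", "docs/*")


def classify_change(path: str) -> str:
    """Label a changed file as 'high', 'normal', or 'low' risk."""
    # High-risk patterns are checked first so they win over low-risk ones.
    if any(fnmatchcase(path, pattern) for pattern in HIGH_RISK_PATTERNS):
        return "high"
    if any(fnmatchcase(path, pattern) for pattern in LOW_RISK_PATTERNS):
        return "low"
    return "normal"


def review_priority(changed_paths: list[str]) -> list[str]:
    """Order changed files so reviewers see the riskiest ones first."""
    order = {"high": 0, "normal": 1, "low": 2}
    return sorted(changed_paths, key=lambda p: (order[classify_change(p)], p))


changed = ["README.md", "src/utils/strings.py", "src/auth/login.py"]
for path in review_priority(changed):
    print(f"{classify_change(path):>6}  {path}")
```

With this ordering, the authentication change surfaces ahead of the README edit.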

Accelerating review cycles with pre-screening

When AI catches obvious issues before human review begins, you eliminate back-and-forth cycles on trivial problems. The first round of human feedback focuses on substantive concerns, not missing semicolons.

Providing consistent feedback across reviewers

AI applies the same standards to every PR, regardless of which human reviewer is assigned. This creates a quality baseline that doesn't depend on individual reviewer preferences or how busy someone is that day.

When to Use AI-Assisted Self-Review vs Peer Review

Not every change requires the same review approach. The right choice depends on risk level, complexity, and what kind of feedback you actually need.

Routine bug fixes and minor changes

AI self-review often suffices for small, low-risk changes. Typo fixes, minor refactors, and documentation updates don't typically require a senior engineer's time.

Security-critical code paths

Use both AI and peer review for anything touching authentication, authorization, payment processing, or sensitive data. AI catches known vulnerability patterns; humans assess business-specific security implications that automated tools might miss.

Architectural decisions and complex logic

Peer review remains essential here. AI cannot evaluate design trade-offs, long-term maintainability implications, or whether your approach aligns with team direction.

When you're making decisions that will affect the codebase for years, you want human judgment from someone who understands the broader context.

High-velocity feature releases

AI pre-screening enables faster peer reviews during crunch periods. You maintain quality without slowing release cycles. AI handles the routine checks while humans focus on critical decisions.

What AI Cannot Replace in Human Code Review

Setting realistic expectations matters. AI augments human review; it doesn't eliminate the need for it.

Mentorship and knowledge transfer

Junior developers learn from peer review conversations. They see how senior engineers think about problems, what questions they ask, and how they approach trade-offs.

AI feedback doesn't build team knowledge, relationships, or shared understanding of the codebase. The mentorship aspect of code review has value beyond just catching bugs.

Judgment on trade-offs and technical debt

Humans decide when shortcuts are acceptable. AI flags issues but can't weigh deadline pressure against code quality. Sometimes shipping imperfect code today is the right call; sometimes it isn't. That judgment remains yours.

Addressing Bias and Security Risks in AI Code Review

Engineering leaders often ask about responsible AI adoption. Here's what to consider when adding AI to your review process.

Training data limitations

AI models reflect patterns in their training data. They might miss organization-specific anti-patterns or newer vulnerability types that weren't in the training set.

This means AI review works best as a complement to human review. The AI catches what it knows; humans catch what it doesn't.

Handling false positives and negatives

False positives flag good code as problematic. False negatives miss actual issues. Both happen with any automated tool.

Human verification remains important even with AI assistance. Over time, you can tune AI rules to reduce noise for your specific codebase.
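
If you triage findings by hand for a while, you can put numbers on that noise. The sketch below computes precision (the share of flagged issues that were real) and recall (the share of real issues that were flagged). The counts are made up for illustration, and the function name is hypothetical.

```python
def precision_recall(true_positives: int, false_positives: int, false_negatives: int):
    """Return (precision, recall) for a batch of manually triaged findings."""
    flagged = true_positives + false_positives        # everything the tool reported
    actual = true_positives + false_negatives         # everything that was really wrong
    precision = true_positives / flagged if flagged else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


# Made-up counts: 30 real issues flagged, 10 false alarms, 20 real issues missed.
precision, recall = precision_recall(true_positives=30, false_positives=10, false_negatives=20)
print(f"precision: {precision:.2f}, recall: {recall:.2f}")
```

Low precision means developers are wading through false alarms. Low recall means real issues are slipping past the tool, which is exactly where human review has to pick up the slack.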

Protecting sensitive code and secrets

Data privacy matters, especially for regulated industries. Enterprise-grade tools like CodeAnt AI offer self-hosted deployment options and SOC 2 compliance for teams that can't send code to external servers.

Best Practices for Combining AI and Peer Review

1. Run AI review before opening pull requests

Make AI review a pre-PR step. Developers fix AI-flagged issues before requesting human time. This respects your colleagues' attention and keeps review conversations focused.

2. Configure AI for your coding standards

Customize AI rules to match your organization's conventions. Generic defaults miss team-specific requirements and generate noise that developers learn to ignore.

3. Use AI summaries to prioritize reviewer focus

Train reviewers to read AI summaries first. Focus human attention on flagged high-risk areas rather than scanning every line looking for problems.

4. Track metrics to measure review effectiveness

DORA metrics measure software delivery performance, so they help you track whether changes to your review process pay off over time:

Deployment Frequency: How often you ship to production

Lead Time for Changes: Time from commit to production

Change Failure Rate: Percentage of deployments causing failures

Mean Time to Recovery: How quickly you restore service after a production failure

CodeAnt AI's dashboards surface review cycle time and catch rate improvements automatically.
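
If you want a tool-independent feel for how these four numbers come out of raw deployment records, here is a minimal sketch. The `Deployment` record and its fields are assumptions, not any product's data model, and it uses simple means where many teams prefer medians.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None  # set once a failed deploy is recovered


def mean_hours(deltas: list[timedelta]) -> float:
    """Average a list of durations, expressed in hours."""
    if not deltas:
        return 0.0
    return sum(deltas, timedelta()).total_seconds() / 3600 / len(deltas)


def dora_metrics(deployments: list[Deployment], window_days: int) -> dict[str, float]:
    """Compute the four metrics over a reporting window of `window_days`."""
    total = len(deployments)
    failures = [d for d in deployments if d.failed]
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    recoveries = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deployment_frequency_per_day": total / window_days,
        "mean_lead_time_hours": mean_hours(lead_times),
        "change_failure_rate": len(failures) / total if total else 0.0,
        "mean_time_to_recovery_hours": mean_hours(recoveries),
    }


# Made-up data: four deployments over two days, one of which failed.
start = datetime(2025, 1, 6, 9, 0)
history = [
    Deployment(start, start + timedelta(hours=2)),
    Deployment(start, start + timedelta(hours=4)),
    Deployment(
        start,
        start + timedelta(hours=6),
        failed=True,
        restored_at=start + timedelta(hours=7),
    ),
    Deployment(start + timedelta(days=1), start + timedelta(days=1, hours=4)),
]
for name, value in dora_metrics(history, window_days=2).items():
    print(f"{name}: {value:.2f}")
```

Comparing these values before and after you introduce AI pre-screening gives you a rough read on whether the change is helping.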

5. Maintain human oversight on merge decisions

AI suggests; humans approve. Never automate merge decisions for critical code paths, even when AI gives the green light.
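
Written down as a policy, that rule might look like the sketch below. The function name, its parameters, and the default approval counts are assumptions for illustration. Whether non-critical changes may merge on AI checks alone is a team decision, so it is exposed as a parameter rather than baked in.

```python
def can_merge(
    ai_checks_passed: bool,
    human_approvals: int,
    touches_critical_path: bool,
    approvals_needed_critical: int = 1,  # assumed default
    approvals_needed_other: int = 0,     # assumed default; raise it to always require a human
) -> bool:
    """Decide whether a PR may merge under a simple human-in-the-loop policy."""
    if not ai_checks_passed:
        return False
    needed = approvals_needed_critical if touches_critical_path else approvals_needed_other
    # A green light from AI is never enough on its own for critical paths.
    return human_approvals >= needed


print(can_merge(ai_checks_passed=True, human_approvals=0, touches_critical_path=True))
print(can_merge(ai_checks_passed=True, human_approvals=1, touches_critical_path=True))
```

The first call is blocked because nobody has approved the critical change. The second is allowed once a human signs off.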

Building a Unified AI-Powered Code Review Workflow

The most effective teams don't treat self-review and peer review as separate activities. They build a continuous workflow where AI handles routine checks at every stage, and humans focus on decisions that require judgment.

Rather than juggling multiple point solutions for security, quality, and metrics, a unified platform brings everything together. CodeAnt AI provides a single view of code health across your entire development lifecycle.

What a unified workflow delivers:

Faster cycles: AI handles routine checks instantly

Consistent quality: Same standards applied to every PR

Focused expertise: Human reviewers tackle high-value decisions

Ready to see AI-powered code review in action? Book your 1:1 with our experts today!