AI Code Review

Feb 9, 2026

What Should Teams Avoid When Introducing AI Code Review?

Sonali Sood

Founding GTM, CodeAnt AI

You've deployed GitHub Copilot, velocity is up, and PRs are flying. Then you notice something: architectural patterns are drifting, security issues are slipping through, and your senior engineers spend more time fixing AI-generated code than reviewing human contributions. The productivity win just became a maintenance nightmare.

This isn't hypothetical, it's the reality for teams that treat AI code review like traditional review with automation bolted on. AI-generated code introduces fundamentally different risks: context loss that fragments your architecture, security vulnerabilities that traditional SAST tools miss, and quality patterns that erode faster than you can detect them.

The mistake isn't adopting AI coding assistants, it's assuming your existing review process can handle what they produce. In this guide, we'll walk through the five critical mistakes teams make when introducing AI code review and how to implement governance that prevents these issues.

Why Standard Code Review Breaks Down for AI-Generated Code

The core difference: intent versus probability. When a senior engineer writes code, they make deliberate architectural choices based on system context, team conventions, and maintainability goals. When AI generates code, it produces statistically probable patterns with no understanding of your organization's constraints, security posture, or architectural decisions.

This creates three challenges traditional review processes weren't designed to handle:

Volume overwhelms manual review

AI coding assistants can generate 3-5x more code per day. A developer using Copilot might produce 600+ lines daily versus 200 manually, but your review capacity hasn't scaled. The math is brutal: either you create review bottlenecks that kill velocity, or you rubber-stamp AI code to keep pace.

Context loss creates architectural drift

Human developers carry organizational memory, they know the authentication service exists, understand why the team chose event sourcing, and remember the performance issues with the old caching layer. AI assistants have none of this. They'll recreate components that already exist, introduce patterns that violate architectural boundaries, and bypass abstractions deliberately designed to enforce consistency.

Traditional SAST misses AI-specific risks

Standard static analysis tools catch common vulnerabilities (SQL injection, XSS) but miss AI-specific issues: prompt injection vulnerabilities, outdated security patterns from training data, and compliance violations that AI doesn't understand. An AI might generate a perfectly valid REST endpoint that accidentally exposes PII because it doesn't understand your data classification scheme.

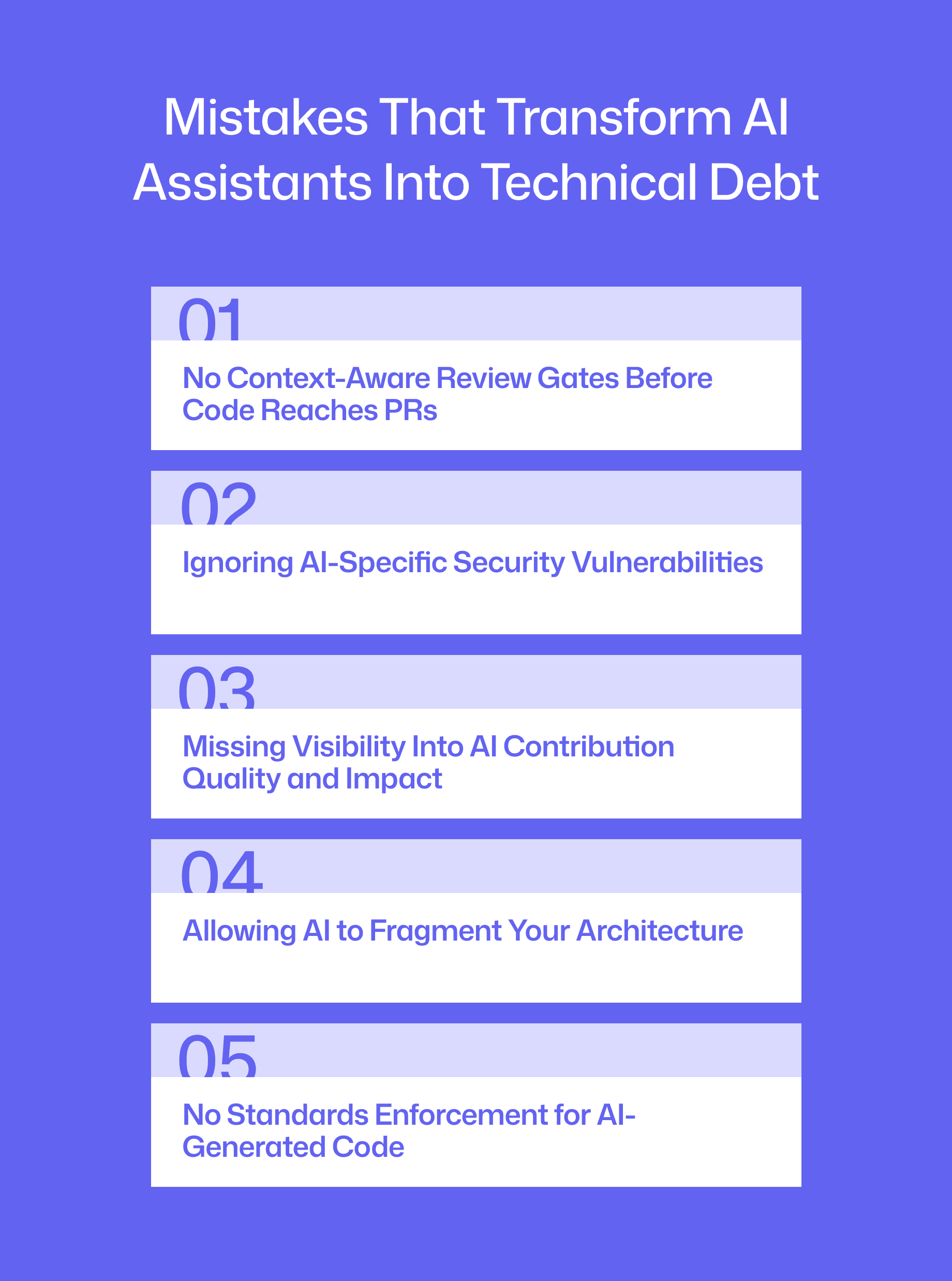

Five Critical Mistakes That Transform AI Assistants Into Technical Debt

Mistake #1: No Context-Aware Review Gates Before Code Reaches PRs

The problem: Most teams review AI-generated code only at the pull request stage, after patterns are embedded in the codebase. By the time a human reviewer sees the code, the AI has already made architectural decisions, duplicated components, and bypassed established abstractions.

Real impact: A fintech team discovered 23 duplicate implementations of rate limiting logic across microservices within 60 days of AI adoption—each slightly different, none using their established RateLimiter utility. Reviewers spent 40-60% more time on AI-heavy PRs explaining why suggested code doesn't fit the architecture.

What works instead: IDE-level prevention with codebase-aware analysis that stops issues before commit. CodeAnt AI's semantic graph maintains organizational memory that AI coding assistants lack—it knows your architectural boundaries, existing components, and team-specific patterns.

Mistake #2: Ignoring AI-Specific Security Vulnerabilities

The problem: Teams rely on traditional SAST tools (SonarQube, Snyk Code) that weren't designed for AI-generated code patterns. These catch common vulnerabilities but miss issues unique to AI-generated code: prompt injection risks, outdated security patterns from training data, and compliance gaps.

Real impact: A healthcare SaaS team discovered AI-generated code was using yaml.load() instead of yaml.safe_load() in 14 services—a critical arbitrary code execution risk their existing SAST missed. One team found 31 instances of deprecated crypto libraries in AI-generated code over three months.

AI models generate code based on statistical likelihood, not security best practices. When suggesting authentication logic, they draw from millions of examples—many containing outdated crypto libraries, missing authorization checks, or overly permissive CORS configurations.

What works instead: AI-specific vulnerability detection with compliance audit trails. CodeAnt AI's security engine is trained on AI-generated code patterns and catches issues traditional tools miss:

CWE-1236 (Formula injection): AI-generated CSV export functions that enable formula injection

CWE-918 (SSRF): AI-generated API clients that don't validate URLs

Outdated cryptography: AI suggesting

crypto.createCipher()(deprecated) instead ofcrypto.createCipheriv()

CodeAnt provides compliance audit trails showing which AI-generated code has been reviewed, approved, and meets security standards, critical for SOC 2 and ISO 27001 audits.

Mistake #3: Missing Visibility Into AI Contribution Quality and Impact

The problem: Teams have no metrics on AI code percentage, quality trends, or developer dependency patterns. They can't answer: How much of our codebase is AI-generated? Is AI code quality improving or degrading? Are junior developers becoming over-dependent?

Real impact: An enterprise team discovered their junior developers had 85% AI contribution rates with significantly higher cyclomatic complexity and lower test coverage—but only after manually auditing six months of commits. Without metrics showing developer dependency on AI, teams miss early warning signs that engineers are accepting suggestions without understanding them.

What works instead: Track AI contribution percentage, quality trends, and architectural compliance as first-class metrics. CodeAnt's unified dashboard shows:

AI vs. human code contribution by developer, team, and repository

Quality scores (complexity, duplication, test coverage) segmented by code origin

DORA metrics (deployment frequency, lead time, change failure rate) for AI-generated vs. human-written code

Trend analysis showing whether AI code quality is improving over time

Example: A Series B startup used CodeAnt's Developer 360 metrics to discover junior developers had 85% AI contribution with 2.8x higher refactor rates than seniors. They implemented targeted training and adjusted review processes, reducing refactor rates by 60% within two sprints.

Check out more use cases here.

Mistake #4: Allowing AI to Fragment Your Architecture

The problem: AI coding assistants are stateless, they don't remember your architectural decisions from last week, let alone last quarter. Without persistent context, AI recreates existing components, introduces inconsistent patterns, and bypasses established abstractions.

Real impact: A SaaS company found 47 different implementations of API response formatting across services after eight months of AI adoption, each requiring separate updates when adding CORS headers. Your team has a well-designed PaymentProcessor abstraction handling retries and idempotency, but AI generates a new feature with different retry logic and no idempotency guarantees.

What works instead: Persistent context engine that prevents AI from forgetting organizational patterns. CodeAnt AI's semantic graph understands your codebase structure, architectural boundaries, and component relationships. When AI tries to recreate existing functionality, CodeAnt:

Detects duplication based on semantic similarity, not just string matching

Suggests the existing component with usage examples

Enforces architectural boundaries (e.g., "Feature modules must use PaymentProcessor from @core/payments")

This is fundamentally different from stateless competitors that analyze each PR in isolation without understanding your broader codebase context.

Mistake #5: No Standards Enforcement for AI-Generated Code

The problem: AI assistants ignore team conventions, coding standards, and architectural boundaries because they don't know they exist. The result: inconsistent code style, pattern violations, and endless debates about whether to accept AI-generated code that "works but doesn't match our standards."

Real impact: Teams spend 30-50% of review time on style and convention corrections rather than logic review. One team found 12 different approaches to async error handling across their Node.js services. AI generates statistically probable code based on internet-scale training data, mixing camelCase and snake_case, ignoring architectural boundaries, and recreating deprecated patterns.

What works instead: Automated enforcement of organization-specific standards with one-click fixes. CodeAnt AI learns your team's patterns from existing code and enforces them automatically:

Naming conventions: "Use

handleprefix for event handlers, noton"Import organization: "Group imports by external/internal/relative"

Error handling patterns: "Use Result<T, E> type for operations that can fail, not exceptions"

Architectural rules: "Feature modules cannot import from other feature modules—use shared abstractions"

When AI-generated code violates a standard, CodeAnt provides one-click fixes that align the code with your conventions, no manual editing required.

Prevention Framework: What to Enforce Before Scaling AI Usage

Before scaling AI coding assistant adoption, implement a governance framework that prevents problems rather than detecting them after they've compounded.

1. Define Standards and Boundaries Upfront

Establish what "good" looks like before AI generates thousands of lines of code:

Architectural boundaries: Which patterns AI can use, which abstractions it must respect, where it can't introduce new dependencies

Security baselines: Approved libraries, authentication patterns, data handling requirements, compliance constraints

Code quality thresholds: Complexity limits, test coverage expectations, documentation standards

Team conventions: Naming patterns, file organization, API design principles

Without these definitions, AI makes statistically probable choices that may conflict with your intentional architectural decisions.

2. Insert Context-Aware Gates Pre-PR

Catch issues at the earliest possible point—before code reaches your repository:

IDE-level analysis that understands your full codebase context, not just changed files

Real-time feedback on architectural violations, duplicated components, or bypassed abstractions

Automated checks against your defined standards before

git commitcompletes

This prevents AI from recreating existing functionality or introducing deprecated security patterns.

3. Risk-Route Review Based on Code Impact

Automate approval for low-risk changes; route high-impact code to senior engineers:

Risk-based routing criteria:

Blast radius: Changes touching authentication, payment processing, or data access get human review

AI contribution percentage: PRs with >70% AI-generated code trigger additional checks

Historical quality signals: Route to senior reviewers if similar past changes caused production issues

Complexity metrics: Cyclomatic complexity above team thresholds requires architectural review

4. Measure AI Impact with Code Health Metrics

Track how AI affects codebase quality, security posture, and team velocity:

Essential metrics:

AI contribution percentage by developer, team, and repository

Quality trends: defect rates, refactor frequency, test coverage for AI vs. human code

Security signal: vulnerability introduction rates, compliance violations, secret exposure

Architectural drift: pattern consistency, duplication rates, dependency sprawl

Developer productivity: PR cycle time, review iterations, merge confidence

5. Continuously Scan for Security and Drift

AI code health requires ongoing monitoring:

Daily scans for new CVEs affecting AI-introduced dependencies

Drift detection: flag when AI code diverges from established patterns over time

Secret scanning with context awareness (not just regex patterns)

Compliance validation against evolving regulatory requirements

Outcome: Teams that implement all five stages see 60-80% reduction in review time while catching 3-4x more architectural and security issues than PR-stage review alone.

Measurement: What Actually Matters

Focus on high-signal metrics that drive decisions:

PR cycle time and review time: Track whether AI review accelerates merges or creates bottlenecks. A 40% reduction in review time should translate to faster feature delivery.

Escaped defects: Measure production incidents traced back to AI-generated code versus human-written code. This shows whether your review gates are calibrated correctly.

Duplication and complexity trends: Monitor whether AI introduces redundant components or inflates cyclomatic complexity over time. Rising duplication signals AI bypassing existing abstractions.

Dependency risk trend: Track vulnerable dependencies and outdated packages introduced by AI. If AI consistently pulls in risky libraries, adjust review policies to flag dependency changes for human scrutiny.

DORA metrics movement: Correlate deployment frequency, lead time, change failure rate, and MTTR with AI adoption phases. If lead time drops but change failure rate spikes, you're trading speed for stability.

Use metrics to tune policies, not punish AI usage. If junior developers show 85% AI contribution with 2.8x higher refactor rates, adjust review routing to catch architectural issues earlier or provide better context to the AI tooling.

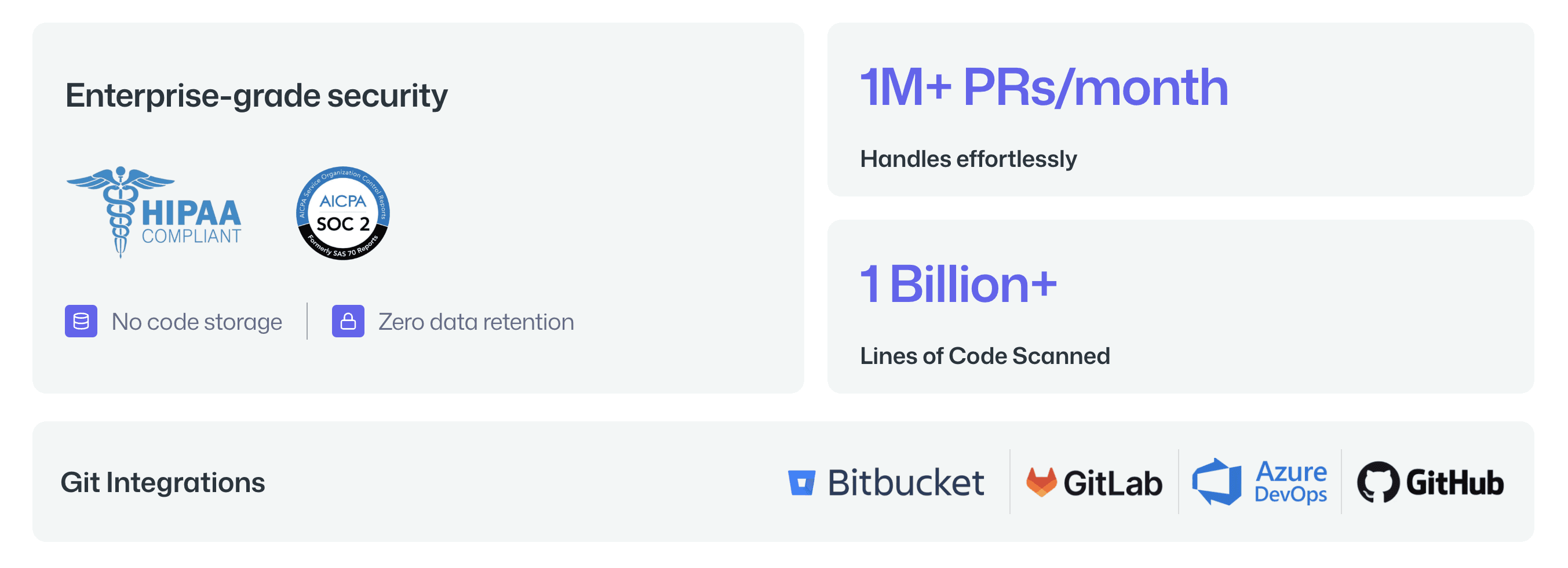

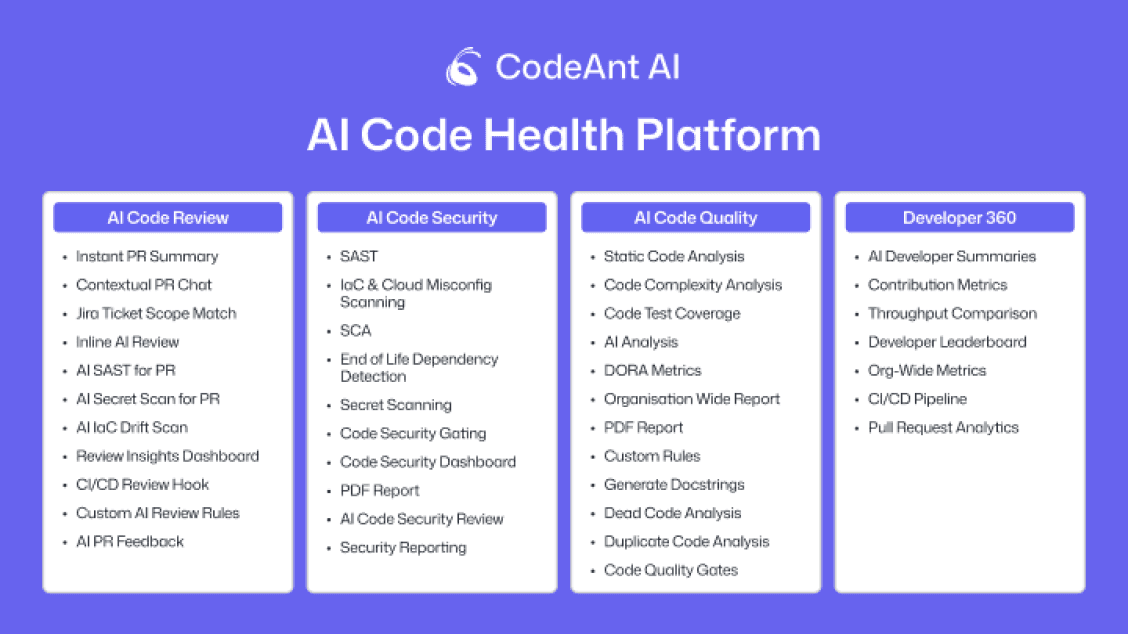

The CodeAnt Approach: Unified AI Code Governance

Unlike tools that retrofit AI onto traditional code review, CodeAnt provides an end-to-end platform designed specifically for teams building with AI coding assistants.

Why teams choose CodeAnt:

Unified platform: Single view of code health across review, security, quality, and productivity, no juggling SonarQube + Snyk + GitHub Insights

Context-aware engine: Maintains organizational memory that AI coding assistants lack, preventing architectural drift and duplication

Proactive prevention: Catches issues in the IDE before commit, not just at PR time when patterns are already embedded

AI-specific security: Detects vulnerability classes unique to AI-generated code that traditional SAST tools miss

Quantified outcomes: Customers report 80% reduction in review time, 3.2x more logic errors caught, and 60% decrease in post-deployment defects

The difference: CodeAnt's semantic graph understands your codebase structure, architectural boundaries, and component relationships. When AI tries to recreate existing functionality, CodeAnt flags it in the IDE before commit, with specific references to the existing implementation developers should use instead.

Your Implementation Roadmap

Week 1-2: Establish baseline and pilot scope

Select 2-3 representative repositories

Capture baseline metrics: PR review time, security findings, architectural violations, AI contribution percentage

Set up CodeAnt in monitoring mode (observe, don't block)

Week 2-3: Define and test policy packs

Configure security policies (OWASP Top 10, secrets detection, dependency vulnerabilities)

Set quality thresholds (complexity, duplication, test coverage)

Establish architectural rules (layer boundaries, approved patterns, component reuse)

Test with real PRs in monitor mode

Week 3-4: Integrate and enable enforcement

Add CodeAnt as required CI/CD check

Configure risk-based routing: auto-approve low-risk, flag high-risk for human review

Enable IDE and pre-commit feedback for real-time prevention

Week 4-5: Configure intelligent routing

Define auto-approve criteria (docs, tests, formatting changes)

Set automated review thresholds (feature additions with tests)

Require human review for security-sensitive code, architectural changes

Week 5-6: Measure success and expand

Validate success criteria: 40-60% faster review time, 3-5x more vulnerabilities caught

Roll out to additional teams in waves

Schedule training sessions, establish feedback loop for policy tuning

Conclusion: Prevent Debt Early, Scale AI Safely

The five mistakes, missing pre-PR gates, ignoring AI-specific vulnerabilities, lacking visibility, allowing architectural fragmentation, and skipping standards enforcement, all share a common root: treating AI code review as an afterthought rather than a first-class governance concern.

Teams that succeed implement prevention-first architecture from day one, with context-aware tooling that understands their codebase and enforces quality continuously across the development lifecycle.

Your next steps:

Pick a pilot repository: Choose a high-velocity codebase where AI adoption is happening

Define your policy pack: Document security baselines, architectural boundaries, and quality thresholds

Wire in pre-PR + PR gates: Implement IDE-level checks that catch issues before commit

Set your metrics baseline: Track AI contribution percentage, defect rates, and review cycle time

CodeAnt AI provides the unified platform teams need to prevent these mistakes from the start, context-aware analysis that maintains organizational memory, proactive enforcement that stops issues before PRs, and visibility into AI code health across your entire SDLC.

Book your demo to see how CodeAnt AI's governance framework works in your workflow—no generic demos, just practical guidance on making AI code review work for your organization.