AI Code Review

Feb 18, 2026

Best AI Code Review Platform for Fast PR Feedback

Sonali Sood

Founding GTM, CodeAnt AI

Your team ships code 3x faster with AI assistance, but your PR queue is a bottleneck. Reviews stretch into days, senior engineers spend half their time context-switching, and subtle bugs slip through. The constraint in modern software delivery has shifted from writing code to validating it.

The solution isn't more engineers; it's an AI code review platform that delivers sub-90-second reviews with the context awareness to catch real issues and the precision to avoid false alarms. This guide shows how CodeAnt AI outperforms GitHub Copilot, CodeRabbit, and SonarQube when speed and accuracy both matter.

The Verdict: CodeAnt AI for Context-Aware Speed

CodeAnt AI is the best platform for fast PR feedback, delivering sub-90-second reviews with <5% false positives through full codebase analysis. GitHub Copilot offers speed without depth, and CodeRabbit generates noisy suggestions. CodeAnt AI combines velocity with precision, cutting review cycles by 80% for organizations with 100+ developers.

This evaluation prioritizes three criteria: actionable feedback in under 2 minutes, low false positive rates that respect developer time, and workflow-native integration with zero configuration.

Why Fast Requires More Than Speed

Fast PR feedback means immediately actionable insights that don't require human verification. A tool flagging 50 issues in 30 seconds but generating 40 false positives wastes more time than a 2-minute review surfacing 3 critical problems.
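To make the tradeoff concrete, here is a back-of-the-envelope model in Python. It assumes, purely for illustration, that a reviewer spends about one minute verifying each flagged issue, whether or not the issue turns out to be real. The tool timings and counts are the hypothetical ones from the paragraph above, and the `ReviewRun` name is illustrative.

```python
from dataclasses import dataclass

# Assumption for illustration: minutes a reviewer spends verifying one flagged issue.
TRIAGE_MINUTES_PER_FLAG = 1.0

@dataclass
class ReviewRun:
    name: str
    latency_seconds: float  # time until the tool posts its feedback
    flagged: int            # total issues the tool reported
    false_positives: int    # flagged issues that turned out not to matter

    def precision(self) -> float:
        """Share of flagged issues that were real."""
        if not self.flagged:
            return 1.0
        return (self.flagged - self.false_positives) / self.flagged

    def total_minutes(self) -> float:
        """Tool latency plus the human time spent verifying every flag."""
        return self.latency_seconds / 60 + self.flagged * TRIAGE_MINUTES_PER_FLAG

noisy = ReviewRun("noisy", latency_seconds=30, flagged=50, false_positives=40)
precise = ReviewRun("precise", latency_seconds=120, flagged=3, false_positives=0)

for run in (noisy, precise):
    print(f"{run.name}: precision={run.precision():.0%}, total={run.total_minutes():.1f} min")
```

Under this assumption the noisy run costs 50.5 minutes of combined wait and triage time. The precise run costs 5 minutes. The exact figures shift with the triage estimate, but the noisy tool loses as soon as verifying one flag takes more than about two seconds.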

The dual requirement:

Sub-2-minute initial feedback to maintain flow state

<5% false positive rate, so that nearly every flagged issue merits attention

Most platforms fail to strike this balance. GitHub Copilot analyzes diffs in seconds but misses architectural dependencies. CodeRabbit generates comprehensive feedback quickly but buries the signal in noise. SonarQube requires weeks of tuning before it stops crying wolf.

Why this matters: AI code generation has accelerated output 3x, but review capacity remains flat. Teams shipping 200+ PRs weekly can't afford 24-48-hour review cycles or tools that create more work than they eliminate. The bottleneck has shifted from writing code to validating it.

CodeAnt AI: Context-Aware Architecture Meets Sub-90-Second Reviews

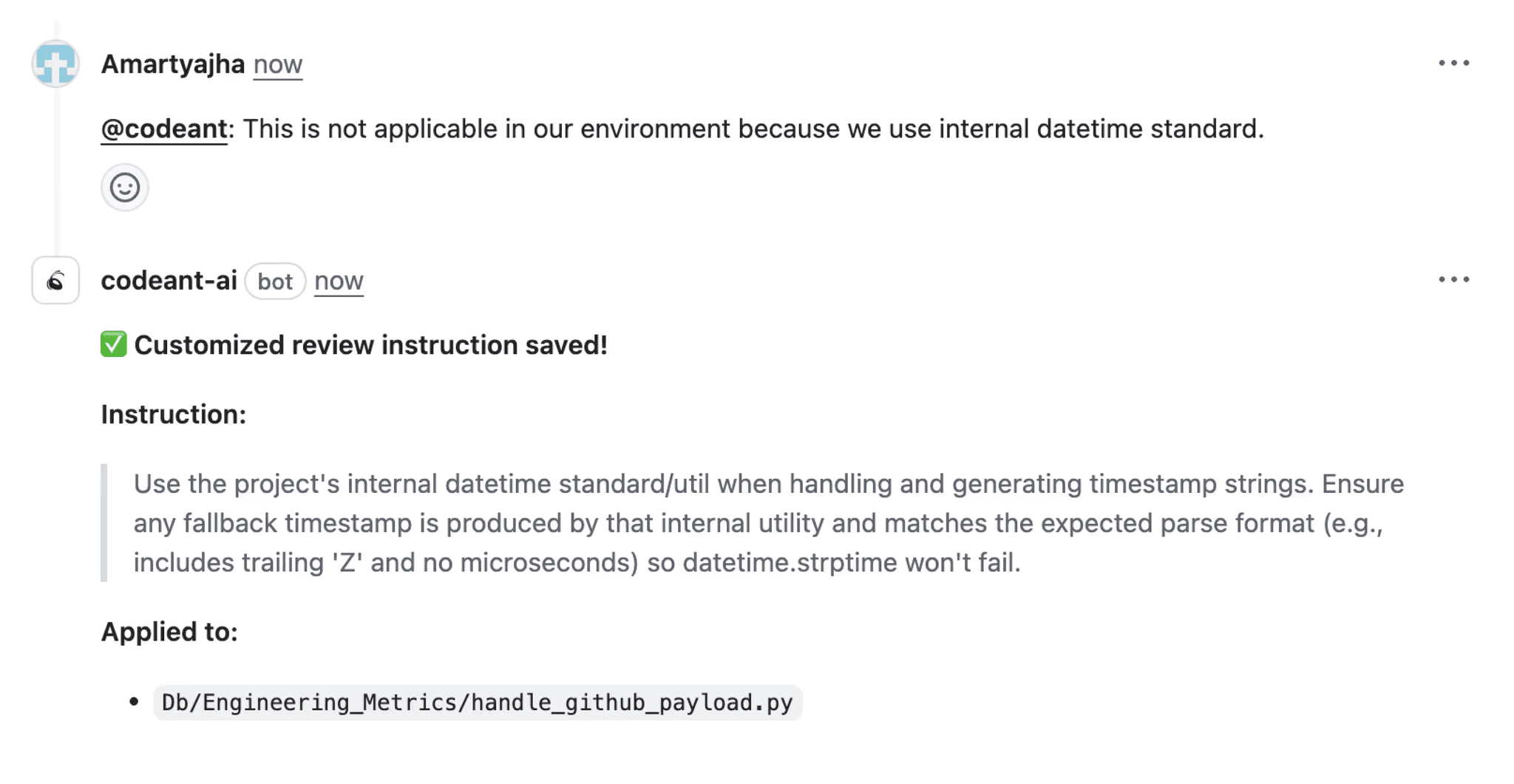

CodeAnt AI solves the speed-accuracy tradeoff through RAG-based (retrieval-augmented generation) context retrieval that analyzes your entire codebase, not just the changed lines. When a PR modifies an authentication service, CodeAnt AI traces dependencies across microservices, checks downstream consumers for breaking changes, and validates security configurations, all in under 90 seconds.
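The dependency-tracing step can be illustrated with a reverse import graph. Given which modules import which, a breadth-first walk from the changed module lists every direct and transitive consumer. This is a minimal sketch of that general technique using hypothetical module names. It is not CodeAnt AI's implementation, which the article describes only as RAG-based context retrieval.

```python
from collections import defaultdict, deque

def downstream_consumers(imports: dict[str, set[str]], changed: str) -> set[str]:
    """Return every module that directly or transitively imports `changed`.

    `imports` maps each module to the set of modules it imports.
    """
    # Invert the graph so each module maps to the modules that import it.
    imported_by: dict[str, set[str]] = defaultdict(set)
    for module, deps in imports.items():
        for dep in deps:
            imported_by[dep].add(module)

    # Breadth-first walk over the reverse edges, starting at the changed module.
    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for consumer in imported_by[current]:
            if consumer not in seen:
                seen.add(consumer)
                queue.append(consumer)
    seen.discard(changed)  # an import cycle could lead back to the changed module
    return seen

# Hypothetical service layout.
imports = {
    "auth.tokens": set(),
    "auth.service": {"auth.tokens"},
    "billing.api": {"auth.service"},
    "orders.api": {"auth.service", "billing.api"},
    "reports.job": {"orders.api"},
    "docs.site": set(),
}
print(sorted(downstream_consumers(imports, "auth.tokens")))
```

A diff-only reviewer sees just `auth.tokens`. The walk surfaces the four modules whose behavior may change along with it, and the `seen` set keeps the traversal finite even when imports form a cycle.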

Key differentiators:

Full codebase awareness: Analyzes architectural impact, not just syntactic changes. Catches cross-service bugs that diff-only tools miss.

Unified code health platform: Combines code review, security scanning, quality metrics, and DORA tracking, eliminating the SonarQube + Snyk + manual review stack.

Zero-config deployment: 2-click GitHub/GitLab/Bitbucket integration. First PR reviewed in <90 seconds without rule tuning.

Sub-5% false positive rate: AI learns your codebase patterns and organizational standards, filtering noise automatically.
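One simple way a review bot can reduce noise over time is to learn from how developers respond: findings from rules the team keeps dismissing get suppressed. The sketch below shows that idea with a dismissal-rate threshold. The rule IDs, the 50% threshold, and the minimum sample size are illustrative assumptions, and this is not a description of how CodeAnt AI's learning works.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Finding:
    rule_id: str
    message: str

def noisy_rules(history: list[tuple[str, str]], max_dismiss_rate: float = 0.5,
                min_samples: int = 5) -> set[str]:
    """Return rules whose past findings were dismissed more often than `max_dismiss_rate`.

    `history` holds (rule_id, outcome) pairs, where outcome is "fixed" or "dismissed".
    Rules with fewer than `min_samples` outcomes are never suppressed, because the data is too thin.
    """
    totals = Counter()
    dismissed = Counter()
    for rule_id, outcome in history:
        totals[rule_id] += 1
        if outcome == "dismissed":
            dismissed[rule_id] += 1
    return {
        rule for rule, n in totals.items()
        if n >= min_samples and dismissed[rule] / n > max_dismiss_rate
    }

def filter_findings(findings: list[Finding], history: list[tuple[str, str]]) -> list[Finding]:
    """Drop findings produced by rules the team has historically ignored."""
    suppressed = noisy_rules(history)
    return [f for f in findings if f.rule_id not in suppressed]

# Hypothetical history: "style.line-length" was dismissed 9 times out of 10.
history = [("style.line-length", "dismissed")] * 9 + [("style.line-length", "fixed")]
history += [("sec.sql-injection", "fixed")] * 6
findings = [Finding("style.line-length", "Line exceeds 100 chars"),
            Finding("sec.sql-injection", "Unparameterized query")]
print([f.rule_id for f in filter_findings(findings, history)])
```

The `min_samples` guard matters: without it, a rule could be silenced after a single dismissal. A real system would likely also exempt security rules from this kind of automatic suppression.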

Platform Comparison: Where Competitors Fall Short

| Criteria | CodeAnt AI | GitHub Copilot | CodeRabbit | SonarQube |
|---|---|---|---|---|
| Review Speed | <90s | ~30s | ~60s | 2-5 min |
| Context Depth | Full codebase + dependencies | Diff only | Diff + limited | Configurable (requires tuning) |
| False Positive Rate | <5% | ~15% | ~25% | 60-80% (without tuning) |
| Security Scanning | SAST + IaC + secrets (unified) | Basic | Limited | Strong (separate tools needed) |
| Setup Time | <5 min | Instant | <10 min | 2-4 weeks |
| Platform Support | GitHub, GitLab, Bitbucket, Azure DevOps | GitHub only | GitHub, GitLab | Self-hosted or cloud |

GitHub Copilot: Speed Without Depth

Limitation: Analyzes only the diff, missing architectural dependencies and cross-service impacts. A change to a shared utility function won't trigger warnings about the 12 services importing it.


CodeAnt AI advantage: Full codebase context enables dependency mapping across repositories. When you modify a database schema, CodeAnt AI flags every ORM model, migration script, and API endpoint that needs updating before CI fails.

Security gap: Copilot offers basic secret detection. CodeAnt AI runs unified SAST, IaC misconfiguration checks, and dependency vulnerability scans in the same review cycle.
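Secret detection, the simplest of the checks named above, usually comes down to pattern matching over the lines a diff adds. The sketch below flags strings shaped like AWS access key IDs (`AKIA` followed by 16 uppercase letters or digits) and private-key headers. It is a toy illustration of the technique with only two patterns, not how Copilot or CodeAnt AI implement their scanners. Dedicated secret scanners typically add many more patterns plus entropy and validity checks.

```python
import re

# Two illustrative patterns; real scanners ship far more.
SECRET_PATTERNS = {
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private-key-header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}

def scan_added_lines(unified_diff: str) -> list[tuple[int, str]]:
    """Return (line_index_in_diff, pattern_name) for secrets on added lines.

    Only lines starting with '+' are checked, so removed lines and unchanged
    context lines never trigger a finding. Simplification: any line starting
    with '+++' is treated as a file header and skipped.
    """
    findings = []
    for i, line in enumerate(unified_diff.splitlines()):
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((i, name))
    return findings

# AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
diff = """\
--- a/config.py
+++ b/config.py
@@ -1,2 +1,2 @@
 REGION = "us-east-1"
-KEY = None
+KEY = "AKIAIOSFODNN7EXAMPLE"
"""
print(scan_added_lines(diff))
```

Restricting the scan to added lines keeps the check cheap enough to run on every PR. It also avoids re-reporting secrets the diff is actually removing.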

CodeRabbit: Comment Volume Creates Review Fatigue

Weakness: Generates comprehensive feedback but causes review fatigue with 20+ comments per PR, many of them stylistic preferences rather than critical issues.


CodeAnt AI precision: A sub-5% false positive rate means nearly every flagged issue merits attention. Developers trust the feedback and act on it immediately instead of triaging noise.

Speed comparison: Both are fast, but CodeAnt AI's accuracy makes feedback actionable without human verification. CodeRabbit's speed advantage evaporates when developers spend 15 minutes filtering suggestions.

SonarQube: Configuration Burden Delays Value

Challenge: Requires 2-4 weeks of rule configuration to reduce false positives from 80% to acceptable levels. Teams need dedicated platform engineers to manage it.


CodeAnt AI advantage: Zero-config deployment with AI-driven learning. The platform adapts to your patterns automatically, with no manual tuning required.

Modern architecture: CodeAnt AI's unified platform replaces SonarQube + Snyk + separate analytics tools, reducing TCO by 40% while delivering faster feedback.

Real-World Outcomes: 80% Faster PR Cycles

Fintech Startup: 4 Hours → 30 Minutes

Challenge: 15 engineers, with 3 seniors spending 50% of their time on reviews. A 4-hour average review time created deployment bottlenecks.

CodeAnt AI solution:

Automated 80% of review work through context-aware analysis

Flagged cross-service security issues manual reviews missed

Enforced coding standards organization-wide without manual oversight

Outcome:

4-hour average → 30 minutes (87.5% reduction)

40% velocity increase (DORA deployment frequency)

Zero security incidents post-deployment (including a misconfigured S3 bucket caught before production)

Key differentiator: Context-aware AI identified that a new API endpoint exposed sensitive customer data through overly permissive CORS settings. Catching this depended on context beyond the changed lines, which diff-only tools can't see.
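Overly permissive CORS settings like these can be checked as a rule over an endpoint's effective policy rather than over a single diff line. One well-known risky combination is allowing credentialed requests from any origin, whether through a `*` wildcard or by reflecting back whatever `Origin` header the request sends. The `CorsPolicy` shape below is a hypothetical stand-in for whatever framework configuration a real tool would parse. The case study does not describe how the issue was actually found.

```python
from dataclasses import dataclass, field

@dataclass
class CorsPolicy:
    """Hypothetical, framework-neutral view of an endpoint's CORS settings."""
    endpoint: str
    allowed_origins: list[str] = field(default_factory=list)
    reflect_request_origin: bool = False  # echo back any Origin header the client sends
    allow_credentials: bool = False

def cors_findings(policy: CorsPolicy) -> list[str]:
    """Return human-readable warnings for overly permissive settings."""
    unrestricted = policy.reflect_request_origin or "*" in policy.allowed_origins
    findings = []
    if unrestricted and policy.allow_credentials:
        findings.append(
            f"{policy.endpoint}: credentialed requests accepted from any origin; "
            "restrict allowed_origins to trusted hosts"
        )
    elif unrestricted:
        findings.append(f"{policy.endpoint}: any origin may read responses; confirm the data is public")
    return findings

risky = CorsPolicy("/api/customers", reflect_request_origin=True, allow_credentials=True)
safe = CorsPolicy("/api/customers", allowed_origins=["https://app.example.com"], allow_credentials=True)
print(cors_findings(risky))
print(cors_findings(safe))
```

In practice the effective policy can be assembled from middleware config, environment variables, and per-route overrides spread across files. That is why a check reading only the changed lines may never see all of its inputs.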

Enterprise: 500-Person Engineering Org

Challenge: Inconsistent standards across 100+ repositories. Failed compliance audits due to untracked security vulnerabilities.

CodeAnt AI solution:

Custom rules engine enforced organization-wide standards automatically

360° insights dashboard tracked DORA metrics and bottlenecks

Unified security scanning replaced fragmented Snyk + SonarQube stack

Outcome:

Passed SOC2 audit on first attempt (previously failed twice)

Reduced security backlog from 2,000 to 200 critical issues in 90 days

50% deployment frequency increase


Implementation: Rolling Out Fast Feedback

Weeks 1-2: Pilot with 3-5 Repositories

Choose repos representing organizational diversity:

High-velocity service (10+ PRs/day) for throughput testing

Security-critical component for detection accuracy

Legacy codebase with technical debt for context-aware analysis

Initial configuration:

Track daily:

Developer sentiment (target: 90%+ actionable feedback)

False positive rate (should stay <5%)

Review time delta

Blocking incidents

Weeks 3-4: Tune and Expand

Analyze metrics:

Acceptance rate (target: >85%)

False positive rate (<5%)

Review time reduction (>60%)

Critical security finds (2-5 per repo)
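The review-time reduction target is a simple percentage change against the pre-pilot baseline. As a sanity check, the sketch below reproduces the fintech case study's figure, where 4 hours down to 30 minutes is an 87.5% reduction, and compares it with the >60% target. The function name is illustrative.

```python
def percent_reduction(before_minutes: float, after_minutes: float) -> float:
    """Percentage drop from a baseline; a positive value means review time went down."""
    if before_minutes <= 0:
        raise ValueError("baseline must be positive")
    return (before_minutes - after_minutes) / before_minutes * 100

TARGET_REDUCTION = 60.0  # pilot target from the checklist above (>60%)

reduction = percent_reduction(before_minutes=4 * 60, after_minutes=30)
print(f"{reduction:.1f}% reduction, target met: {reduction > TARGET_REDUCTION}")
```

Measure the baseline over the same repositories and a comparable time window. Otherwise a quiet week can look like a tooling win.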

Calibrate severity:

Rollout criteria:

✅ >85% developer satisfaction

✅ <5% false positive rate sustained

✅ Measurable velocity gain (30%+ minimum)

✅ Zero blocking incidents on legitimate deployments
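The rollout criteria translate directly into a go/no-go check. This sketch encodes the four thresholds listed above. The `PilotMetrics` fields are assumptions, not part of any product API, and so is how you collect them (developer survey, PR labels, incident tracker).

```python
from dataclasses import dataclass

@dataclass
class PilotMetrics:
    developer_satisfaction: float   # fraction of surveyed developers satisfied, 0..1
    false_positive_rate: float      # fraction of findings marked "won't fix", 0..1
    velocity_gain: float            # fractional improvement over baseline, e.g. 0.35
    blocking_incidents: int         # legitimate deployments blocked by the tool

def rollout_blockers(m: PilotMetrics) -> list[str]:
    """Return the criteria not yet met; an empty list means go."""
    checks = [
        (m.developer_satisfaction > 0.85, "developer satisfaction must exceed 85%"),
        (m.false_positive_rate < 0.05, "false positive rate must stay below 5%"),
        (m.velocity_gain >= 0.30, "velocity gain must be at least 30%"),
        (m.blocking_incidents == 0, "no legitimate deployments may be blocked"),
    ]
    return [reason for passed, reason in checks if not passed]

pilot = PilotMetrics(developer_satisfaction=0.9, false_positive_rate=0.04,
                     velocity_gain=0.25, blocking_incidents=0)
print(rollout_blockers(pilot) or "ready to roll out")
```

Because the criterion says the false positive rate must be *sustained*, one reasonable choice is to pass in the worst weekly rate from the pilot rather than the average.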

What to Measure

Velocity metrics:

Time-to-First-Feedback: <2 minutes (CodeAnt AI: <90 seconds)

Merge Latency: Total PR open to merge time

Review Bottleneck Rate: PRs waiting >24 hours (target: <5%)
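All three velocity metrics can be computed from PR timestamps that Git hosting platforms typically record. The sketch below works on plain records rather than a specific API. The `PullRequest` fields are assumptions. "Waiting" is interpreted here as the time from opening to first review feedback.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median

@dataclass
class PullRequest:
    opened: datetime
    first_feedback: datetime  # first review comment, human or bot
    merged: datetime

def velocity_metrics(prs: list[PullRequest]) -> dict[str, float]:
    """Median time-to-first-feedback (s), median merge latency (h), and bottleneck rate."""
    if not prs:
        raise ValueError("need at least one merged PR")
    ttff = [(pr.first_feedback - pr.opened).total_seconds() for pr in prs]
    latency = [(pr.merged - pr.opened).total_seconds() / 3600 for pr in prs]
    stalled = sum(1 for pr in prs if pr.first_feedback - pr.opened > timedelta(hours=24))
    return {
        "median_time_to_first_feedback_s": median(ttff),
        "median_merge_latency_h": median(latency),
        "bottleneck_rate": stalled / len(prs),
    }

t0 = datetime(2026, 2, 2, 9, 0)
prs = [
    PullRequest(t0, t0 + timedelta(seconds=75), t0 + timedelta(hours=3)),
    PullRequest(t0, t0 + timedelta(seconds=80), t0 + timedelta(hours=5)),
    PullRequest(t0, t0 + timedelta(hours=30), t0 + timedelta(hours=40)),
]
print(velocity_metrics(prs))
```

Medians are used because a single stalled PR would otherwise dominate an average. In this sample the third PR alone pushes the bottleneck rate to 33%, far above the <5% target.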

Accuracy metrics:

False Positive Rate: Issues marked "won't fix" (target: <5%)

Acceptance Rate: AI suggestions applied without modification (target: >70%)

Reopen Rate: PRs reopened due to post-merge issues (target: <3%)
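The accuracy metrics are ratios over AI findings and merged PRs. This sketch assumes each finding ends with one of three hypothetical outcome labels ("applied", "modified", "wont_fix"). It also assumes post-merge reopens are counted separately, so adapt both to however your team records resolutions.

```python
from collections import Counter

def accuracy_metrics(outcomes: list[str], merged_prs: int, reopened_prs: int) -> dict[str, float]:
    """Compute false positive, acceptance, and reopen rates.

    outcomes: one label per AI finding: "applied" (taken without modification),
              "modified" (fixed differently), or "wont_fix" (dismissed).
    """
    if not outcomes or merged_prs == 0:
        raise ValueError("need at least one finding and one merged PR")
    counts = Counter(outcomes)
    total = len(outcomes)
    return {
        "false_positive_rate": counts["wont_fix"] / total,
        "acceptance_rate": counts["applied"] / total,
        "reopen_rate": reopened_prs / merged_prs,
    }

outcomes = ["applied"] * 75 + ["modified"] * 21 + ["wont_fix"] * 4
print(accuracy_metrics(outcomes, merged_prs=200, reopened_prs=5))
```

"Modified" findings count toward neither rate. This matches the definition of acceptance as suggestions applied *without* modification.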

Security outcomes:

Escape Rate by Severity: Critical vulnerabilities reaching production

Mean Time to Remediate: Detection to fix deployment

Technical Debt Trend: Complexity, duplication, coverage over time
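Mean time to remediate is the average gap between when a finding is detected and when its fix is deployed. Grouping by severity shows whether critical issues really get fixed first. The record fields below are illustrative assumptions.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

def mttr_by_severity(findings: list[dict]) -> dict[str, float]:
    """Mean hours from detection to fix deployment, per severity.

    Each finding is a dict with 'severity', 'detected', and 'fixed_deployed'
    (None while still open). Open findings are excluded from the mean.
    """
    hours = defaultdict(list)
    for f in findings:
        if f["fixed_deployed"] is not None:
            delta = f["fixed_deployed"] - f["detected"]
            hours[f["severity"]].append(delta.total_seconds() / 3600)
    return {sev: mean(vals) for sev, vals in hours.items()}

findings = [
    {"severity": "critical", "detected": datetime(2026, 2, 2, 9), "fixed_deployed": datetime(2026, 2, 2, 13)},
    {"severity": "critical", "detected": datetime(2026, 2, 3, 9), "fixed_deployed": datetime(2026, 2, 3, 17)},
    {"severity": "medium", "detected": datetime(2026, 2, 2, 9), "fixed_deployed": datetime(2026, 2, 5, 9)},
    {"severity": "medium", "detected": datetime(2026, 2, 4, 9), "fixed_deployed": None},
]
print(mttr_by_severity(findings))
```

Excluding open findings flatters the average, so it is worth reporting the count of still-open findings per severity alongside MTTR.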

Conclusion: Choose Speed You Can Trust

The right AI code review platform delivers fast feedback you can trust. CodeAnt AI's context-aware architecture provides sub-90-second reviews with <5% false positives, catching what diff-based tools miss while eliminating the noise that creates review fatigue.

For US-based teams with 100+ developers managing multiple repositories, CodeAnt AI delivers proven outcomes: 80% review time reduction, 40% TCO savings, and measurable improvements in deployment frequency and code quality.

Start your 14-day free trial: zero-friction onboarding, 2-click integration, your first PR reviewed in under 90 seconds, and full platform access. Book a 1:1 with our experts to see how CodeAnt AI delivers the fast, accurate PR feedback your team needs to ship with confidence.