
Feb 18, 2026

How to Do a Good Code Review in Bitbucket

Sonali Sood

Founding GTM, CodeAnt AI

Your team uses Bitbucket, has configured branch permissions and default reviewers, and PRs are getting reviewed, but they still pile up, reviews feel inconsistent, and critical issues slip into production. The problem isn't Bitbucket's features; it's that manual-only reviews don't scale past 20-30 engineers. Reviewers burn out on repetitive checks, cross-timezone PRs sit idle for 12+ hours, and quality varies by who's reviewing.

This guide shows you how to build a code review process that catches security vulnerabilities and complexity issues automatically, provides instant feedback regardless of timezone, and enforces your standards consistently, without adding manual work. You'll learn the step-by-step Bitbucket workflow, when to add AI-powered pre-review, and how teams are cutting review time by 80% while improving code quality.

What Defines a Good Bitbucket Code Review

Effective code reviews deliver fast, consistent, actionable feedback that measurably improves code quality. In practice, this means three things working together:

1. Thoroughness Without Bottlenecks

Reviews must catch security vulnerabilities, logic errors, and maintainability issues before merge, but thoroughness can't kill velocity. The challenge: manual reviews excel at architectural feedback but struggle with repetitive checks (style violations, security patterns, complexity analysis) at scale.

2. Consistency Across Team and Time

Every PR should receive the same scrutiny regardless of who reviews it or when. Reality: junior reviewers miss issues seniors catch, and the same reviewer applies different standards when tired or rushed.

3. Actionable, Prioritized Feedback

Comments should be specific, reproducible, and ranked by severity, not a flat list mixing nitpicks with critical security issues. Reviewers need to know what blocks merge, what's a suggestion, and what's noise.

Where Manual Reviews Hit Limits

Even well-intentioned teams hit predictable bottlenecks:

| Bottleneck | Impact | Root Cause |
| --- | --- | --- |
| Review Queue Buildup | PRs wait 8–24 hours for feedback | Limited reviewer availability across timezones |
| Inconsistent Quality | Critical issues slip through while nitpicks dominate | Reviewer experience varies; no standards enforcement |
| Nitpick Fatigue | Reviewers burn out on style comments | Manual checking of formatting and simple patterns |
| Context Switching Cost | 15–30 minutes per PR just to understand changes | Bitbucket shows diffs, not full codebase context |

These aren't process failures; they're inherent limitations of manual-only workflows at scale.

Key Metrics for Review Quality

Before diving into workflow specifics, define success criteria:

Velocity:

Cycle time: PR open to merge (target: <24 hours for standard changes)

First response time: How quickly PRs get initial feedback (target: <2 hours)

Review iterations: Back-and-forth cycles (target: <3)

Quality:

Defect escape rate: Bugs in production that should've been caught (target: <5%)

Security detection: Vulnerabilities caught before merge (target: 100% of common patterns)

Team Health:

Reviewer workload: Are reviews evenly spread? (target: <30% variance)

Nitpick ratio: Style comments vs. substantive feedback (target: <20% nitpicks)
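To make these targets concrete, here is a minimal sketch of how a few of them could be computed from exported PR data. The `PullRequest` record and its field names are assumptions for illustration, not a Bitbucket API; populate them from whatever export or reporting tool you use.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class PullRequest:
    # Hypothetical record; field names are illustrative only.
    opened_at: datetime
    first_response_at: Optional[datetime]
    merged_at: Optional[datetime]
    nitpick_comments: int
    substantive_comments: int


def median_hours(deltas: list[timedelta]) -> float:
    """Median of a list of durations in hours (NaN when the list is empty)."""
    if not deltas:
        return float("nan")
    return median(d.total_seconds() for d in deltas) / 3600


def review_metrics(prs: list[PullRequest]) -> dict[str, float]:
    """Compute cycle time, first response time, and nitpick ratio."""
    cycle = [p.merged_at - p.opened_at for p in prs if p.merged_at]
    first = [p.first_response_at - p.opened_at for p in prs if p.first_response_at]
    nitpicks = sum(p.nitpick_comments for p in prs)
    total = nitpicks + sum(p.substantive_comments for p in prs)
    return {
        "median_cycle_hours": median_hours(cycle),
        "median_first_response_hours": median_hours(first),
        "nitpick_ratio": nitpicks / total if total else 0.0,
    }
```

Medians are used here rather than means so that one long-running PR does not distort the picture; that is a design choice, not something the targets above require.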

Setting Up Bitbucket for Effective Reviews

Configure Branch Permissions and Merge Checks

Proper branch protection prevents low-quality code from reaching production:

Core platform repositories (customer-facing services, shared libraries):

Restrict merges to tech leads and senior engineers

Required approvals: Minimum 2, with 1 from designated code owner

Enable: All builds must pass, all tasks resolved, no open conflicts

Internal tooling:

Allow all team members to merge

Required approvals: Minimum 1

Disable self-approval to prevent rubber-stamping

Configure in Bitbucket: Repository settings → Branch permissions → Add branch permission for main, master, or release/* patterns.

Essential merge checks:

Required before merge:

✓ All builds pass

✓ Minimum approvals met

✓ All tasks resolved

✓ No merge conflicts

✓ Default reviewers approved
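Bitbucket evaluates these checks for you, but it can help to think of them as a single gate that either passes or reports what is blocking. The sketch below models that logic; `PullRequestState` and its fields are hypothetical names chosen for illustration, not part of Bitbucket.

```python
from dataclasses import dataclass


@dataclass
class PullRequestState:
    # Hypothetical snapshot of a PR; field names are illustrative only.
    builds_passed: bool
    approvals: int
    open_tasks: int
    has_conflicts: bool
    default_reviewers_approved: bool


def failed_merge_checks(pr: PullRequestState, min_approvals: int = 2) -> list[str]:
    """Return the names of the checks that currently block the merge."""
    checks = {
        "All builds pass": pr.builds_passed,
        "Minimum approvals met": pr.approvals >= min_approvals,
        "All tasks resolved": pr.open_tasks == 0,
        "No merge conflicts": not pr.has_conflicts,
        "Default reviewers approved": pr.default_reviewers_approved,
    }
    return [name for name, ok in checks.items() if not ok]
```

An empty list means the PR is mergeable; anything else tells the author exactly which gate to clear.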

Use PR Templates to Reduce Cognitive Load

Create .bitbucket/pull_request_template.md:

## What changed?

Brief description and why it was needed.

## Related Ticket

Fixes #1234 (link to issue)

## How to test

1. Check out branch

2. Run `npm run test:integration`

3. Verify endpoint returns 200

Configure default reviewers by path:

Repository Settings → Default reviewers

Add rules:

src/api/** → API team

src/frontend/** → Frontend team
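To see how path rules like these resolve to reviewers, here is a small sketch using Python's standard `fnmatch` module. It illustrates the matching idea only; it is not how Bitbucket evaluates its rules internally, and the team names are placeholders.

```python
from fnmatch import fnmatchcase

# Hypothetical rules mirroring the example above; team names are placeholders.
REVIEWER_RULES = [
    ("src/api/**", "API team"),
    ("src/frontend/**", "Frontend team"),
]


def reviewers_for(changed_files: list[str]) -> set[str]:
    """Collect every team whose pattern matches at least one changed file."""
    teams = set()
    for path in changed_files:
        for pattern, team in REVIEWER_RULES:
            # In fnmatch, '*' also matches '/', so 'src/api/**' matches
            # files at any depth under src/api/.
            if fnmatchcase(path, pattern):
                teams.add(team)
    return teams
```

A PR touching both `src/api/` and `src/frontend/` would pull in both teams, while a docs-only change would match neither rule.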

Integrate CI Status Checks as Mandatory Gates

Essential CI checks to require:

Unit and integration tests with coverage thresholds (e.g., ≥80%)

Linting and formatting (ESLint, Prettier)

Security scanning (Snyk, Trivy, or CodeAnt for vulnerabilities and secrets)

Build verification in staging environments

Dependency audits for outdated or vulnerable packages

Example Bitbucket Pipeline:
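The following `bitbucket-pipelines.yml` is a minimal sketch, not a drop-in configuration. It assumes a Node.js project whose `package.json` defines `lint` and `test` scripts and whose test runner accepts a `--coverage` flag; swap in your own image, commands, and security scanner.

```yaml
# Sketch only: assumes a Node.js project with "lint" and "test" npm scripts.
image: node:20

pipelines:
  pull-requests:
    '**':                      # run for pull requests from any source branch
      - step:
          name: Lint and test
          caches:
            - node
          script:
            - npm ci
            - npm run lint
            - npm test -- --coverage   # assumes the test runner supports --coverage
      - step:
          name: Dependency audit
          script:
            - npm ci
            - npm audit --audit-level=high
```

With "All builds must pass" enabled as a merge check, a failure in either step blocks the merge.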

The Step-by-Step Review Process

Phase 0: Author Preparation (Before Submitting)

Keep PRs focused: Target 200–400 lines per PR. Break large work into sequential PRs.

Write clear context:

What changed and why

Link to ticket/issue

How to verify the change

Migration or deployment notes

Self-review first: Walk through your own diff. Catch obvious issues before requesting review.

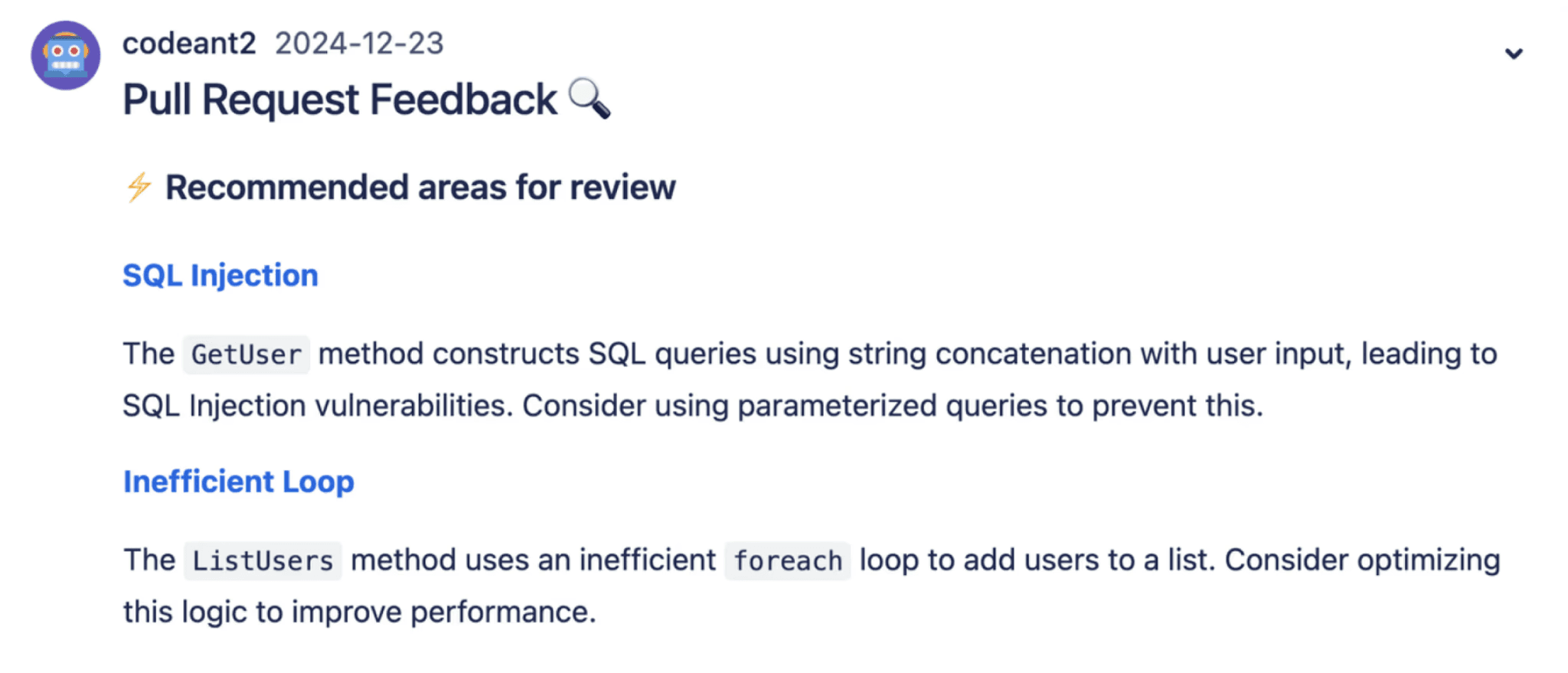

Phase 1: AI Pre-Review (0-2 Minutes)

Modern workflows insert automated analysis before human review. When integrated, AI tools like CodeAnt automatically:

Generate a PR summary with full codebase context, showing how changes interact with existing patterns

Scan for security issues: hardcoded secrets, SQL injection, authentication bypasses

Check complexity and maintainability: cyclomatic complexity, duplication, missing error handling

Validate test coverage: identify untested branches

What reviewers see:

🔴 CRITICAL: SQL injection in PaymentService.findByTransactionId()

Line 47: User input concatenated into query

Fix: Use parameterized queries

🟡 MEDIUM: Missing error handling for payment gateway timeout

Line 112: NetworkException not caught

🟢 LOW: Inconsistent naming convention

Line 203: snake_case instead of camelCase

Auto-fix available

Time saved: From 20-30 minutes of manual scanning to 2 minutes of triaging ranked findings.
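The critical finding above (user input concatenated into a query) and its suggested fix can be illustrated with a short sketch. This uses Python's built-in `sqlite3` module and a hypothetical `payments` table; it is an analogue of the `PaymentService.findByTransactionId()` example, not that service's actual code.

```python
import sqlite3

# Hypothetical in-memory table standing in for a payments store.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE payments (transaction_id TEXT, amount REAL)")
conn.execute("INSERT INTO payments VALUES ('tx-1', 10.0), ('tx-2', 25.0)")


def find_unsafe(transaction_id: str) -> list[tuple]:
    # Vulnerable: user input is concatenated straight into the SQL text.
    query = "SELECT * FROM payments WHERE transaction_id = '" + transaction_id + "'"
    return conn.execute(query).fetchall()


def find_safe(transaction_id: str) -> list[tuple]:
    # Fixed: the value is bound as a parameter and never parsed as SQL.
    query = "SELECT * FROM payments WHERE transaction_id = ?"
    return conn.execute(query, (transaction_id,)).fetchall()


# A crafted input that turns the WHERE clause into an always-true condition.
malicious = "x' OR '1'='1"
```

Calling `find_unsafe(malicious)` returns every row in the table, while `find_safe(malicious)` returns nothing because the whole string is treated as a literal transaction ID.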

Phase 2: Human Review (10-15 Minutes)

With AI handling repetitive checks, focus on what requires judgment:

The Seven-Point Review Rubric

1. Intent and Requirements Alignment

Does the PR solve the stated problem?

Check for scope creep or unrelated changes

2. Correctness and Edge Cases

Trace happy path, then failure modes: null inputs, empty collections, timeouts

Look for off-by-one errors, missing validation

3. API and Contract Changes

Identify breaking changes to interfaces or data contracts

Verify backward compatibility

4. Security and Authentication/Authorization

Missing permission checks before data access

SQL injection, XSS, or SSRF vulnerabilities

Exposed credentials or overly permissive policies

5. Performance and Failure Modes

N+1 queries, missing indexes, unbounded loops

Resource leaks: unclosed connections, unfreed memory

Graceful error handling and recovery

6. Data Migrations and Schema Changes

Verify migrations are idempotent and reversible

Check for data loss risks

7. Test Coverage and Quality

Unit tests for new logic, integration tests for workflows

Tests that verify behavior, not just coverage metrics

Edge cases and error conditions tested

Reading Diffs Effectively

Start with the file tree: Understand the scope. Is the change localized or scattered?

Read tests first: Tests reveal intent better than implementation.

Look for asymmetry: If a function is added but never called, something's wrong.

Flag complex conditionals: Nested ifs and deeply nested loops hide bugs.

Phase 3: Feedback and Iteration (5-10 Minutes)

Structure feedback by priority:

Must fix before merge:

Fix SQL injection (line 47)

Add timeout error handling (line 112)

Add test coverage for failure scenarios

Should fix (strong recommendation):

Consider async processing for long-running transactions

Extract shared transaction logic to avoid duplication

Nice to have (non-blocking):

Rename method to match camelCase convention

Add inline documentation for edge cases
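If you collect review comments programmatically (for example, from a bot or a checklist tool), the three tiers above can be modeled as an ordered enum. This is a sketch under that assumption; the `Priority` names and the output layout are illustrative.

```python
from enum import IntEnum


class Priority(IntEnum):
    MUST_FIX = 0      # blocks merge
    SHOULD_FIX = 1    # strong recommendation
    NICE_TO_HAVE = 2  # non-blocking


LABELS = {
    Priority.MUST_FIX: "Must fix before merge",
    Priority.SHOULD_FIX: "Should fix (strong recommendation)",
    Priority.NICE_TO_HAVE: "Nice to have (non-blocking)",
}


def format_feedback(comments: list[tuple[Priority, str]]) -> str:
    """Group comments under priority headings, most urgent first."""
    lines = []
    for priority in Priority:
        items = [text for p, text in comments if p == priority]
        if items:
            lines.append(LABELS[priority] + ":")
            lines.extend(f"  - {text}" for text in items)
    return "\n".join(lines)


def blocks_merge(comments: list[tuple[Priority, str]]) -> bool:
    """A review blocks the merge only if it contains a must-fix item."""
    return any(p == Priority.MUST_FIX for p, _ in comments)
```

Keeping the blocking decision separate from the formatting makes it easy to wire `blocks_merge` into an automated gate later.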

Write actionable comments:

❌ Vague: "This function is too complex."

✅ Actionable: "This function has cyclomatic complexity of 15. Extract validation logic (lines 45-67) into validateUserInput() to improve testability."

Phase 4: Re-Review and Approval (2-5 Minutes)

The author pushes updates. Verify:

Critical findings resolved

Suggested tests added

Quality gates pass

Approve with clear criteria:

✅ Approved! Nice work addressing security issues and adding test coverage.

Let's discuss async processing in our next architecture review.

Total review time: ~20 minutes for a 500-line PR, compared to 60-90 minutes purely manual.

Scaling Reviews Across Distributed Teams

Solving Cross-Timezone Delays

The async-first PR description framework:

Context block: ticket link, business problem, why this approach

Change summary: what changed at component level

Test plan: explicit verification steps

Deployment notes: migrations, feature flags, config changes

Reduce idle time:

AI review provides 24/7 feedback regardless of reviewer timezones

Developers fix issues before end-of-day, unblocking next timezone

Quality Gates enforce standards automatically

Pattern: Author submits PR → AI reviews instantly → Author addresses findings before logging off → Next timezone reviewer focuses on architecture, not bugs.

Maintaining Consistent Quality

Structural solutions:

Code ownership (CODEOWNERS): Require expert approval for critical paths

Rotating reviewer assignments: Pair juniors with seniors on rotation

Review debt tracking: Tag PRs that received only a light review so they can be audited later

AI enforcement:

Modern AI tools learn your organization's patterns and enforce baseline standards automatically:

Every PR gets expert-level security, complexity, and style analysis

No PR merges with critical issues, regardless of reviewer experience

Human reviewers focus on the 20% requiring judgment: API design, business logic, architecture

Handling High PR Volume

Process optimizations:

Size limits: Enforce 400-line maximum via merge checks

Review capacity planning: Track workload, rebalance when reviewers hit 12+ PRs

Async-first culture: Don't expect immediate responses; use inline threads for discussions
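One way to enforce the size limit is a CI step that fails the build when a PR is too large, which then blocks the merge through the "builds must pass" check. The sketch below parses the output of `git diff --numstat`; how you obtain that output (for example, diffing against your target branch) and the 400-line default are assumptions to adapt.

```python
def changed_lines(numstat: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat.splitlines():
        if not line.strip():
            continue
        # Each line is: <added> TAB <deleted> TAB <path>
        added, deleted, _path = line.split("\t", 2)
        # Binary files are reported as '-' and carry no line counts.
        if added == "-" or deleted == "-":
            continue
        total += int(added) + int(deleted)
    return total


def within_size_limit(numstat: str, max_lines: int = 400) -> bool:
    """True when the diff stays at or under the configured line budget."""
    return changed_lines(numstat) <= max_lines
```

In practice you may want to exclude lockfiles and generated code before counting, since they inflate the total without adding review burden.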

AI leverage:

AI handles 80% of repetitive pattern matching: catching secrets, identifying unused code, and flagging complexity. This cuts per-PR review time by 70-80%, letting teams handle 3-4x the PR volume without increasing headcount.

Measuring and Improving Your Process

What to Measure

Flow metrics:

Time-to-first-review: Gap between PR creation and first comment (target: <2 hours)

Time-to-merge: PR open to merge (track by size: <24h for sub-100 lines)

Review iterations: Rounds of feedback before approval (target: <3)

Quality metrics:

Escaped defects: Production bugs that should've been caught (track by severity)

Security findings per PR: Vulnerabilities detected and resolved pre-merge

Test coverage trends: Are new features adequately tested?

Reviewer health:

Load distribution: Are 20% of reviewers handling 60%+ of PRs?

Comment resolution time: How long authors take to address feedback

Approval ratio: PRs approved without changes (80%+ suggests rubber-stamping; <40% suggests overly strict standards)
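The two reviewer-health questions above reduce to simple calculations. This sketch assumes you can export one reviewer name per completed review and a count of PRs approved without changes; the function names and labels are illustrative.

```python
from collections import Counter
from math import ceil


def top_reviewer_share(review_assignments: list[str], top_fraction: float = 0.2) -> float:
    """Share of reviews handled by the busiest `top_fraction` of reviewers."""
    counts = Counter(review_assignments)
    if not counts:
        return 0.0
    top_n = max(1, ceil(len(counts) * top_fraction))
    top_total = sum(n for _, n in counts.most_common(top_n))
    return top_total / len(review_assignments)


def approval_ratio_signal(approved_without_changes: int, total_prs: int) -> str:
    """Classify the approval ratio using the 80% and 40% thresholds above."""
    if total_prs == 0:
        return "no data"
    ratio = approved_without_changes / total_prs
    if ratio >= 0.8:
        return "possible rubber-stamping"
    if ratio < 0.4:
        return "possibly overly strict"
    return "healthy range"
```

A `top_reviewer_share` of 0.6 or more with the default `top_fraction` corresponds to the "20% of reviewers handling 60%+ of PRs" warning sign.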

Using Dashboards for Continuous Improvement

Track trends to identify bottlenecks:

If review cycle time increases 30% in a quarter, drill into which repos are slowest

If the same security vulnerability appears in 15% of PRs, that's a systemic training gap

If certain reviewers are overloaded, rebalance assignments

Data-driven improvements:

High false positive rate on a rule? Adjust threshold or context

Critical issues passing review? Tighten Quality Gates

Repeated complexity violations in one service? Trigger refactor initiative

When to Add AI-Assisted Review

Bitbucket Native Is Sufficient When:

Team under 20 developers

Low PR volume (roughly 10-15 PRs/day or fewer)

Non-regulated industry without strict compliance

Simple codebase with minimal dependencies

AI-Assisted Review Becomes Essential When:

| Scenario | Pain Point | AI Solution |
| --- | --- | --- |
| 100+ developers | Inconsistent quality across reviewers | Baseline standards enforced on every PR |
| 50+ PRs/day | Reviewer burnout, rubber-stamping | 80% time reduction, handles repetitive checks |
| Distributed teams | Cross-timezone delays block merges | 24/7 feedback, developers iterate without waiting |
| Compliance/security requirements | Manual checks miss vulnerabilities | Continuous scanning with SAST, secrets detection |
| Complex codebases | Reviewers lack context for side effects | Full codebase analysis catches ripple effects |

Pragmatic Adoption Path

Week 1: Connect AI tool (e.g., CodeAnt) to Bitbucket; enable on one repo; let AI pre-review all PRs

Week 2-3: Configure Quality Gates to block critical issues; integrate with merge checks

Week 4+: Enable dashboards to track metrics; expand to additional teams

Expected ROI for 100-developer team:

Manual: 30 min/PR × 50 PRs/day = 25 hours/day

With AI: 6 min/PR × 50 PRs/day = 5 hours/day

Savings: 20 hours/day × 5 working days = 100 hours/week, or $150K–$200K annually

Plus: 3x more security issues caught, faster cycle times, consistent quality.
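The arithmetic behind this estimate is easy to rerun with your own numbers. The sketch below reproduces the figures above; the 5-day week and 52-week year are assumptions, and the hourly rate is back-calculated from the quoted dollar range rather than stated in the estimate.

```python
def review_hours_per_day(minutes_per_pr: float, prs_per_day: int) -> float:
    """Total reviewer hours spent per day across all PRs."""
    return minutes_per_pr * prs_per_day / 60


manual = review_hours_per_day(30, 50)      # 25.0 hours/day
with_ai = review_hours_per_day(6, 50)      # 5.0 hours/day
weekly_savings = (manual - with_ai) * 5    # assumes a 5-day work week

# Assumption: 52 working weeks per year. The implied hourly rate is
# derived from the $150K-$200K range, not given in the text.
annual_hours = weekly_savings * 52
implied_rate_low = 150_000 / annual_hours
implied_rate_high = 200_000 / annual_hours
```

Under these assumptions the dollar range corresponds to roughly $29 to $38 per reviewer hour, so teams with higher loaded engineering costs would see a larger figure.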

Conclusion: Ship Better Code, Faster

A good Bitbucket code review is a repeatable system, not a heroic effort. The workflow: authors prepare focused PRs with context; AI pre-review catches security, complexity, and style issues instantly; humans focus on design, correctness, and business logic; iteration happens through inline tasks; merge checks enforce quality before code ships.

Your implementation roadmap:

Week 1: Configure merge checks, deploy PR templates, measure baseline cycle time

Week 2: Add AI pre-review on one high-traffic repo; let it handle repetitive checks

Week 3-4: Compare metrics (cycle time, defect rate, workload); expand to additional teams based on results

Success metrics to track:

Cycle time: 40-60% reduction

Time-to-first-review: <2 hours (vs. 8-12 hours)

Defect escape rate: 50%+ fewer issues reaching production

Security findings: 3-5x more caught pre-merge

Reviewer time savings: 70%+

Ready to transform your Bitbucket workflow? Start your 14-day free trial with CodeAnt AI on a single repo, no credit card required. Most teams see measurable improvements within the first week.