AI Code Review

Dec 17, 2025

How AI Tools Are Redefining Code Review Best Practices

Amartya Jha

Founder & CEO, CodeAnt AI

Code reviews are supposed to catch bugs. Instead, they often catch dust—sitting in queues while reviewers context-switch between their own work and a growing pile of pull requests.

AI-assisted self-review flips this model. Authors get instant, comprehensive feedback before another developer ever sees the code, and peer reviewers engage only when human judgment actually adds value. This guide breaks down when self-review beats peer review, what AI can and cannot handle, and how to implement workflows that ship faster without sacrificing quality.

Why Traditional Peer Review Is Slowing Teams Down

Self-review beats peer review when AI tools provide instant, comprehensive feedback that catches issues before another developer ever sees the code. The traditional model, where every pull request waits in a queue for human review, creates bottlenecks that compound as teams scale.

Peer review is the process where another developer examines code before it merges. It remains valuable for knowledge sharing and catching subtle logic errors. However, the process introduces friction that accumulates across dozens of daily pull requests.

Here's where traditional peer review typically breaks down:

Reviewer availability: Senior engineers juggle review requests with their own feature work

Context-switching costs: Reviewers spend time understanding unfamiliar code before providing feedback

Feedback loops: Back-and-forth comments extend cycle time from hours to days

Rubber-stamping: Overloaded reviewers approve without deep inspection just to clear the queue

AI-assisted self-review changes this dynamic. Authors get immediate feedback while context is fresh, and reviewers only engage when human judgment actually adds value.

What AI Reveals About Code Review Effectiveness

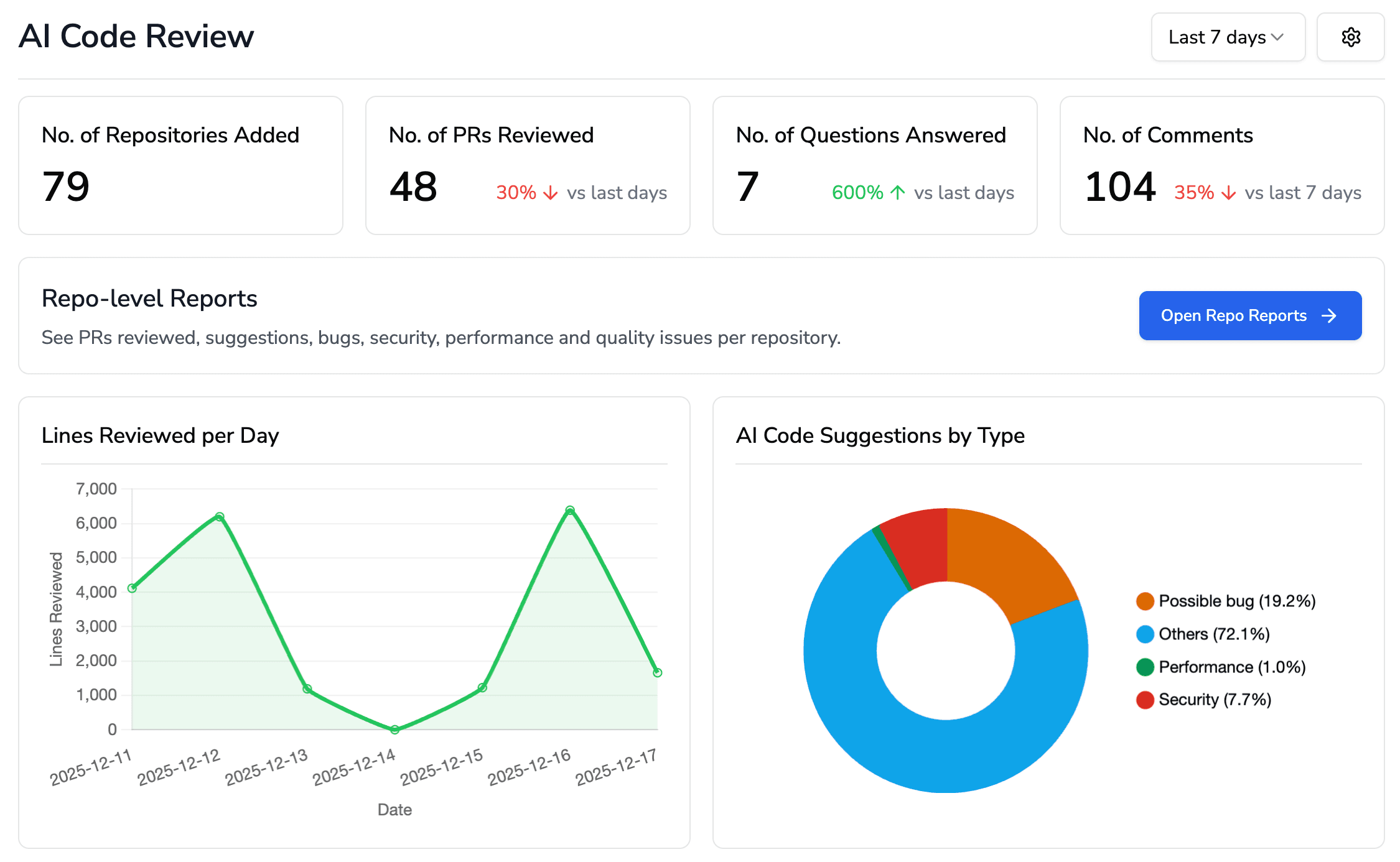

AI code review tools generate data about what actually works in review workflows. By analyzing pull requests at scale, AI tools reveal patterns that challenge long-held assumptions about how teams review code.

How AI code review tools are being adopted

Teams integrate AI-driven review tools directly into CI/CD pipelines to get instant feedback on every PR. The adoption curve has accelerated as large language models (LLMs) improve at understanding code context.

Most implementations follow a similar pattern. The AI reviews code automatically when a PR opens, posts comments inline, and flags issues before human reviewers engage. This pre-screening catches routine problems like style violations, common bugs, and security vulnerabilities. Human reviewers then focus on architecture and business logic.

Which review comments actually lead to code changes

Developers act on specific, actionable comments far more often than vague suggestions. A comment like "This SQL query is vulnerable to injection, use parameterized queries instead" gets implemented. A comment like "Consider improving this code" gets ignored.

AI tools reveal that comment clarity and relevance determine whether feedback gets implemented. The best AI reviewers provide not just what's wrong, but why it matters and how to fix it.
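To make the injection comment above concrete, here is a minimal sketch of the fix it asks for, using Python's standard `sqlite3` module. The `users` table and the function names are hypothetical and exist only for illustration.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is interpolated directly into the SQL text,
    # so a crafted value can change the meaning of the query.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized: the driver binds `name` as data, never as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


def make_demo_db() -> sqlite3.Connection:
    # Hypothetical in-memory table used only to exercise the two functions.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn
```

With the input `x' OR '1'='1`, the unsafe version returns every row in the table, while the parameterized version treats the whole string as a name and returns nothing. A review comment that points at this difference tells the author what is wrong, why it matters, and how to fix it.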

Why speed and quality are not a tradeoff

The assumption that faster reviews sacrifice thoroughness doesn't hold up under scrutiny. AI enables both speed and quality by catching issues instantly while freeing human reviewers for higher-value work.

Teams using AI-assisted review often report catching more bugs while reducing cycle time. The AI doesn't get tired, doesn't rush through reviews on Friday afternoon, and applies the same rigor to the hundredth PR as the first.

When Self-Review Beats Peer Review

The old debate about self-review versus peer review assumed humans work alone. AI changes that assumption entirely.

Self-review is when the author examines their own code, often with AI assistance, before requesting peer review. This approach combines the author's deep context with comprehensive automated analysis.

| Scenario | Self-review (AI-assisted) | Peer review |
| --- | --- | --- |
| Small, low-risk changes | Preferred | Optional |
| Hotfixes and urgent deployments | Faster, safer | Creates delays |
| High test coverage | Sufficient with AI | Redundant |
| Complex architectural changes | Initial pass | Required |

Small and low-risk pull requests

Minor changes like typo fixes, config updates, and dependency bumps don't require senior engineer time. AI catches style and syntax issues instantly, and the blast radius of small changes is minimal.

Routing every small PR through peer review wastes reviewer capacity and trains the team to rubber-stamp approvals.

Hotfixes and time-sensitive deployments

Emergency scenarios demand speed. Waiting for peer review during an outage creates risk that outweighs the benefit of a second pair of eyes.

AI-assisted self-review lets authors validate security and quality before shipping. The AI catches obvious mistakes while the author's urgency and context drive the fix.

Code with strong automated test coverage

When comprehensive tests already validate behavior, AI review adds security and style checks. The combination of high test coverage plus AI analysis often exceeds what a rushed human reviewer would catch.

Changes made by experienced domain experts

Senior engineers working in familiar codebases can self-review effectively with AI backup. They understand the system's quirks, the historical context, and the downstream implications.

Reserve peer review for cross-functional knowledge sharing or when the author explicitly wants another perspective.

What AI Can and Cannot Handle in Code Review

Setting realistic expectations matters. AI is powerful but not a replacement for human judgment on architectural decisions, business logic, or mentorship.

Issues AI catches better than human reviewers

AI excels at pattern recognition across large codebases:

Security vulnerabilities: SQL injection, hardcoded secrets, insecure dependencies

Code style violations: Formatting, naming conventions, linting errors

Common bugs: Null pointer risks, resource leaks, error handling gaps

Duplication and complexity: Repeated code patterns and maintainability issues

Platforms like CodeAnt AI combine AI review with Static Application Security Testing (SAST) for comprehensive coverage. Unlike a human reviewer, the AI does not tire, and it applies the same standards to every file in every PR.
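As a rough illustration of what pattern-based checking looks like, the sketch below uses Python's standard `ast` module to flag two of the issue types listed above: a bare `except:` (an error handling gap) and a string literal assigned to a suspicious-looking name (a possible hardcoded secret). This is a toy under stated assumptions, not how CodeAnt AI or any real SAST tool works. The `SECRET_HINTS` list and the `find_issues` function are hypothetical.

```python
import ast

# Hypothetical name fragments that suggest a variable holds a credential.
SECRET_HINTS = ("password", "secret", "token", "api_key")


def find_issues(source: str) -> list:
    """Return sorted (line, message) pairs for two simple patterns."""
    issues = []
    for node in ast.walk(ast.parse(source)):
        # Error handling gap: `except:` with no exception type.
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            issues.append((node.lineno, "bare except hides errors"))
        # Possible hardcoded secret: a string literal assigned to a
        # variable whose name contains one of the hints.
        if (
            isinstance(node, ast.Assign)
            and isinstance(node.value, ast.Constant)
            and isinstance(node.value.value, str)
        ):
            for target in node.targets:
                if isinstance(target, ast.Name) and any(
                    hint in target.id.lower() for hint in SECRET_HINTS
                ):
                    issues.append(
                        (node.lineno,
                         f"possible hardcoded secret in '{target.id}'")
                    )
    return sorted(issues)
```

Even a check this small behaves the same way on every file it is given. That consistency is the property the list above relies on.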

Where human judgment remains essential

Some decisions require context that AI cannot learn from code alone:

Architectural decisions: System design tradeoffs require understanding business constraints

Business logic validation: Only humans understand domain requirements

Mentorship and knowledge transfer: Junior developers learn from peer feedback

Edge cases and intent: AI may miss subtle requirements the author intended

Humans own the merge button because they understand why code exists, not just what it does.

How to Implement AI-Assisted Self-Review Workflows

Moving from theory to practice requires concrete steps. Here's a playbook teams can adopt immediately.

1. Run AI review in your IDE before opening a PR

Catching issues before pushing code reduces review cycles dramatically. Developers can fix problems while context is fresh, with no waiting for CI to run and no context-switching back to yesterday's code.

CodeAnt AI and similar tools integrate directly into development environments, providing feedback as you write.

2. Enforce automated quality and security gates

Quality gates are automated checks that block merges until criteria are met. Include security scans, test coverage thresholds, and complexity limits in CI pipelines.

Quality gates ensure that self-reviewed code meets organizational standards without requiring human verification of every check.
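A quality gate can be as simple as a function that turns check results into a list of blocking reasons. The sketch below assumes the CI pipeline has already produced a coverage figure, a complexity measure, and a count of security findings. The `CheckResults` fields and the default thresholds (80% coverage, complexity limit of 10) are illustrative assumptions, not recommendations from any specific tool.

```python
from dataclasses import dataclass


@dataclass
class CheckResults:
    coverage_percent: float      # coverage reported by the test run
    max_complexity: int          # highest complexity score in the change
    high_severity_findings: int  # count from the security scan


def gate_failures(results, min_coverage=80.0, complexity_limit=10):
    """Return the reasons a merge should be blocked (empty list = pass)."""
    failures = []
    if results.coverage_percent < min_coverage:
        failures.append("test coverage below threshold")
    if results.max_complexity > complexity_limit:
        failures.append("complexity above limit")
    if results.high_severity_findings > 0:
        failures.append("unresolved high-severity security findings")
    return failures
```

In a pipeline, a script would typically exit with a non-zero status when `gate_failures` returns anything, which is what causes the merge to be blocked.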

3. Define clear criteria for peer review escalation

Not every PR requires human eyes. Create policies based on:

File paths: Changes to critical directories require peer review

Risk level: Security-sensitive code always gets human review

Author experience: Junior developers escalate more frequently

Document escalation criteria so the team applies them consistently.
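One way to document escalation criteria is to encode them, so the policy is applied the same way every time. The sketch below covers the three criteria above. The directory patterns, the line limits, and the `PullRequest` fields are all hypothetical placeholders that a team would replace with its own values.

```python
from dataclasses import dataclass
from fnmatch import fnmatch

# Hypothetical policy values; adjust to your own repository and team.
CRITICAL_PATHS = ("payments/*", "auth/*", "migrations/*")
JUNIOR_LINE_LIMIT = 50
DEFAULT_LINE_LIMIT = 400


@dataclass
class PullRequest:
    changed_files: list
    lines_changed: int
    security_sensitive: bool = False
    author_is_junior: bool = False


def escalation_reasons(pr):
    """Return why a PR needs peer review (empty list = self-review is enough)."""
    reasons = []
    # File paths: any change under a critical directory escalates.
    if any(fnmatch(f, p) for f in pr.changed_files for p in CRITICAL_PATHS):
        reasons.append("touches a critical path")
    # Risk level: security-sensitive code always gets human review.
    if pr.security_sensitive:
        reasons.append("security-sensitive change")
    # Author experience: junior authors escalate at a lower size limit.
    limit = JUNIOR_LINE_LIMIT if pr.author_is_junior else DEFAULT_LINE_LIMIT
    if pr.lines_changed > limit:
        reasons.append(f"change exceeds {limit} lines for this author")
    return reasons
```

Returning the reasons rather than a bare yes or no lets the workflow tell the author why a reviewer was requested.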

4. Track metrics to validate self-review effectiveness

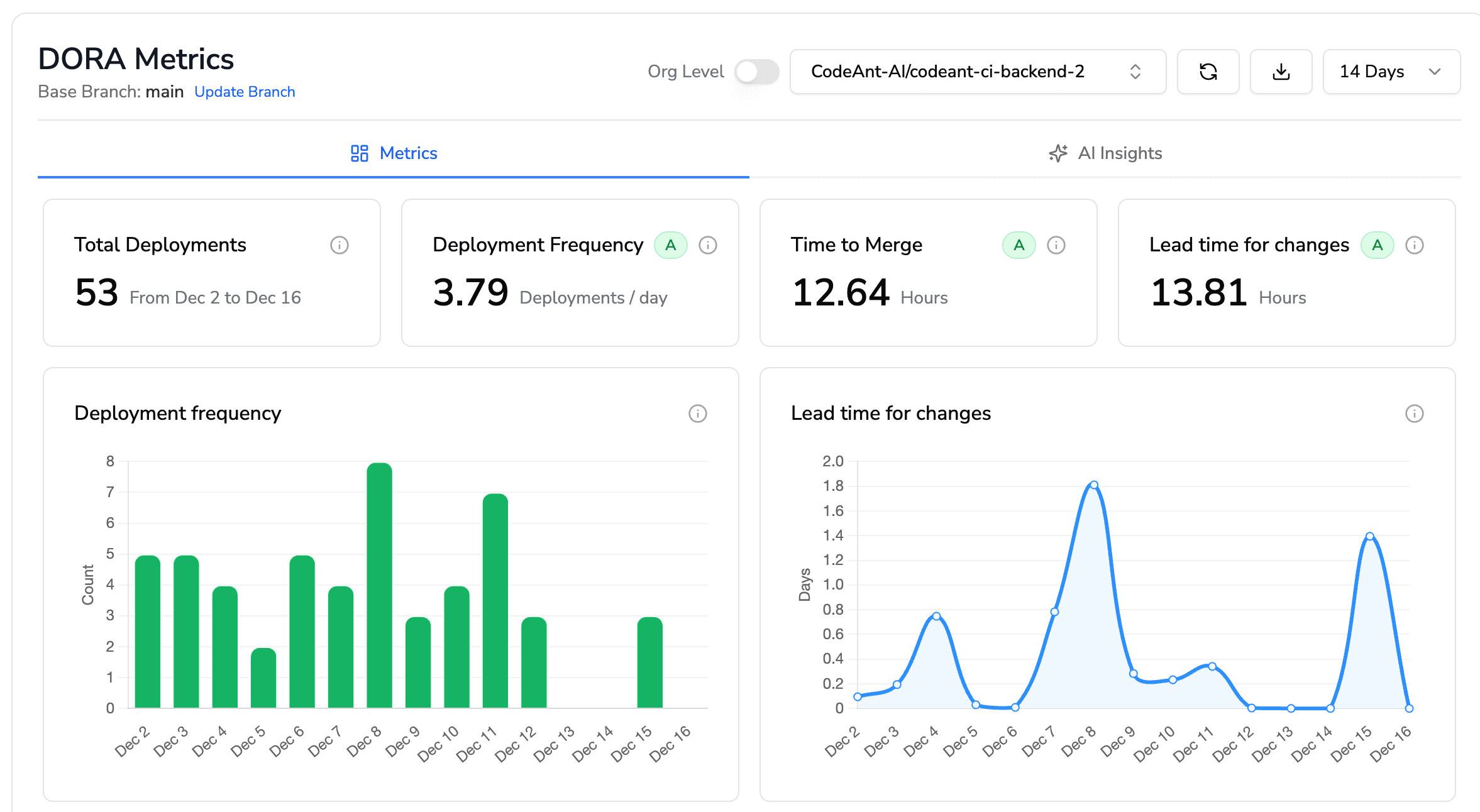

Measure cycle time (how long PRs stay open), defect escape rate (the share of bugs that reach production), and review throughput. DORA metrics like Deployment Frequency, Lead Time for Changes, Change Failure Rate, and Mean Time to Recovery provide a framework for tracking improvement.

Use data to refine your self-review policies over time.
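Two of these measurements are easy to compute from data most teams already have. The sketch below is a minimal example. It assumes cycle time is measured from PR open to merge, and that defect escape rate is defined as escaped defects divided by all defects found. Both definitions are assumptions, so use whichever your team has agreed on.

```python
from datetime import datetime, timedelta
from statistics import median


def median_cycle_time_hours(prs):
    """prs: list of (opened_at, merged_at) datetime pairs."""
    hours = [
        (merged - opened).total_seconds() / 3600 for opened, merged in prs
    ]
    return median(hours)


def defect_escape_rate(escaped_to_production, caught_before_release):
    """Share of all known defects that reached production."""
    total = escaped_to_production + caught_before_release
    return escaped_to_production / total if total else 0.0
```

The median is used instead of the mean so that one long-lived PR does not dominate the cycle-time figure.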

Tip: CodeAnt AI provides dashboards that track review metrics automatically, helping teams identify bottlenecks and validate that self-review maintains quality.

Best Practices for Balancing Self-Review and Peer Review

The goal isn't eliminating peer review. It's using peer review strategically where it adds the most value.

Set risk-based review requirements

Tiered policies work well. Low-risk PRs self-merge with AI approval, while high-risk changes require human sign-off. Define "risk" by factors like code criticality, blast radius, and compliance requirements.

A config file change and a payment processing update shouldn't follow the same review process.

Use AI to triage review priority

AI can flag which PRs require urgent human attention versus routine approval. This helps reviewers focus on what matters instead of processing a queue in order.

CodeAnt AI provides PR summaries and risk assessments automatically, so reviewers know where to spend their time.
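The idea of triaging by risk rather than arrival order can be sketched in a few lines. The scoring weights and the `QueuedPR` fields below are invented for illustration and do not reflect how CodeAnt AI or any other tool computes risk. A real assessment would draw on far more signal.

```python
from dataclasses import dataclass


@dataclass
class QueuedPR:
    number: int                  # hypothetical PR identifier
    lines_changed: int
    touches_critical_code: bool
    open_findings: int           # unresolved issues from automated review


def risk_score(pr):
    # Size contributes up to 5 points, so very large PRs cannot dominate.
    score = min(pr.lines_changed / 100, 5.0)
    # Critical code and open findings raise the score (assumed weights).
    score += 5.0 if pr.touches_critical_code else 0.0
    score += 2.0 * pr.open_findings
    return score


def triage(queue):
    """Return PRs ordered from highest to lowest risk."""
    return sorted(queue, key=risk_score, reverse=True)
```

Reviewers working from the top of this ordering see the riskiest changes first, while low-scoring PRs can follow the routine approval path.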

Preserve peer review for knowledge sharing and mentorship

Peer review isn't just about catching bugs. It spreads knowledge across the team. Use peer review intentionally for onboarding, cross-training, and architectural discussions.

When a junior developer submits code, peer review teaches them patterns and practices. When a senior developer submits code in an unfamiliar area, peer review shares context.

Why Human Judgment Still Matters in AI-Powered Workflows

AI assists but doesn't replace developers. Humans own the merge button because they understand context, intent, and organizational standards that AI cannot learn from code alone.

What humans uniquely provide:

Strategic thinking: Weighing tradeoffs that affect long-term maintainability

Contextual awareness: Understanding why code exists, not just what it does

Accountability: Developers remain responsible for what they ship

Continuous learning: Teams improve through collaborative review conversations

The best workflows combine AI's speed and consistency with human judgment and creativity.

How to Ship Faster Without Sacrificing Code Quality

Modern code review workflows combine AI-assisted self-review with strategic peer review. Teams that adopt this approach reduce bottlenecks while maintaining or improving quality and security.

The shift requires rethinking old assumptions. Not every PR requires peer review. Self-review with AI assistance often catches more issues than a rushed human reviewer. And reserving peer review for high-value scenarios makes it more effective, not less.

Ready to modernize your code review workflow? CodeAnt AI brings AI-driven review, security scanning, and quality metrics into a single platform. Book your 1:1 with our experts today!