AI Code Review

Sep 30, 2025

The Code Review Challenge on Azure DevOps (and How AI Fixes It)

Sonali Sood

Founding GTM, CodeAnt AI

If you ship on Azure DevOps and live in VS Code, you know the real slowdown isn’t writing code; it’s waiting on code reviews. Hours turn into days, momentum fades, and everyone’s watching the queue instead of the roadmap. Here’s what that looks like on the ground:

A big chunk of the cycle sits idle while PRs wait for eyes.

Context goes stale, small fixes balloon, and releases slip.

Under load, critical quality and security checks get missed.

False positives and LoC-based licensing distract from actual risk and value.

AI code reviews flip that loop. Instead of queuing for feedback, developers get:

Context-aware guidance at commit or PR open, covering logic errors, security risks, and performance issues, so fixes land immediately, not days later.

Leaders gain visibility into review velocity, lead time, and risk hotspots, turning guesswork into decisions.

That’s where CodeAnt.ai slots in for Microsoft-centric teams. It sits right inside Azure DevOps and VS Code, unifying AI code review, quality analysis, and security scanning so you don’t juggle five tools to get one answer. You keep your workflows, policies, and pipelines; CodeAnt.ai adds the brains, the guardrails, and the proof.

So why do reviews still feel slow and brittle in Microsoft-first teams? Let’s unpack where the native flow breaks.

Why Traditional Code Reviews Fall Short

Microsoft’s developer tools (Azure DevOps, Visual Studio, VS Code) offer solid foundations: pull requests, branch policies, inline comments, and required sign-offs. But these features stop at the basics. As teams grow, common gaps appear:

1. Superficial Analysis

Out of the box, Azure DevOps doesn’t perform deep static code analysis or advanced linting. It won’t flag hidden bugs, performance issues, or security vulnerabilities on its own.

2. Manual Bottlenecks

Every comment, link, or approval is done by people. Reviewers get overloaded, PRs stagnate, and momentum dies. Distributed teams suffer even more from time zone delays. (FYI, GitHub code reviews often wait 2–4 days for feedback.)

3. Siloed Tooling

Dev teams often juggle 4 to 5 different products for reviews, tests, security scans, and metrics. This fragmentation means inefficiencies, integration headaches, and hidden costs.

4. Limited Metrics

Built-in tools lack advanced analytics. Teams can’t easily see DORA metrics (lead time, deployment frequency, etc.) or developer-level insights across repositories without extra tooling.

In short, “doing things by hand” in Azure DevOps or VS Code becomes unsustainable at scale. Slower reviews, untracked quality slips, and compliance gaps erode velocity and increase risk. This is where AI changes the game.

AI-Powered Code Reviews: How They Work

AI code review tools transform pull requests from wait-stations into intelligent, automated processes. Instead of relying solely on static rules and human attention, they understand code context.

In practice, an AI assistant will “glance over a pull request and, within seconds, summarize the changes, highlight bugs, point out anti-patterns, point to security risks, and even suggest fixes”. This blend of static code analysis and learned reasoning means the feedback feels like a tireless teammate who never gets fatigued.

Key benefits of AI-driven code reviews include:

1. Instant Feedback

AI code review tools can deliver near-instant analysis of a new PR or commit. Developers get guidance the moment code is pushed, rather than hours or days later. This cuts the “time to first review” dramatically.

2. Automated Bug and Vulnerability Detection

AI scans instantly for logic errors, edge cases, security flaws (e.g. SQL injection, XSS, secret leaks), and even performance bottlenecks. Common patterns and critical issues are caught automatically, eliminating many rounds of manual fix-ups.

3. Consistency and Coverage

Unlike humans, whose focus varies, AI code review tools apply uniform standards to every review. They can also check code that busy reviewers might skip. Organizations using AI see fewer bugs reaching production (often 50 to 75% fewer) because the AI never overlooks the same issue twice.

4. “Always-On” Productivity

AI reviewers work 24/7. Teams don’t have to wait for colleagues in other time zones to unblock a PR; the AI co-worker is always “online”. Distributed teams unblock faster, increasing throughput.

5. Developer Focus

With AI handling repetitive checks, engineers free up cognitive bandwidth for creative tasks. They’re less burdened by trivial style debates and can focus on architecture or user-value changes.
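To make the vulnerability class from item 2 concrete, here is a minimal sketch of the kind of SQL injection pattern a reviewer (human or AI) would be expected to flag, next to the parameterized fix. The table, function names, and data are hypothetical and exist only for illustration; this is not CodeAnt.ai output.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "dev")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is concatenated into the SQL text,
    # so crafted input can change the query's logic.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Fixed: the value is bound as a parameter and treated as data, not SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_db()
payload = "x' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
print(len(find_user_safe(conn, payload)))    # 0: no user has that name
```

The one-line difference between the two functions is exactly the sort of change that is easy to miss in a busy review queue and cheap to fix once it is pointed out.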

In short, AI code review tools supercharge productivity. One recent field study found an AI-assisted workflow delivered a 31.8% reduction in PR review cycle time and drove 85% developer satisfaction with code review features. Such gains translate to faster merges, fewer hotfixes, and happier developers.

Why CodeAnt.ai Stands Out

Many platforms promise AI code review, but CodeAnt.ai was built specifically for high-velocity engineering teams that need both code quality and security. Here’s what makes CodeAnt.ai unique:

1. Full-Code Coverage (New and Legacy)

CodeAnt.ai continuously scans every line of code, not just freshly opened PRs. It analyzes new commits and existing branches across all repos, ensuring legacy tech debt and hidden issues are flagged. This dual focus means even long-untouched modules get safety and quality checks.

2. Context-Aware Analysis

Unlike simple linters, CodeAnt’s AI “reads” code with intent awareness. It learns your team’s patterns, coding styles, and architecture decisions. This context yields actionable insights. For example, it can spot that a code change violates your company’s own naming conventions, or flag an anti-pattern relevant to your stack. By contrast, generic tools lack this project-specific intuition.

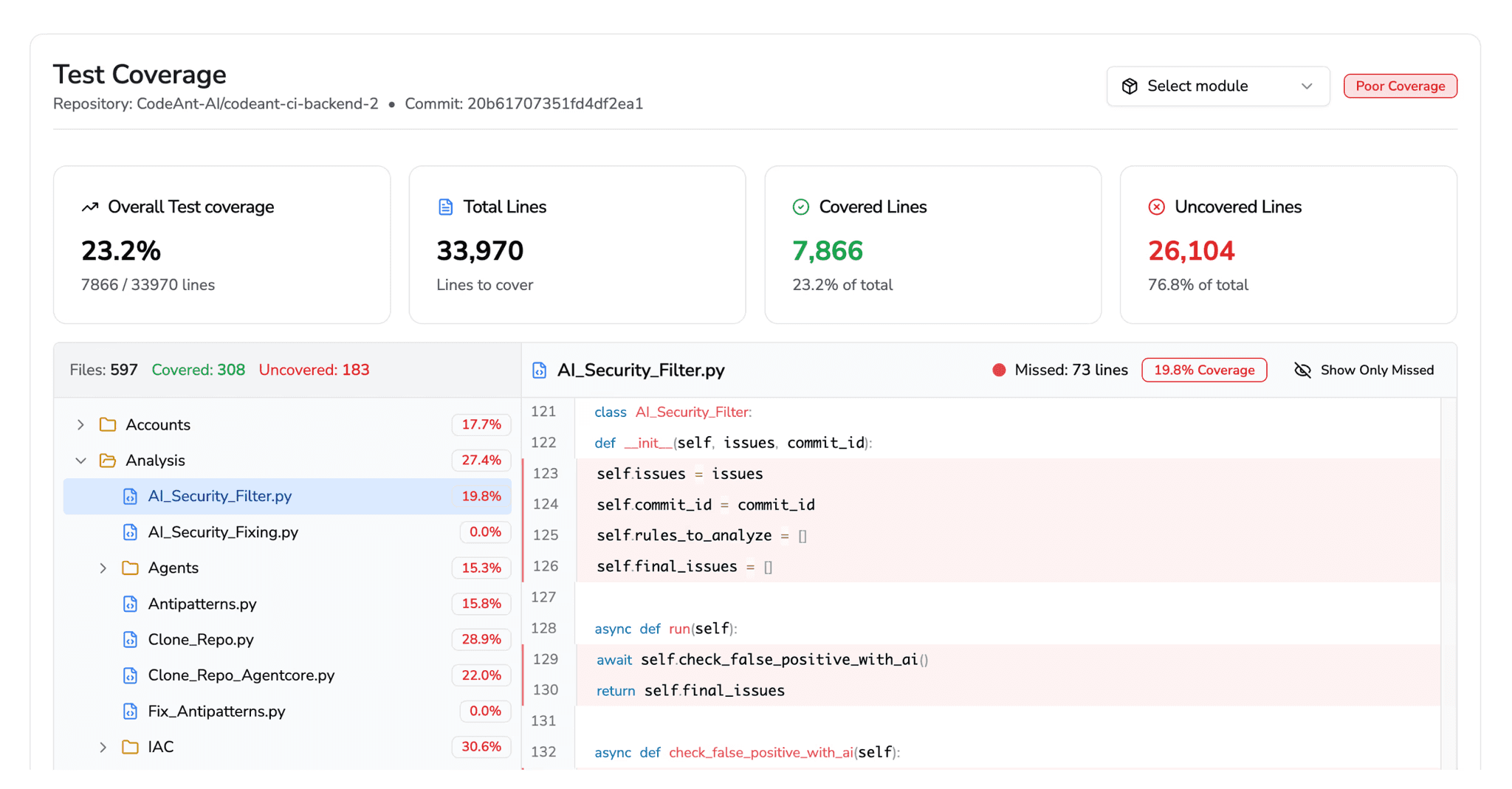

3. Comprehensive Quality & Security

CodeAnt.ai combines multiple disciplines in one tool. It performs deep static code analysis (catching complex bugs, code smells, duplicate code, dead code, cyclomatic complexity, etc.); security scanning (SAST, secret detection, IaC misconfig checks, SCA dependency analysis); test coverage gating; and even DORA & developer metrics. No need to stitch together separate linters, SAST tools, or analytics platforms.

4. Inline, Intelligent Fixes

Instead of merely flagging issues, CodeAnt.ai provides inline suggestions and fixes right in the PR or IDE. Engineers see clear diffs of recommended changes and can apply them with one click. This “auto-fix” capability turns tedious review comments into fast code edits. In fact, teams using CodeAnt.ai report hundreds of thousands of issues auto-fixed, shrinking cleanup overhead.

5. Adaptive Learning

The AI gets smarter over time. By learning from your manual reviews, comment resolutions, and accepted fixes, CodeAnt.ai fine-tunes its feedback to match your standards and risk tolerance. It’s like training a new team member: the more you use it, the better it aligns with your workflows.

6. Developer & Leader Insights

Beyond “pass/fail” code checks, CodeAnt.ai provides engineering leaders with a 360° view of performance. It tracks DORA metrics (lead time, deployment frequency, etc.), PR sizes, review velocities, and even per-developer stats (commits, comment turnaround, review load). Armed with this data, managers can identify bottlenecks, balance workloads, and prove ROI.

7. Scalability & Compliance

CodeAnt.ai is enterprise-grade. It’s SOC 2 compliant, offers on-prem/private-cloud deployment options, and never retains your code (zero data storage). It enforces quality/security gates automatically (blocking merges that fail policy) and generates audit-ready reports on-demand. All of this makes it suitable for regulated industries (finance, healthcare, etc.).

Crucially for Microsoft-centric shops, CodeAnt.ai is designed to “feel native.” Teams don’t leave Azure DevOps or VS Code. Instead, CodeAnt.ai lives where developers do:

1. Azure DevOps Integration

CodeAnt.ai has an official Azure DevOps extension (Azure Marketplace). It brings AI-powered, line-by-line reviews directly into your DevOps pipeline. Every pull request is auto-scanned for quality bugs, security risks, and code style before any merge. Policies and boards remain the same; CodeAnt.ai simply inserts its feedback inline.

For example, Bajaj Finserv now performs AI reviews “inside Azure DevOps” and has completely replaced SonarQube with CodeAnt.ai, taking PR reviews from hours to seconds. (Check out the Bajaj Finserv Health use case.) Pipelines and permissions didn’t change; CodeAnt.ai “runs at flat per-developer pricing, all inside Azure DevOps”.

Related links:

https://www.youtube.com/watch?v=-afSL2IC4vg

https://www.youtube.com/watch?v=FuyzaosFllA

https://www.youtube.com/watch?v=xrf_7HBxPa8

https://www.youtube.com/watch?v=HAE54s5qsTw

2. VS Code Extension

CodeAnt.ai also offers a VS Code extension. Developers can run CodeAnt.ai on local commits before pushing. In VS Code, CodeAnt.ai auto-reviews your changes as you commit or on demand via a side panel. It then surfaces any issues with diffs and fix suggestions.

This lets teams catch and fix problems even before opening a PR, leading to cleaner pull requests. Importantly, the extension’s workflow is non-blocking (analysis runs asynchronously in 2–3 minutes), so devs keep coding while the review runs.

A typical “With CodeAnt.ai” workflow replaces the multi-step “write, push, fail CI, fix, push again, review, fix again” loop.

3. GitHub/GitLab

Although GitHub and GitLab are not exclusively Microsoft tools, CodeAnt.ai integrates with them as well. For organizations using GitHub (with VS Code or Enterprise), CodeAnt.ai brings the same AI review capabilities into GitHub pull requests. The principles are identical: all workflows are supported, with no new interfaces to learn.

This deep integration means Microsoft developers get AI code reviews without context switching. There are no extra dashboards; the comments and summaries appear where you already work (Azure DevOps PRs or VS Code).

Yet behind the scenes, CodeAnt.ai continuously analyzes code, enforces code quality gates, and feeds metrics back to managers.

Measurable Outcomes: Speed, Quality, and ROI

CodeAnt’s AI assistance translates directly into hard numbers that you care about. In field use, teams see radical improvements in velocity and quality. For example, CodeAnt’s VS Code users report:

50% faster code review cycles on average. Pull requests merge twice as quickly when AI feedback is baked in early.

75% fewer bugs reaching production, as most issues are caught pre-merge.

90% drop in “fix PR comments” commits, since many issues are fixed before PR creation.

Ultimately, what drives impact is tying the tool back to business goals.

Shorter review cycles mean faster feature release and higher throughput.

Fewer defects and security gaps mean lower incident costs.

Better team insights mean less developer burnout and lower turnover (developers frustrated by review delays were found to be 2.5x more likely to look for a new job).

CodeAnt.ai customers routinely report not just technical wins but also improved team morale and confidence in their delivery pipeline.

Code Security and Compliance: Shoring Up Defenses

Security shouldn’t sit outside the review loop. With CodeAnt.ai, vulnerability checks run alongside style and quality so risky changes are caught where they happen: at the PR, in VS Code, and inside Azure DevOps.

Built-in security, not bolt-on: Real-time SAST on every PR (OWASP Top 10 issues such as SQLi/XSS), plus SCA for dependency risks and IaC checks (Terraform/CloudFormation) to catch misconfigs early.

Your standards, enforced: Define plain-English secure-coding rules once; CodeAnt.ai applies them automatically on every PR, and can block merges on critical issues.

Compliance made practical: Aligns with ISO 27001/SOC 2/NIST practices and produces audit-ready PDFs/CSVs of findings across repos, no last-minute fire drills.

Because analysis is toolchain-agnostic, legacy and new code live under the same guardrails. The net effect: immediate, inline feedback without extra handoffs, fewer production incidents, and a genuine shift-left posture.

How These Guardrails Plug Into Your Azure DevOps / VS Code Flow (Without Friction)

The goal isn’t “another tool.” It’s the same workflow, smarter guardrails.

In Azure DevOps (ADO Repos / Pipelines)

You keep your PR rituals: branch policies, required reviewers, status checks.

Drop-in setup: Install the CodeAnt.ai extension, connect your org, and scope repos/projects. No repo rewiring.

Runs as a PR status check: Every pull request triggers CodeAnt.ai automatically. You’ll see:

a summary comment (what changed, what’s risky, suggested fixes),

inline annotations on exact lines,

a pass/fail gate tied to your severity thresholds (e.g., block on High/Critical).

Lives inside branch policies: Treat CodeAnt.ai like tests and make its check required before merge. You can set:

quality/security gates (SAST, secrets, IaC),

coverage thresholds, duplication/complexity limits,

custom rules you wrote in plain English.

No pipeline slowdown: Analysis runs asynchronously and incrementally; developers aren’t stuck waiting on a full CI roundtrip to get signal.

Audit on demand: Export findings (per PR or across repos) as PDFs/CSVs when compliance asks, no extra sprint needed.
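The pass/fail gate described above can be pictured as a simple threshold check over findings. The sketch below is an illustration only: the `Finding` type, the severity names, and `gate_passes` are hypothetical and do not represent CodeAnt.ai’s API or configuration format.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Finding:
    rule: str            # hypothetical rule identifier
    severity: Severity
    path: str
    line: int


def blocking_findings(findings: list[Finding], block_at: Severity) -> list[Finding]:
    """Return the findings at or above the blocking severity."""
    return [f for f in findings if f.severity >= block_at]


def gate_passes(findings: list[Finding], block_at: Severity = Severity.HIGH) -> bool:
    """The gate passes only when nothing reaches the blocking severity."""
    return not blocking_findings(findings, block_at)


# Hypothetical findings for a single pull request.
findings = [
    Finding("hardcoded-secret", Severity.CRITICAL, "app/config.py", 12),
    Finding("long-function", Severity.LOW, "app/views.py", 88),
]

print(gate_passes(findings))       # False: the CRITICAL finding blocks the merge
print(gate_passes(findings[1:]))   # True: only a LOW finding remains
```

Keeping the threshold as a parameter mirrors the idea that the gate reflects a team’s own risk appetite: a stricter team could pass `Severity.MEDIUM` instead.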

In VS Code (developer inner loop)

Catch issues before the PR so reviewers focus on substance.

One-time install: Add the CodeAnt VS Code extension and sign in.

On-save / on-commit analysis: Review staged changes locally; see inline diagnostics and code actions to auto-apply fixes.

Diff-first workflow: Open suggested patches as diffs, accept in one click, and commit once, clean PRs by default.

Team conventions enforced: Your org’s policies (naming, security patterns, approved libraries) apply locally, so “works on my machine” actually matches “works for our standards.”

What doesn’t change

Your PR templates, approvals, and release pipelines.

Your repo structure and permissions.

How reviewers comment, only now they start from a higher baseline.

What does change

Signal shows up immediately where devs work (ADO + VS Code).

Low-value back-and-forth disappears; fixes land in one click.

Gates reflect your risk appetite, and prove it with exportable evidence.

Code Review Best Practices & Practical Steps

Making the most of AI code reviews means adopting smart workflows:

1. Small, Focused PRs

As always, keep pull requests small. AI excels when it can analyze changes quickly (many customers find <30-minute PRs ideal). Use CodeAnt.ai to validate each small PR fast rather than blasting huge changes at once.

2. Embed in Pipelines

Activate CodeAnt.ai in your CI/CD. For Azure Repos, install the CodeAnt extension in your project and add it to your PR pipeline. For GitHub, add the CodeAnt GitHub Action or integrate via settings. This ensures every pull request triggers an AI review.

3. Use the IDE Extension

Encourage developers to install the CodeAnt.ai VS Code extension. This creates a friendly feedback loop: code gets reviewed pre-commit so PRs arrive nearly clean. The side-panel UI is intuitive (issues listed with diffs), and fixes apply with a click.

4. Customize Rules

Spend time configuring any custom linting, security, or style rules your org needs (CodeAnt.ai supports plain-English policy definitions). This ensures the AI’s feedback is aligned with your standards.

5. Review and Learn

Use CodeAnt’s summary reports and dashboards to coach the team. If certain anti-patterns appear repeatedly, arrange training or adjust the policy. The goal is continuous improvement: the AI highlights systemic issues so you can fix them, not just one-off mistakes.

6. Track Metrics

Finally, let data guide you. Monitor CodeAnt’s DORA and performance metrics to see where reviews are slow or unstable. For example, if lead time is high, check whether PR review time is the bottleneck. Then adjust by adding more reviewers or refining policies. CodeAnt.ai makes these metrics visible so you can act on them and keep improving.
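For readers who want to see what sits behind two of the DORA metrics named above, here is a rough sketch that derives lead time for changes and deployment frequency from commit and deploy timestamps. The `Change` record and the sample data are hypothetical; in practice these timestamps would come from your repository and pipeline history, and this is not how CodeAnt.ai necessarily computes them.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass(frozen=True)
class Change:
    committed_at: datetime   # when the change was committed
    deployed_at: datetime    # when it reached production


def median_lead_time(changes: list[Change]) -> timedelta:
    """Median time from commit to production deploy."""
    return median(c.deployed_at - c.committed_at for c in changes)


def deployments_per_week(changes: list[Change], window_days: int) -> float:
    """Distinct deploys per week over an observation window."""
    deploys = {c.deployed_at for c in changes}
    return len(deploys) / (window_days / 7)


# Hypothetical two-week sample.
changes = [
    Change(datetime(2025, 9, 1, 9), datetime(2025, 9, 1, 17)),   # 8 hours
    Change(datetime(2025, 9, 2, 10), datetime(2025, 9, 4, 10)),  # 48 hours
    Change(datetime(2025, 9, 8, 9), datetime(2025, 9, 9, 9)),    # 24 hours
]

print(median_lead_time(changes))            # 1 day, 0:00:00
print(deployments_per_week(changes, 14))    # 1.5
```

The median is used rather than the mean so that one unusually slow change does not dominate the picture.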

By following these steps you can tie CodeAnt.ai usage to business outcomes: shorter release cycles, higher uptime, and stronger compliance postures.

Read our code review best practices guide here.

Conclusion: Faster, Safer Code Review Inside Your Microsoft Stack

AI code review is fast becoming standard practice for Microsoft-first teams on Azure DevOps and VS Code. CodeAnt AI brings context-aware reviews, quality checks, and security scanning into the tools your engineers already use. Reviews stop being a queue and start becoming leverage. You get DORA-aligned metrics, predictable per-developer pricing, and audit-ready evidence without adding new friction.

What changes when you run CodeAnt AI

Faster merges with instant, in-context feedback at PR open

Fewer escaped defects through real-time SAST, SCA, and IaC checks

Clear visibility into review velocity, lead time, and risk hot spots

Practical compliance with exportable ISO 27001 and SOC 2 artifacts

Less tool sprawl with one platform for AI code review, quality, and security

Your next step

Baseline PR cycle time and escaped defects today, compare after two sprints

If results match your targets, expand to critical services and make the gate permanent

Book a walkthrough with our sales team and see your own PRs reviewed by CodeAnt.ai, or enable the Azure DevOps and VS Code integrations and run a pilot this week
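The baseline-and-compare step can be as simple as the arithmetic below. This is a sketch with made-up numbers: the sample cycle times (in hours) are hypothetical, and the median is just one reasonable choice of summary statistic.

```python
from statistics import median


def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent (negative means a reduction)."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


# Hypothetical PR cycle times in hours, one value per merged PR.
baseline_hours = [30.0, 42.0, 18.0, 55.0, 26.0]   # before the pilot
pilot_hours = [14.0, 21.0, 12.0, 36.0, 25.0]      # after two sprints

before = median(baseline_hours)   # 30.0
after = median(pilot_hours)       # 21.0
print(f"{percent_change(before, after):.1f}%")    # -30.0%
```

The same function works for escaped defects: count them per sprint before and after, then compare.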

Ship faster. Reduce risk. Show the numbers.