Everything’s green. You’ve written clean code, linted it, pushed to CI, all tests pass, and the PR gets approved. A few days later you get a message: “We found a vulnerability in the new form input. It’s letting users inject scripts.”

At first, it's frustrating. Then it's confusing. You start digging through your code. Turns out, the issue was in how the user input was being rendered: no escaping, no validation. The kind of thing a good reviewer might catch, but not always, especially on a busy day.

Now the question hits you: why didn't the tools catch this?

This is where static code analysis, especially Static Application Security Testing (SAST), comes in. Yet many devs don’t use SAST properly. Some have never tried it. Others gave up after floods of false positives. That's fair. The way most static analyzers are built doesn't really help developers.

In this post you'll learn:

What SAST is (and isn't)

How it works under the hood (no jargon, we promise)

What makes most SAST tools annoying

How to make it work for you, not against you

Let's start with the basics.

What is SAST?

So now you're probably thinking: "Alright, sounds like SAST could've caught that bug. But what even is it?"

Let's clear that up, without sounding like an OWASP dictionary. SAST (Static Application Security Testing) is exactly what it sounds like: analyzing your application's source code without running it. No browser. No test server. No live app. Just your raw code, scanned for potential vulnerabilities.

So where does it fit in your workflow? Vendors will throw words at you like "secure SDLC," "left-shifted analysis," or "security-first architecture," and none of that helps you as a developer trying to ship code. Here's a better way to think about it:

SAST is like having a paranoid code reviewer sitting inside your IDE. Instead of asking "will this code run?", it's asking "can this code break?"

It's scanning through functions, variables, and inputs, and trying to figure out if anything you wrote could be dangerous if the wrong kind of data flows through it. That includes common vulnerabilities like:

Cross-site scripting (XSS)

Insecure file access

Hardcoded secrets

And plenty more

But unlike a linter (which mostly cares about style and syntax) or tests (which care about behavior), static code analysis tools dig into how data flows through your code, from input to sink. And because SAST doesn't run your code, it can work early, even while you're still writing that feature. That's part of what people mean when they say "shift left."

Now, to clarify one more thing, because this gets confused a lot:

SAST is not the same as SCA

Another tool you might've heard about is SCA (Software Composition Analysis). That one checks your dependencies (package.json, requirements.txt, pom.xml, whatever) for known vulnerabilities in open-source libraries. It's useful. It's important. But it's not the same thing as SAST: SCA looks at other people's code, while SAST looks at your code.

Here's the takeaway so far:

Static code analysis (via SAST) helps catch security flaws in your logic

It does this early, without running your app

It's not a replacement for tests or dependency scanners; it's a different layer of protection

Now that we've cleared that up, the next logical question is: “Okay, but how does it work under the hood?”

Let's go there.

How Static Code Analysis Works (For Real)

Most people throw around "SAST" without really understanding how it works. And most articles explaining it are... not great. They either oversimplify or overwhelm. This section won't do either. If you're a developer, you'll walk away knowing what static analysis tools do under the hood, so you can trust them, debug them, or even question them.

Let's see.

1. First, It Turns Your Code Into a Tree

When you write this line of code:

```javascript
res.send(req.query.name)
```

It seems simple. But a SAST tool doesn't "read" your code like a human. It parses it, meaning it breaks it down into its underlying structure, known as an Abstract Syntax Tree or AST.

Here's what that means: The above line becomes a tree-like structure:

```
CallExpression
├── callee: MemberExpression
│   ├── object: res
│   └── property: send
└── arguments:
    └── MemberExpression
        ├── object: MemberExpression
        │   ├── object: req
        │   └── property: query
        └── property: name
```

Why? Because the tool isn't interested in how the code looks, only what it does. It wants to understand:

Who is calling what

What data is being passed

Where that data originally came from

This is step one. No security analysis yet, just turning code into a format the machine can reason with.
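To make "who is calling what" concrete, here's a minimal sketch. A real tool gets the tree from a parser (for JavaScript, parsers such as Acorn produce ESTree-style trees like this one); to keep the example self-contained, only the expression part of the tree for res.send(req.query.name) is written out by hand, and memberPath and describeCall are hypothetical helpers, not part of any library.

```javascript
// Hand-written ESTree-style AST for: res.send(req.query.name)
// (a real tool would get this from a parser instead of writing it out)
const ast = {
  type: "CallExpression",
  callee: {
    type: "MemberExpression",
    object: { type: "Identifier", name: "res" },
    property: { type: "Identifier", name: "send" },
  },
  arguments: [
    {
      type: "MemberExpression",
      object: {
        type: "MemberExpression",
        object: { type: "Identifier", name: "req" },
        property: { type: "Identifier", name: "query" },
      },
      property: { type: "Identifier", name: "name" },
    },
  ],
};

// Flatten a chain of MemberExpressions back into a dotted path, e.g. "req.query.name".
function memberPath(node) {
  if (node.type === "Identifier") return node.name;
  if (node.type === "MemberExpression") {
    return memberPath(node.object) + "." + memberPath(node.property);
  }
  return "<" + node.type + ">"; // anything more complex is left opaque in this sketch
}

// Answer the questions from the text: who is being called, and with what data?
function describeCall(node) {
  if (node.type !== "CallExpression") return null;
  return {
    callee: memberPath(node.callee),
    args: node.arguments.map(memberPath),
  };
}

const info = describeCall(ast);
// info.callee === "res.send", info.args[0] === "req.query.name"
```

Nothing here is security-specific yet; the point is that once code is a tree, "which function receives which data" becomes a simple traversal.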

2. Then It Tracks Tainted Data (That's you, req.query)

Once the code is parsed, static analyzers look for data coming from outside the app. These are called tainted sources, meaning untrusted.

Examples of tainted sources:

Express: req.body, req.query, req.params

React: event.target.value

Python Flask: request.args, request.form

PHP: $_GET, $_POST

This is where things get smart. Let's say you do this:

```javascript
const name = req.query.name;
res.send("<h1>" + name + "</h1>");
```

The tool sees:

✅ name comes from user input

✅ it flows into res.send(), a rendering sink

❌ and there's no escaping or sanitization

So even though you just see two simple lines of code, the tool simulates the path:

User Input → Variable → HTML Output → Not Sanitized → ⚠️ Potential XSS
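Here's a toy version of that path-tracing, under heavy simplifying assumptions: the program has already been lowered into a list of simple statements (a made-up format, not any real tool's internal representation, with the string concatenation split into its own step), sources and sinks are plain strings, and taint spreads through assignment and concatenation.

```javascript
// Known untrusted inputs and dangerous outputs (a tiny, illustrative subset).
const SOURCES = new Set(["req.query", "req.body", "req.params"]);
const SINKS = new Set(["res.send"]);

// Hypothetical pre-simplified statements:
//   { kind: "assign", target, from: [operands] }  target = op1 + op2 + ...
//   { kind: "call", callee, args: [operands] }
// Operands are variable names, source paths like "req.query.name", or string literals.
const program = [
  { kind: "assign", target: "name", from: ["req.query.name"] },
  { kind: "assign", target: "html", from: ['"<h1>"', "name", '"</h1>"'] },
  { kind: "call", callee: "res.send", args: ["html"] },
];

// "req.query.name" counts as a source because it starts with "req.query".
function isSource(operand) {
  return [...SOURCES].some((s) => operand === s || operand.startsWith(s + "."));
}

function findTaintedFlows(statements) {
  const tainted = new Set(); // variables currently holding untrusted data
  const findings = [];
  const isTainted = (op) => isSource(op) || tainted.has(op);

  for (const stmt of statements) {
    if (stmt.kind === "assign") {
      // Concatenating anything tainted produces a tainted result.
      if (stmt.from.some(isTainted)) tainted.add(stmt.target);
      else tainted.delete(stmt.target); // overwritten with clean data
    } else if (stmt.kind === "call" && SINKS.has(stmt.callee)) {
      const bad = stmt.args.filter(isTainted);
      if (bad.length > 0) findings.push({ sink: stmt.callee, args: bad });
    }
  }
  return findings;
}

const findings = findTaintedFlows(program);
// findings → [{ sink: "res.send", args: ["html"] }], i.e. a potential XSS
```

Real analyzers work on far richer representations (branches, loops, object fields, function calls), but the core loop is the same idea: mark what's untrusted, propagate the mark, and report when it reaches a sink.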

3. Then It Checks: "Is This Dangerous?"

Now, not all user input is bad. Sometimes it's handled safely. Good SAST tools don't just scream every time they see req.query. They check what happens to the input. Take this:

```javascript
const name = req.query.name;
const safeName = sanitizeHtml(name);
res.send("<h1>" + safeName + "</h1>");
```

Here, the tainted data is interrupted by a sanitizer function. So the tool should not flag this. But that depends on whether the tool knows sanitizeHtml() is safe. Which leads to the next point...
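A sketch of that dependency, again using a made-up statement format: to the tool, a sanitizer is simply a call whose result it has been told to treat as clean. The sanitizer list and the in-house helper name escapeForTemplate are hypothetical. Notice that a perfectly good custom helper still gets flagged until the tool is taught about it.

```javascript
// Illustrative tool configuration: which calls count as sanitizers.
const KNOWN_SANITIZERS = new Set(["sanitizeHtml"]);

// Hypothetical simplified statements:
//   { kind: "source", target }              target = user input
//   { kind: "call", target, callee, arg }   target = callee(arg)
//   { kind: "sink", arg }                   res.send(... arg ...)
function flagsXss(statements, sanitizers = KNOWN_SANITIZERS) {
  const tainted = new Set();
  for (const s of statements) {
    if (s.kind === "source") tainted.add(s.target);
    if (s.kind === "call") {
      // A known sanitizer cuts the taint; any other call passes it through.
      if (tainted.has(s.arg) && !sanitizers.has(s.callee)) tainted.add(s.target);
      else tainted.delete(s.target);
    }
    if (s.kind === "sink" && tainted.has(s.arg)) return true;
  }
  return false;
}

const withSanitizer = [
  { kind: "source", target: "name" },
  { kind: "call", target: "safeName", callee: "sanitizeHtml", arg: "name" },
  { kind: "sink", arg: "safeName" },
];
// Same shape, but with a (hypothetical) in-house helper the tool has never heard of.
const withCustomHelper = [
  { kind: "source", target: "name" },
  { kind: "call", target: "safeName", callee: "escapeForTemplate", arg: "name" },
  { kind: "sink", arg: "safeName" },
];

const results = {
  knownSanitizer: flagsXss(withSanitizer), // false: flow cut by sanitizeHtml
  unknownHelper: flagsXss(withCustomHelper), // true: flagged until the tool is taught
  taughtHelper: flagsXss(withCustomHelper, new Set(["sanitizeHtml", "escapeForTemplate"])), // false
};
```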

4. Good SAST Tools Understand Context. Bad Ones Don't.

Here's the difference between a good SAST tool and a noisy one.

Bad SAST:

Flags every eval(), even in test code

Can't track variables across files

Doesn't know your sanitizers

Misses real bugs in favor of loud ones

Good SAST:

Follows data flow across function calls and file boundaries

Understands sanitization logic (even if it's custom)

Prioritizes risky flows over harmless ones

Gives meaningful, fixable alerts — not spam

This is where most static analyzers fall apart. They either under-approximate (miss bugs) or over-approximate (spam the dev). To see the difference, picture a multi-file flow: input comes in through one module, gets passed through two functions, and lands in a sink.
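Here's one such flow, squeezed into a single block for readability. The file names, function names, and the stubbed req/res objects are all hypothetical stand-ins for an Express app. Each helper looks harmless on its own; only the whole chain from req.query to res.send() is dangerous.

```javascript
// --- input.js (hypothetical): where untrusted data enters ---
function getDisplayName(req) {
  return req.query.name; // source: straight from the URL
}

// --- format.js (hypothetical): two innocent-looking helpers ---
function normalizeName(name) {
  return name.trim(); // passes the taint through unchanged
}
function buildGreeting(name) {
  return "<h1>Hello, " + name + "</h1>"; // still no escaping
}

// --- routes.js (hypothetical): where it lands in a sink ---
function profileHandler(req, res) {
  const name = normalizeName(getDisplayName(req));
  res.send(buildGreeting(name)); // sink: reflected XSS
}

// Stubbed request/response objects standing in for Express.
const sent = [];
const fakeRes = { send: (body) => sent.push(body) };
profileHandler({ query: { name: " <script>alert(1)</script> " } }, fakeRes);
// sent[0] === "<h1>Hello, <script>alert(1)</script></h1>"
```

A tool that analyzes one file at a time sees three functions that each look fine. Only one that follows the value across the call chain can connect the source to the sink.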

Then ask: Would your SAST catch this?

What You Should Remember

If someone asks you tomorrow how SAST works, you should be able to say: "It turns your code into a tree, traces tainted data through it, flags risky flows, and (hopefully) tells you what's dangerous, before you ever deploy." That's it. No sales talk. No buzzwords. Just how it works.

And now that you know how SAST tools think, it's time to ask: why are most of them still a pain to use?

Let's get into that.

Why Most SAST Tools Fail in Real Life (From a Developer Who's Been Burned)

If you've ever actually tried running a SAST tool as a developer, not as an AppSec engineer reading PDF reports, you've probably had one of these moments:

Your CI lights up with 38 critical issues, and one of them is a harmless test helper in a .spec.js file you didn't even touch.

It warns you about eval() in a library you're not even using in production.

You spend an hour trying to "fix" something, only to realize it was a false positive caused by a misconfigured rule.

It's annoying. Worse, it wears the whole team down. The problem is that most static analysis tools are built for audits, not for dev workflows. And that's the root of the disconnect.

1. They Think Security Issues Happen in Isolation

Here's the reality: the security bug usually isn't in the line that triggers the alert. It's three functions deep, across a service boundary, and tied to some weird edge case. But most SAST tools don't think like that. They treat your code like a bunch of unrelated files, so they'll flag a function that looks dangerous without understanding how it's used.

Example:

```javascript
function renderUserHTML(input) {
  return `<div>${input}</div>`;
}
```

The tool sees this and screams:

"⚠️ Unescaped user input in HTML!"

But it doesn't bother to check if this input ever actually comes from the user. Maybe you call it like this:

```javascript
const html = renderUserHTML("Welcome, admin!");
```

That's not a security issue. That's just... HTML. What the tool should do is trace the input. Is it tainted? Does it cross trust boundaries? Most can't. And if it can't, you get flooded with alerts that don't matter, and miss the one that does.
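A sketch of what "trace the input" means at the call-site level, assuming the tool has already collected every call to renderUserHTML and classified its argument (the callSites records and classify rules below are hypothetical simplifications). The unescaped-HTML finding is reported only where tainted data actually reaches it. What to do with "unknown" values is exactly where tools trade noise for missed bugs; this sketch stays quiet on them.

```javascript
// Hypothetical call sites the tool collected for renderUserHTML(input).
const callSites = [
  { file: "admin.js", line: 12, arg: { kind: "literal", value: "Welcome, admin!" } },
  { file: "profile.js", line: 40, arg: { kind: "expr", text: "req.query.bio" } },
];

const UNTRUSTED_PREFIXES = ["req.query", "req.body", "req.params"];

// Simplified classification: string literals are trusted; expressions
// rooted in a request object are untrusted; everything else is unknown.
function classify(arg) {
  if (arg.kind === "literal") return "trusted";
  const untrusted = UNTRUSTED_PREFIXES.some((p) => arg.text.startsWith(p));
  return untrusted ? "untrusted" : "unknown";
}

// Report the sink only at call sites where untrusted data reaches it.
function reportRenderUserHTML(sites) {
  return sites
    .filter((site) => classify(site.arg) === "untrusted")
    .map((site) => `${site.file}:${site.line} passes user input to renderUserHTML()`);
}

const reports = reportRenderUserHTML(callSites);
// reports → ["profile.js:40 passes user input to renderUserHTML()"]
```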

2. They Don't Respect How Real Projects Are Structured

Let's be real, codebases are messy.

You have utils, helpers, shared, and legacy folders.

Some files are 3 years old and untouched.

Some code is only meant to run in test/dev environments.

But many SAST tools just walk through the entire repo like it's flat. They:

Flag dev-only code in your test harness

Warn about eval() inside a node_modules shim

Crawl files ignored in .eslintignore or .gitignore

They don't care that a file is only ever imported in mock test setups — it's code, and they'll flag it. The result? You get a report full of red, but most of it is code that never even runs in production.
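Most scanners let you scope what gets analyzed, but the mechanism differs from tool to tool (ignore files, CLI flags, or config), so check your tool's docs. As a tool-agnostic sketch, assuming the folder names below match your repo's layout, the logic amounts to a path filter:

```javascript
// Hypothetical layout rules: paths that never ship to production.
const EXCLUDED = [
  /(^|\/)node_modules\//,
  /(^|\/)(test|tests|__tests__|mocks)\//,
  /\.(spec|test)\.[jt]sx?$/,
  /^scripts\//,
];

// Decide whether a repo-relative path belongs in the production scan.
function inProductionScope(path) {
  return !EXCLUDED.some((pattern) => pattern.test(path));
}

const files = [
  "src/routes/profile.js",
  "src/utils/render.spec.js",
  "node_modules/some-shim/index.js",
  "scripts/seed-db.js",
  "test/helpers/fakeServer.js",
];
const toScan = files.filter(inProductionScope);
// toScan → ["src/routes/profile.js"]
```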

3. They Assume You Have Time to Dig Through This Stuff

Here's a common scenario: You're in the middle of shipping a feature. You open a PR. The SAST scan runs. Suddenly, your pipeline fails, and critical security issues are found. You click through to the report. It's a third-party dashboard, behind auth, loading slowly. You wait. It opens a giant table. No clear explanation. No reproduction steps. No fix suggestion. Just: "Rule: Potential Command Injection in child_process.spawn() — Critical"

You don't even use child_process in your code. Turns out it's from an unused script in the scripts/ folder. By the time you figure that out, 20 minutes are gone. And your feature still isn't merged.

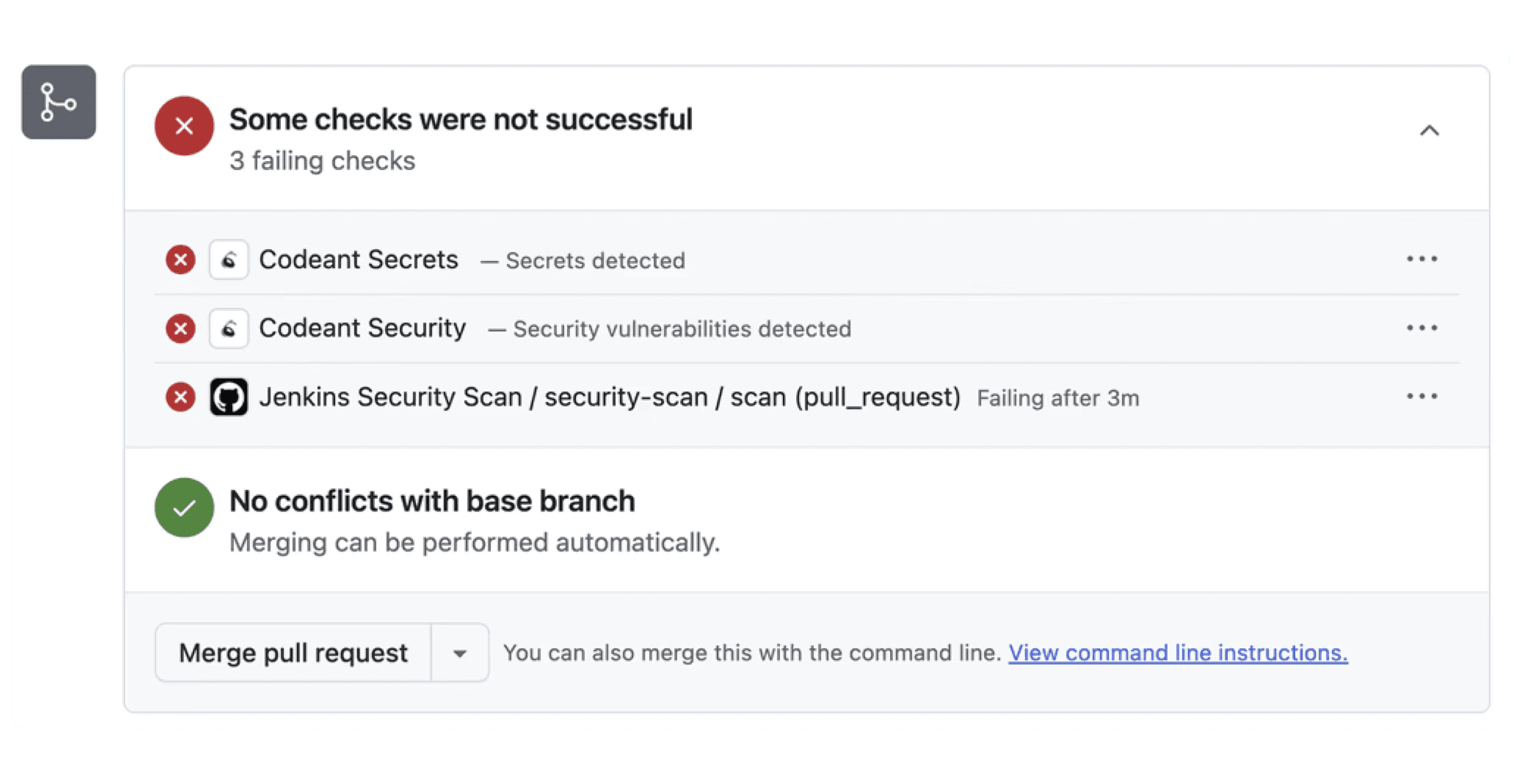

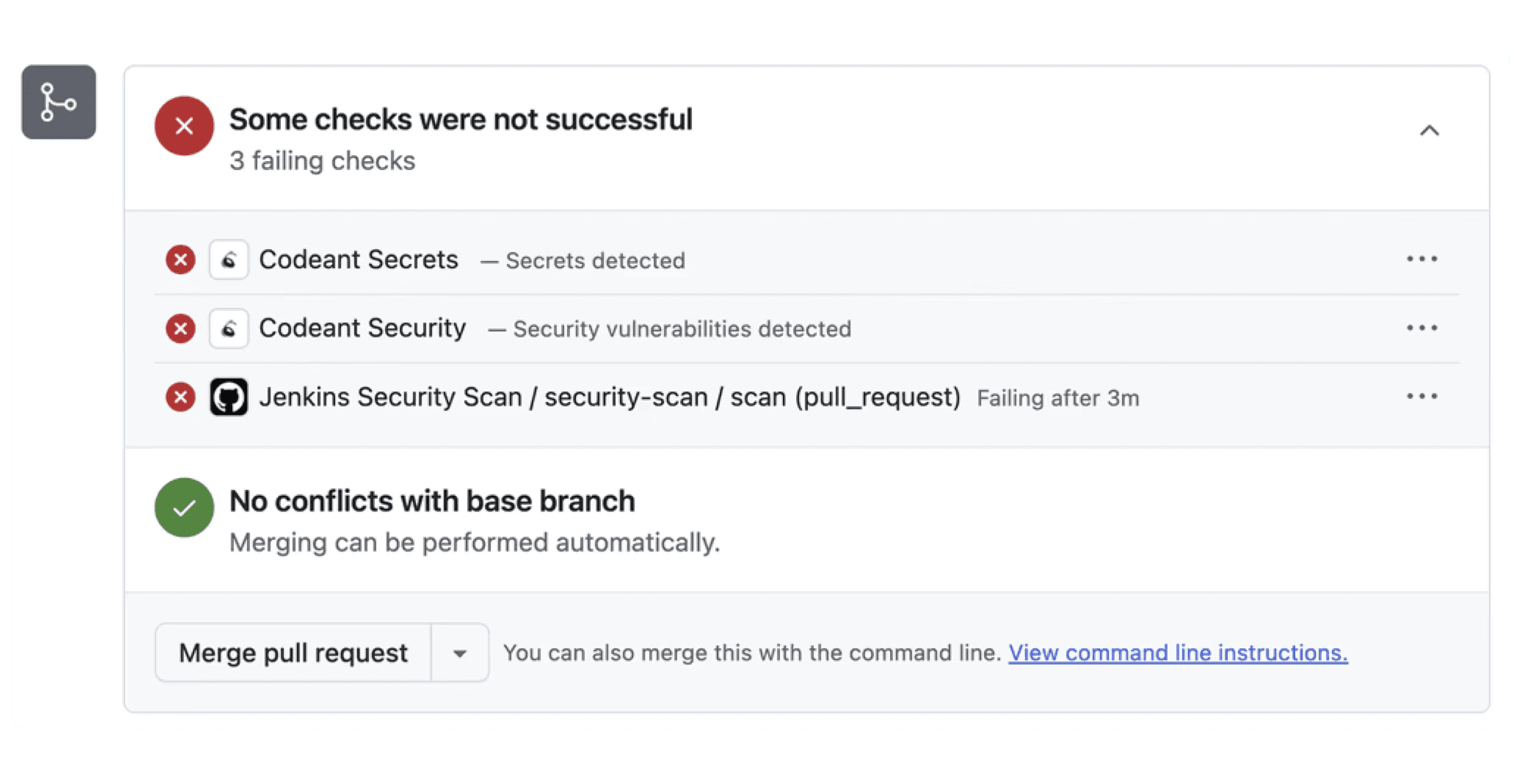

4. They Break The CI Without Giving You Context

This is one of the most frustrating sins: SAST tools that break builds, but don't help you understand why. You see this:

SAST FAILED: 5 High issues

That's it. No logs, no links, no context inline in the PR. Imagine if linting worked that way:

Lint failed: 17 issues. No details. Good luck

You'd disable ESLint in a second. But somehow, this is standard for many SAST tools. Failing CI should only happen if the issue is:

Real

Severe

Actionable right now

And unless the tool proves that in the output, it's doing more harm than good.
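As a sketch of that rule, here's a gate that fails only on findings that are confirmed, severe, and not already triaged, and that prints where and why instead of a bare count. The finding fields (confirmed, severity, suppressed, hint) are assumptions about what your scanner can report, not any particular tool's format.

```javascript
const BLOCKING = new Set(["critical", "high"]);

// Decide the CI result and produce messages a developer can act on.
function evaluateGate(findings) {
  const blocking = findings.filter(
    (f) => BLOCKING.has(f.severity) && f.confirmed && !f.suppressed
  );
  const messages = blocking.map(
    (f) => `${f.severity.toUpperCase()} ${f.file}:${f.line} ${f.rule}: ${f.hint}`
  );
  return { fail: blocking.length > 0, messages };
}

const result = evaluateGate([
  {
    rule: "xss-unescaped-output", severity: "high", confirmed: true, suppressed: false,
    file: "src/routes/profile.js", line: 18,
    hint: "req.query.name reaches res.send() unescaped; escape or sanitize it first",
  },
  {
    // Severe-sounding, but not confirmed reachable: reported elsewhere, not blocking.
    rule: "command-injection", severity: "critical", confirmed: false, suppressed: false,
    file: "scripts/cleanup.js", line: 3, hint: "spawn() argument may be user-controlled",
  },
]);
// result.fail === true, with one message pointing at src/routes/profile.js:18
```

In a CI script you would print result.messages and set process.exitCode = 1 when result.fail is true, so the log itself explains the failure.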

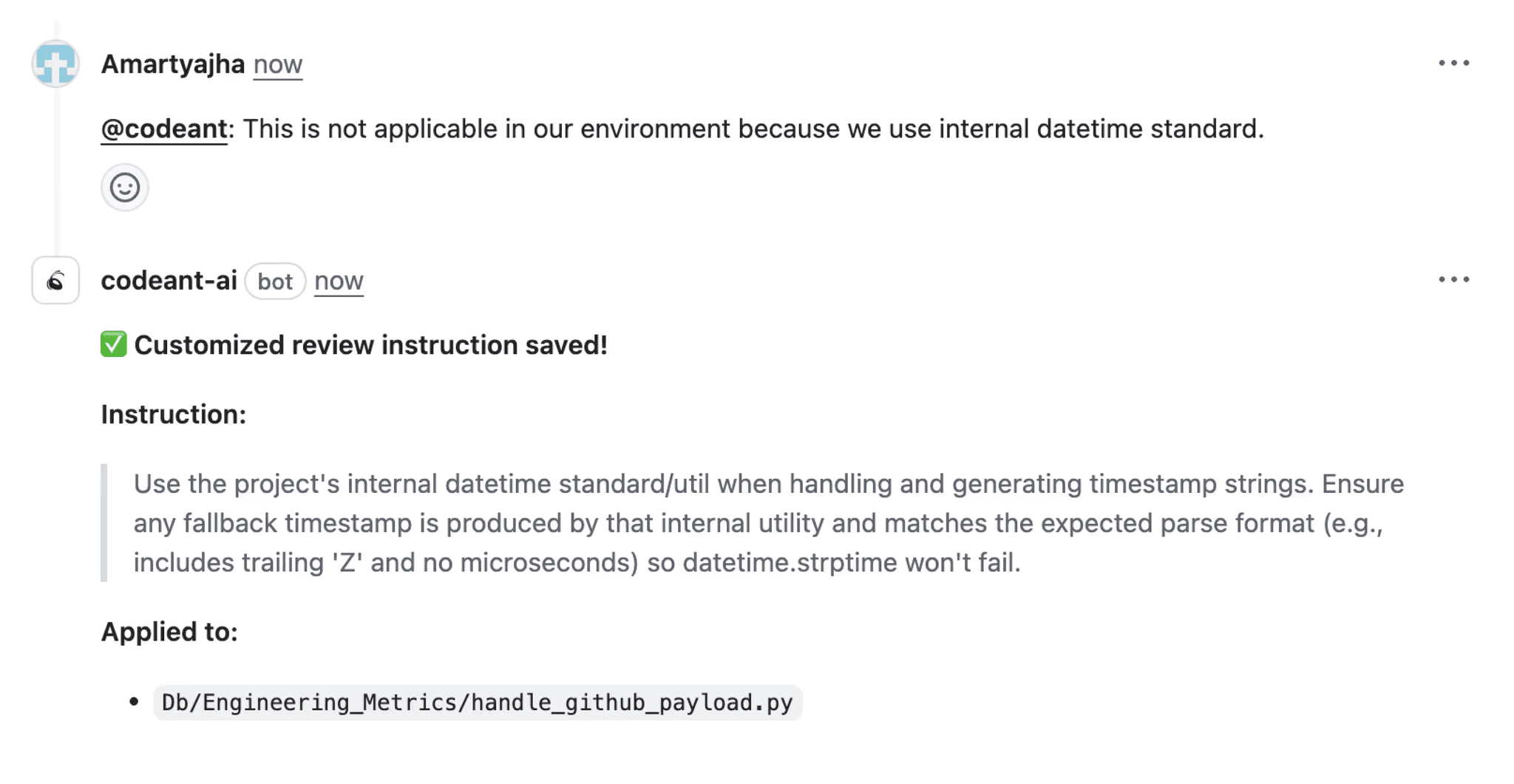

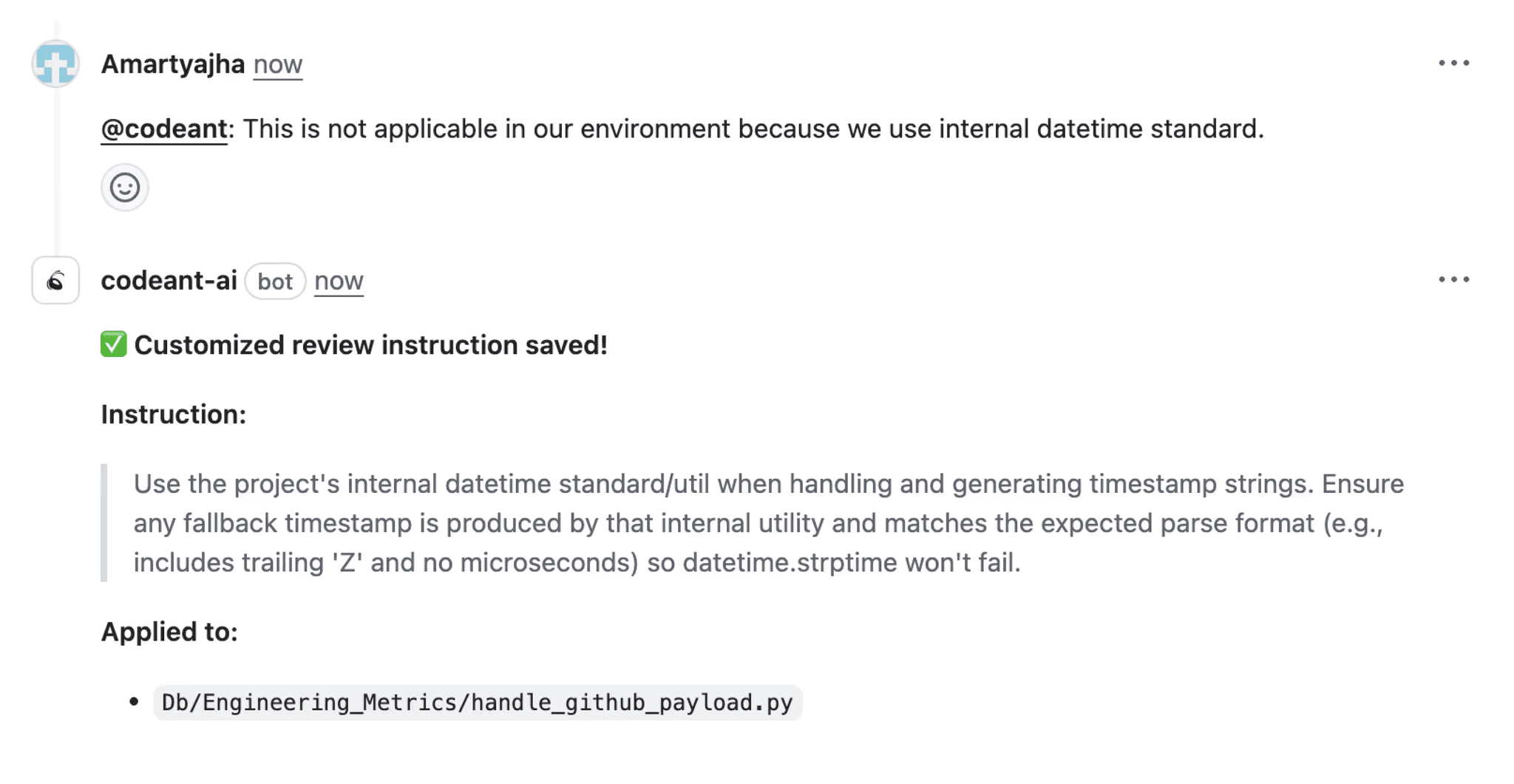

5. They Don't Let You Train or Tune the Tool Like a Teammate

Here's the irony: most static analyzers treat your code like a stranger. You've fixed the same warning in 8 places? Cool. It'll flag it again on the 9th. You know a certain pattern is safe in your app? There's no way to tell the tool, unless you want to write a custom suppression rule using YAML nested under 4 directories. What developers want is a tool that learns as they go. That adapts. That remembers what you muted and why. Not one that resets to zero every time it runs.
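One common way tools "remember" triage decisions is a fingerprint per finding, so a muted result stays muted even when line numbers shift. A minimal sketch, assuming a fingerprint built from rule, file, and the whitespace-trimmed code snippet (real tools use their own, usually hashed, schemes), with every mute stored alongside its reason:

```javascript
// Line numbers are left out on purpose: they change whenever code above moves.
function fingerprint(finding) {
  const snippet = finding.snippet.replace(/\s+/g, " ").trim();
  return `${finding.rule}|${finding.file}|${snippet}`;
}

// Triage memory: fingerprint -> why it was muted.
const muted = new Map();

function mute(finding, reason) {
  if (!reason) throw new Error("a mute needs a reason");
  muted.set(fingerprint(finding), reason);
}

// Only findings nobody has triaged yet are reported.
function newFindings(findings) {
  return findings.filter((f) => !muted.has(fingerprint(f)));
}

const evalInFixture = {
  rule: "no-eval", file: "test/fixtures/legacy.js", line: 10, snippet: "eval(code)",
};
mute(evalInFixture, "test fixture, never bundled into production");

// Next run: the same code moved down 5 lines and was re-indented.
const nextRun = [{ ...evalInFixture, line: 15, snippet: "    eval(code)" }];
// newFindings(nextRun) → []
```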

So, Why Are We Even Talking About SAST Then?

Because the idea behind SAST is sound. The problem is the execution. Most tools just weren't built with developers in mind. They were made for checklists, compliance reports, and PowerPoint slides. What if SAST were a real-time code reviewer that's:

Smart enough to trace tainted input across your app

Quiet when things are safe

Loud when things are risky

Context-aware and fix-focused

We're not dropping a pitch here; we'll show you how to integrate this kind of tooling into your flow in the next section. But know this: if you've hated static analysis before, that's not your fault. It's the fault of tools that never considered how developers work. Let's fix that.

How to Actually Use SAST Without Hating It

By now, we've seen why most SAST tools flop in real-world engineering teams. They're noisy. They're vague. They break things without explaining why. But that doesn't mean static analysis is broken. It means most teams are using tools that weren't built for how developers actually work. So, what does a good integration look like? Let's walk through how to use static analysis, specifically SAST, in a way that feels natural, actionable, and developer-first. We'll also show how CodeAnt solves the hard parts without needing a 30-page config file or a full-time AppSec team.

1. Plug SAST into the Pull Request, Not Just the Pipeline

Most SAST tools treat CI/CD like a gatekeeper: scan on PR, fail the build, and leave you with a vague report. That's not helpful. You're busy. You're trying to get a PR merged, not read a 40-line vulnerability dump from an external dashboard. A smarter approach is to:

Run static analysis on every pull request

Leave inline comments directly in the PR

Summarize the entire code change + issues in one spot
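If your tooling doesn't comment on PRs natively, one vendor-neutral route is SARIF 2.1.0, the JSON results format that GitHub code scanning accepts and can surface as annotations on a pull request. A minimal sketch that converts findings into a SARIF log; the finding shape and the tool name my-sast are assumptions:

```javascript
// Map our severities onto SARIF's result levels ("error" | "warning" | "note").
const LEVELS = { critical: "error", high: "error", medium: "warning", low: "note" };

function toSarif(findings) {
  return {
    version: "2.1.0",
    runs: [
      {
        tool: { driver: { name: "my-sast" } }, // hypothetical tool name
        results: findings.map((f) => ({
          ruleId: f.rule,
          level: LEVELS[f.severity] ?? "warning",
          message: { text: f.message },
          locations: [
            {
              physicalLocation: {
                artifactLocation: { uri: f.file },
                region: { startLine: f.line },
              },
            },
          ],
        })),
      },
    ],
  };
}

const sarif = toSarif([
  {
    rule: "xss-unescaped-output", severity: "high", file: "src/routes/profile.js",
    line: 18, message: "req.query.name reaches res.send() without escaping",
  },
]);
const json = JSON.stringify(sarif, null, 2); // e.g. write this out as results.sarif
```

On GitHub Actions, the github/codeql-action/upload-sarif action can upload a file like this; other platforms have their own ingestion paths.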

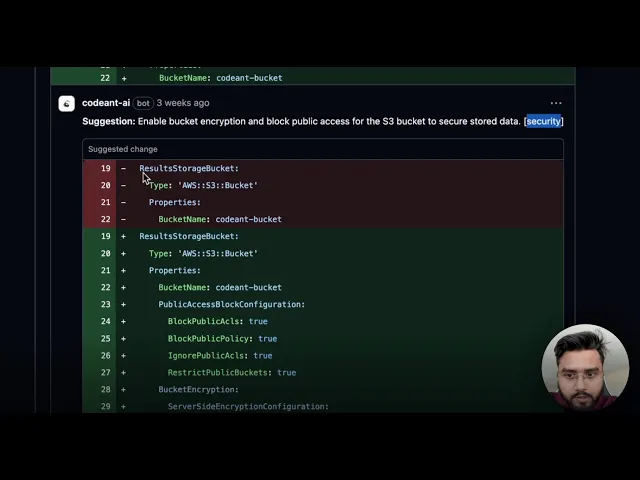

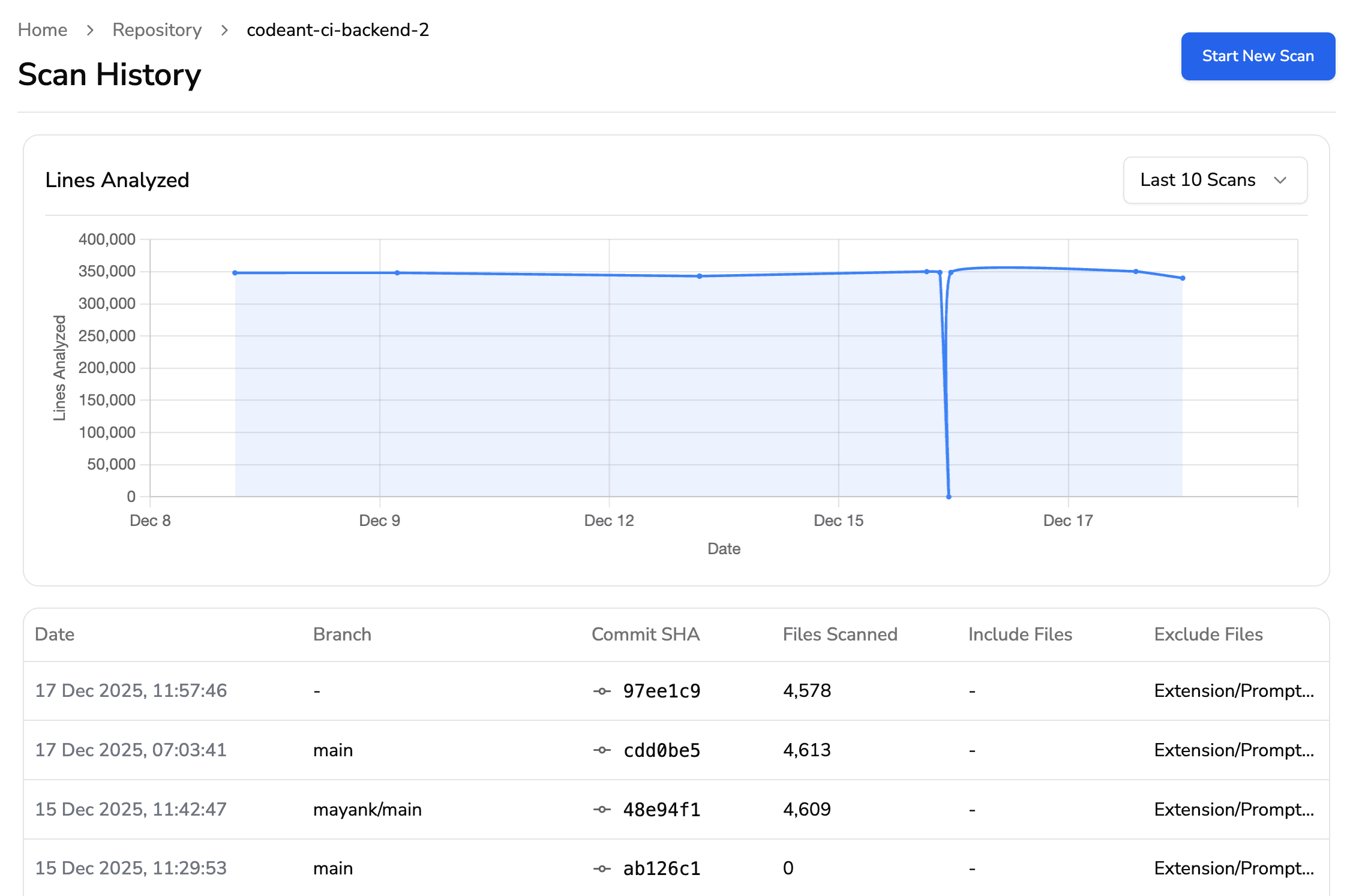

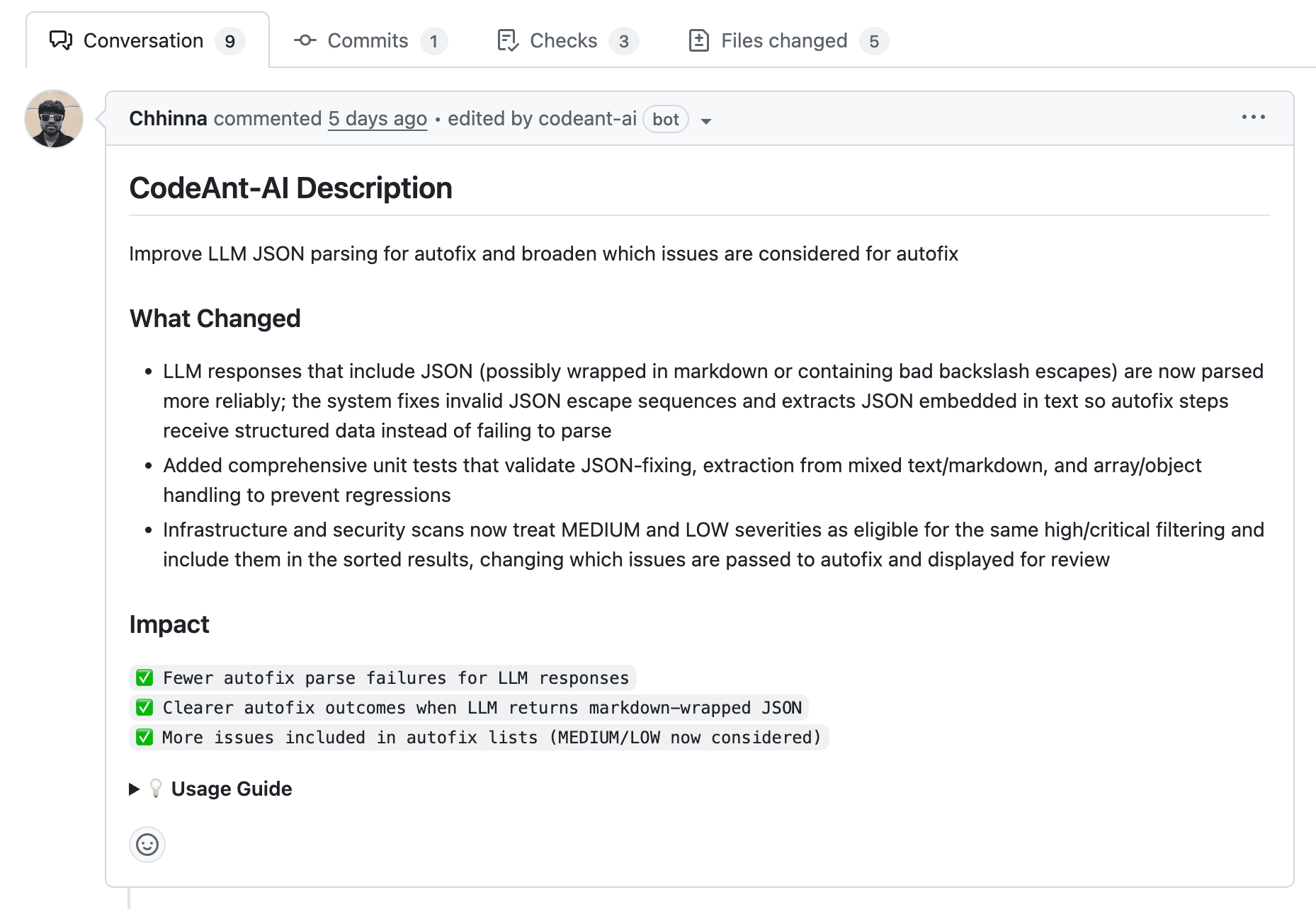

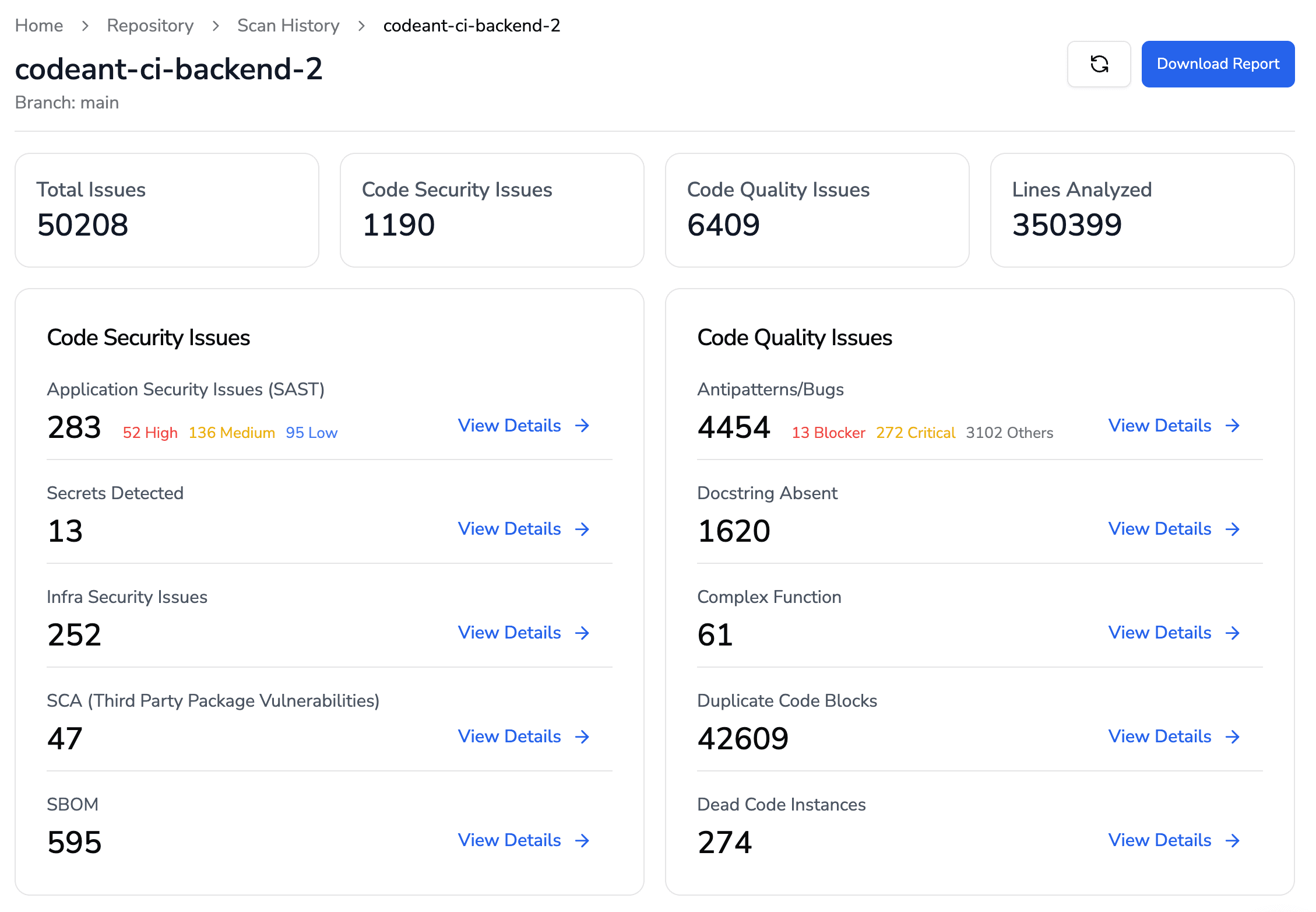

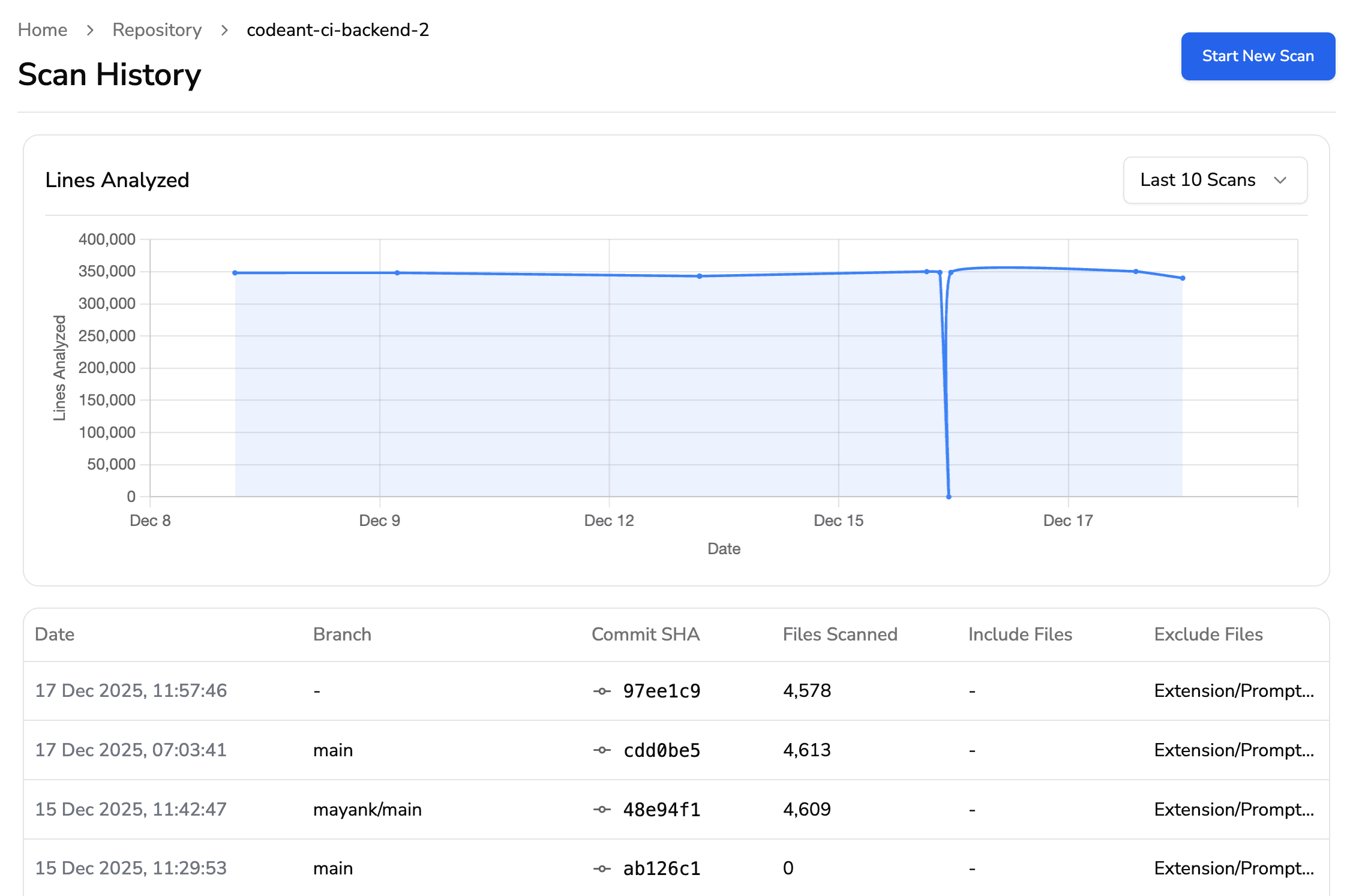

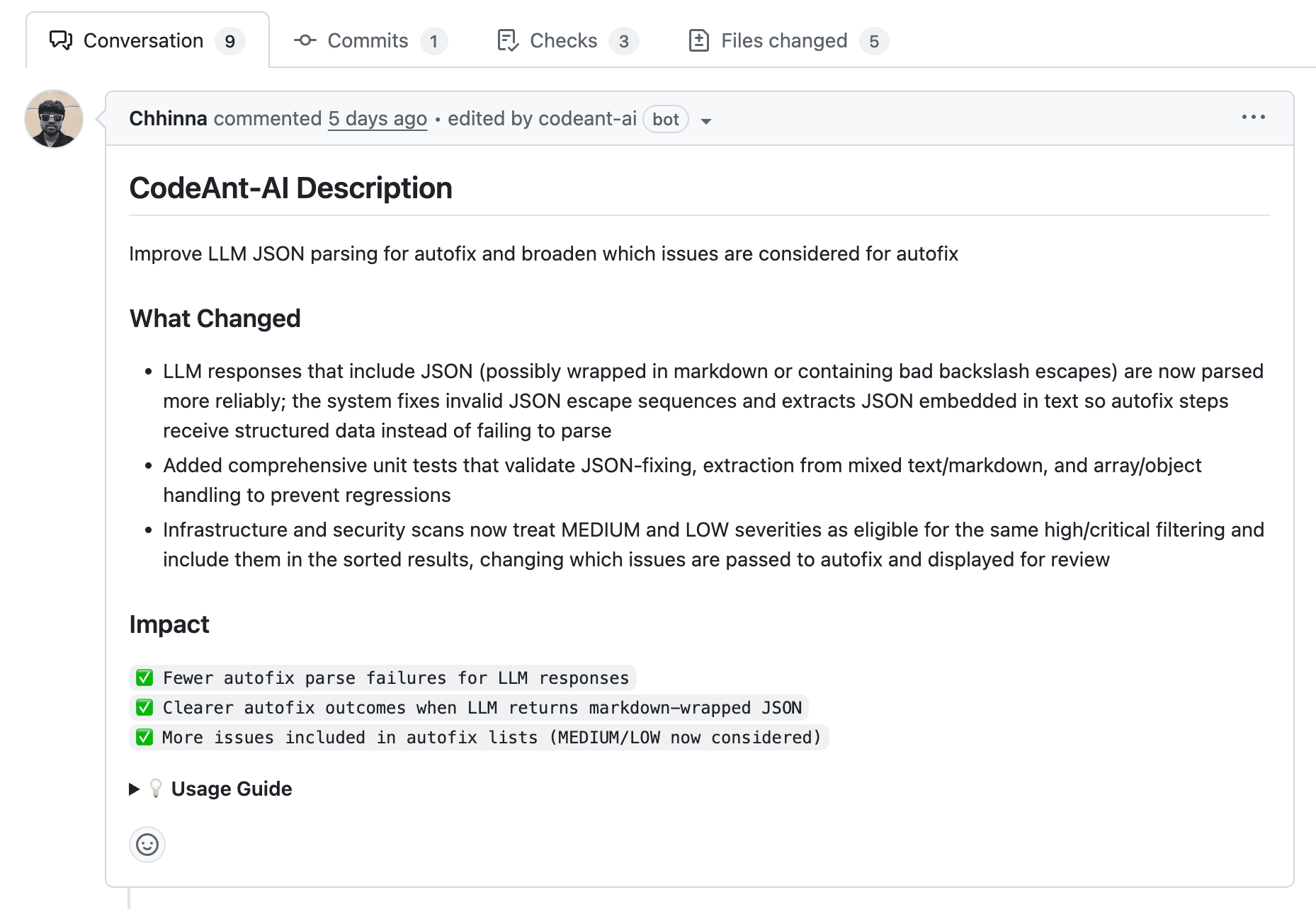

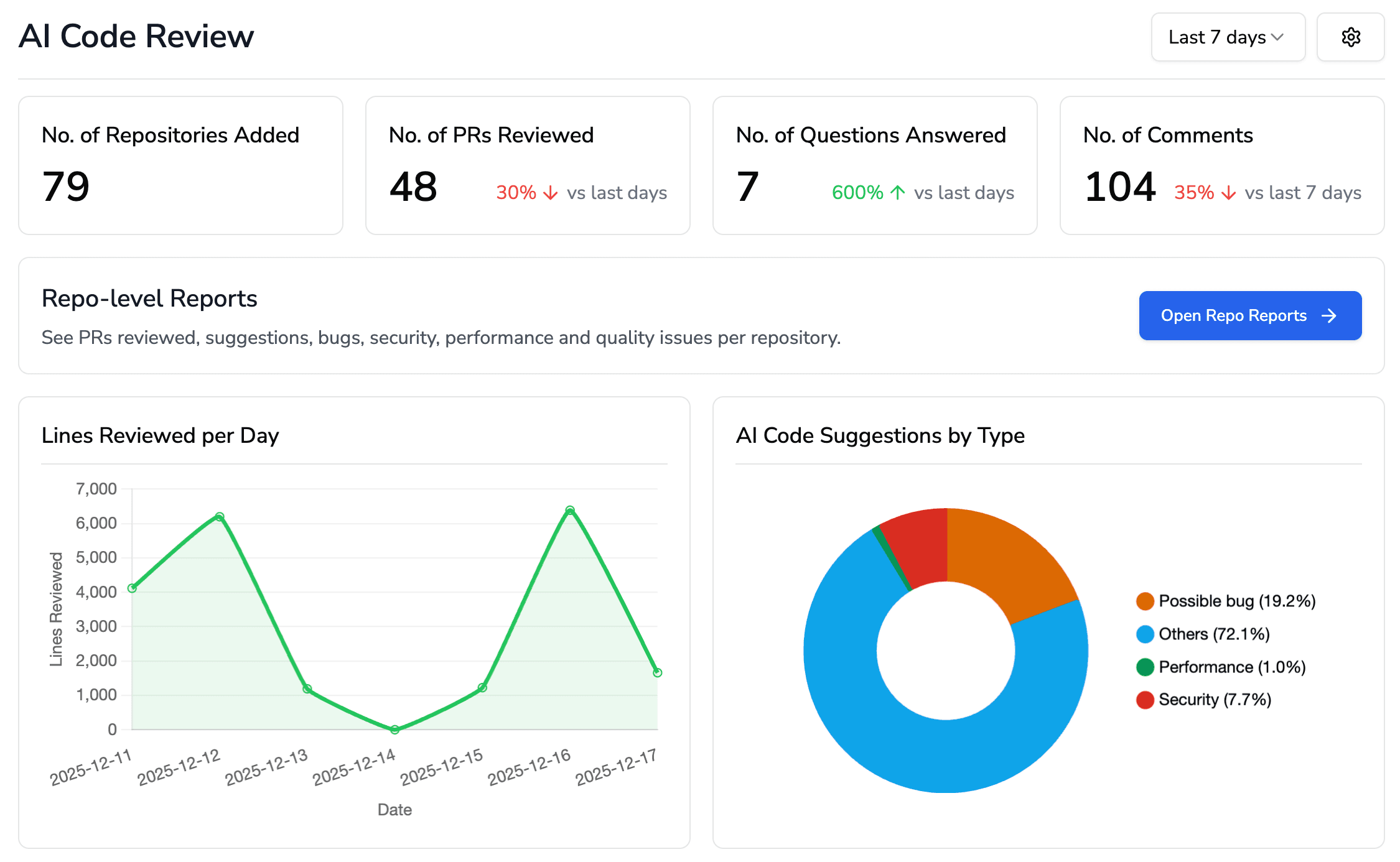

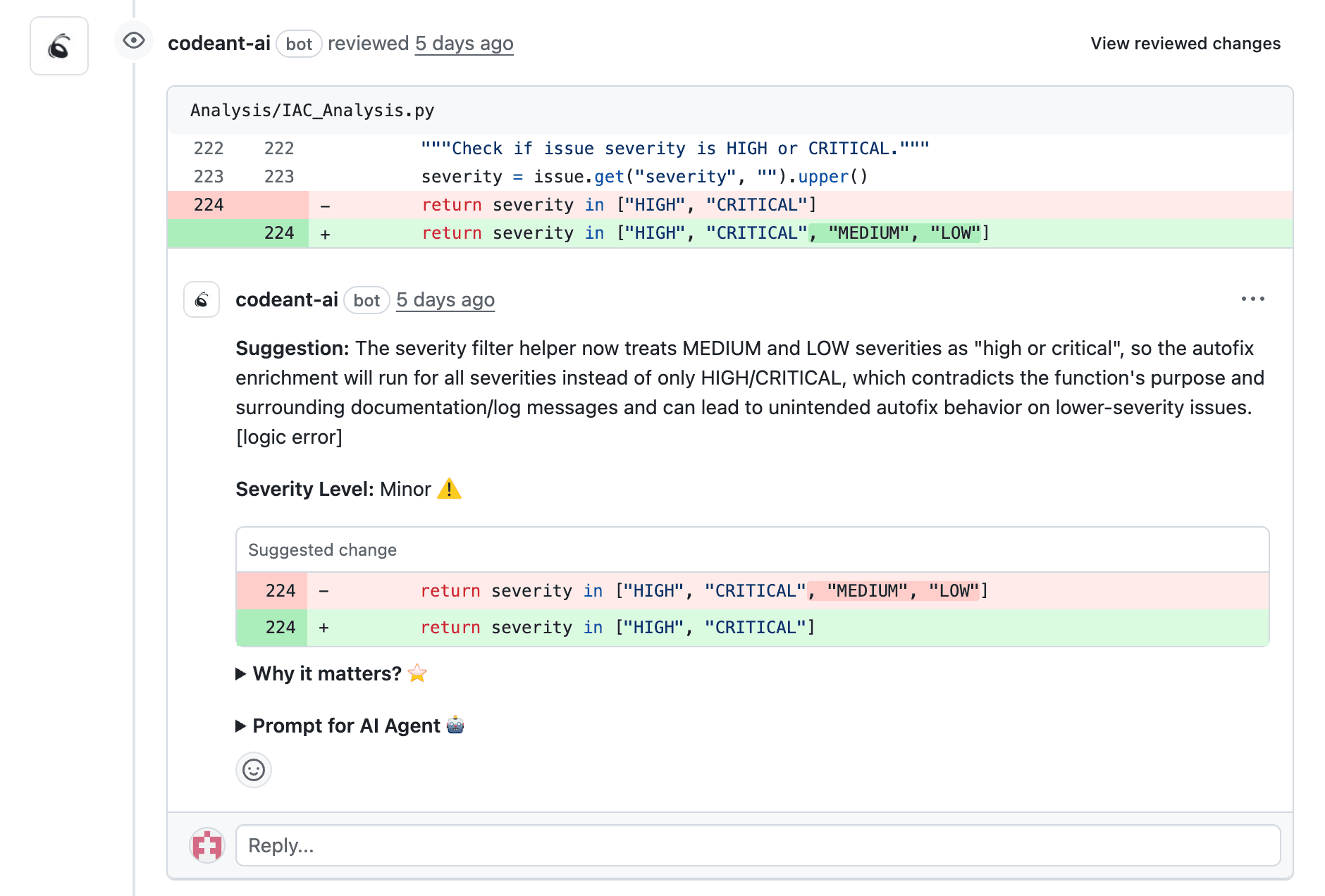

Here's what that looks like in practice with CodeAnt: recent scan activity shows commits analyzed automatically as part of PR workflows.

That's exactly how CodeAnt works. You commit, open a PR, and in under 2 minutes, you get:

A clean AI-generated summary of the code changes

Inline comments where issues were found

A full view of security risks, bugs, and code smells

And a central dashboard to track all this at once

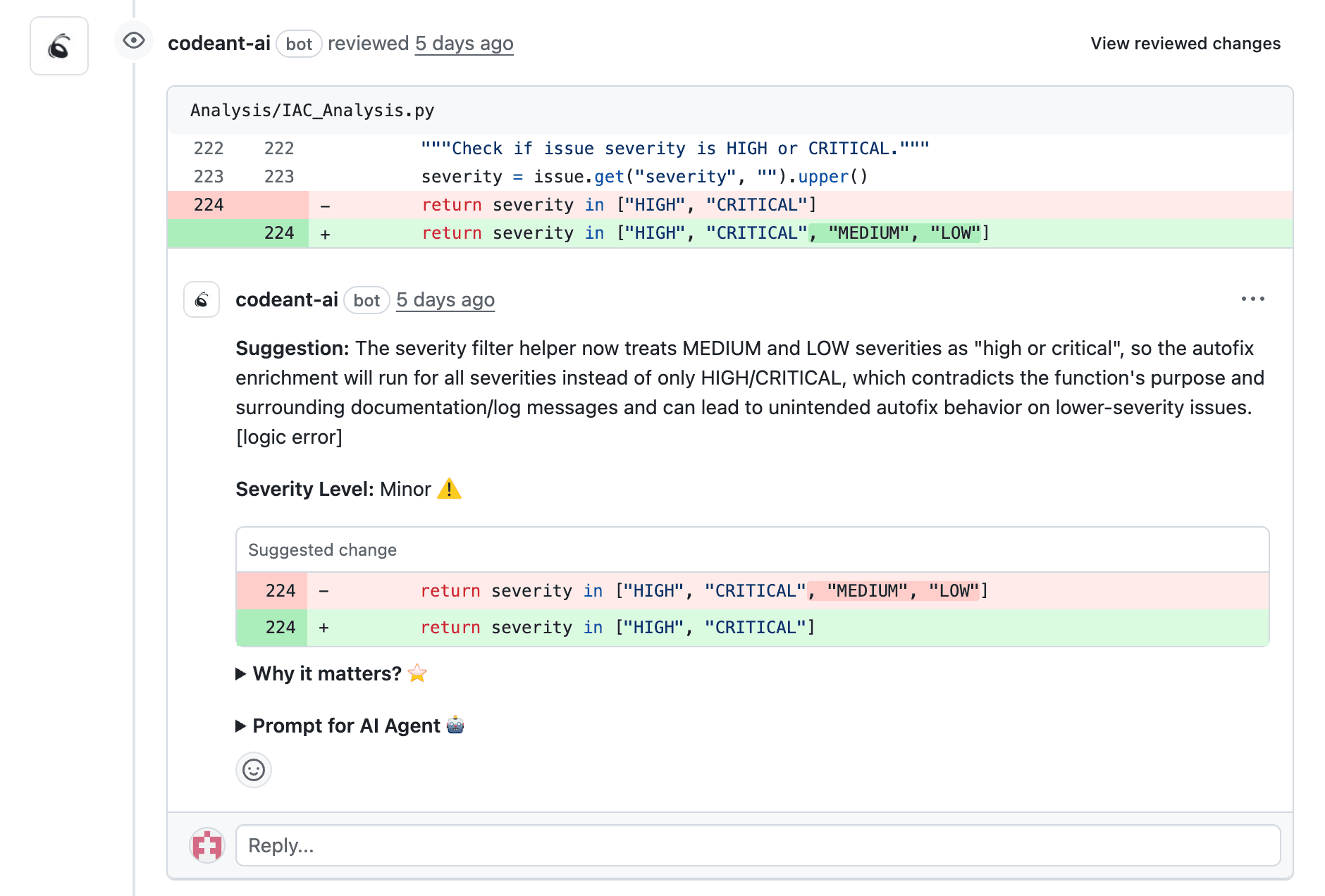

2. Don't Just Detect Issues, Prioritize and Fix Them

Most tools dump a list of 50+ "possible" issues. You don't know what's real, what's critical, or what's even reachable. Good static analysis helps you triage:

Which flows actually involve user-controlled input

Which sinks are exploitable vs theoretical

Which issues block merging (and which ones can wait)
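A sketch of that triage as a sort key, assuming each finding already records whether its input is user-controlled and whether the sink is reachable from a live code path. Both are hypothetical fields, and computing them is the hard part a tool has to get right.

```javascript
const SEVERITY_RANK = { critical: 4, high: 3, medium: 2, low: 1 };

// Higher score = look at it first. Reachability and user control outweigh
// the raw severity label, so a reachable "medium" beats an unreachable "critical".
function triageScore(f) {
  return (f.reachable ? 20 : 0) + (f.userControlled ? 10 : 0) + SEVERITY_RANK[f.severity];
}

function triage(findings) {
  return [...findings]
    .sort((a, b) => triageScore(b) - triageScore(a))
    .map((f) => ({
      ...f,
      // Only real, exploitable, severe flows block the merge; the rest can wait.
      blocksMerge: f.reachable && f.userControlled && SEVERITY_RANK[f.severity] >= 3,
    }));
}

const ranked = triage([
  { id: "eval-in-test", severity: "critical", reachable: false, userControlled: false },
  { id: "xss-profile", severity: "medium", reachable: true, userControlled: true },
  { id: "sqli-search", severity: "high", reachable: true, userControlled: true },
]);
// ranked ids → ["sqli-search", "xss-profile", "eval-in-test"]; only sqli-search blocks
```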

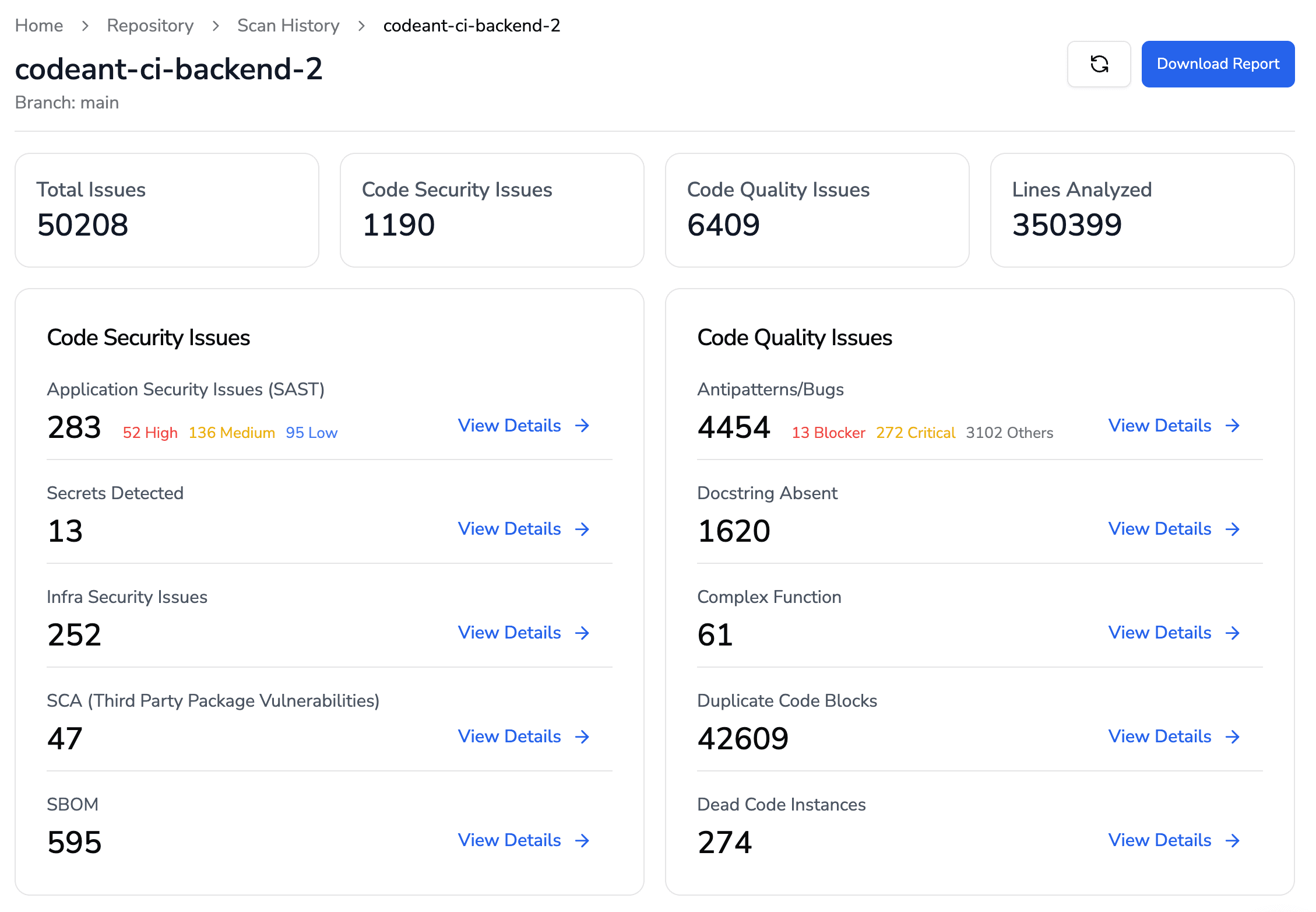

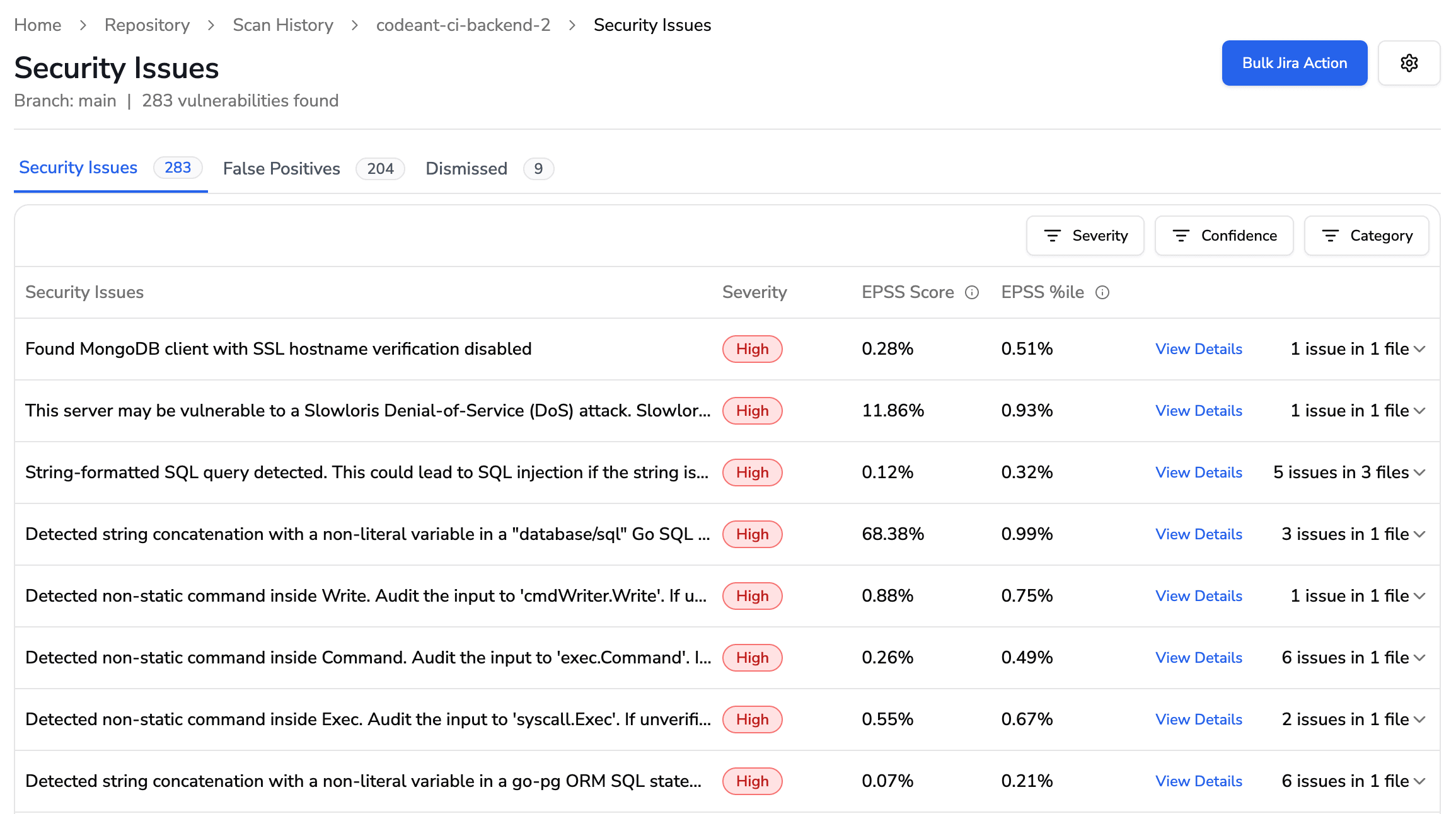

With CodeAnt.ai, this prioritization is built in. You don't get a flat report; you get a ranked view of risks, complexity, antipatterns, and overall code health.

This is why CodeAnt treats static analysis as a Code Quality and Security Gate, one that runs in CI, but only blocks PRs for:

Confirmed critical vulnerabilities

Secret exposures

Policy-breaking patterns

That way, you can get signal, not noise. You stay fast, but secure. And yes, you can configure this however you want using AI Code Review Configuration, down to custom prompts, severity thresholds, or team rules.

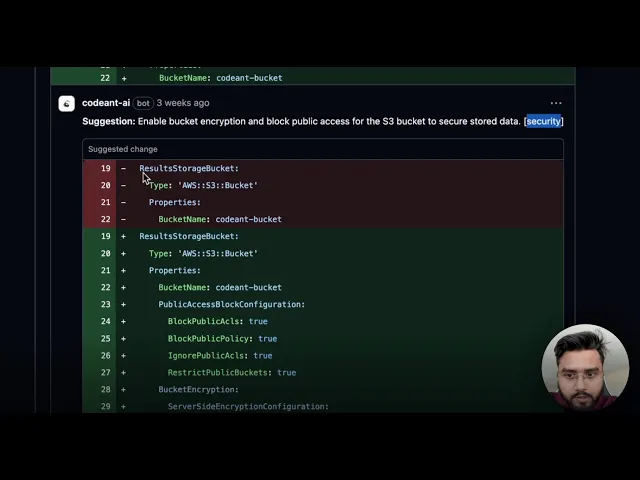

3. Fixes Shouldn't Live in PDFs. They Should Live in Your IDE.

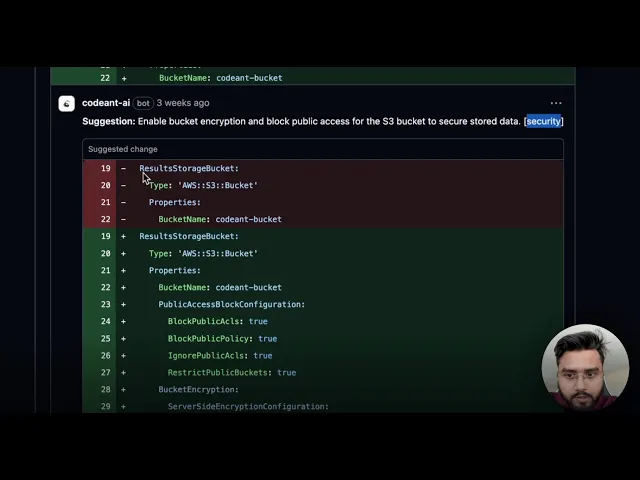

If you've ever been told "here's the vuln, now go read this 6-page OWASP doc to fix it", you know the pain. You shouldn't have to piece together a fix from GitHub issues and StackOverflow. A real tool, like CodeAnt, goes further:

Flags issues and explains why they're risky

Suggests one-click fixes where possible

Even surfaces algorithmic inefficiencies, edge cases, and dead code

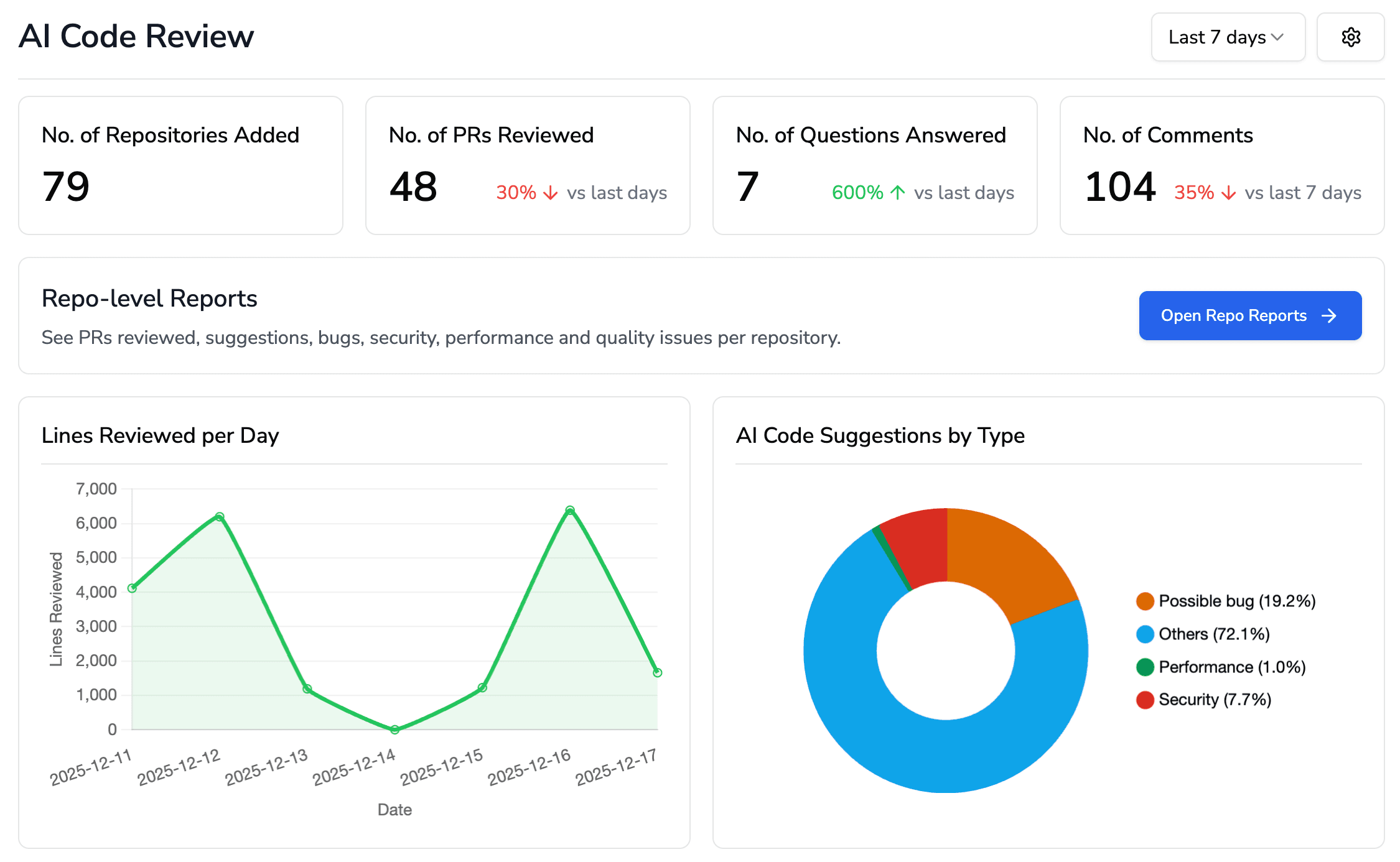

4. Let the Whole Team See What's Happening

SAST often lives in silos. Only a few senior engineers know what got flagged. Managers never see it. Junior devs don't know if they fixed it right. That's broken. Static analysis should be visible, trackable, and collaborative. That's why CodeAnt provides:

A real-time dashboard that shows every AI-generated comment

A count of open issues by repo, by team, by PR

A high-level summary that managers or leads can actually understand

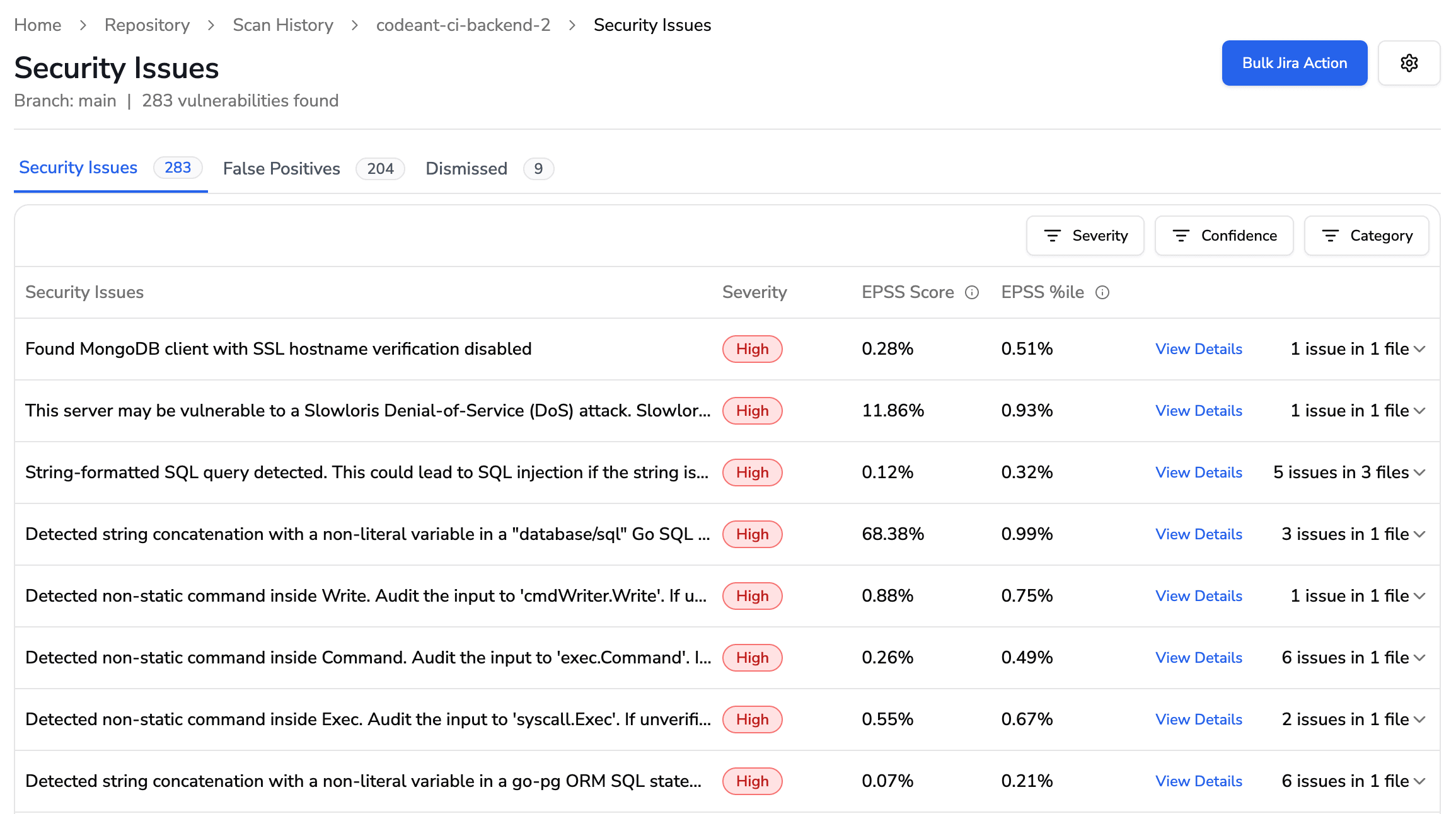

Live breakdown of security issues by severity, repo, and status, built for team-wide visibility.

Clicking into a specific issue shows the same breakdown in more detail.

So whether you’re leading a team of 5 or managing an org of 500, you can instantly answer:

“How much risky code are we pushing?”

“What did the AI catch this week?”

“What are we improving over time?”

5. Customize It. Don't Just Suffer Through It.

Here's something most tools forget: your team writes code in a certain way. You use specific patterns, you have your own internal rules, and you review PRs your way. With CodeAnt, you configure AI code reviews using natural language prompts. Want to:

Flag all shell commands in Node.js backends?

Suppress comments on legacy code in a folder?

Require checks for missing docstrings in data models?

You can. It's as flexible as you are. And unlike legacy rule engines, it doesn't require diving into YAML or writing AST rules from scratch.

Bottom Line: Static Analysis Should Feel Like a Code Reviewer, Not an Alarm System

If a tool just throws alerts at you and slows you down, you’ll ignore it. That’s fair.

But if it helps you catch real issues before they land, explains what’s wrong, and gives you fixes, then it’s more than a tool. It’s a teammate.

That’s what we’ve tried to build with CodeAnt — something that blends code quality, security, and actual usability into a single experience.

Now that you’ve seen what good static analysis should feel like, let’s step back and clarify something that trips up even experienced developers:

What’s the difference between SAST, SCA, and SBOM — and when should you care about each?

Let’s break it down.

SAST vs. SCA vs. SBOM, Understand the Difference Before You Ship

In the previous section, we looked at how SAST tools can fit cleanly into a developer's workflow, reviewing code like a teammate, not a blocker. But the code you write is just one part of what ships. Instead of treating SAST, SCA, and SBOM as acronyms, think of them as three distinct questions you'll eventually be asked about every codebase:

"Is the code you wrote safe?"

"Are the libraries you used safe?"

"Can you prove what you shipped, and what was in it?"

Each question maps to a different practice. Let's walk through them properly.

1. Checking Your Own Code, That's SAST

SAST (Static Application Security Testing) is about logic you wrote. You're writing a feature. You take input from a user, pass it into a function, maybe render it, or hand it off to another part of your system. SAST traces that flow without running the app. It checks:

What paths user data can travel through

Whether that data ever reaches a risky place (e.g., DB, HTML, shell)

If anything's missing — like escaping, validation, or auth checks

This helps catch the kind of bugs that:

Tests didn't cover

Reviewers missed

End up as security issues in production

2. Checking Code You Didn't Write — That's SCA

Now think about what's in your package.json, requirements.txt, pom.xml, or Gemfile. Dozens, sometimes hundreds, of dependencies. SCA (Software Composition Analysis) scans the full dependency tree and compares it to vulnerability databases. If a library you use has a known exploit, even in a transitive dependency, SCA will catch it. It's not about theoretical logic bugs. It's about known problems:

Remote code execution in version X

Insecure crypto in version Y

Path traversal in a helper package you didn't even realize was bundled

SCA won't help you if you wrote an insecure flow. But it'll tell you if someone else's code is already compromised, and sitting in your repo.
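At its core, the matching step is a lookup of installed versions against advisories. A minimal sketch with a made-up package, a made-up advisory, and only plain major.minor.patch versions; real SCA tools pull advisories from vulnerability databases and handle full semver ranges, pre-releases, and transitive resolution.

```javascript
// Compare two plain "major.minor.patch" strings: negative, zero, or positive.
function compareVersions(a, b) {
  const pa = a.split(".").map(Number);
  const pb = b.split(".").map(Number);
  for (let i = 0; i < 3; i++) {
    if (pa[i] !== pb[i]) return pa[i] - pb[i];
  }
  return 0;
}

// Hypothetical advisory: versions below fixedIn are affected.
const advisories = [
  { pkg: "example-path-helper", fixedIn: "2.4.1", summary: "path traversal in resolvePath()" },
];

// Resolved dependency tree, flattened (direct and transitive); names are invented.
const installed = [
  { pkg: "example-path-helper", version: "2.3.9", via: "example-express-utils > example-path-helper" },
  { pkg: "example-logger", version: "1.0.0", via: "example-logger" },
];

function scan(deps, advisoryList) {
  return deps.flatMap((dep) =>
    advisoryList
      .filter((adv) => adv.pkg === dep.pkg && compareVersions(dep.version, adv.fixedIn) < 0)
      .map((adv) => `${dep.pkg}@${dep.version} (${dep.via}): ${adv.summary}; fixed in ${adv.fixedIn}`)
  );
}

const alerts = scan(installed, advisories);
// One alert: the transitive example-path-helper@2.3.9 is below the fixed version.
```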

3. Keeping a Record of What You Shipped — That's SBOM

Let's say your company pushes a release on Friday. A new CVE is announced the next week. Your security team asks: "Did our last build include that vulnerable package?" This is where SBOM (Software Bill of Materials) comes in. It's like a snapshot of everything that made it into your build: source, deps, tools, infra, all versioned and signed off. SBOM doesn't catch bugs. It lets you answer: "What did we ship?" That matters for:

Post-incident forensics

Audit trails

Compliance (e.g., SOC 2, ISO, FedRAMP)
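To make that concrete: SBOMs are usually machine-readable documents in a standard format such as CycloneDX or SPDX. Below is a heavily trimmed, CycloneDX-style JSON object (only a few fields; the service name, component names, and versions are invented), plus the query your security team would run once the CVE drops:

```javascript
// Trimmed CycloneDX-style SBOM for one release (illustrative values only).
const sbom = {
  bomFormat: "CycloneDX",
  specVersion: "1.5",
  version: 1,
  metadata: {
    timestamp: "2025-01-10T17:00:00Z",
    component: { type: "application", name: "checkout-service", version: "3.8.0" },
  },
  components: [
    { type: "library", name: "example-path-helper", version: "2.3.9", purl: "pkg:npm/example-path-helper@2.3.9" },
    { type: "library", name: "example-logger", version: "1.0.0", purl: "pkg:npm/example-logger@1.0.0" },
  ],
};

// "Did our last build include that vulnerable package?"
function shippedVersions(bom, packageName) {
  return bom.components
    .filter((c) => c.name === packageName)
    .map((c) => c.version);
}

const hits = shippedVersions(sbom, "example-path-helper"); // ["2.3.9"]
```

Without the SBOM, answering the same question means reconstructing an old build from lockfiles and CI logs, if they still exist.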

Why This Separation Matters

A lot of teams use one of these and assume they're "secure." That's risky thinking. Each tool protects a different layer:

| Concern | You Need… |
|---|---|
| Your logic might be vulnerable | SAST |
| Your dependencies might be broken | SCA |
| Your release might have unknowns | SBOM |

And most real-world failures happen when one of these layers gets skipped or misunderstood.

Best Practices for Using SAST in 2025

Most teams have already wired up a scanner and added it to their CI. That's not the hard part. What separates real engineering orgs from those playing security theater is how they use SAST to shape how code is written, reviewed, and shipped. Here's how the best do it:

1. Use SAST as a Source of Truth for Tech Debt You Can't See

Good SAST alerts show you which parts of your system attract fragile logic: the kind of code people avoid touching because it breaks easily.

2. Designate a "no-fix, just-learn" path for junior devs

Instead of throwing vague vulnerability alerts at them, let juniors:

Explore how taint flows triggered an alert

Manually trace the input/output logic

Write a PR comment explaining how it could have been worse

3. Write down what SAST won't do

No tool covers everything. Instead of pretending otherwise:

List what SAST covers

List what it intentionally does not (e.g., runtime auth, role-based behavior)

Share this as part of onboarding docs or team playbooks

4. Rewrite or delete the rule you've muted three times

This one's simple. If a rule keeps getting suppressed:

It's either badly written,

Or not relevant for your stack,

Or your team hasn't been trained on how to address it
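If your tool can export its suppressions, spotting these rules is a simple count. A sketch, assuming a hypothetical export of { rule, file, reason } records and the "three times" threshold from the heading:

```javascript
// Return rules suppressed at least `threshold` times, most-muted first.
function rulesToRevisit(suppressions, threshold = 3) {
  const counts = new Map();
  for (const s of suppressions) {
    counts.set(s.rule, (counts.get(s.rule) ?? 0) + 1);
  }
  return [...counts]
    .filter(([, n]) => n >= threshold)
    .sort((a, b) => b[1] - a[1])
    .map(([rule, n]) => ({ rule, timesMuted: n }));
}

const suppressions = [
  { rule: "no-eval", file: "test/a.spec.js", reason: "test-only" },
  { rule: "no-eval", file: "test/b.spec.js", reason: "test-only" },
  { rule: "no-eval", file: "tools/build.js", reason: "build script" },
  { rule: "xss-unescaped-output", file: "src/admin.js", reason: "static string" },
];

const revisit = rulesToRevisit(suppressions);
// revisit → [{ rule: "no-eval", timesMuted: 3 }]
```

Reading the reasons next to the count usually tells you which of the three cases you're in: here, every no-eval mute is about non-production code, which points at scan scope rather than the rule itself.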

5. Stop asking "Does this pass?" and start asking "Does this teach?"

Passing a scan means nothing if the next commit does the same thing again. Make it your standard to always explain the why of every critical alert during PR reviews.

6. Use findings to influence code design, not just PR fixes

If a component consistently fails SAST, maybe it's not just the implementation. Maybe the component is designed to be too flexible, too smart, or too permissive.

7. Turn consistent patterns into lint rules before they show up in SAST

If the same 4 SAST warnings show up in 70% of your PRs, don't just keep fixing them. Turn them into ESLint / Pylint / whatever rules, so they never get to the security layer in the first place.
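For JavaScript, that means a custom ESLint rule (ESLint's built-in no-eval already covers the eval() case). Below is a sketch in ESLint's rule format: a meta object plus a create(context) function that returns AST visitors and reports through context.report. The banned pattern, res.send() called with a string built by +, is an illustrative choice, and the rule is deliberately naive: it is purely syntactic, so it misses HTML concatenated into a variable first, which is exactly the kind of flow SAST is for. To stay runnable without installing ESLint, it's exercised with a stub context.

```javascript
// Flags res.send("<...>" + value): HTML assembled by string concatenation.
const noConcatenatedSend = {
  meta: {
    type: "problem",
    docs: { description: "disallow res.send() with string-concatenated HTML" },
    schema: [],
  },
  create(context) {
    return {
      CallExpression(node) {
        const { callee } = node;
        const isResSend =
          callee.type === "MemberExpression" &&
          !callee.computed &&
          callee.object.type === "Identifier" &&
          callee.object.name === "res" &&
          callee.property.name === "send";
        const arg = node.arguments[0];
        if (isResSend && arg && arg.type === "BinaryExpression" && arg.operator === "+") {
          context.report({ node, message: "Build HTML with an escaping helper, not string concatenation." });
        }
      },
    };
  },
};

// In a project this object would live in a local plugin; here a stub
// context collects reports so the visitor logic can be checked directly.
const reports = [];
const visitors = noConcatenatedSend.create({ report: (r) => reports.push(r) });
visitors.CallExpression({
  type: "CallExpression",
  callee: {
    type: "MemberExpression", computed: false,
    object: { type: "Identifier", name: "res" },
    property: { type: "Identifier", name: "send" },
  },
  arguments: [{
    type: "BinaryExpression", operator: "+",
    left: { type: "Literal", value: "<h1>" },
    right: { type: "Identifier", name: "name" },
  }],
});
// reports.length === 1
```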

8. Build a culture of ignoring what doesn't matter — loudly

Not every alert is worth acting on. But ignoring it silently trains your team to distrust the tool. Instead, normalize this: "We're ignoring this issue in this context, because we've validated it's not exploitable, and we've documented why." Say it in the PR. Log it in the tool. Make it visible.

9. Treat dangerous patterns like UX bugs, not logic bugs

If devs keep writing insecure code, it's often not because they're careless; it's because the API encouraged it. The insecure path should be the one that makes you think twice, not the safe one.
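One way to apply this in JavaScript is to make the safe path the default: a tagged template (called html here) that escapes every interpolated value, so the natural way to build markup is also the secure one. A minimal sketch; html and escapeHtml are hypothetical helpers, meant for text inside elements and quoted attributes. URLs, inline scripts, and styles need context-specific handling this does not provide.

```javascript
const ESCAPES = { "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&#39;" };

function escapeHtml(value) {
  return String(value).replace(/[&<>"']/g, (ch) => ESCAPES[ch]);
}

// Tagged template: the literal parts are trusted (they're in your source code),
// and every ${...} value is escaped before being joined in.
function html(strings, ...values) {
  return strings.reduce(
    (out, str, i) => out + str + (i < values.length ? escapeHtml(values[i]) : ""),
    ""
  );
}

const name = "<script>alert(1)</script>";
const page = html`<h1>Hello, ${name}</h1>`;
// page === "<h1>Hello, &lt;script&gt;alert(1)&lt;/script&gt;</h1>"
```

With a helper like this in place, the unsafe version (plain string concatenation) becomes the odd one out in review, and a lint rule can enforce it.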

Wrapping Up: What SAST Is Really About

Static analysis isn’t just about catching bugs or ticking off compliance boxes.

At its best, it helps you understand your code from the inside out — how it processes input, where assumptions fall apart, and what patterns could become problems before anything reaches production.

But you only get that value when it’s set up to fit how developers actually think and work.

Thanks for sticking with this guide. If you’ve read this far, you're probably not just looking to “check the SAST box.” You're trying to improve how your team writes, reviews, and reasons about code. And that’s where real engineering maturity shows up.

What to Explore Next (If You're Serious About Getting This Right)

Map your gaps. Do you have a scanner for your code logic (SAST), dependencies (SCA), and release visibility (SBOM)?

Review your current tool's last 20 alerts. Were they helpful? Ignored? Triaged?

Ask your team how they feel about security feedback. If the words "annoying" or "random" come up, something's off in how your tooling is integrated.

Start small, but start visible. Pick one repo, one flow, and integrate useful feedback directly into PRs, not dashboards that nobody checks.

A Not So Quiet Plug, If You're Curious

We built CodeAnt.ai because we were tired of tools that made things noisier, not clearer.

If you want something that:

Fits inside your PR workflow

Flags security and quality issues with real context

Offers smart, one-click suggestions

Blocks only what actually matters

…then this might be the one worth trying.

No credit card. No pushy follow-ups. Just something you can test inside your workflow — and judge for yourself.

Everything’s green. You’ve written clean code, linted it, pushed to CI, all tests pass, and the PR gets approved. A few days later you get a message: “We found a vulnerability in the new form input. It’s letting users inject scripts.”

At first, it's frustrating. Then it's confusing. You start digging through your code. Turns out, the issue was in how the user input was being rendered, no escaping, no validation. The kind of thing a good reviewer might catch, but not always. Especially on a busy day.

Now the question hits you: why didn't the tools catch this?

This is where static code analysis, especially Static Application Security Testing (SAST), comes in. Yet many devs don’t use SAST properly. Some have never tried it. Others gave up after floods of false positives. That's fair. The way most static analyzers are built doesn't really help developers.

In this blog you'll learn:

What SAST is (and isn't)

How it works under the hood (no jargon, we promise)

What makes most SAST tools annoying

How to make it work for you, not against you

Let's start with the basics.

What is SAST?

So now you're probably thinking: "Alright, sounds like SAST could've caught that bug. But what even is it?"

Let's clear that up, without sounding like an OWASP dictionary. SAST (Static Application Security Testing) is exactly what it sounds like: analyzing your application's source code without running it. No browser. No test server. No live app. Just your raw code, scanned for potential vulnerabilities.

But where's it running. They'll throw you words like "secure SDLC," "left-shifted analysis," or "security-first architecture", and none of that helps you as a developer trying to ship code. So here's the better way to think about it:

SAST is like having a paranoid code reviewer sitting inside your IDE. Instead of asking "will this code run?", it's asking "can this code break?"

It's scanning through functions, variables, and inputs, and trying to figure out if anything you wrote could be dangerous if the wrong kind of data flows through it. That includes common vulnerabilities like:

Cross-site scripting (XSS)

Insecure file access

Hardcoded secrets

And plenty more

But unlike a linter (which mostly cares about formatting and syntax) or tests (which care about behavior), static code analysis tools dig into how data flows through your code, from input to sink. And because it doesn't run your code, it can work early, like, while you're still writing that feature. That's part the people mean when they say "shift left."

Now, to clarify one more thing, because this gets confused a lot:

SAST is not the same as SCA

Another tool you might've heard about is SCA (Software Composition Analysis). That one checks your dependencies, your package.json, requirements.txt, pom.xml, whatever, for known vulnerabilities in open-source libraries. It's useful. It's important. But it's not the same thing as SAST. Where SCA looks at other people's code, SAST looks at your code.

Here's the takeaway so far:

Static code analysis (via SAST) helps catch security flaws in your logic

It does this early, without running your app

It's not a replacement for tests or dependency scanners, it's a different layer of protection

Now that we've cleared that up, the next logical question is: “Okay, but how does it work under the hood?”

Let's go there.

How Static Code Analysis Works (For Real)

Most people throw around "SAST" without really understanding how it works. And most articles explaining it are... not great. They either oversimplify or overwhelm. This section won't do either. If you're a developer, you'll walk away knowing what static analysis tools do under the hood, so you can trust them, debug them, or even question them.

Let's see.

1. First, It Turns Your Code Into a Tree

When you write this line of code:

code res.send(req.query.name)

It seems simple. But a SAST tool doesn't "read" your code like a human. It parses it, meaning it breaks it down into its underlying structure, known as an Abstract Syntax Tree or AST.

Here's what that means: The above line becomes a tree-like structure:

code CallExpression ├── callee: MemberExpression │ ├── object: res │ └── property: send └── arguments: └── MemberExpression ├── object: req.query └── property: name

Why? Because the tool isn't interested in how the code looks, only what it does. It wants to understand:

Who is calling what

What data is being passed

Where that data originally came from

This is step one. No security analysis yet, just turning code into a format the machine can reason with.

2. Then It Tracks Tainted Data (That's you, req.query)

Once the code is parsed, static analyzers look for data coming from outside the app. These are called tainted sources, meaning untrusted.

Examples of tainted sources:

Express: req.body, req.query, req.params

React: event.target.value

Python Flask: request.args, request.form

PHP: $_GET, $_POST

This is where things get smart. Let's say you do this:

code const name = req.query.name; res.send("<h1>" + name + "</h1>");

The tool sees:

✅ name comes from user input

✅ it flows into the res.send() — a rendering sink

❌ and there's no escaping or sanitization

So even though you just see two simple lines of code, the tool simulates the path:

User Input → Variable → HTML Output → Not Sanitized → ⚠️ Potential XSS

3. Then It Checks: "Is This Dangerous?"

Now, not all user input is bad. Sometimes it's handled safely. Good SAST tools don't just scream every time they see req.query. They check what happens to the input. Take this:

code const name = req.query.name; const safeName = sanitizeHtml(name); res.send("<h1>" + safeName + "</h1>");

Here, the tainted data is interrupted by a sanitizer function. So the tool should not flag this. But that depends on whether the tool knows sanitizeHtml() is safe. Which leads to the next point...

4. Good SAST Tools Understand Context. Bad Ones Don't.

Here's the difference between a good SAST tool and a noisy one.

Bad SAST:

Flags every eval(), even in test code

Can't track variables across files

Doesn't know your sanitizers

Misses real bugs in favor of loud ones

Good SAST:

Follows data flow across function calls and file boundaries

Understands sanitization logic (even if it's custom)

Prioritizes risky flows over harmless ones

Gives meaningful, fixable alerts — not spam

This is where most static analyzers fall apart. They either under-approximate (miss bugs) or over-approximate (spam the dev). If you want to teach deeply: Show a multi-file example where input comes in one module, gets passed through two functions, and lands in a sink.

Then ask: Would your SAST catch this?
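Here is one way to build that test case. The three "files" are collapsed into one runnable block so it stands alone; in a real repo they would be separate modules, and the file names in the comments are hypothetical. User input enters in the route handler, passes through formatGreeting and then wrapCard, and ends up in an HTML string with no escaping anywhere on the path.

```javascript
// --- routes/profile.js (hypothetical) ---
// Source: the display name comes straight from the query string.
// In Express, this return value would be passed to res.send().
function handleProfile(req) {
  const greeting = formatGreeting(req.query.name);
  return wrapCard(greeting);
}

// --- services/greeting.js (hypothetical) ---
// Hop 1: looks harmless, just string formatting.
function formatGreeting(name) {
  return `Hello, ${name}!`;
}

// --- views/card.js (hypothetical) ---
// Hop 2 and sink: the value is interpolated into HTML unescaped.
function wrapCard(text) {
  return `<div class="card">${text}</div>`;
}

// Simulated request carrying a script payload.
const html = handleProfile({ query: { name: "<script>alert(1)</script>" } });
console.log(html);
```

A tool that looks at views/card.js in isolation sees a template string and no user input. Catching this requires following the value across both function calls and both file boundaries.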

What You Should Remember

If someone asks you tomorrow how SAST works, you should be able to say: "It turns your code into a tree, traces tainted data through it, flags risky flows, and (hopefully) tells you what's dangerous, before you ever deploy." That's it. No sales talk. No buzzwords. Just how it works.

Now that you know how SAST tools think, it's time to ask: why are most of them still a pain to use?

Let's get into that.

Why Most SAST Tools Fail in Real Life (From a Developer Who's Been Burned)

If you've ever actually tried running a SAST tool as a developer, not as an AppSec engineer reading PDF reports, you've probably had one of these moments:

Your CI lights up with 38 critical issues, and one of them flags a harmless test helper in a .spec.js file.

It warns you about eval() in a library you're not even using in production.

You spend an hour trying to "fix" something, only to realize it was a false positive caused by a misconfigured rule.

It's annoying. But it's not your team's fault. The problem is that most static analysis tools are built for audits, not for dev workflows. And that's the root of the disconnect.

1. They Think Security Issues Happen in Isolation

Here's the reality: the security bug usually isn't in the line that triggers the alert. It's three functions deep, across a service boundary, and tied to some weird edge case. But most SAST tools don't think like that. They treat your code like a bunch of unrelated files, so they flag a function that looks dangerous without understanding how it's used.

Example:

function renderUserHTML(input) {
  return `<div>${input}</div>`;
}

The tool sees this and screams:

"⚠️ Unescaped user input in HTML!"

But it doesn't bother to check if this input ever actually comes from the user. Maybe you call it like this:

const html = renderUserHTML("Welcome, admin!");

That's not a security issue. That's just... HTML. What the tool should do is trace the input. Is it tainted? Does it cross trust boundaries? Most can't. And if it can't, you get flooded with alerts that don't matter, and miss the one that does.

2. They Don't Respect How Real Projects Are Structured

Let's be real, codebases are messy.

You have utils, helpers, shared, and legacy folders.

Some files are 3 years old and untouched.

Some code is only meant to run in test/dev environments.

But many SAST tools just walk through the entire repo like it's flat. They:

Flag dev-only code in your test harness

Warn about eval() inside a node_modules shim

Crawl files ignored in .eslintignore or .gitignore

They don't care that a file is only ever imported in mock test setups — it's code, and they'll flag it. The result? You get a report full of red, but most of it is code that never even runs in production.
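If your tool can't be told which paths ship to production, a post-processing step can at least separate the noise. This is a minimal sketch, assuming findings arrive as objects with a `file` field (a hypothetical shape; check your scanner's actual output format) and that test, mock, script, and vendored paths should not block a merge. Prefer the scanner's own exclude settings when it has them.

```javascript
// Hypothetical path patterns for code that never runs in production.
const NON_PROD_PATTERNS = [
  /(^|\/)node_modules\//,
  /(^|\/)(test|tests|__tests__|__mocks__)\//,
  /\.(spec|test)\.[jt]sx?$/,
  /^scripts\//,
];

function isProductionPath(file) {
  return !NON_PROD_PATTERNS.some((re) => re.test(file));
}

// Split findings instead of deleting them, so nothing disappears silently.
function partitionFindings(findings) {
  const prod = [];
  const nonProd = [];
  for (const f of findings) (isProductionPath(f.file) ? prod : nonProd).push(f);
  return { prod, nonProd };
}

const findings = [
  { rule: "eval-usage", file: "src/api/user.js" },
  { rule: "eval-usage", file: "src/helpers/user.spec.js" },
  { rule: "eval-usage", file: "node_modules/some-shim/index.js" },
  { rule: "command-injection", file: "scripts/migrate.js" },
];

const { prod, nonProd } = partitionFindings(findings);
console.log(prod.map((f) => f.file)); // [ 'src/api/user.js' ]
console.log(nonProd.length);          // 3
```

Keeping non-production findings in their own bucket, rather than dropping them, leaves room to catch the occasional "dev-only" script that quietly ends up in a deploy path.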

3. They Assume You Have Time to Dig Through This Stuff

Here's a common scenario: You're in the middle of shipping a feature. You open a PR. The SAST scan runs. Suddenly, your pipeline fails, and critical security issues are found. You click through to the report. It's a third-party dashboard, behind auth, loading slowly. You wait. It opens a giant table. No clear explanation. No reproduction steps. No fix suggestion. Just: "Rule: Potential Command Injection in child_process.spawn() — Critical"

You don't even use child_process in your code. Turns out it's from an unused script in the scripts/ folder. By the time you figure that out, 20 minutes are gone. And your feature still isn't merged.

4. They Break The CI Without Giving You Context

This is one of the most frustrating sins: SAST tools that break builds, but don't help you understand why. You see this:

SAST FAILED: 5 High issues

That's it. No logs, no links, no context inline in the PR. Imagine if linting worked that way:

Lint failed: 17 issues. No details. Good luck

You'd disable ESLint in a second. But somehow, this is standard for many SAST tools. Failing CI should only happen if the issue is:

Real

Severe

Actionable right now

And unless the tool proves that in the output, it's doing more harm than good.
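Those three conditions can be written down as an explicit gate. The sketch assumes each finding carries `severity`, `confidence`, `file`, `line`, `rule`, `message`, and an `inDiff` flag for lines changed in this PR (all hypothetical fields you would map from your scanner's output). It fails only when a finding is high-severity, high-confidence, and on code the author just touched, and when it fails it prints where and why, the way a linter would.

```javascript
// Hypothetical finding shape; map your scanner's output onto it.
const SEVERITY_RANK = { low: 1, medium: 2, high: 3, critical: 4 };

function shouldBlock(f) {
  const severe = SEVERITY_RANK[f.severity] >= SEVERITY_RANK.high; // Severe
  const real = f.confidence === "high";                           // Real, as far as the tool can tell
  const actionable = f.inDiff === true;                           // Actionable in this PR
  return severe && real && actionable;
}

function runGate(findings) {
  const blocking = findings.filter(shouldBlock);
  for (const f of blocking) {
    // Same shape as a lint error: location, severity, rule, explanation.
    console.error(`${f.file}:${f.line}  ${f.severity}  ${f.rule}  ${f.message}`);
  }
  const rest = findings.length - blocking.length;
  if (rest > 0) console.error(`${rest} non-blocking finding(s) reported but not failing the build`);
  return blocking.length === 0 ? 0 : 1; // intended process exit code
}

const exitCode = runGate([
  { rule: "xss", severity: "high", confidence: "high", inDiff: true,
    file: "src/routes/profile.js", line: 12, message: "req.query.name reaches res.send() unescaped" },
  { rule: "eval-usage", severity: "medium", confidence: "low", inDiff: false,
    file: "src/legacy/util.js", line: 88, message: "eval() call" },
]);
console.log(exitCode); // 1
```

In a CI script you would assign the result to `process.exitCode`. The thresholds are a team decision; the point is that they are written down and every failure names a file, a line, and a reason.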

5. They Don't Let You Train or Tune the Tool Like a Teammate

Here's the irony: most static analyzers treat your code like a stranger. You've fixed the same warning in 8 places? Cool. It'll flag it again on the 9th. You know a certain pattern is safe in your app? There's no way to tell the tool, unless you want to write a custom suppression rule using YAML nested under 4 directories. What developers want is a tool that learns as they go. That adapts. That remembers what you muted and why. Not one that resets to zero every time it runs.
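Tools differ a lot here, but the usual mechanism behind "remembering" is a baseline: a stored fingerprint for each accepted finding, plus the reason it was accepted. This sketch assumes a fingerprint built from the rule ID, the file, and the whitespace-normalized source line (our own choice, so an entry survives line-number shifts), uses Node's built-in crypto module, and runs on hypothetical data.

```javascript
const crypto = require("crypto");

// Fingerprint ignores line numbers so unrelated edits above the code don't resurrect it.
function fingerprint(f) {
  const snippet = f.snippet.replace(/\s+/g, " ").trim();
  return crypto.createHash("sha256").update(`${f.rule}|${f.file}|${snippet}`).digest("hex").slice(0, 16);
}

// A muted finding must say why; the reason and author travel with the entry.
function mute(baseline, f, reason, by) {
  if (!reason || !reason.trim()) throw new Error("A suppression needs a reason");
  baseline.set(fingerprint(f), { rule: f.rule, file: f.file, reason, by });
}

// Only findings not already accepted in the baseline are reported.
function newFindings(baseline, findings) {
  return findings.filter((f) => !baseline.has(fingerprint(f)));
}

const baseline = new Map();
const f1 = { rule: "xss", file: "src/admin/banner.js", line: 10, snippet: "el.innerHTML = BANNER_TEXT;" };
mute(baseline, f1, "BANNER_TEXT is a build-time constant, never user input", "alice");

// Next run: same code moved down 5 lines, with different spacing.
const rerun = [{ ...f1, line: 15, snippet: "  el.innerHTML =   BANNER_TEXT;" }];
console.log(newFindings(baseline, rerun).length); // 0
```

If the flagged line itself changes (say, `BANNER_TEXT` becomes `userText`), the fingerprint changes too and the finding comes back, which is usually what you want.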

So, Why Are We Even Talking About SAST Then?

Because the idea behind SAST is sound. The problem is the execution. Most tools just weren't built with developers in mind. They were made for checklists, compliance reports, and PowerPoint slides. What if SAST were a real-time code reviewer that's:

Smart enough to trace tainted input across your app

Quiet when things are safe

Loud when things are risky

Context-aware and fix-focused

We're not dropping a pitch here, we'll show you how to integrate this kind of tooling into your flow in the next section. But just know this: If you've hated static analysis before — that's not your fault. It's the fault of tools that never considered how developers work. Let's fix that.

How to Actually Use SAST Without Hating It

By now, we've seen why most SAST tools flop in real-world engineering teams. They're noisy. They're vague. They break things without explaining why. But that doesn't mean static analysis is broken. It means most teams are using tools that weren't built for how developers actually work. So, what does a good integration look like? Let's walk through how to use static analysis, specifically SAST, in a way that feels natural, actionable, and developer-first. We'll also show how CodeAnt solves the hard parts without needing a 30-page config file or a full-time AppSec team.

1. Plug SAST into the Pull Request, Not Just the Pipeline

Most SAST tools treat CI/CD like a gatekeeper: scan on PR, fail the build, and leave you with a vague report. That's not helpful. You're busy. You're trying to get a PR merged, not read a 40-line vulnerability dump from an external dashboard. A smarter approach is to:

Run static analysis on every pull request.

Leave inline comments directly in the PR

Summarize the entire code change + issues in one spot

Here's what that looks like in practice using CodeAnt: recent scan activity shows commits automatically analyzed as part of PR workflows.

That's exactly how CodeAnt works. You commit, open a PR, and in under 2 minutes, you get:

A clean AI-generated summary of the code changes

Inline comments where issues were found

A full view of security risks, bugs, and code smells

And a central dashboard to track all this at once

2. Don't Just Detect Issues, Prioritize and Fix Them

Most tools dump a list of 50+ "possible" issues. You don't know what's real, what's critical, or what's even reachable. Good static analysis helps you triage:

Which flows actually involve user-controlled input

Which sinks are exploitable vs theoretical

Which issues block merging (and which ones can wait)

With CodeAnt.ai, this prioritization is built in. You don't get a flat report, you get a ranked view of risks, complexity, antipatterns, and overall code health.

This is why CodeAnt treats static analysis as a Code Quality and Security Gate, one that runs in CI, but only blocks PRs for:

Confirmed critical vulnerabilities

Secret exposures

Policy-breaking patterns

That way, you can get signal, not noise. You stay fast, but secure. And yes, you can configure this however you want using AI Code Review Configuration, down to custom prompts, severity thresholds, or team rules.

3. Fixes Shouldn't Live in PDFs. They Should Live in Your IDE.

If you've ever been told "here's the vuln, now go read this 6-page OWASP doc to fix it", you know the pain. You shouldn't have to piece together a fix from GitHub issues and StackOverflow. A real tool, like CodeAnt, goes further:

Flags issues and explains why they're risky

Suggests one-click fixes where possible

Even surfaces algorithmic inefficiencies, edge cases, and dead code
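For the XSS example from earlier, a typical suggested fix is small: escape the value at the point where it enters HTML. The `escapeHtml` helper below is a minimal hand-written sketch that covers text between tags and quoted attribute values only, not URLs, unquoted attributes, or script contexts. In a real app you would more likely rely on your framework's auto-escaping or a maintained library.

```javascript
// Minimal HTML escaping for text placed between tags or inside quoted attributes.
// Not sufficient for URLs, unquoted attributes, or <script>/<style> contexts.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;") // must run first so later entities aren't double-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Before: res.send("<h1>" + name + "</h1>");
// After (suggested fix), shown as a function so it runs without Express:
function renderHeading(name) {
  return "<h1>" + escapeHtml(name) + "</h1>";
}

console.log(renderHeading("<script>alert(1)</script>"));
// <h1>&lt;script&gt;alert(1)&lt;/script&gt;</h1>
```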

4. Let the Whole Team See What's Happening

SAST often lives in silos. Only a few senior engineers know what got flagged. Managers never see it. Junior devs don't know if they fixed it right. That's broken. Static analysis should be visible, trackable, and collaborative. That's why CodeAnt provides:

A real-time dashboard that shows every AI-generated comment

A count of open issues by repo, by team, by PR

A high-level summary that managers or leads can actually understand

Live breakdown of security issues by severity, repo, and status, built for team-wide visibility.

Click into a specific issue to see it in detail.

So whether you’re leading a team of 5 or managing an org of 500, you can instantly answer:

“How much risky code are we pushing?”

“What did the AI catch this week?”

“What are we improving over time?”

5. Customize It. Don't Just Suffer Through It.

Here's something most tools forget: your team writes code in a certain way. You use specific patterns, you have your own internal rules, and you review PRs your way. With CodeAnt, you configure AI code reviews using natural language prompts. Want to:

Flag all shell commands in Node.js backends?

Suppress comments on legacy code in a folder?

Require checks for missing docstrings in data models?

You can. It's as flexible as you are. And unlike legacy rule engines, it doesn't require diving into YAML or writing AST rules from scratch.

Bottom Line: Static Analysis Should Feel Like a Code Reviewer, Not an Alarm System

If a tool just throws alerts at you and slows you down, you’ll ignore it. That’s fair.

But if it helps you catch real issues before they land, explains what’s wrong, and gives you fixes, then it’s more than a tool. It’s a teammate.

That’s what we’ve tried to build with CodeAnt — something that blends code quality, security, and actual usability into a single experience.

Now that you’ve seen what good static analysis should feel like, let’s step back and clarify something that trips up even experienced developers:

What’s the difference between SAST, SCA, and SBOM — and when should you care about each?

Let’s break it down.

SAST vs. SCA vs. SBOM: Understand the Difference Before You Ship

In the previous section, we looked at how SAST tools can fit cleanly into a developer's workflow, reviewing code like a teammate, not a blocker. But the code you write is only one part of what ships. Instead of treating SAST, SCA, and SBOM as acronyms, think of them as three distinct questions you'll eventually be asked about every codebase:

"Is the code you wrote safe?"

"Are the libraries you used safe?"

"Can you prove what you shipped, and what was in it?"

Each question calls for a different tool. Let's walk through them properly.

1. Checking Your Own Code: That's SAST

SAST (Static Application Security Testing) is about logic you wrote. You're writing a feature. You take input from a user, pass it into a function, maybe render it, or hand it off to another part of your system. SAST traces that flow without running the app. It checks:

What paths user data can travel through

Whether that data ever reaches a risky place (e.g., DB, HTML, shell)

If anything's missing — like escaping, validation, or auth checks

This helps catch the kind of bugs that:

Tests didn't cover

Reviewers missed

End up as security issues in production

2. Checking Code You Didn't Write: That's SCA

Now think about what's in your package.json, requirements.txt, pom.xml, or Gemfile. Dozens, sometimes hundreds, of dependencies. SCA (Software Composition Analysis) scans the full dependency tree and compares it to vulnerability databases. If a library you use has a known exploit, even in a transitive dependency, SCA will catch it. It's not about theoretical logic bugs. It's about known problems:

Remote code execution in version X

Insecure crypto in version Y

Path traversal in a helper package you didn't even realize was bundled

SCA won't help you if you wrote an insecure flow. But it'll tell you if someone else's code is already compromised, and sitting in your repo.
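At its core, the SCA check is a lookup: for every package in the resolved dependency tree, including transitive ones, is the installed version inside a known-vulnerable range? The sketch below uses a made-up advisory list and a simplified "fixed in" model with plain major.minor.patch versions (no pre-release tags, no full semver range syntax). Real tools pull from advisory databases and handle far more cases.

```javascript
// Hypothetical advisories: versions below `fixedIn` are affected.
const ADVISORIES = [
  { pkg: "left-helper", fixedIn: "2.3.1", issue: "Path traversal" },
  { pkg: "tiny-crypto", fixedIn: "1.0.5", issue: "Insecure default cipher" },
];

// Compare plain x.y.z versions numerically (not full semver).
function lessThan(a, b) {
  const pa = a.split(".").map(Number);
  const pb = b.split(".").map(Number);
  for (let i = 0; i < 3; i++) {
    if (pa[i] !== pb[i]) return pa[i] < pb[i];
  }
  return false;
}

// Walk the tree depth-first so transitive dependencies are checked too.
function audit(node, path = [], hits = []) {
  for (const dep of node.dependencies || []) {
    const chain = [...path, `${dep.name}@${dep.version}`];
    for (const adv of ADVISORIES) {
      if (adv.pkg === dep.name && lessThan(dep.version, adv.fixedIn)) {
        hits.push(`${adv.issue}: ${chain.join(" > ")}`);
      }
    }
    audit(dep, chain, hits);
  }
  return hits;
}

const tree = {
  name: "my-app",
  dependencies: [
    { name: "web-framework", version: "4.1.0", dependencies: [
      { name: "left-helper", version: "2.2.0" }, // transitive, never installed directly
    ] },
    { name: "tiny-crypto", version: "1.0.5" }, // already on the fixed version
  ],
};

for (const hit of audit(tree)) console.log(hit);
// Path traversal: web-framework@4.1.0 > left-helper@2.2.0
```

Reporting the full chain matters in practice: it tells you which direct dependency you need to upgrade to get rid of the vulnerable transitive one.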

3. Keeping a Record of What You Shipped: That's SBOM

Let's say your company pushes a release on Friday. A new CVE is announced the next week. Your security team asks: "Did our last build include that vulnerable package?" This is where SBOM (Software Bill of Materials) comes in. It's like a snapshot of everything that made it into your build: source, deps, tools, infra, all versioned and signed off. SBOM doesn't catch bugs. It lets you answer: "What did we ship?" That matters for:

Post-incident forensics

Audit trails

Compliance (e.g., SOC 2, ISO, FedRAMP)
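An SBOM is structured data, which is what makes "did we ship it?" answerable in seconds. The sketch below builds a minimal document loosely modeled on the CycloneDX JSON format (`bomFormat`, `specVersion`, `metadata`, and `components` with Package URL identifiers) and then queries it. It is not a complete or schema-validated SBOM; in practice you would generate one with your build tooling. The release data is made up.

```javascript
// Build a minimal, CycloneDX-style SBOM from a flat list of resolved npm packages.
function buildSbom(appName, appVersion, packages) {
  return {
    bomFormat: "CycloneDX",
    specVersion: "1.5",
    metadata: {
      timestamp: new Date().toISOString(),
      component: { type: "application", name: appName, version: appVersion },
    },
    components: packages.map((p) => ({
      type: "library",
      name: p.name,
      version: p.version,
      // Unscoped names only; a scoped package (@scope/name) needs "@" percent-encoded in a real purl.
      purl: `pkg:npm/${p.name}@${p.version}`,
    })),
  };
}

// "Did our last build include that vulnerable package?"
function shipped(sbom, name, badVersions) {
  return sbom.components.filter((c) => c.name === name && badVersions.includes(c.version));
}

const fridayRelease = buildSbom("my-app", "3.8.0", [
  { name: "web-framework", version: "4.1.0" },
  { name: "left-helper", version: "2.2.0" },
]);

// A CVE lands the following week against left-helper 2.2.0 and 2.2.1.
const matches = shipped(fridayRelease, "left-helper", ["2.2.0", "2.2.1"]);
console.log(matches.map((c) => c.purl)); // [ 'pkg:npm/left-helper@2.2.0' ]
```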

Why This Separation Matters

A lot of teams use one of these and assume they're "secure." That's risky thinking. Each tool protects a different layer:

| Concern | You Need… |
|---|---|
| Your logic might be vulnerable | SAST |
| Your dependencies might be broken | SCA |
| Your release might have unknowns | SBOM |

And most real-world failures happen when one of these layers gets skipped or misunderstood.

Best Practices for Using SAST in 2025

Most teams have already wired up a scanner and added it to their CI. That's not the hard part. What separates real engineering orgs from those playing security theater is how they use SAST to shape how code is written, reviewed, and shipped. Here's how the best do it:

1. Use SAST as a Source of Truth for Tech Debt You Can't See

Good SAST alerts show you which parts of your system attract fragile logic: the kind of code people avoid touching because it breaks easily.
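One low-effort way to surface those areas is to aggregate findings by directory over time instead of reading them one PR at a time. This sketch assumes you can export findings with a `file` path and a `rule` (hypothetical fields), and it groups them by the first two path segments.

```javascript
// Group findings by their first `depth` path segments, e.g. "src/billing".
function hotspots(findings, depth = 2) {
  const counts = new Map();
  for (const f of findings) {
    const dir = f.file.split("/").slice(0, depth).join("/");
    const entry = counts.get(dir) || { dir, total: 0, rules: new Set() };
    entry.total += 1;
    entry.rules.add(f.rule);
    counts.set(dir, entry);
  }
  // Most findings first; many distinct rules hints at many different kinds of fragility.
  return [...counts.values()]
    .sort((a, b) => b.total - a.total)
    .map((e) => ({ dir: e.dir, total: e.total, distinctRules: e.rules.size }));
}

const history = [
  { file: "src/billing/invoice.js", rule: "sql-injection" },
  { file: "src/billing/refund.js", rule: "missing-auth-check" },
  { file: "src/billing/export.js", rule: "path-traversal" },
  { file: "src/profile/view.js", rule: "xss" },
];

console.log(hotspots(history)[0]); // { dir: 'src/billing', total: 3, distinctRules: 3 }
```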

2. Designate a "no-fix, just-learn" path for junior devs

Instead of throwing vague vulnerability alerts at them, let juniors:

Explore how taint flows triggered an alert

Manually trace the input/output logic

Write a PR comment explaining how it could have been worse

3. Document what SAST won't do

No tool covers everything. Instead of pretending otherwise:

List what SAST covers

List what it intentionally does not (e.g., runtime auth, role-based behavior)

Share this as part of onboarding docs or team playbooks

4. Rewrite or delete the rule you've muted three times

This one's simple. If a rule keeps getting suppressed:

It's either badly written,

Or not relevant for your stack,

Or your team hasn't been trained on how to address it
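If your tool can export its suppression log, finding these rules takes a few lines. The sketch assumes each suppression record has a `rule` field (a hypothetical shape) and uses the "three times" threshold from the heading.

```javascript
// Return rules suppressed at least `threshold` times, most-muted first.
function rulesToRevisit(suppressions, threshold = 3) {
  const counts = new Map();
  for (const s of suppressions) counts.set(s.rule, (counts.get(s.rule) || 0) + 1);
  return [...counts]
    .filter(([, n]) => n >= threshold)
    .sort((a, b) => b[1] - a[1])
    .map(([rule, n]) => `${rule}: muted ${n} times (badly written, irrelevant here, or a training gap?)`);
}

const log = [
  { rule: "no-eval-in-tests", file: "test/a.spec.js" },
  { rule: "no-eval-in-tests", file: "test/b.spec.js" },
  { rule: "no-eval-in-tests", file: "test/c.spec.js" },
  { rule: "xss", file: "src/admin/banner.js" },
];

for (const line of rulesToRevisit(log)) console.log(line);
```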

5. Stop asking "Does this pass?" and start asking "Does this teach?"

Passing a scan means nothing if the next commit does the same thing again. Make it your standard to always explain the why of every critical alert during PR reviews.

6. Use findings to influence code design, not just PR fixes

If a component consistently fails SAST, maybe it's not just the implementation. Maybe the component is designed to be too flexible, too smart, or too permissive.

7. Turn consistent patterns into lint rules before they show up in SAST

If the same 4 SAST warnings show up in 70% of your PRs, don't just keep fixing them. Turn them into ESLint / Pylint / whatever rules, so they never get to the security layer in the first place.
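For JavaScript, several of the usual suspects are already covered by core ESLint rules. Below is a minimal flat-config sketch in CommonJS form; which rules you promote is up to your team, and the `innerHTML` restriction is only an example of turning a recurring SAST finding into a lint error.

```javascript
// eslint.config.js (flat config, CommonJS form)
module.exports = [
  {
    files: ["src/**/*.js"],
    rules: {
      "no-eval": "error",         // eval(...)
      "no-implied-eval": "error", // setTimeout("code string"), setInterval("...")
      "no-new-func": "error",     // new Function("...")
      // Omitting `object` restricts the property on every object.
      "no-restricted-properties": [
        "error",
        { property: "innerHTML", message: "Use textContent or an escaping helper instead." },
      ],
    },
  },
];
```

Lint feedback usually shows up in the editor, well before a security scan runs, which leaves the SAST layer for cross-function flows a linter can't see.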

8. Build a culture of ignoring what doesn't matter — loudly

Not every alert is worth acting on. But ignoring it silently trains your team to distrust the tool. Instead, normalize this: "We're ignoring this issue in this context, because we've validated it's not exploitable, and we've documented why." Say it in the PR. Log it in the tool. Make it visible.

9. Treat dangerous patterns like UX bugs, not logic bugs

If devs keep writing insecure code, it's often not because they're careless; it's because the API encouraged it. You should have to think twice to write insecure code, not to remember how to escape input.
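One way to apply this is to make the safe path the default in the API itself. The sketch below is a hypothetical `html` tagged template: every interpolated value is escaped automatically, and opting out requires wrapping a value in an explicitly named `unsafeRaw()` call that stands out in review and is easy to grep or lint for. Both names are made up for illustration, and the escaping covers element text and quoted attributes only; several templating systems follow a similar escape-by-default design.

```javascript
const ESCAPES = { "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&#39;" };
const escapeHtml = (s) => String(s).replace(/[&<>"']/g, (ch) => ESCAPES[ch]);

// Marker class for trusted markup; the awkward name is the point.
class UnsafeRaw {
  constructor(markup) { this.markup = markup; }
}
const unsafeRaw = (markup) => new UnsafeRaw(markup);

// Tagged template: escapes every interpolation unless explicitly marked raw.
function html(strings, ...values) {
  let out = strings[0];
  values.forEach((v, i) => {
    out += (v instanceof UnsafeRaw ? v.markup : escapeHtml(v)) + strings[i + 1];
  });
  return out;
}

const userName = "<img src=x onerror=alert(1)>";
console.log(html`<p>Hello, ${userName}</p>`);
// <p>Hello, &lt;img src=x onerror=alert(1)&gt;</p>
console.log(html`<div>${unsafeRaw("<b>trusted</b>")}</div>`);
// <div><b>trusted</b></div>
```

With this shape, the insecure version takes extra, visible effort, while the default call is already safe.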

Wrapping Up: What SAST Is Really About

Static analysis isn’t just about catching bugs or ticking off compliance boxes.

At its best, it helps you understand your code from the inside out — how it processes input, where assumptions fall apart, and what patterns could become problems before anything reaches production.

But you only get that value when it’s set up to fit how developers actually think and work.

Thanks for sticking with this guide. If you’ve read this far, you're probably not just looking to “check the SAST box.” You're trying to improve how your team writes, reviews, and reasons about code. And that’s where real engineering maturity shows up.

What to Explore Next (If You're Serious About Getting This Right)

Map your gaps. Do you have a scanner for your code logic (SAST), dependencies (SCA), and release visibility (SBOM)?

Review your current tool's last 20 alerts. Were they helpful? Ignored? Triaged?

Ask your team how they feel about security feedback. If the words "annoying" or "random" come up, something's off in how your tooling is integrated.

Start small, but start visible. Pick one repo, one flow, and integrate useful feedback directly into PRs, not dashboards that nobody checks.

A Not So Quiet Plug, If You're Curious

We built CodeAnt.ai because we were tired of tools that made things noisier, not clearer.

If you want something that:

Fits inside your PR workflow

Flags security and quality issues with real context

Offers smart, one-click suggestions

Blocks only what actually matters

…then this might be the one worth trying.

No credit card. No pushy follow-ups. Just something you can test inside your workflow — and judge for yourself.

FAQs

What is Static Application Security Testing (SAST) and how is it different from tests or linters?

SAST vs SCA vs DAST vs SBOM...when do I use each?

Why do SAST tools raise false positives, and how do I reduce noise?

How do I integrate SAST into developer workflow without slowing releases?

How do we measure SAST effectiveness and ROI?
