AI Code Review

Dec 23, 2025

How to Review Code in Trunk-Based Development Workflows

Sonali Sood

Founding GTM, CodeAnt AI

Trunk-based development (TBD) is built for speed. Teams merge changes into a single shared trunk multiple times a day, work on short-lived branches, and rely on continuous integration to keep the codebase deploy-worthy at all times. As simple as this sounds, it completely reshapes how code reviews must work.

In traditional branching models, reviews can be batched, queued, or delayed for days. But in trunk-based workflows, review delays immediately block integration, slow delivery, and increase the risk of merge conflicts. That means teams need code review systems that are fast, consistent, and automated—without sacrificing accuracy or safety.

This guide explains exactly how to review code effectively in trunk-based development, what to automate, and how to build a review culture that keeps trunk healthy.

What Is Trunk-Based Development and Why It Changes Code Review

Trunk-based development revolves around a single core idea: everyone integrates small changes into trunk frequently—usually daily or multiple times per day.

Unlike long-lived feature branches, where a change might sit isolated for days or weeks, TBD requires constant merging. That means code review becomes time-sensitive and tightly integrated into the development loop.

Core differences that affect code review:

Branches are short-lived, often lasting only hours

Merges to trunk happen continuously

Long review queues are unacceptable

Small, incremental changes are the norm

Review must happen fast—without compromising quality

Trunk-based development isn’t just a branching strategy. It’s a philosophy of continuous integration, shared ownership, and rapid feedback loops.

Core Principles of Trunk-Based Development

These are the pillars that determine how code review must operate:

Single shared trunk: Everyone commits to the same main branch.

Short-lived branches: Branches are small, focused, and quickly merged.

Frequent integration: Code integrates often to reduce drift and conflicts.

Deployment readiness: Trunk remains stable enough to deploy anytime.

In TBD, review is not an optional step—it’s a continuous safety mechanism that enables fast integration without breaking production.

Trunk-Based Development vs. GitFlow Review Workflows

GitFlow and trunk-based development require very different approaches to code review.

Here’s a clear comparison:

| Aspect | Trunk-Based Development | GitFlow |
|---|---|---|
| Branch lifespan | Hours to a few days | Days to weeks |
| Review timing | Immediate or synchronous | Asynchronous queues acceptable |
| Merge frequency | Multiple times daily | Per feature or per sprint |
| Review scope | Small, focused changes | Large feature sets |

GitFlow allows review queues to build because branches live longer. Trunk-based development cannot tolerate that delay—reviews must be near-immediate.

How Short-Lived Branches Affect Review Timing

Short-lived branches create urgency.

If a branch sits open too long:

trunk moves ahead

conflicts emerge

developers lose context

integration becomes risky

That’s why TBD teams review continuously—not at the end of the day, not when convenient, but as part of the daily flow.
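One way to make this urgency visible is to flag branches that have been open longer than an agreed limit. The sketch below is illustrative only: the `Branch` record, the `stale_branches` helper, and the 24-hour threshold are assumptions, not part of any particular tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Branch:
    name: str
    created_at: datetime  # when the branch was cut from trunk


def stale_branches(branches, now, max_age=timedelta(hours=24)):
    """Return names of branches that have been open longer than max_age."""
    return [b.name for b in branches if now - b.created_at > max_age]


now = datetime(2025, 12, 23, 12, 0, tzinfo=timezone.utc)
branches = [
    Branch("fix-login-redirect", now - timedelta(hours=3)),
    Branch("refactor-billing", now - timedelta(hours=30)),
]
print(stale_branches(branches, now))  # ['refactor-billing']
```

A report like this could be posted to the team channel once or twice a day so that old branches are reviewed or split before trunk drifts further.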

Synchronous vs. Asynchronous Code Reviews for Trunk Dev Teams

In a world where changes must be reviewed quickly, the question becomes: should reviews be real-time or queued? The answer depends on team structure, collaboration style, and time zones.

When Pair Programming Replaces Pull Request Reviews

Pair programming is the ultimate synchronous review.

Benefits include:

code is reviewed as it’s written

no separate PR cycle

instant feedback

increased shared understanding

This works especially well for:

colocated teams

high-stakes subsystems

junior–senior pairing

design-heavy changes

In pair programming, the PR becomes a formality rather than the review venue.

When Asynchronous Reviews Still Work in Trunk-Based Workflows

Async reviews are common—but they need speed.

For TBD, async only works when:

reviewers respond within hours

automation handles the early checks

PRs are extremely small

clear review expectations exist

If async reviews take a day or more, you’re no longer practicing trunk-based development; you’re practicing delayed integration.

Hybrid Review Approaches for Distributed Teams

Globally distributed teams often use a hybrid model:

Authors open PRs

CodeAnt AI or CI automation reviews instantly

Reviewers follow up when online

Risk hotspots are flagged early to speed human review

This “AI-first, human-approved” model ensures trunk never waits for a time zone to wake up.

Code Review Best Practices for Trunk-Based Development

Reviewing code in TBD requires both speed and precision. Here are the best practices tailored specifically for fast integration cycles.

1. Keep Commits Small and Review-Ready

Small commits keep trunk clean. They:

reduce review load

isolate issues

simplify reverts

speed up approvals

A small, atomic PR is the foundation of high-velocity trunk-based work.
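A size budget can be checked automatically before a reviewer is assigned. The following is a minimal sketch; the `ChangeStats` record and the limits of 10 files and 200 changed lines are hypothetical defaults that each team would tune.

```python
from dataclasses import dataclass


@dataclass
class ChangeStats:
    files_changed: int
    lines_added: int
    lines_deleted: int


def size_warnings(stats, max_files=10, max_lines=200):
    """Return human-readable warnings if a change exceeds the size budget."""
    warnings = []
    total_lines = stats.lines_added + stats.lines_deleted
    if stats.files_changed > max_files:
        warnings.append(f"{stats.files_changed} files changed (budget: {max_files})")
    if total_lines > max_lines:
        warnings.append(f"{total_lines} lines changed (budget: {max_lines})")
    return warnings


print(size_warnings(ChangeStats(files_changed=3, lines_added=40, lines_deleted=10)))  # []
```

Returning warnings rather than failing outright leaves room for the occasional justified large change, such as a generated file or a mechanical rename.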

2. Review Before Every Merge to Trunk

This is the unbreakable rule: Nothing merges to trunk without review.

Skipping reviews undermines the entire workflow:

defects slip in

trunk becomes unstable

deployment confidence drops

Fast reviews don’t mean shallow reviews; they mean well-scoped reviews.

3. Use Feature Flags to Separate Review From Release

Feature flags let you:

merge incomplete work safely

reduce long-lived branches

limit blast radius

test in production safely

With flags, reviewers no longer need to check “is the feature ready for users?” They only check “is the code safe to merge?”
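As a rough illustration, a flag can be as simple as a lookup that defaults to off. Everything named here (`FLAGS`, `is_enabled`, `new_checkout_flow`, and the checkout functions) is hypothetical; production systems usually load flags from configuration or a dedicated flag service.

```python
# Hypothetical in-memory flag store; real systems load this from config
# or a flag service.
FLAGS = {"new_checkout_flow": False}


def is_enabled(flag_name, flags=FLAGS):
    """Unknown flags default to off so unfinished code stays dark."""
    return flags.get(flag_name, False)


def legacy_checkout(cart):
    return sum(cart)


def new_checkout(cart):
    # Incomplete work: merged to trunk and reviewed for safety,
    # but not yet released to users.
    return sum(cart) - 5  # hypothetical launch discount


def checkout(cart, flags=FLAGS):
    if is_enabled("new_checkout_flow", flags):
        return new_checkout(cart)
    return legacy_checkout(cart)


print(checkout([10, 20]))  # 30, because the flag is off
```

With this shape, the reviewer checks that the flagged path cannot run by accident and that the legacy path is unchanged; whether the new flow is ready for users is a separate release decision.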

4. Set Clear Review Turnaround Expectations

TBD cannot survive ambiguous review timelines.

Define expectations such as:

respond within 2–4 hours

complete review by end of day

escalate if blocked for more than one cycle

These expectations keep feedback loops tight.
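Expectations like these are easier to keep when they are checked mechanically. The sketch below classifies an open PR against a first-response target; the 4-hour value takes the upper end of the range above, and the function name and status labels are assumptions.

```python
from datetime import datetime, timedelta, timezone

FIRST_RESPONSE_SLA = timedelta(hours=4)  # upper end of the 2-4 hour target


def review_status(opened_at, first_response_at, now):
    """Classify a PR as 'responded', 'waiting', or 'overdue' against the SLA."""
    if first_response_at is not None:
        return "responded"
    if now - opened_at > FIRST_RESPONSE_SLA:
        return "overdue"
    return "waiting"


now = datetime(2025, 12, 23, 15, 0, tzinfo=timezone.utc)
opened = datetime(2025, 12, 23, 9, 0, tzinfo=timezone.utc)
print(review_status(opened, None, now))  # overdue
```

An "overdue" result is a natural trigger for the escalation step, for example by pinging a backup reviewer.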

5. Balance Human Review With Automation

Automation handles the repetitive parts:

linting

formatting

dependency checks

test execution

Humans handle:

business logic

architecture

edge cases

tradeoffs

This balance is essential for trunk stability.

Automating Code Review in CI/CD Pipelines for Trunk-Based Deployment

Automation isn’t “nice to have” in TBD—it is required to keep trunk safe while merging continuously.

Pre-Merge Automated Quality Checks

These should run before review even begins:

Linting & formatting: enforce consistency automatically

Unit tests: catch regressions

Build verification: confirm code compiles

Dependency scanning: detect vulnerable packages

When a PR fails these checks, the author can fix it before a reviewer spends any time on it.
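The checks above can be sketched as a small runner that executes each command and reports which ones failed. The commands here are placeholders that only invoke the Python interpreter so the example runs anywhere; in a real pipeline they would be replaced by the team’s own linter, test runner, build, and dependency scanner.

```python
import subprocess
import sys


def run_checks(checks):
    """Run each (name, argv) check in order; return the names that failed."""
    failed = []
    for name, argv in checks:
        result = subprocess.run(argv, capture_output=True, text=True)
        if result.returncode != 0:
            failed.append(name)
    return failed


# Placeholder commands (assumptions): swap in your real tools.
CHECKS = [
    ("lint", [sys.executable, "-c", "pass"]),
    ("unit-tests", [sys.executable, "-c", "assert 1 + 1 == 2"]),
    ("build", [sys.executable, "-c", "import json"]),
]

failures = run_checks(CHECKS)
print("failed checks:", failures)
```

A CI job would typically exit with a non-zero status when the list of failures is not empty, which keeps the PR out of the review queue until it is green.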

AI-Powered Code Review for Continuous Integration

AI-powered tools like CodeAnt AI add a new layer of review:

PR summaries

risk detection

maintainability scoring

complexity warnings

security red flags

line-by-line fix suggestions

This gives reviewers a guided path through the PR instead of starting from scratch.

Security Scanning and Quality Gates

Quality gates ensure merging is safe.

Common gates include:

secret detection

license compliance

high-severity vulnerability blocking

In trunk-based workflows, these gates are essential because merges happen so frequently.
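A gate of this kind reduces to a rule over scanner findings. The sketch below assumes a hypothetical `Finding` record and a policy in which any secret or license violation blocks the merge, while vulnerabilities block only at high or critical severity; real scanners report findings in their own formats.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    kind: str      # "secret", "license", or "vulnerability"
    severity: str  # "low", "medium", "high", or "critical"
    detail: str


BLOCKING_SEVERITIES = {"high", "critical"}


def blocking_findings(findings):
    """Return the findings that should block the merge."""
    blocking = []
    for f in findings:
        if f.kind in {"secret", "license"}:
            blocking.append(f)  # any leaked secret or license violation blocks
        elif f.kind == "vulnerability" and f.severity in BLOCKING_SEVERITIES:
            blocking.append(f)
    return blocking


findings = [
    Finding("vulnerability", "low", "outdated transitive dependency"),
    Finding("secret", "high", "API key committed in config file"),
]
print([f.detail for f in blocking_findings(findings)])
# ['API key committed in config file']
```

Keeping the policy in one small function makes it easy to review changes to the gate itself.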

Tools for Code Review in Trunk-Based Development Workflows

Different tools support different parts of the review lifecycle—choose ones that keep pace with fast-moving integration.

Pull Request Code Review Platforms

GitHub, GitLab, Azure DevOps, and Bitbucket provide:

PR discussions

inline comments

reviewer assignment

branch protections

But for high-velocity teams, native features may not be enough.

Static Analysis and Automated Review Tools

These help enforce standards:

ESLint

Prettier

Black

Linters and formatters like these keep codebase hygiene consistent at scale.

AI Code Review Assistants

AI tools are now essential:

they scale review coverage

provide context-aware suggestions

catch logic-level issues

reduce cognitive load on reviewers

CodeAnt AI goes further by combining:

AI review

SAST

static analysis

dependency scanning

quality scoring

in a single platform.

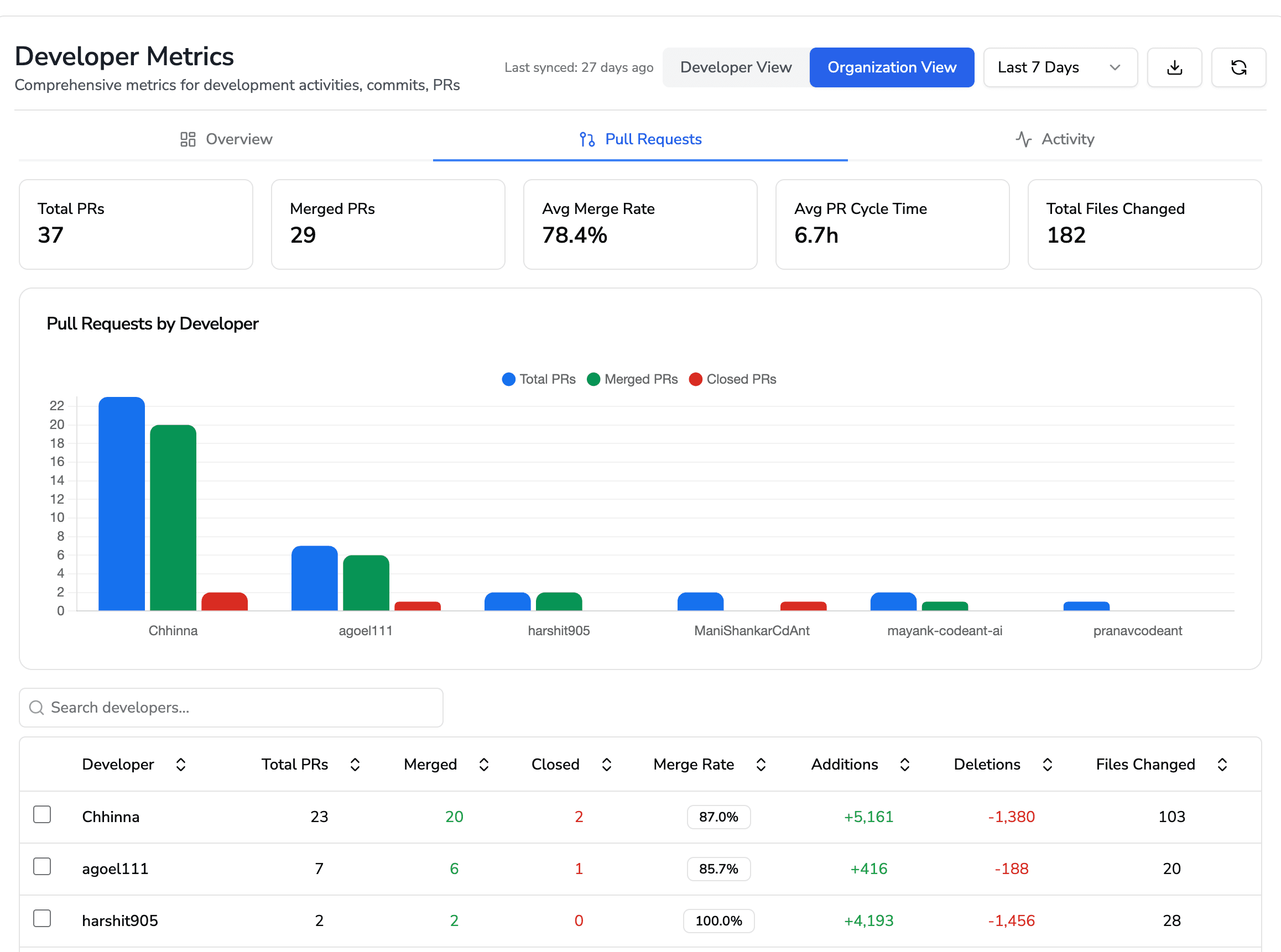

Code Review Metrics for Trunk-Based Teams

Measurement is crucial because feedback loops are short.

Review Cycle Time

Time from PR opened to PR approved. In TBD, this should be measured in hours, not days.
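As a worked example, cycle time can be computed from the opened and approved timestamps of each PR. The sample data is made up, and using the median rather than the mean is a choice made here so that a single slow PR does not dominate the number.

```python
from datetime import datetime
from statistics import median


def cycle_time_hours(opened_at, approved_at):
    """Hours between a PR being opened and being approved."""
    return (approved_at - opened_at).total_seconds() / 3600


# (opened_at, approved_at) pairs; illustrative data only.
prs = [
    (datetime(2025, 12, 22, 9, 0), datetime(2025, 12, 22, 11, 0)),   # 2 h
    (datetime(2025, 12, 22, 10, 0), datetime(2025, 12, 22, 15, 0)),  # 5 h
    (datetime(2025, 12, 22, 13, 0), datetime(2025, 12, 23, 13, 0)),  # 24 h
]
hours = [cycle_time_hours(opened, approved) for opened, approved in prs]
print(median(hours))  # 5.0
```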

Defect Escape Rate

How many bugs reach production despite review? An essential signal for review quality.

Review Coverage

Percentage of code changes reviewed. In TBD, this should be near 100%.
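Defect escape rate and review coverage are both simple ratios. The definitions below are assumptions, since teams count these differently: escape rate is taken as production defects over all defects found, and coverage as reviewed merges over all merges to trunk.

```python
def percentage(part, whole):
    """Return part/whole as a percentage, or 0.0 when there is nothing to measure."""
    return 100.0 * part / whole if whole else 0.0


def defect_escape_rate(found_in_production, found_before_release):
    """Share of all known defects that were only found in production."""
    total = found_in_production + found_before_release
    return percentage(found_in_production, total)


def review_coverage(reviewed_merges, total_merges):
    """Share of merges to trunk that had a review."""
    return percentage(reviewed_merges, total_merges)


print(defect_escape_rate(2, 18))  # 10.0
print(review_coverage(49, 50))    # 98.0
```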

Reviewer Load Distribution

Ensures no single engineer becomes a bottleneck. CodeAnt AI provides dashboards across all repos to help teams balance reviewer load.
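For teams that want a quick check of their own, load distribution can be approximated by counting completed reviews per reviewer. This is a sketch with made-up data; the 50% threshold for flagging a bottleneck is an assumption.

```python
from collections import Counter


def reviewer_load(reviews):
    """Count completed reviews per reviewer from (pr_id, reviewer) pairs."""
    return Counter(reviewer for _, reviewer in reviews)


def overloaded(load, max_share=0.5):
    """Return reviewers handling more than max_share of all reviews."""
    total = sum(load.values())
    return sorted(r for r, n in load.items() if total and n / total > max_share)


reviews = [(101, "ana"), (102, "ana"), (103, "ana"), (104, "ben")]
load = reviewer_load(reviews)
print(overloaded(load))  # ['ana']
```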

Common Code Review Pitfalls in Trunk-Based Workflows

Avoid these common mistakes that break trunk stability.

1. Skipping Reviews to Maintain Merge Velocity

Speed without quality breaks trunk. Fast ≠ careless.

2. Review Queues That Block Continuous Integration

Review queues are the enemy of trunk-based development.

Fix with:

smaller PRs

more reviewers

automation

clear SLAs

3. Inconsistent Standards Across Teams

Inconsistency leads to unpredictable code quality. Document standards and enforce them with automation.

4. Over-Reliance on Automated Checks Without Human Oversight

Automation catches patterns. Humans catch what depends on business context and intent. Both are required for TBD.

Scaling Code Review for Large Trunk-Based Development Teams

Large engineering teams often struggle with reviewer bottlenecks and uneven ownership. These practices help scale review capacity.

Code Ownership and Automated Review Routing

Use:

CODEOWNERS

team boundaries

domain ownership maps

Automate assignment to reduce delays and remove ambiguity.
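The routing idea can be sketched as a lookup from changed paths to owning teams. The rules and team handles below are hypothetical, and the matching uses Python’s `fnmatch`, which is simpler than the pattern syntax a real CODEOWNERS file uses. The "last matching rule wins" behavior mirrors how CODEOWNERS files are evaluated on GitHub.

```python
from fnmatch import fnmatch

# Hypothetical ownership rules, ordered like a CODEOWNERS file:
# later rules override earlier ones.
RULES = [
    ("*", ["@org/platform"]),
    ("services/billing/*", ["@org/billing"]),
    ("*.sql", ["@org/data"]),
]


def owners_for(path, rules=RULES):
    """Return the owners of a single path; the last matching rule wins."""
    owners = []
    for pattern, rule_owners in rules:
        if fnmatch(path, pattern):
            owners = rule_owners
    return owners


def reviewers_for(changed_paths, rules=RULES):
    """Return the sorted set of owners across all changed paths."""
    found = set()
    for path in changed_paths:
        found.update(owners_for(path, rules))
    return sorted(found)


print(reviewers_for(["README.md", "services/billing/invoice.py"]))
# ['@org/billing', '@org/platform']
```

In practice the hosting platform performs this assignment itself once a CODEOWNERS file exists; the sketch only shows the logic being automated.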

Modular Codebases and Review Boundaries

Modularization helps teams review only what they own, not the entire codebase. This keeps review load predictable as teams scale.

High-Volume Review Automation

Large teams generate hundreds of PRs weekly. At that volume, AI-assisted review is one of the few practical ways to maintain:

coverage

safety

codebase health

without increasing reviewer headcount.

Building a Review Culture That Powers Continuous Code Integration

Successful trunk-based development requires treating review as a priority, not a disruption. When review is fast, consistent, and automated, trunk stays healthy and deployment-ready.

Automation handles repetitive checks; humans focus on reasoning and correctness.

Teams ready to accelerate both delivery and code quality rely on platforms like CodeAnt AI.

Try the free 14-day trial at https://app.codeant.ai to learn more.