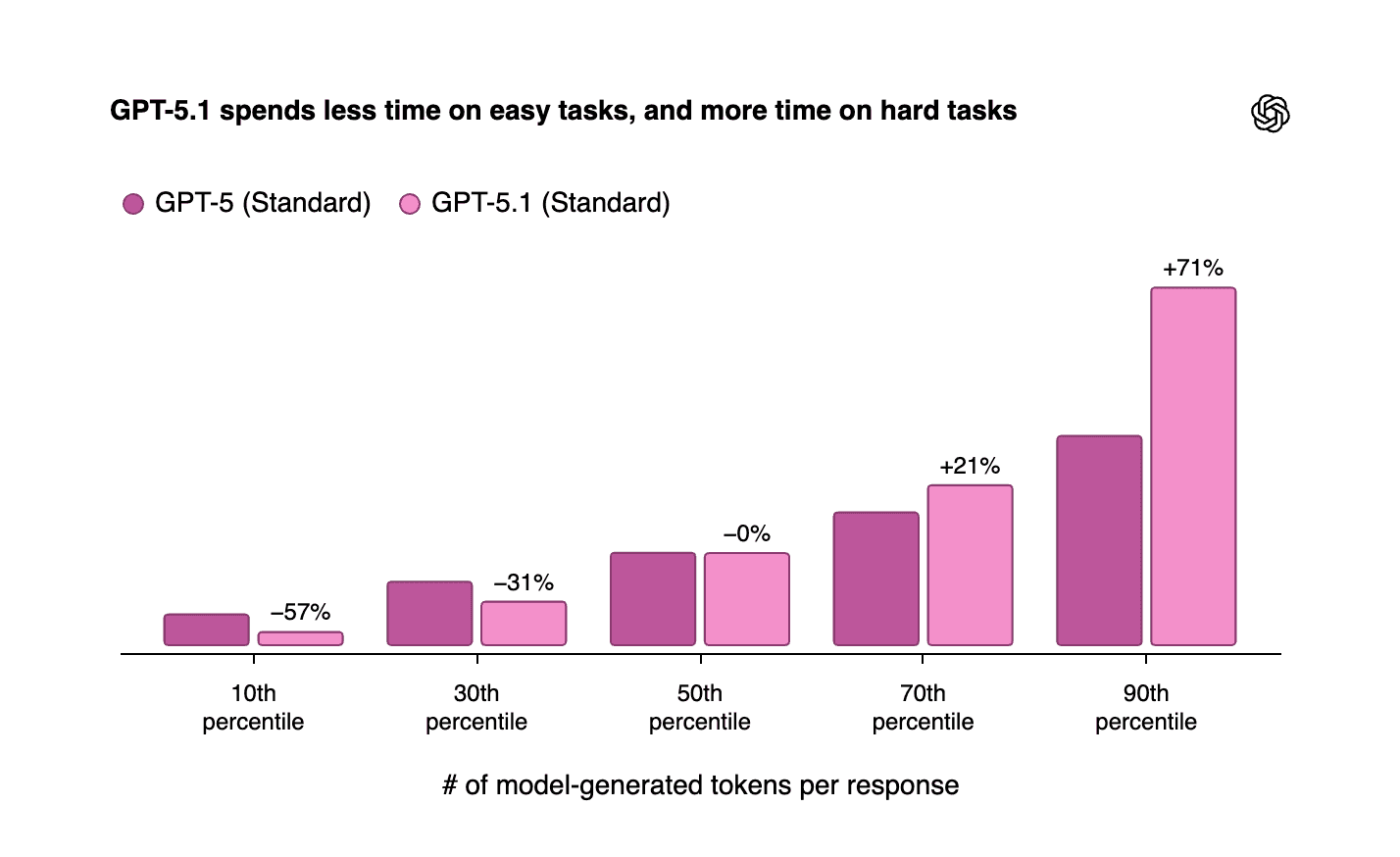

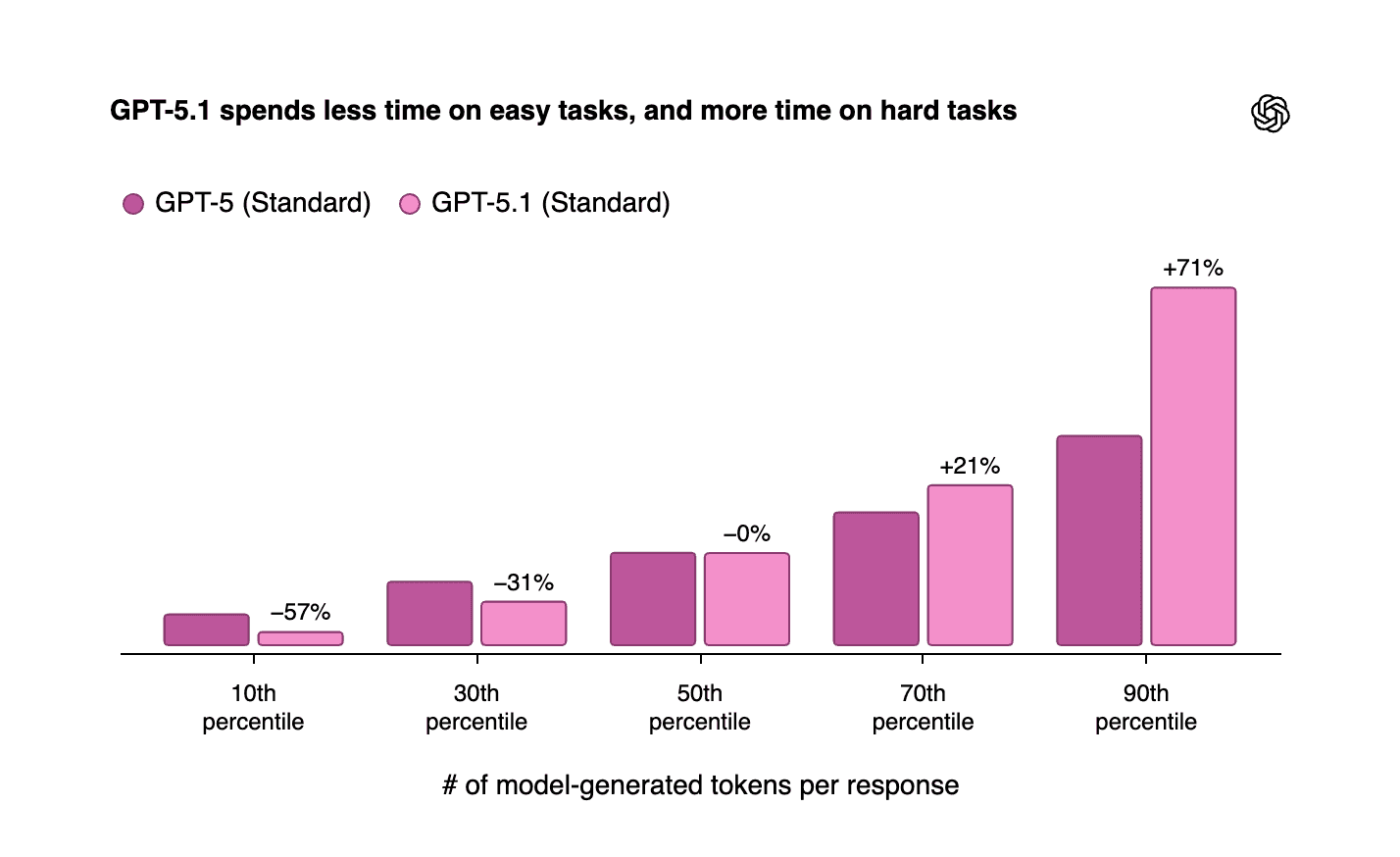

When building AI-powered code review systems, choosing the right model is critical. OpenAI offers two models suited to coding tasks:

GPT-5.1: A general-purpose reasoning model.

GPT-5.1-Codex: A specialized coding model optimized for "long-running, agentic coding tasks."

The conventional wisdom suggests that GPT-5.1-Codex should excel at code analysis tasks due to its specialized training for software engineering workflows. However, our testing revealed surprising results that challenge this assumption.

We set out to answer a simple question: Which model is better for automated code review tasks?

Our Test Setup

Test Codebases

Small Codebase (Simple Bug)

Bug Type: Missing `await` keyword in an async function call

Complexity: Single file, single issue

Files: 3 JavaScript files

Location: `api.js`, line 28: `const success = this.userService.updateUserProfile(userId, profileData);` (the call should use `await`)
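
To make the failure mode concrete, here is a minimal sketch. The `UserService` and `Api` classes below are hypothetical stand-ins, not the test codebase itself. An un-awaited async call returns a pending `Promise`, and a `Promise` is always truthy, so the buggy version reports success even when the update fails.

```javascript
// Hypothetical stand-in for the service in the test codebase.
class UserService {
  async updateUserProfile(userId, profileData) {
    // Simulate an async write that only succeeds for a known user.
    await new Promise((resolve) => setTimeout(resolve, 10));
    return userId === "known-user";
  }
}

class Api {
  constructor(userService) {
    this.userService = userService;
  }

  // Buggy: the Promise returned by the async call is never awaited.
  async updateProfileBuggy(userId, profileData) {
    const success = this.userService.updateUserProfile(userId, profileData);
    // `success` is a pending Promise, which is always truthy.
    return success ? "updated" : "failed";
  }

  // Fixed: await the call so `success` is the actual boolean result.
  async updateProfileFixed(userId, profileData) {
    const success = await this.userService.updateUserProfile(userId, profileData);
    return success ? "updated" : "failed";
  }
}

(async () => {
  const api = new Api(new UserService());
  // The update fails for an unknown user, but only the fixed version notices.
  console.log(await api.updateProfileBuggy("unknown-user", {})); // updated (wrong)
  console.log(await api.updateProfileFixed("unknown-user", {})); // failed
})();
```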

Large Codebase (Complex Bug)

Bug Type: Case sensitivity mismatch in permission lookup across multiple files

Complexity: Requires tracing flow through 5 interconnected files

Files: 8 files across multiple directories (models, services, middleware, config)

Location: `config/permissions.js`, line 22: `'Admin': ['manage_users', 'view_analytics']` (the key should be lowercase `'admin'`)

Impact: Admin users cannot access protected routes despite having the correct role
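
The sketch below reproduces this class of bug in a single file. The `hasPermission` helper, the config objects, and the assumption that user records store roles in lowercase are all hypothetical; in the test codebase the same flow spans five files. Renaming the key to `'admin'` is the direct fix, and normalizing keys to lowercase when the config is loaded is one defensive alternative.

```javascript
// Hypothetical reproduction of config/permissions.js with the buggy key.
const ROLE_PERMISSIONS_BUGGY = {
  Admin: ["manage_users", "view_analytics"], // should be 'admin'
  editor: ["edit_content"],
};

// Direct fix: the key matches the lowercase role stored on users (assumed).
const ROLE_PERMISSIONS_FIXED = {
  admin: ["manage_users", "view_analytics"],
  editor: ["edit_content"],
};

// Hypothetical middleware helper: an exact, case-sensitive key lookup.
function hasPermission(permissionsByRole, user, permission) {
  const allowed = permissionsByRole[user.role] || [];
  return allowed.includes(permission);
}

// Defensive alternative: lowercase every role key once, at load time.
function normalizeRoleKeys(permissionsByRole) {
  return Object.fromEntries(
    Object.entries(permissionsByRole).map(([role, perms]) => [role.toLowerCase(), perms]),
  );
}

const adminUser = { id: 1, role: "admin" };
console.log(hasPermission(ROLE_PERMISSIONS_BUGGY, adminUser, "manage_users")); // false (the bug)
console.log(hasPermission(ROLE_PERMISSIONS_FIXED, adminUser, "manage_users")); // true
console.log(hasPermission(normalizeRoleKeys(ROLE_PERMISSIONS_BUGGY), adminUser, "manage_users")); // true
```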

Agent Harness

We built a simple LangChain-based tool-calling agent with:

5 Tools: Bash, Read, LS, Glob, Grep for codebase exploration

Token Tracking: Comprehensive monitoring of token usage and tool calls

Azure OpenAI: Using Azure endpoints for both models

Configuration:

API Version: 2024-05-01-preview

Max Iterations: 50

Max Execution Time: 600 seconds
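
For readers who want to reproduce a similar setup, here is a framework-agnostic sketch of the loop such a harness runs, using the limits above. It is not our LangChain code and not the LangChain or Azure OpenAI API: `runAgent`, `callModel`, the message shape, and the mock tools are hypothetical placeholders. The loop sends the conversation to the model, records token usage, runs any tool calls from that turn concurrently, and stops when the model answers without requesting tools.

```javascript
// Hypothetical, framework-agnostic agent loop. NOT LangChain's or Azure OpenAI's API:
// `callModel` and the tools are placeholders supplied by the caller.
const MAX_ITERATIONS = 50; // "Max Iterations: 50"
const MAX_EXECUTION_MS = 600 * 1000; // "Max Execution Time: 600 seconds"

async function runAgent({ callModel, tools, systemPrompt, userPrompt }) {
  const messages = [
    { role: "system", content: systemPrompt },
    { role: "user", content: userPrompt },
  ];
  // Token and tool-call tracking, accumulated across the whole run.
  const stats = { llmCalls: 0, toolCalls: 0, promptTokens: 0, completionTokens: 0 };
  const start = Date.now();

  for (let i = 0; i < MAX_ITERATIONS; i++) {
    // The time budget is checked before each round trip.
    if (Date.now() - start > MAX_EXECUTION_MS) {
      throw new Error("max execution time exceeded");
    }

    // One LLM round trip; the whole conversation so far is sent each time.
    const reply = await callModel(messages);
    stats.llmCalls += 1;
    stats.promptTokens += reply.usage.promptTokens;
    stats.completionTokens += reply.usage.completionTokens;
    messages.push({ role: "assistant", content: reply.content, toolCalls: reply.toolCalls });

    // A reply without tool calls is treated as the final answer.
    if (!reply.toolCalls || reply.toolCalls.length === 0) {
      return { answer: reply.content, stats };
    }

    // Tool calls requested in the same turn are run concurrently.
    const results = await Promise.all(
      reply.toolCalls.map(async (call) => {
        const tool = tools[call.name];
        return tool ? tool(call.args) : `unknown tool: ${call.name}`;
      }),
    );
    stats.toolCalls += reply.toolCalls.length;
    reply.toolCalls.forEach((call, idx) => {
      messages.push({ role: "tool", toolCallId: call.id, content: String(results[idx]) });
    });
  }
  throw new Error("max iterations reached");
}

// --- Usage with a scripted mock model (all token counts are fake placeholders) ---
function makeScriptedModel(turns) {
  let turn = 0;
  return async () => turns[turn++];
}

const fakeUsage = { promptTokens: 100, completionTokens: 10 };
const scriptedTurns = [
  // Turn 1: a single Glob call.
  { content: "", toolCalls: [{ id: "g1", name: "Glob", args: { pattern: "**/*.js" } }], usage: fakeUsage },
  // Turn 2: four Read calls requested in one parallel batch.
  {
    content: "",
    toolCalls: ["a.js", "b.js", "c.js", "d.js"].map((path, i) => ({ id: `r${i}`, name: "Read", args: { path } })),
    usage: fakeUsage,
  },
  // Turn 3: final answer, no tool calls.
  { content: "Found the bug.", toolCalls: [], usage: fakeUsage },
];

const fakeTools = {
  Glob: async () => "a.js\nb.js\nc.js\nd.js",
  Read: async ({ path }) => `// contents of ${path}`,
};

runAgent({
  callModel: makeScriptedModel(scriptedTurns),
  tools: fakeTools,
  systemPrompt: "You are an expert code reviewer.",
  userPrompt: "Find the bug.",
}).then(({ stats }) => console.log(stats));
// { llmCalls: 3, toolCalls: 5, promptTokens: 300, completionTokens: 30 }
```

The scripted run mimics one Glob turn followed by a single parallel batch of four Reads, which is why it needs only three LLM calls.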

Test Prompt

System: You are an expert code reviewer specializing in JavaScript/Node.js applications. Your task is to analyze the codebase and identify any bugs or issues.

User: There is obviously one bug in this codebase. Please find that bug.

Models Tested

gpt-5.1-codex: OpenAI’s specialized coding model

gpt-5.1: OpenAI’s general-purpose reasoning model

Test Results

Both models successfully identified the bugs in both codebases. However, their approaches and efficiency differed dramatically.

Small Codebase Results

GPT-5.1-Codex Performance:

✓ Found Bug: Missing await in api.js:28

Duration: 14.56 seconds

Tool Calls: 5 (LS, LS, Read, Read, Read - sequential)

LLM Calls: 6

Total Tokens: 9,233

- Prompt Tokens: 8,089

- Completion Tokens: 1,144

Avg Tokens per Call: 1,538.83

GPT-5.1 Performance:

✓ Found Bug: Missing await in api.js:28

Duration: 8.00 seconds

Tool Calls: 5 (Glob, Read, Read, Read, Read - parallel)

LLM Calls: 3

Total Tokens: 4,571

- Prompt Tokens: 3,900

- Completion Tokens: 671

Avg Tokens per Call: 1,523.67

Key Observation: GPT-5.1 was 1.82x faster and used 50.5% fewer tokens (4,571 vs. 9,233) while finding the same bug.

Large Codebase Results

GPT-5.1-Codex Performance:

✓ Found Bug: Case sensitivity mismatch in permissions.js:22

Duration: 25.72 seconds

Tool Calls: 13 (6 LS sequential, 7 Read sequential)

LLM Calls: 14

Total Tokens: 37,890

- Prompt Tokens: 37,144

- Completion Tokens: 746

Avg Tokens per Call: 2,706.43

...

GPT-5.1 Performance:

✓ Found Bug: Case sensitivity mismatch in permissions.js:22

Duration: 13.89 seconds

Tool Calls: 8 (1 Glob, 7 Read parallel)

LLM Calls: 3

Total Tokens: 7,855

- Prompt Tokens: 6,561

- Completion Tokens: 1,294

Avg Tokens per Call: 2,618.33

...

Key Observation: GPT-5.1 was 1.85x faster and used 79.3% fewer tokens on the complex multi-file bug.

Performance Comparison

| Metric | Small Codebase | Large Codebase |
| --- | --- | --- |
| Speed Winner | GPT-5.1 (1.82× faster) | GPT-5.1 (1.85× faster) |
| Token Efficiency | GPT-5.1 (50.5% fewer) | GPT-5.1 (79.3% fewer) |
| Tool Calls (Codex) | 5 sequential | 13 sequential |
| Tool Calls (GPT-5.1) | 5 (1 parallel batch) | 8 (1 parallel batch) |
| LLM Calls (Codex) | 6 | 14 |
| LLM Calls (GPT-5.1) | 3 | 3 |
| Codex Duration | 14.56s | 25.72s |
| GPT-5.1 Duration | 8.00s | 13.89s |
| Codex Tokens | 9,233 | 37,890 |
| GPT-5.1 Tokens | 4,571 | 7,855 |
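
The derived figures can be recomputed from the raw measurements as a quick sanity check. On the small codebase, for example, GPT-5.1 used about 49.5% as many tokens as Codex, which is a 50.5% reduction. The `runs` object and `compare` helper are just illustrative names.

```javascript
// Raw measurements copied from the results above.
const runs = {
  small: {
    codex: { seconds: 14.56, llmCalls: 6, totalTokens: 9233 },
    gpt51: { seconds: 8.0, llmCalls: 3, totalTokens: 4571 },
  },
  large: {
    codex: { seconds: 25.72, llmCalls: 14, totalTokens: 37890 },
    gpt51: { seconds: 13.89, llmCalls: 3, totalTokens: 7855 },
  },
};

// Derive speedup, token reduction, and average tokens per LLM call.
function compare({ codex, gpt51 }) {
  return {
    speedup: (codex.seconds / gpt51.seconds).toFixed(2), // times faster GPT-5.1 was
    tokenReductionPct: ((1 - gpt51.totalTokens / codex.totalTokens) * 100).toFixed(1),
    codexAvgPerCall: (codex.totalTokens / codex.llmCalls).toFixed(2),
    gpt51AvgPerCall: (gpt51.totalTokens / gpt51.llmCalls).toFixed(2),
  };
}

for (const [name, run] of Object.entries(runs)) {
  console.log(name, compare(run));
}
// small: speedup 1.82, tokenReductionPct 50.5, avg per call 1538.83 vs 1523.67
// large: speedup 1.85, tokenReductionPct 79.3, avg per call 2706.43 vs 2618.33
```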

Tool-Calling Patterns

Codex → sequential LS pattern

GPT-5.1 → parallel Glob + Read batch
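
One way to see why the sequential pattern is costly is a simplified cost model. It assumes, as an approximation, that every LLM call resends the full conversation and that each tool call plus its result adds a fixed number of tokens; the 1,000 and 300 token figures are arbitrary placeholders, not measurements. Under this model the number of LLM calls equals the number of tool-call turns plus one final answer, which matches the measured counts (6 and 14 for Codex, 3 and 3 for GPT-5.1), and prompt tokens grow roughly quadratically with the number of sequential turns.

```javascript
// Simplified cost model (assumption): each LLM call resends the whole conversation,
// and every tool call plus its result adds a fixed number of tokens to it.
const BASE_TOKENS = 1000; // placeholder: system prompt + user prompt
const TOKENS_PER_TOOL_RESULT = 300; // placeholder: one tool call and its output

// `batchSizes` lists how many tool calls the model requests in each turn.
function simulate(batchSizes, baseTokens, tokensPerToolResult) {
  let context = baseTokens;
  let promptTokens = 0;
  let llmCalls = 0;
  for (const size of batchSizes) {
    promptTokens += context; // this call sees everything so far
    llmCalls += 1;
    context += size * tokensPerToolResult; // this batch's results join the context
  }
  promptTokens += context; // final call that produces the answer
  llmCalls += 1;
  return { llmCalls, promptTokens };
}

// Large codebase: 13 single-call turns (Codex) vs. Glob then one batch of 7 Reads (GPT-5.1).
console.log("sequential:", simulate(Array(13).fill(1), BASE_TOKENS, TOKENS_PER_TOOL_RESULT));
// sequential: { llmCalls: 14, promptTokens: 41300 }
console.log("batched:", simulate([1, 7], BASE_TOKENS, TOKENS_PER_TOOL_RESULT));
// batched: { llmCalls: 3, promptTokens: 5700 }
```

With these placeholder numbers the 13-turn sequential plan accumulates far more prompt tokens than the two-turn batched plan, mirroring the direction (not the exact size) of the gap we measured.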

Analysis: Why GPT-5.1 Outperformed GPT-5.1-Codex

Our Initial Hypothesis

“gpt-5.1-codex models are optimized for long-running, agentic coding tasks…” — OpenAI

Key Reasons for the Performance Gap

Parallel vs Sequential Tool Calling

Task Type Mismatch

Reasoning Mode Optimization

Tool Call Strategy Differences

Important Caveat: Potential Harness Optimization Issue

Our Conclusion

For our specific use case (autonomous bug finding in code review), GPT-5.1 is clearly superior: about 1.8x faster, roughly 50-80% fewer tokens, and a more efficient tool-calling strategy.

However, this may not reflect GPT-5.1-Codex’s full capabilities because:

The task (analysis) doesn’t align with Codex’s strength (generation with iteration)

The harness likely isn’t optimized for Codex’s expected workflow

Limited documentation made proper Codex optimization difficult

When to Use Each Model

| Use GPT-5.1 for | Use GPT-5.1-Codex for |
| --- | --- |
| Code analysis & bug finding | Long-running code generation |
| Parallel exploration | Iterative development loops |
| Low-latency operations | Multi-file refactoring |
| Multiple independent tool calls | Persistent iteration over hours |
| General coding assistance | Codex-optimized harness workflows |
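
If you route requests between the two models, the table above can be expressed as a small lookup. This is a hypothetical sketch: the task-kind labels and the deployment names are placeholders (Azure OpenAI deployment names are chosen by whoever creates the deployment), and defaulting to GPT-5.1 is simply a choice based on our results.

```javascript
// Hypothetical task router derived from the table above.
// Task kinds and deployment names are placeholders.
const MODEL_FOR_TASK = {
  analysis: "gpt-5.1", // code analysis and bug finding
  exploration: "gpt-5.1", // parallel, low-latency exploration
  assistance: "gpt-5.1", // general coding assistance
  generation: "gpt-5.1-codex", // long-running code generation
  refactoring: "gpt-5.1-codex", // multi-file refactoring
  iteration: "gpt-5.1-codex", // iterative development loops
};

// Unknown task kinds fall back to the general-purpose model.
function pickModel(taskKind, fallback = "gpt-5.1") {
  return MODEL_FOR_TASK[taskKind] ?? fallback;
}

console.log(pickModel("analysis")); // gpt-5.1
console.log(pickModel("refactoring")); // gpt-5.1-codex
console.log(pickModel("unknown")); // gpt-5.1
```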

We’re eager to learn if others have found ways to optimize GPT-5.1-Codex for analysis tasks or have different experiences with these models.

