
Dec 22, 2025

How to Review Code Faster When Merge Conflicts Slow Your Team Down

Sonali Sood

Founding GTM, CodeAnt AI

Merge conflicts and slow code reviews are two silent killers of engineering velocity. Most teams treat them as routine nuisances, but their impact compounds fast: stale branches, delayed feedback, abandoned PRs, rushed approvals, and massive context-switching losses. By the time conflicts surface, teams have already lost hours or days—not because the code was bad, but because the workflow was inefficient.

This guide explains why merge conflicts cost more than you think, what causes slow reviews, and how teams can adopt scalable, modern review workflows (including AI-assisted code review) to move faster without sacrificing code quality.

Why Merge Conflicts and Code Review Delays Cost More Than You Think

Teams often underestimate the real cost of merge conflicts. A conflict isn’t just an annoying Git message—it’s a cascading delay that affects the entire delivery chain.

Velocity Impact on Release Cycles

When a pull request sits open too long, the codebase continues evolving around it. New changes land in main, dependencies shift, and integration points diverge. Even a perfectly written PR can become outdated in a matter of days.

This slows release cycles because:

dependent features can’t move forward

QA has to test rebased code multiple times

product managers must adjust timelines

engineers revisit old work instead of progressing

Velocity isn’t just “how fast we merge PRs.” It’s how quickly the team delivers value while maintaining stability.

Developer Productivity and Context Switching Losses

Every engineer knows the pain: You open a PR you wrote last week and think, “What is this logic again?”

Long waits force developers to:

re-understand code

re-evaluate decisions

re-run test scenarios

re-explain logic to reviewers

This cognitive reset is expensive. Even a 10–15 minute re-ramp per PR adds up across dozens of developers and hundreds of PRs: at 15 minutes each, 200 PRs cost 50 engineer-hours.

Business Costs of Shipping Delays

When review delays pile up, the business pays the price:

missed release dates

slower customer feedback

delayed onboarding of new features

mounting tech debt from diverging branches

Most organizations don’t quantify the cost of review delays, but it compounds silently every sprint.

What Causes Merge Conflicts and Slow Code Reviews

Before fixing review workflows, teams need to understand the root causes. These are the highest-impact friction points.

Long-Lived Branches and Stale Pull Requests

The longer a branch stays open, the more it drifts from main. Divergence increases the chance of conflict, and conflict increases the time needed to merge safely.

A “long-lived branch” is any branch where changes accumulate for days without syncing. Many teams unintentionally create them by:

waiting too long for reviews

batching too many changes together

avoiding merges until the feature is “perfect”

Stale PRs are conflict magnets.

Oversized Pull Requests That Overwhelm Reviewers

Large, monolithic PRs slow reviews more than almost anything else. Reviewers tend to put them off because they demand long stretches of uninterrupted focus.

Oversized PR problems include:

Too many files changed → reviewers can’t hold context

Mixed concerns → refactors bundled with new features

No clear focus → reviewers don’t know where to start

A PR shouldn’t be a novel. It should be a clear, scoped change.
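One way to make "scoped" concrete is a size check that runs when a PR is opened. The sketch below is illustrative only: the thresholds (`MAX_FILES`, `MAX_CHANGED_LINES`) and the `PullRequestStats` shape are assumptions, not values taken from this guide or from any particular tool.

```python
from dataclasses import dataclass

# Hypothetical thresholds; tune them to your team's norms.
MAX_FILES = 15
MAX_CHANGED_LINES = 400


@dataclass
class PullRequestStats:
    files_changed: int
    lines_added: int
    lines_deleted: int


def size_warnings(stats: PullRequestStats) -> list[str]:
    """Return human-readable warnings for a PR that looks too large to review well."""
    warnings = []
    if stats.files_changed > MAX_FILES:
        warnings.append(f"{stats.files_changed} files changed (limit {MAX_FILES})")
    changed = stats.lines_added + stats.lines_deleted
    if changed > MAX_CHANGED_LINES:
        warnings.append(f"{changed} lines changed (limit {MAX_CHANGED_LINES})")
    return warnings
```

An empty list means the PR is within limits. A non-empty list is better treated as a nudge to split the PR than as a hard failure.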

Unclear Code Review Standards and Expectations

When teams lack review guidelines, every reviewer invents their own standards. This leads to:

inconsistent feedback

subjective debates

unclear expectations for authors

unnecessary back-and-forth

Teams without checklists or documented rules tend to review slower, because every PR reopens the same debates.

Reviewer Bottlenecks From Unbalanced Workloads

Many teams rely too heavily on one or two senior engineers, who then become bottlenecks:

every critical PR waits for them

they can’t get to everything

review queues pile up

dependent teams get blocked

This “hero reviewer” pattern is unsustainable.

How to Prevent Merge Conflicts Before They Start

Here is a structured, sequential system teams can adopt immediately.

1. Keep Branches Short-Lived With Trunk-Based Development

Trunk-based development is the practice of merging small, continuous changes into main frequently. Short-lived branches naturally reduce merge drift because they’re updated regularly and never diverge far from the canonical codebase.

Teams using trunk-based workflows typically see far fewer merge conflicts, and the conflicts that do occur are smaller.

2. Break Large Changes Into Smaller Pull Requests

Scoping PRs to a single logical change reduces conflicts and speeds up feedback.

Dependent PRs? Use stacked PRs (vertical chaining) where each PR builds on the previous one. This breaks a large initiative into bite-sized reviews without blocking progress.

Small PRs move faster. Fast movement prevents conflicts.

3. Rebase and Sync With Main Frequently

A daily rebase habit keeps branches aligned with main. Rebasing early ensures conflicts are small, local, and fixable—before they grow into multi-file disasters.

Developers with less Git experience may need clarity: a rebase replays your branch's commits on top of the latest commits from main, so the branch no longer lags behind it.
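To see how far a branch has drifted before rebasing, you can count the commits on each side. The sketch below wraps `git rev-list --left-right --count`, which prints two tab-separated numbers. It assumes the remote-tracking branch is named `origin/main` and that `git fetch` has been run recently; the function names are illustrative.

```python
import subprocess


def parse_drift(rev_list_output: str) -> tuple[int, int]:
    """Parse the output of `git rev-list --left-right --count base...HEAD`.

    Git prints two counts: commits reachable only from the left side (the base)
    and commits reachable only from the right side (the current branch).
    """
    behind, ahead = rev_list_output.split()
    return int(behind), int(ahead)


def branch_drift(base: str = "origin/main") -> tuple[int, int]:
    """Return (commits_behind_base, commits_ahead_of_base) for the current branch."""
    result = subprocess.run(
        ["git", "rev-list", "--left-right", "--count", f"{base}...HEAD"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_drift(result.stdout)
```

A steadily growing "behind" count is a signal to rebase before the conflicts get larger.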

4. Use Feature Flags to Merge Incomplete Work Safely

Feature flags allow you to merge partially complete work without exposing it to users. This keeps branches short-lived, reduces divergence, and prevents the nightmare of late-stage conflicts.

Flags make merging a safe, continuous operation.
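A minimal sketch of the idea, assuming flags are read from environment variables. The `FEATURE_` prefix, the `new_checkout` flag, and the checkout functions are all hypothetical names chosen for illustration; real projects often use a flag service or config file instead.

```python
import os


def flag_enabled(name: str, default: bool = False) -> bool:
    """Read a flag from an environment variable such as FEATURE_NEW_CHECKOUT=1."""
    raw = os.environ.get(f"FEATURE_{name.upper()}")
    if raw is None:
        return default
    return raw.strip().lower() in {"1", "true", "on", "yes"}


def legacy_checkout(cart_total: float) -> str:
    return f"legacy checkout: {cart_total:.2f}"


def new_checkout(cart_total: float) -> str:
    # Incomplete work can live on main because nothing reaches it by default.
    return f"new checkout: {cart_total:.2f}"


def checkout(cart_total: float) -> str:
    """Route to the new code path only when the flag is explicitly turned on."""
    if flag_enabled("new_checkout"):
        return new_checkout(cart_total)
    return legacy_checkout(cart_total)
```

Because the flag defaults to off, merging the unfinished `new_checkout` path changes nothing for users until someone enables it.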

How to Speed Up Code Reviews Without Sacrificing Quality

This is the heart of the problem: how do you move faster and keep quality high?

1. Set Clear Time-to-Review Expectations

Ambiguity slows everything. Setting team-wide SLAs such as:

“initial review within 4–6 business hours”

“all PRs reviewed same day unless complex”

reduces review anxiety and avoids unintentional delays.
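An SLA only helps if breaches are visible. The following sketch flags PRs that have waited too long for a first review. It assumes a six-hour limit measured in wall-clock time (business-hours arithmetic is deliberately left out), and the input mapping of PR identifiers to open times is a hypothetical shape.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical SLA in wall-clock hours; business-hours math is omitted for brevity.
FIRST_REVIEW_SLA = timedelta(hours=6)


def overdue_prs(opened_at_by_pr: dict[str, datetime], now: datetime) -> list[str]:
    """Return the PRs that have waited longer than the SLA without a first review."""
    return sorted(
        pr
        for pr, opened_at in opened_at_by_pr.items()
        if now - opened_at > FIRST_REVIEW_SLA
    )


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    waiting = {"PR-1": now - timedelta(hours=8), "PR-2": now - timedelta(hours=2)}
    print(overdue_prs(waiting, now))
```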

2. Use Self-Review as the First Quality Gate

Before requesting review, authors should perform a quick self-review:

Read the diff with fresh eyes

Remove debugging artifacts (console logs, TODOs, commented-out code)

Verify tests and CI checks pass

Self-review can eliminate roughly 30–40% of the mistakes reviewers normally catch.
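Part of the self-review checklist can be automated. The sketch below scans a unified diff for leftover debugging artifacts on added lines. The patterns are illustrative assumptions; extend them for the languages and conventions your team actually uses.

```python
import re

# Illustrative patterns only; adjust for your own codebase.
ARTIFACT_PATTERNS = {
    "console log": re.compile(r"console\.log\("),
    "debugger statement": re.compile(r"\bdebugger\b"),
    "TODO": re.compile(r"\bTODO\b"),
}


def find_artifacts(diff_text: str) -> list[tuple[int, str]]:
    """Return (diff_line_number, label) for added lines that match a pattern."""
    hits = []
    for number, line in enumerate(diff_text.splitlines(), start=1):
        # Only inspect added lines, and skip the "+++ b/file" header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for label, pattern in ARTIFACT_PATTERNS.items():
            if pattern.search(line):
                hits.append((number, label))
    return hits
```

Line numbers refer to positions in the diff text, not in the source file, which is enough to point an author at the offending hunk.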

3. Write PR Descriptions That Answer Reviewer Questions Upfront

A well-written PR description can save hours of review time.

Strong PR descriptions include:

What: A clear summary of what changed

Why: The reason, including links to tickets or decisions

How to test: Reviewer steps to evaluate the change

Risks: Things to pay attention to

PRs with good descriptions get approved faster—because reviewers don’t have to ask.
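The four-part structure above can be checked mechanically before a PR is assigned. This is a minimal sketch that assumes each section appears as a `Name:` line in the description; the section names mirror the list above, and the function name is hypothetical.

```python
REQUIRED_SECTIONS = ("What", "Why", "How to test", "Risks")


def missing_sections(description: str) -> list[str]:
    """Return required section names that have no 'Name:' line in the description."""
    present = {
        line.split(":", 1)[0].strip().lower()
        for line in description.splitlines()
        if ":" in line
    }
    return [name for name in REQUIRED_SECTIONS if name.lower() not in present]
```

A bot or CI step could post the returned list as a comment so the author fills the gaps before a reviewer has to ask.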

4. Automate Linting and Static Analysis Before Human Review

Humans should not be correcting style issues or catching syntax errors.

Automate linting and static analysis so reviewers focus on logic, design, and correctness. CodeAnt AI also runs automated SAST security checks, quality scoring, and risk detection before reviewers engage.

Automation removes mechanical workload, enabling reviewers to think deeper.

5. Build a Review Rotation to Distribute Reviewer Load

A fair review rotation eliminates reviewer overload. Round-robin, CODEOWNERS-based routing, or load-balanced assignment ensures no engineer becomes the bottleneck.

Balanced review distribution improves review throughput for the entire team.
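As a rough illustration of load-balanced assignment, the sketch below picks the reviewer with the fewest open reviews and never assigns authors to their own PR. The input mapping of reviewer names to open-review counts is an assumed shape; a real setup would pull it from your Git host and might also filter by code ownership.

```python
def pick_reviewer(open_reviews: dict[str, int], author: str) -> str:
    """Pick the eligible reviewer with the fewest open reviews.

    Ties are broken alphabetically so the result is deterministic.
    """
    candidates = {name: count for name, count in open_reviews.items() if name != author}
    if not candidates:
        raise ValueError("no eligible reviewer")
    return min(candidates, key=lambda name: (candidates[name], name))
```

For example, with `{"ana": 3, "ben": 1, "cy": 1}` and `ben` as the author, the function returns `"cy"`.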

Async Code Review Workflows for Distributed Teams

Remote teams must optimize for asynchronous speed.

Timezone-Friendly Review Handoffs

Structure work so PRs are ready at the end of one timezone’s day, allowing the next timezone to handle review. This “follow-the-sun” pattern reduces idle time and accelerates merge cycles.

Standardized Comment Templates for Faster Feedback

Comment classification improves clarity and reduces friction:

Blocking — must change

Suggestion — optional improvement

Nit — minor preference

Question — clarification

Clear labels shorten feedback cycles because authors know immediately which comments must be addressed before merge.
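Labels like these are easy to process automatically. The sketch below assumes reviewers write comments as `label: text` and treats any comment labeled blocking as a merge stopper; tracking whether a blocking comment has been resolved is left out for brevity. All names are illustrative.

```python
from enum import Enum
from typing import Optional


class CommentKind(Enum):
    BLOCKING = "blocking"
    SUGGESTION = "suggestion"
    NIT = "nit"
    QUESTION = "question"


def classify(comment: str) -> Optional[CommentKind]:
    """Return the CommentKind named by a 'label: text' prefix, or None if unlabeled."""
    prefix, separator, _ = comment.partition(":")
    if not separator:
        return None
    try:
        return CommentKind(prefix.strip().lower())
    except ValueError:
        return None


def is_mergeable(comments: list[str]) -> bool:
    """In this sketch, a PR is mergeable when no comment is labeled blocking."""
    return all(classify(c) is not CommentKind.BLOCKING for c in comments)
```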

Escalation Protocols for Blocking Issues

Teams need defined rules for:

when to ping reviewers

when to pull in a second reviewer

when to escalate to a tech lead

Clarity prevents PRs from stagnating.

How to Handle Reviewer Disagreements Constructively

Disagreements are inevitable—but they don’t need to derail reviews.

Preference Versus Performance Disagreements

Most disagreements stem from subjective preferences (styling, naming). These should defer to documented standards.

Objective disagreements—like performance regressions or correctness issues—deserve deeper discussion.

Knowing which category you're in prevents unnecessary conflicts.

Decision Frameworks for Resolving Conflicts

A simple framework works:

If the debate is about preference → follow the documented standard; where none applies, the author decides

If the debate affects correctness or performance → reviewer concerns take priority

If disagreement persists → involve a neutral lead

This prevents endless back-and-forth.

When to Escalate and When to Compromise

Escalate only when the cost of continued delay outweighs the cost of pulling in someone else to decide. Many disagreements can be resolved by choosing the simpler, safer approach.

Tools and Automation That Accelerate Code Reviews

AI-Powered Code Review Assistants

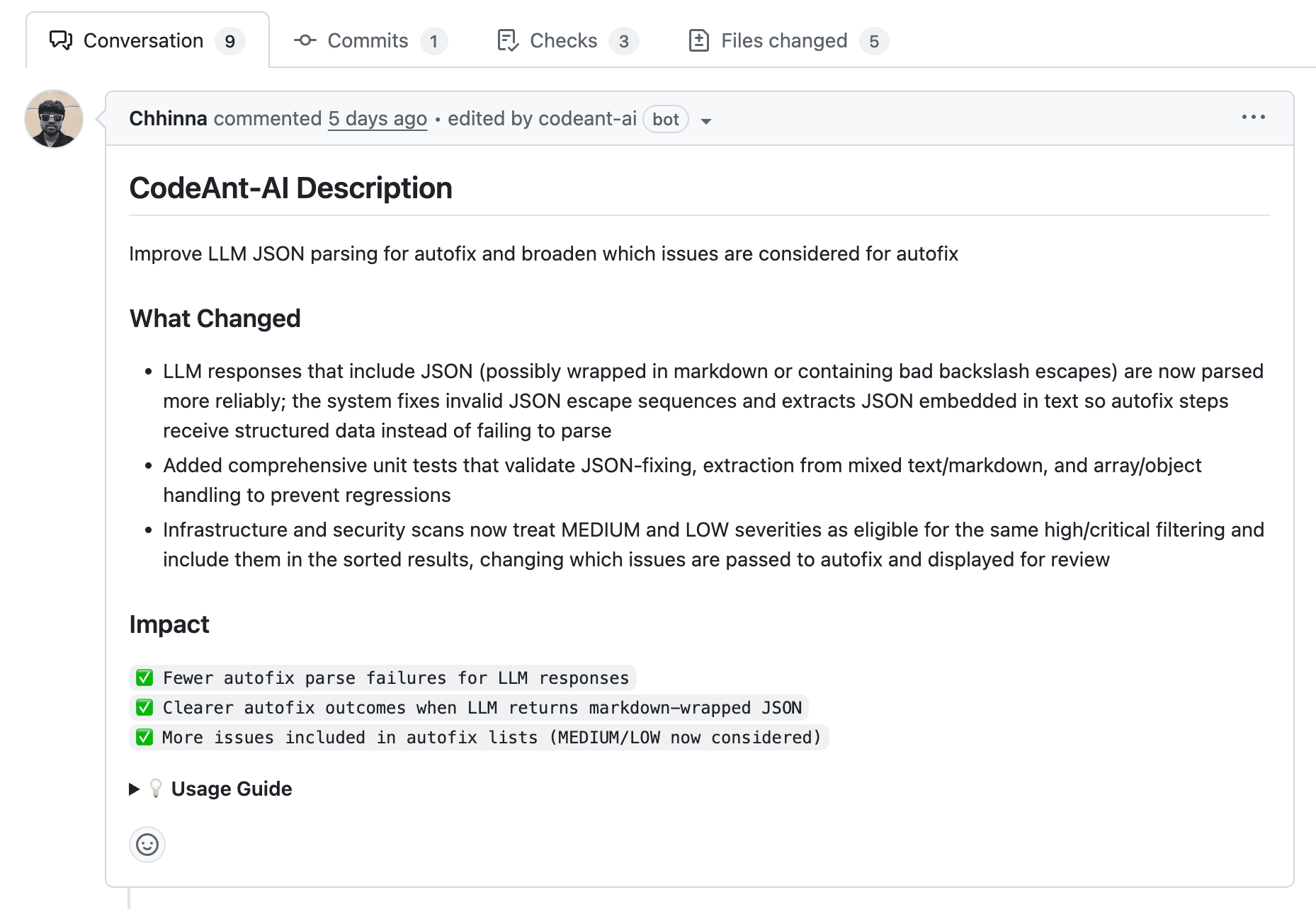

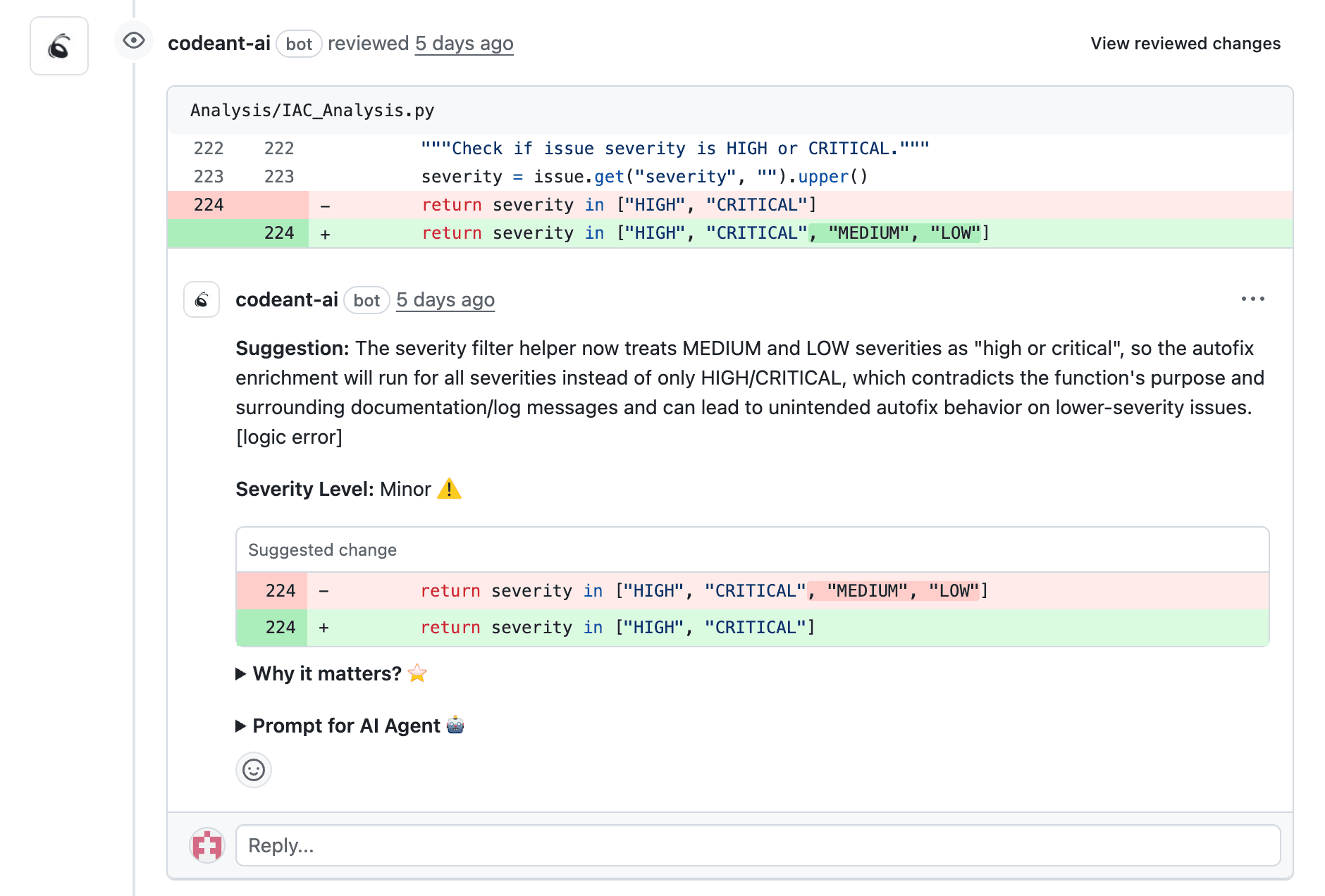

AI tools like CodeAnt AI accelerate PR feedback by:

reviewing PRs instantly

summarizing changes

generating suggestions

detecting bugs and risky patterns

identifying potential conflicts

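An automated first pass like this can reduce review latency substantially, because authors receive feedback before a human reviewer is available.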

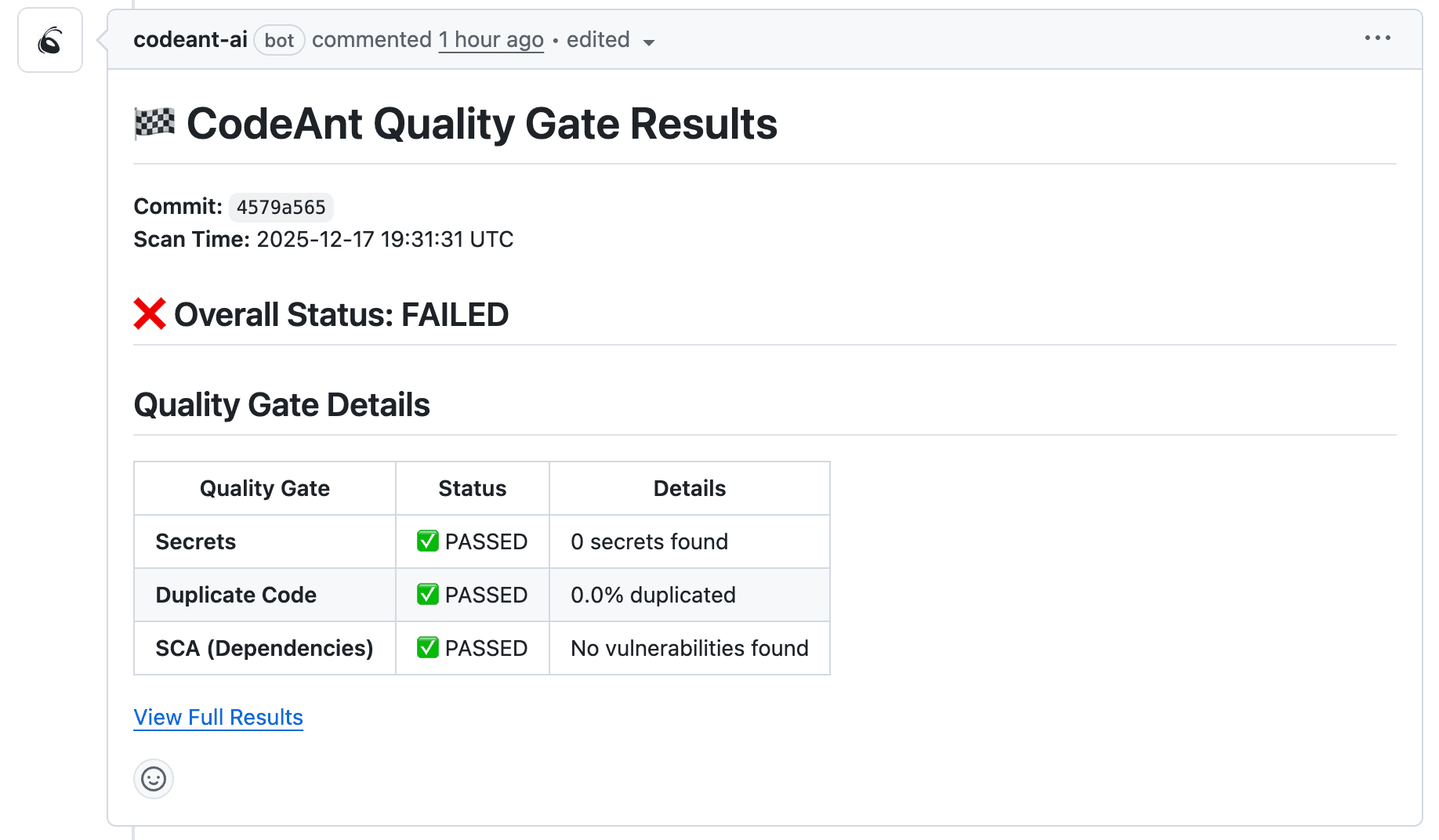

Automated Quality Gates With Static Analysis and Security Scanning

Quality gates enforce baseline standards. Static analysis combined with SAST covers:

complexity

duplication

vulnerabilities

secrets

CodeAnt AI bundles these checks into a single PR workflow.

CI/CD Integration for Pre-Review Validation

CI ensures reviewers only see code that has passed basic checks. Tests, linting, formatting, type checks, and SAST should run automatically before review.

Here's a simple summary table:

Code Review Metrics to Track for Faster Cycles

Metrics make improvement measurable.

Time-Based Metrics for Review Speed

The core speed metrics are:

Time-to-first-review → how long it takes for anyone to respond

Review cycle time → total time from PR open to approval

Time-to-merge → the complete duration from PR creation to merge

These show where delays originate.
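All three metrics are differences between timestamps that most Git hosts already record. The sketch below computes them for one PR and takes a median across many. The `PullRequestTimeline` fields are assumed names, not a specific platform's API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class PullRequestTimeline:
    opened_at: datetime
    first_review_at: datetime
    approved_at: datetime
    merged_at: datetime


def review_metrics(pr: PullRequestTimeline) -> dict[str, timedelta]:
    """Compute the three speed metrics for a single merged PR."""
    return {
        "time_to_first_review": pr.first_review_at - pr.opened_at,
        "review_cycle_time": pr.approved_at - pr.opened_at,
        "time_to_merge": pr.merged_at - pr.opened_at,
    }


def median_metric(prs: list[PullRequestTimeline], name: str) -> timedelta:
    """Median of one metric across PRs; a median resists skew from a few slow PRs."""
    seconds = median(review_metrics(pr)[name].total_seconds() for pr in prs)
    return timedelta(seconds=seconds)
```

Comparing the medians shows where time goes: a large gap between time-to-first-review and review cycle time points at back-and-forth, while a large gap between cycle time and time-to-merge points at post-approval delays.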

Quality Metrics That Balance Speed With Thoroughness

Quality signals include:

defect escape rate

review coverage

PR re-open rate

incidents caused by merged changes

A healthy process balances speed and correctness.

Using Metrics to Identify and Fix Bottlenecks

Dashboards reveal patterns:

overloaded reviewers

repos with slow PR cycles

days or weeks with consistently slow reviews

high-risk areas with frequent issues

CodeAnt AI centralizes code health and PR analytics so teams get visibility across all repos.

Ship Faster With a Healthier Code Review Process

Merge conflicts and slow reviews are not inevitable technical issues—they’re workflow problems that can be eliminated with the right practices and tooling.

Shorter-lived branches, smaller PRs, clear review expectations, async-friendly workflows, and AI-assisted quality gates dramatically reduce friction and improve velocity.

Modern teams use platforms like CodeAnt AI to automate objective checks, standardize review quality, reduce conflict windows, and give reviewers time to focus on what actually matters.
