
Dec 22, 2025

How to Scale Code Reviews Without Slowing Down Delivery

Sonali Sood

Founding GTM, CodeAnt AI

As engineering teams scale, pull request (PR) volume rises faster than reviewer capacity. What used to be a simple two-person review process suddenly becomes a bottleneck that slows the entire delivery pipeline. PR queues grow, merge conflicts multiply, and developers get stuck waiting for approvals instead of shipping code.

The challenge is simple to describe but harder to solve: How do you maintain code quality while moving at the speed your product demands?

This guide explains why review bottlenecks form and how modern teams use clear standards, lean review practices, automation, and AI to scale code reviews without sacrificing quality.

Why Code Reviews Become Bottlenecks in Fast-Moving Teams

Fast-moving engineering teams generate continuous PR activity. Without intentional review workflows, volume quickly exceeds reviewer bandwidth. Before we explore solutions, it’s important to understand why bottlenecks form.

Reviewer fatigue

Senior engineers usually shoulder the bulk of review workload. As PR queues grow, they’re forced to split attention between deep work and constant reviews, creating burnout and slower approvals.

Context switching

Developers need uninterrupted focus for design-heavy or logic-heavy work. When reviews interrupt their day unpredictably, they lose flow state. Each interruption adds invisible time cost.

Unclear ownership

If PRs are not routed to the right reviewers automatically—or if ownership boundaries are vague—PRs sit idle. The “someone will pick this up” assumption often leads to delays.

Growing queues

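As teams add more developers, PR volume grows roughly in step with headcount. The pool of engineers who know each part of the codebase well enough to review it grows more slowly. Without load balancing and automation, review capacity falls behind, and each stalled PR delays the work that depends on it.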

These constraints affect teams of every size. But the fastest-growing teams are hit hardest.

Principles for Scaling Code Reviews Without Sacrificing Quality

Before diving into tactics, teams need the right foundational mindset. These principles determine whether your review process scales or collapses under growth.

Focus on Improving the Codebase First

The real purpose of code review is to make the codebase healthier, safer, and easier to maintain—not to gatekeep contributions. A review should answer:

Does this change improve the system?

Does it reduce risk?

Is it consistent with long-term architecture?

Reviews that focus on code quality (instead of nitpicking or enforcing personal preference) create a culture where engineers value review time instead of dreading it.

Prioritize Progress Over Perfection

A perfect PR is not the goal. A good, safe, and well-tested PR is. Excessive nitpicking destroys momentum without delivering meaningful quality gains. Teams need a clear line between:

must-fix issues (correctness, safety, test coverage)

optional suggestions (style, alternatives, patterns)

Fast teams approve PRs that are safe and correct—even if they’re not “perfect”—and follow up later when needed.

Treat Reviews as Knowledge-Sharing Opportunities

Strong teams treat reviews as collaborative learning moments:

junior developers gain architecture and domain knowledge

senior engineers explain decisions and patterns

reviewers spread best practices organically

This reduces the bus factor, strengthens shared ownership, and spreads review load more evenly as more developers become competent in more parts of the system.

How to Set Clear Code Review Guidelines and Expectations

Once your principles are in place, you need a documented process. Clear expectations eliminate confusion, reduce back-and-forth, and help reviewers and authors move faster.

Define What Reviewers Should Evaluate

Reviewers should have a consistent, predictable scope. A clear checklist reduces cognitive load and prevents “I’m not sure what to look for” hesitation.

Reviewers should evaluate:

Functionality: Does the code behave as intended? Does it introduce regressions?

Readability: Can another engineer understand this logic easily?

Security: Are there vulnerabilities, exposed secrets, or dangerous patterns?

Performance: Could this change degrade performance or increase resource usage?

Test Coverage: Are new scenarios covered? Does the change break tests?

Everything outside this scope—formatting, naming preferences, personal style—belongs to automated checks.

Create and Enforce a Style Guide

Style debates waste time and sap reviewer energy. A style guide ensures:

consistent naming conventions

consistent structure

consistent patterns across the codebase

Automate style enforcement with formatters and linters like:

Prettier

ESLint

Black

gofmt

These tools come up again in the automation section later in this article.

Establish Response Time Expectations

Teams move faster when expectations are clear. Define:

Initial response time: e.g., 4–6 business hours

Review completion time: e.g., same day for standard PRs

Escalation rules: e.g., ping after 24 hours

Async rules: e.g., reviewers leave clear questions and avoid vague comments

Time-boxed expectations prevent PRs from aging in the queue.
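Here is a minimal sketch of how these time boxes could be checked automatically. It assumes you can export each PR's open time and first-response time from your Git host. The 6-hour and 24-hour values mirror the examples above. `PullRequest` and `review_status` are hypothetical names, and the sketch counts wall-clock hours rather than business hours.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Example thresholds from the guidelines above. This sketch measures wall-clock
# hours; counting business hours would need a working-calendar helper.
FIRST_RESPONSE_TARGET = timedelta(hours=6)
ESCALATE_AFTER = timedelta(hours=24)


@dataclass
class PullRequest:  # hypothetical shape of a PR record exported from your Git host
    number: int
    opened_at: datetime
    first_response_at: Optional[datetime] = None  # None until a reviewer responds


def review_status(pr: PullRequest, now: datetime) -> str:
    """Classify a PR as 'ok', 'late', or 'escalate' by time to first response."""
    if pr.first_response_at is not None:
        waited = pr.first_response_at - pr.opened_at
        return "ok" if waited <= FIRST_RESPONSE_TARGET else "late"
    waiting = now - pr.opened_at
    if waiting > ESCALATE_AFTER:
        return "escalate"  # e.g. ping the team channel or a backup reviewer
    if waiting > FIRST_RESPONSE_TARGET:
        return "late"
    return "ok"


now = datetime(2025, 1, 10, 12, 0, tzinfo=timezone.utc)
queue = [
    PullRequest(101, now - timedelta(hours=2)),
    PullRequest(102, now - timedelta(hours=8)),
    PullRequest(103, now - timedelta(hours=30)),
]
for pr in queue:
    print(pr.number, review_status(pr, now))  # 101 ok, 102 late, 103 escalate
```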

Best Practices for High-Volume Code Reviews

This is the tactical heart of the article. Implement these and you’ll feel immediate improvements.

1. Keep Pull Requests Small and Focused

Small PRs move dramatically faster. They’re easier to understand, easier to test, and easier to approve.

A “small PR” usually aligns with:

a single feature

a single refactor

a single bug fix

For large changes, use stacked PRs: sequence multiple small PRs instead of opening one giant one.
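One way to keep PRs small is a CI check on diff size. This sketch parses the output of `git diff --numstat`. The 400-line limit, the `origin/main` base branch, and the function names are assumptions for illustration, not a recommended standard.

```python
import subprocess

# Hypothetical threshold; tune it to your team's own idea of a "small" PR.
MAX_CHANGED_LINES = 400


def changed_lines(numstat: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output.

    Each line looks like "added<TAB>deleted<TAB>path"; binary files
    report "-" instead of counts and are skipped.
    """
    total = 0
    for line in numstat.splitlines():
        parts = line.split("\t")
        if len(parts) < 3 or parts[0] == "-" or parts[1] == "-":
            continue
        total += int(parts[0]) + int(parts[1])
    return total


def check_pr_size(base: str = "origin/main") -> int:
    """Return 1 (fail) if the branch changes more lines than the limit, else 0."""
    # "base...HEAD" diffs the branch against its merge base with `base`.
    out = subprocess.run(
        ["git", "diff", "--numstat", f"{base}...HEAD"],
        capture_output=True, text=True, check=True,
    ).stdout
    size = changed_lines(out)
    if size > MAX_CHANGED_LINES:
        print(f"PR changes {size} lines (limit {MAX_CHANGED_LINES}); consider splitting it.")
        return 1
    print(f"PR changes {size} lines.")
    return 0


# Offline example using sample numstat output:
sample = "10\t5\tsrc/app.py\n-\t-\tassets/logo.png\n300\t120\tsrc/big.py\n"
print(changed_lines(sample))  # 435
```

In a CI job, `sys.exit(check_pr_size())` would turn the result into the job's exit status. Line count is only a proxy. A 50-line change to a core module can deserve more scrutiny than a 500-line generated file.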

2. Distribute Reviewer Load Across the Team

Avoid the trap where senior developers handle every review. Distribute load using:

CODEOWNERS for domain-based routing

round-robin assignment for fairness

load-balancing tools to avoid reviewer overload

Shared ownership prevents bottlenecks and burnout.
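This sketch shows how CODEOWNERS-style routing and load balancing can combine. Domain rules narrow the candidate reviewers, and then the least-loaded candidate is picked. Ties go to whoever was assigned least recently, which acts as a round-robin tie-break. The ownership rules and names are hypothetical, and `fnmatch` only approximates the pattern syntax of a real CODEOWNERS file.

```python
from fnmatch import fnmatch
from itertools import count

# Hypothetical ownership rules in the spirit of a CODEOWNERS file. As in
# CODEOWNERS, a later matching rule wins; fnmatch is only an approximation
# of the real pattern syntax (for example, "*" here also matches "/").
OWNERSHIP_RULES = [
    ("*", ["alice", "bob", "carol", "dave"]),
    ("payments/*", ["bob", "carol"]),
    ("infra/*", ["dave", "erin"]),
]


def owners_for(path: str) -> list[str]:
    """Owners from the last rule whose pattern matches `path`."""
    owners: list[str] = []
    for pattern, rule_owners in OWNERSHIP_RULES:
        if fnmatch(path, pattern):
            owners = rule_owners
    return owners


class ReviewerPicker:
    """Least-loaded eligible reviewer, ties broken round-robin."""

    def __init__(self) -> None:
        self.open_reviews: dict[str, int] = {}
        self.last_assigned: dict[str, int] = {}
        self._clock = count()  # increasing counter used to order assignments

    def pick(self, changed_files: list[str], author: str) -> str:
        candidates = {o for f in changed_files for o in owners_for(f)} - {author}
        if not candidates:
            raise ValueError("no eligible reviewer for these files")
        # Fewest open reviews first; among equals, least recently assigned.
        choice = min(
            sorted(candidates),
            key=lambda r: (self.open_reviews.get(r, 0), self.last_assigned.get(r, -1)),
        )
        self.open_reviews[choice] = self.open_reviews.get(choice, 0) + 1
        self.last_assigned[choice] = next(self._clock)
        return choice

    def complete(self, reviewer: str) -> None:
        """Call when a review finishes so the reviewer's load drops again."""
        self.open_reviews[reviewer] = max(0, self.open_reviews.get(reviewer, 0) - 1)


picker = ReviewerPicker()
for _ in range(3):
    print(picker.pick(["payments/api.py"], author="alice"))  # bob, carol, bob
```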

3. Use Checklists to Standardize Reviews

A checklist ensures reviewers evaluate consistently, not based on memory or preference.

Sample Review Checklist:

Code follows style guidelines

No hardcoded secrets or credentials

Error handling and edge cases covered

New code includes appropriate tests

Documentation updated if needed

Checklists reduce cognitive overhead and improve review quality.
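If the checklist lives in a PR template as a GitHub-style task list, a small script can report which items the author left unticked. This is a sketch. `unchecked_items` is a hypothetical helper, and the sample body reuses the checklist above.

```python
import re

# Matches task-list items such as "- [ ] Tests added" or "* [x] Docs updated".
TASK_RE = re.compile(r"^\s*[-*]\s+\[( |x|X)\]\s+(.*\S)\s*$")


def unchecked_items(pr_body: str) -> list[str]:
    """Return checklist items in a PR description that are not ticked."""
    missing = []
    for line in pr_body.splitlines():
        m = TASK_RE.match(line)
        if m and m.group(1) == " ":
            missing.append(m.group(2))
    return missing


body = """## Review checklist
- [x] Code follows style guidelines
- [x] No hardcoded secrets or credentials
- [ ] Error handling and edge cases covered
- [x] New code includes appropriate tests
- [ ] Documentation updated if needed
"""

print(unchecked_items(body))
# ['Error handling and edge cases covered', 'Documentation updated if needed']
```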

4. Give Clear and Constructive Feedback

Vague comments create delays. Strong review feedback:

distinguishes blocking issues from suggestions

provides context and reasoning

offers alternatives when appropriate

avoids emotional or ambiguous phrasing

Instead of “This isn’t good,” prefer:

“I think this could break when X happens. Can we handle that case with Y?”

Clear feedback shortens the cycle.
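It is easier to tell blocking issues from suggestions when comments carry an explicit label. The sketch below assumes a hypothetical team convention in which each comment starts with a label such as `blocking:` or `nit:`. The label sets and function names are illustrative.

```python
# Hypothetical team convention: each review comment starts with a label and a colon.
BLOCKING = {"blocking", "issue"}
NON_BLOCKING = {"suggestion", "nit", "question", "praise"}


def classify(comment: str) -> str:
    """Return 'blocking', 'non-blocking', or 'unlabeled' for one review comment."""
    label, sep, _ = comment.partition(":")
    label = label.strip().lower()
    if sep and label in BLOCKING:
        return "blocking"
    if sep and label in NON_BLOCKING:
        return "non-blocking"
    return "unlabeled"


def ready_to_merge(open_comments: list[str]) -> bool:
    """True when none of the still-open comments is blocking."""
    return all(classify(c) != "blocking" for c in open_comments)


print(classify("blocking: I think this could break when X happens. Can we handle that case with Y?"))
print(ready_to_merge(["nit: rename tmp to retry_count", "question: is this cached?"]))
```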

5. Batch Reviews to Reduce Context Switching

Reviewing every time a notification appears destroys deep work. Instead:

schedule daily or twice-daily review blocks

batch reviews together

turn off PR notifications outside review windows

Batching reduces mental fragmentation and increases both review speed and code quality.
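Tooling can support batching by holding PR notifications until the next review block. This sketch assumes two hypothetical 45-minute blocks per day in local time, listed in order. It ignores weekends and holidays, and `delivery_time` is an illustrative name, not part of any notification API.

```python
from datetime import datetime, time, timedelta

# Hypothetical review blocks (local time, in ascending order) and their length.
REVIEW_BLOCKS = [time(10, 30), time(15, 0)]
BLOCK_LENGTH = timedelta(minutes=45)


def delivery_time(now: datetime) -> datetime:
    """When a PR notification arriving at `now` should be shown.

    Inside a review block it is delivered immediately; otherwise it is held
    until the next block starts. Weekends and holidays are ignored here.
    """
    for day_offset in (0, 1):
        day = now.date() + timedelta(days=day_offset)
        for start in REVIEW_BLOCKS:
            block_start = datetime.combine(day, start, tzinfo=now.tzinfo)
            if block_start <= now < block_start + BLOCK_LENGTH:
                return now
            if block_start > now:
                return block_start
    raise ValueError("REVIEW_BLOCKS must not be empty")


print(delivery_time(datetime(2025, 3, 4, 12, 0)))  # held until the 15:00 block
```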

How Automation and AI Reduce Code Review Time

Modern teams don’t scale review by adding more reviewers—they scale by reducing the number of things humans must do manually. Automation handles repetitive checks; humans focus on logic and design.

Automate Style and Formatting Checks

Linters and formatters catch:

styling issues

indentation errors

inconsistent naming

accidental whitespace changes

CI can block PRs that fail style checks, so reviewers don't spend energy on trivial formatting issues.
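Here is a minimal sketch of a CI style gate that runs the tools named earlier and fails if any of them reports a problem. It assumes the tools are installed on the CI runner. A real repository would keep only the checks for its own languages, and `run_style_checks` is a hypothetical name.

```python
import shutil
import subprocess

# (name, command, how the tool reports problems)
# black --check, prettier --check and eslint exit non-zero when they find problems;
# gofmt -l exits 0 and instead prints the files that need formatting.
CHECKS = [
    ("black", ["black", "--check", "."], "exit_code"),
    ("prettier", ["npx", "prettier", "--check", "."], "exit_code"),
    ("eslint", ["npx", "eslint", "."], "exit_code"),
    ("gofmt", ["gofmt", "-l", "."], "stdout"),
]


def failed(mode: str, returncode: int, stdout: str) -> bool:
    """Decide whether a check failed, given how that tool reports problems."""
    if mode == "stdout":
        return returncode != 0 or bool(stdout.strip())
    return returncode != 0


def run_style_checks() -> int:
    """Run every available check; return 1 if any failed, else 0."""
    failures = []
    for name, cmd, mode in CHECKS:
        if shutil.which(cmd[0]) is None:
            # A real CI job would install its tools up front instead of skipping.
            print(f"skip {name}: {cmd[0]} not found")
            continue
        result = subprocess.run(cmd, capture_output=True, text=True)
        if failed(mode, result.returncode, result.stdout):
            failures.append(name)
            print(f"FAIL {name}\n{result.stdout}{result.stderr}")
    return 1 if failures else 0
```

Calling `sys.exit(run_style_checks())` from the CI job makes a failing check fail the job. Marking that job as a required status check is what actually blocks the merge.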

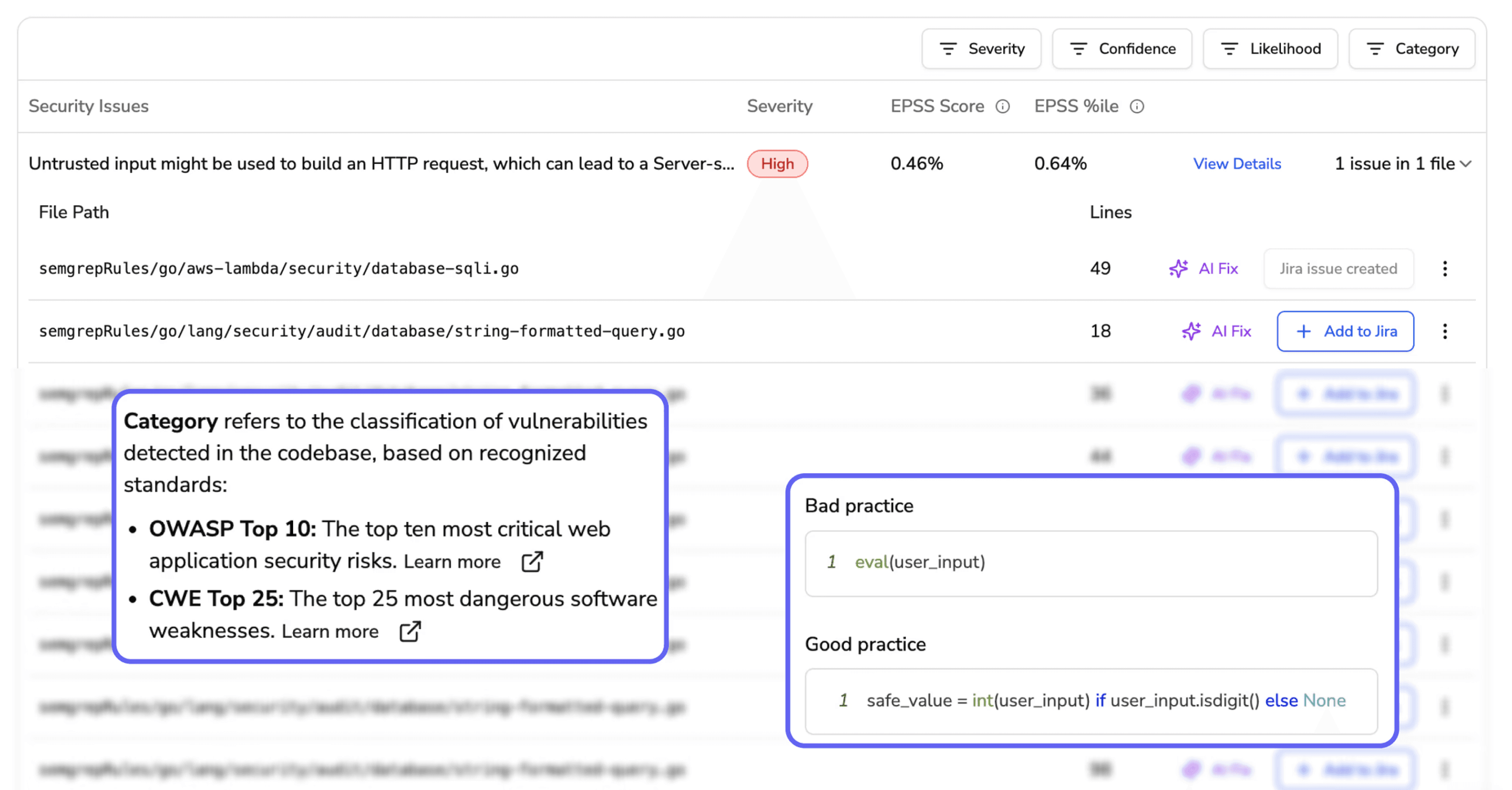

Use Static Analysis to Catch Bugs Before Review

Static analysis identifies deeper issues:

unreachable code

unused variables

complexity hotspots

dangerous code patterns

SAST (static application security testing) tools and dependency scanners add security checks on top of this, catching:

injection vulnerabilities

unsafe API calls

hardcoded secrets

dependency CVEs

CodeAnt AI provides static analysis + SAST directly inside the PR.
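To make static analysis concrete, here is a toy checker built on Python's standard `ast` module. It covers two items from the lists above, unreachable code and hardcoded secrets, using simple heuristics. It is an illustration only and says nothing about how CodeAnt AI or any SAST product works internally. Production tools add data-flow analysis, many more rules, and tuning to keep false positives down.

```python
import ast

# Heuristic: variable names that suggest a credential.
SECRET_HINTS = ("password", "secret", "api_key", "token")
# Statements after one of these, in the same block, can never run.
TERMINATORS = (ast.Return, ast.Raise, ast.Continue, ast.Break)


def find_issues(source: str) -> list[tuple[int, str]]:
    """Return sorted (line, message) pairs from two toy checks."""
    issues = []
    for node in ast.walk(ast.parse(source)):
        # Check 1: unreachable statements following a terminator.
        for field in ("body", "orelse", "finalbody"):
            block = getattr(node, field, None)
            if not isinstance(block, list):
                continue
            for stmt, following in zip(block, block[1:]):
                if isinstance(stmt, TERMINATORS):
                    issues.append((following.lineno, "unreachable code"))
                    break
        # Check 2: NAME = "string literal" where NAME looks like a secret.
        if isinstance(node, ast.Assign) and isinstance(node.value, ast.Constant):
            if isinstance(node.value.value, str):
                for target in node.targets:
                    name = getattr(target, "id", "")
                    if any(hint in name.lower() for hint in SECRET_HINTS):
                        issues.append((node.lineno, f"possible hardcoded secret in '{name}'"))
    return sorted(issues)


sample = '''
API_KEY = "sk-live-123"

def charge(amount):
    if amount <= 0:
        return None
        print("never runs")
    return amount
'''

for line, message in find_issues(sample):
    print(line, message)
```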

Use AI for PR Summarization and Fix Suggestions

AI reduces reviewer workload by:

summarizing PR changes

explaining risky sections

identifying logic flaws

generating fix suggestions

reviewing as soon as a PR is opened

This is especially valuable for large diffs, multi-service changes, or legacy code review.

Comparison Table:

| Review Task | Manual Approach | Automated Approach |
|---|---|---|
| Style enforcement | Reviewer leaves formatting notes | Linters automatically fix or block the PR |
| Security scanning | Reviewer manually identifies vulnerabilities | SAST tools automatically flag security risks |
| PR summarization | Reviewer reads the entire diff | AI generates a concise, context-rich summary |
| Bug detection | Reviewer catches logic errors during review | Static analysis tools automatically identify issues |

Key Metrics to Track Code Review Efficiency

Teams that measure code reviews improve them. Teams that don’t measure drift into chaos.

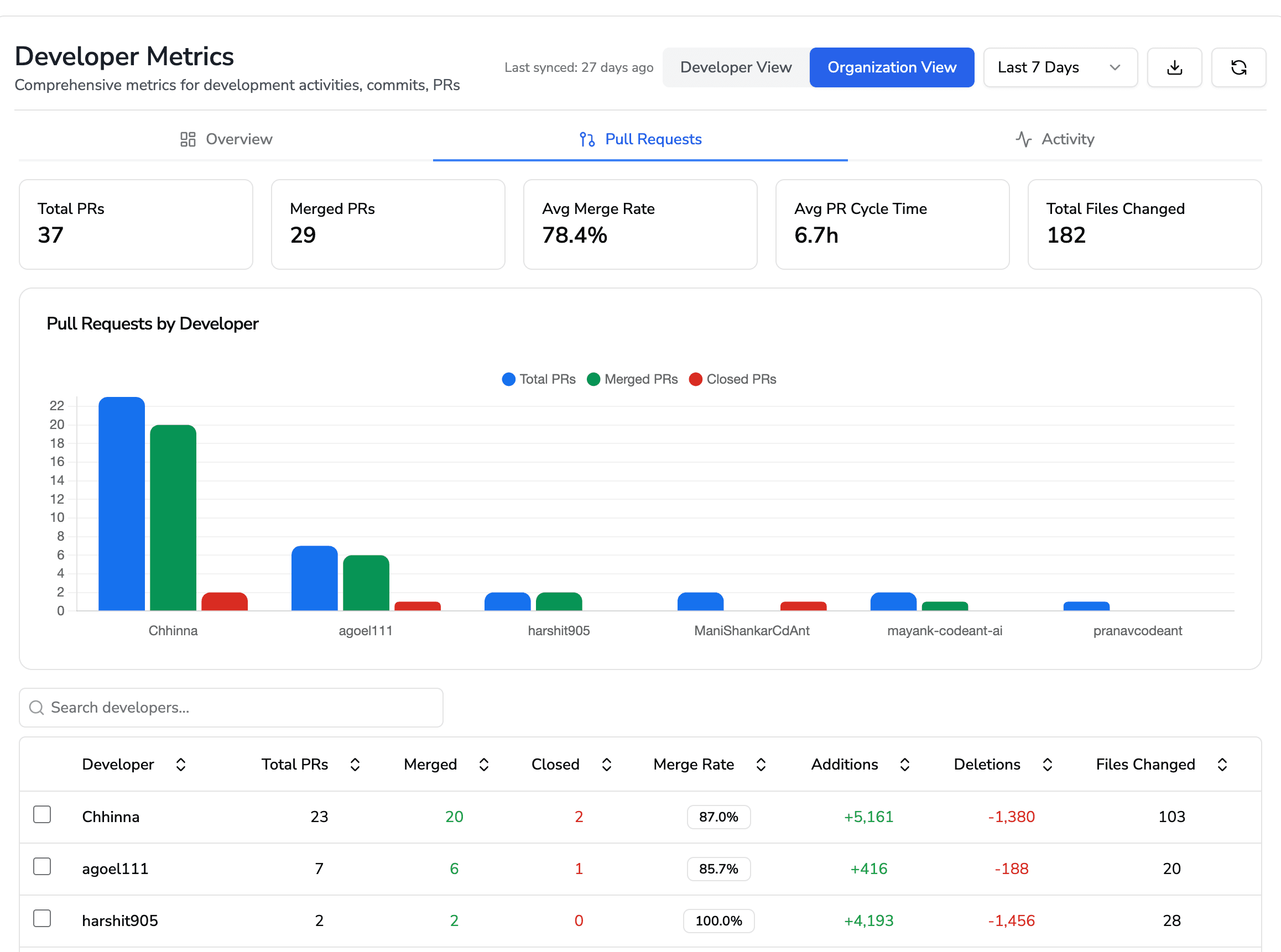

Review Cycle Time

The time from when a PR is opened to when it is merged. Many teams aim to keep most PRs under 24 hours.

Long cycle time signals:

overloaded reviewers

unclear ownership

oversized PRs
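This sketch computes cycle time from exported PR data. The PR records are hypothetical. It uses the median because a few long-lived PRs can skew a mean, and the 24-hour cutoff matches the target above.

```python
from datetime import datetime, timedelta
from statistics import median

# Hypothetical export: (PR number, opened_at, merged_at) for merged PRs.
merged_prs = [
    (201, datetime(2025, 5, 5, 9, 0), datetime(2025, 5, 5, 15, 0)),
    (202, datetime(2025, 5, 5, 11, 0), datetime(2025, 5, 6, 10, 0)),
    (203, datetime(2025, 5, 6, 8, 0), datetime(2025, 5, 8, 8, 0)),
    (204, datetime(2025, 5, 7, 13, 0), datetime(2025, 5, 7, 17, 0)),
]


def cycle_time_hours(opened: datetime, merged: datetime) -> float:
    """Hours from PR opened to PR merged."""
    return (merged - opened) / timedelta(hours=1)


hours = [cycle_time_hours(o, m) for _, o, m in merged_prs]
median_hours = median(hours)
share_under_24h = sum(h < 24 for h in hours) / len(hours)
slow = [n for n, o, m in merged_prs if cycle_time_hours(o, m) >= 24]

print(f"median {median_hours:.1f} h, {share_under_24h:.0%} under 24 h, slow: {slow}")
# median 14.5 h, 75% under 24 h, slow: [203]
```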

PR Throughput

The number of PRs merged per week or sprint.

Higher throughput generally means healthier velocity, provided PR size and quality hold steady.

Declining throughput often indicates bottlenecks in review workflow.
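This sketch derives weekly throughput from merge dates. It keys each count by the Monday of its week so that weeks with no merges show up as zero. The dates are hypothetical, and the "declining" check is a crude signal. A real dashboard would look at trends over more weeks.

```python
from collections import Counter
from datetime import date, timedelta

# Hypothetical merge dates exported from your Git host.
merge_dates = [
    date(2025, 5, 5), date(2025, 5, 6), date(2025, 5, 9),
    date(2025, 5, 12), date(2025, 5, 14),
    date(2025, 5, 19),
]


def weekly_throughput(dates: list[date]) -> dict[date, int]:
    """Merged PRs per week, keyed by that week's Monday; empty weeks count as 0."""
    if not dates:
        return {}
    counts = Counter(d - timedelta(days=d.weekday()) for d in dates)
    weeks = {}
    monday = min(counts)
    while monday <= max(counts):
        weeks[monday] = counts.get(monday, 0)
        monday += timedelta(days=7)
    return weeks


weekly = weekly_throughput(merge_dates)
values = list(weekly.values())
# Crude warning sign: every week merged fewer PRs than the week before.
declining = all(a > b for a, b in zip(values, values[1:]))
print(values, "declining" if declining else "stable or rising")
```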

Review Depth and Coverage

Depth answers the question: Are reviewers actually catching issues? Coverage ensures every PR receives meaningful attention.

Low depth = rubber-stamping

Low coverage = risky merges

Automation + AI can increase both without slowing teams down.
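Depth and coverage have no single standard definition. This sketch uses two simple proxies, which are assumptions rather than established metrics. Coverage is the share of merged PRs reviewed by someone other than the author. The rubber-stamp rate is the share of reviewed PRs where no reviewer left any comment. A bare approval on a one-line fix is fine, so treat a high rate as a prompt to look closer, not a verdict.

```python
from dataclasses import dataclass, field


@dataclass
class MergedPR:  # hypothetical record; field names are illustrative
    number: int
    author: str
    # reviewer -> number of review comments they left (0 means a bare approval)
    reviews: dict[str, int] = field(default_factory=dict)


def _peer_reviews(pr: MergedPR) -> dict[str, int]:
    """Reviews from anyone other than the PR author."""
    return {r: n for r, n in pr.reviews.items() if r != pr.author}


def review_coverage(prs: list[MergedPR]) -> float:
    """Share of merged PRs that at least one peer reviewed."""
    if not prs:
        return 0.0
    return sum(1 for p in prs if _peer_reviews(p)) / len(prs)


def rubber_stamp_rate(prs: list[MergedPR]) -> float:
    """Among peer-reviewed PRs, share where no reviewer left a single comment."""
    reviewed = [p for p in prs if _peer_reviews(p)]
    if not reviewed:
        return 0.0
    stamped = [p for p in reviewed if sum(_peer_reviews(p).values()) == 0]
    return len(stamped) / len(reviewed)


prs = [
    MergedPR(301, "alice", {"bob": 3}),
    MergedPR(302, "bob", {"carol": 0}),
    MergedPR(303, "carol", {}),
    MergedPR(304, "dave", {"alice": 1, "bob": 0}),
]
print(review_coverage(prs), rubber_stamp_rate(prs))
```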

Reviewer Load Distribution

Uneven distribution creates hidden bottlenecks.

If a few developers carry most of the review load, throughput decreases and burnout rises.

Tools like CodeAnt AI show reviewer load across all repos so you can rebalance fairly.
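This sketch builds a load report, assuming you can list the reviewer of every completed review over some period. Team members with no reviews are included so that idle capacity is visible. The 1.5x overload cutoff and all names are hypothetical.

```python
from collections import Counter

# Hypothetical: the reviewer of each review completed in the last 30 days.
review_log = ["alice", "alice", "bob", "alice", "carol", "alice", "bob", "alice", "dave", "alice"]
team_members = ["alice", "bob", "carol", "dave", "erin"]


def load_report(reviews: list[str], team: list[str], overload_factor: float = 1.5):
    """Return each member's share of reviews, who is overloaded, and who did none.

    "Overloaded" here means more than `overload_factor` times an even split,
    an arbitrary cutoff chosen for illustration.
    """
    counts = Counter(reviews)
    total = len(reviews) or 1  # avoid dividing by zero on an empty log
    shares = {name: counts[name] / total for name in team}
    even_share = 1 / len(team)
    overloaded = [n for n in team if shares[n] > overload_factor * even_share]
    idle = [n for n in team if counts[n] == 0]
    return shares, overloaded, idle


shares, overloaded, idle = load_report(review_log, team_members)
print(overloaded, idle)  # ['alice'] ['erin']
```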

Building a Code Review Process That Scales With Your Team

Scaling reviews is not just a tooling problem; it’s also a cultural and process problem. High-performing teams share three traits:

clear review standards

small, frequent PRs

automation that handles repetitive work

CodeAnt AI unifies all of this by combining:

complexity + maintainability scoring

reviewer load analytics

review cycle metrics

cross-repo dashboards

If you want to scale code reviews without slowing delivery or burning out your senior engineers, this is the foundation. Want to know more? Try our 14-day free trial.