
Dec 18, 2025

Why Long Code Review Cycles Slow Teams and How AI Fixes It

Sonali Sood

Founding GTM, CodeAnt AI

Your team ships code faster than ever. But somehow, pull requests still sit in review queues for days, blocking releases, frustrating developers, and quietly accumulating technical debt while everyone waits for feedback.

AI code review tools change this dynamic by providing instant, automated feedback on every PR. This guide covers why long review cycles hurt engineering teams, how AI accelerates the process, and what to look for when choosing a tool that fits your workflow.

Why Long Code Review Cycles Hurt Engineering Teams

AI tools accelerate code reviews by providing immediate, automated feedback on every pull request. Instead of waiting hours or days for a human reviewer, developers get instant comments on bugs, style violations, and security issues. The result is faster delivery, higher code quality, and engineers who spend time on architecture and logic rather than formatting debates.

But why do review cycles drag on in the first place? Usually, it comes down to a few predictable bottlenecks that compound as teams grow.

Developers Lose Momentum to Context Switching

When a developer opens a pull request and waits two days for feedback, they don't sit idle. They pick up another task, dive into a different problem, and mentally shift gears. Then the review comments arrive, and suddenly they're forced to context-switch back to code they wrote earlier in the week.

Context switching kills productivity. Regaining focus after an interruption takes real time. Multiply that across every PR, every developer, and every week, and you're looking at significant lost hours.

Senior Engineers Become Review Bottlenecks

On most teams, a handful of experienced engineers handle the bulk of code reviews. They know the codebase, understand the architecture, and catch issues others miss. The problem? They're also the people you want writing code, mentoring juniors, and solving hard problems.

When senior engineers spend half their day reviewing PRs, two things happen: their own work slows down, and everyone else waits in a queue. The more your team grows, the worse this bottleneck becomes.

Technical Debt Accumulates With Rushed Reviews

Here's the irony: when review queues pile up, teams feel pressure to clear them quickly. Reviewers skim instead of scrutinize. They approve PRs with a quick "LGTM" just to keep things moving.

Technical debt—the accumulated cost of shortcuts and deferred fixes—grows quietly in the background. Bugs slip through. Security vulnerabilities hide in plain sight. Six months later, your team spends more time fixing problems than building features.

Release Velocity Suffers Across the Organization

Slow reviews don't just frustrate individual developers. They ripple outward. Features miss deadlines. Releases get delayed. Product managers start asking uncomfortable questions about why a "simple change" took two weeks to ship.

What Is AI Code Review

AI code review uses machine learning models to analyze code changes automatically. Instead of waiting for a human reviewer to spot a missing null check or a security vulnerability, AI tools scan every pull request the moment it's opened.

Here's what AI code review typically handles:

Automated analysis: Scans for bugs, vulnerabilities, and style inconsistencies

Instant feedback: Comments on PRs within minutes, not hours

Pattern recognition: Identifies common mistakes using patterns learned from large volumes of existing code

Context awareness: Learns your team's coding standards and adapts suggestions accordingly

Think of AI as a first-pass filter. It catches the obvious issues so human reviewers can focus on what actually requires human judgment—architecture decisions, business logic, and edge cases.

How AI Code Review Reduces Review Cycle Time

The primary value of AI code review is speed. But speed alone isn't useful if it comes at the cost of quality. Here's how AI shortens cycles while maintaining review standards.

Automating Repetitive Review Tasks

Most code review comments fall into predictable categories: formatting issues, naming conventions, missing documentation, basic logic errors. Important, yes, but they don't require senior engineering expertise.

AI handles repetitive checks automatically:

Style and formatting violations

Unused variables and imports

Common anti-patterns

Documentation gaps

Basic security issues like hardcoded credentials

When AI catches low-level issues first, human reviewers skip the tedious stuff and jump straight to meaningful feedback.
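Many of these low-level checks are deterministic rules. The snippet below is a simple rule-based illustration of that kind of first pass, not how any particular AI tool works. It uses Python's standard `ast` module to flag unused imports and string literals assigned to credential-like names. The `find_issues` function and the `SECRET_NAME` pattern are hypothetical choices made for this example.

```python
import ast
import re

# Hypothetical heuristic: variable names that suggest a secret is stored inline.
SECRET_NAME = re.compile(r"(password|passwd|secret|api_key|token)", re.IGNORECASE)


def find_issues(source: str) -> list[str]:
    """Return human-readable findings for one Python source file."""
    tree = ast.parse(source)
    imported: dict[str, int] = {}  # bound import name -> line number
    used: set[str] = set()         # names that are read somewhere
    findings: list[str] = []

    for node in ast.walk(tree):
        if isinstance(node, (ast.Import, ast.ImportFrom)):
            for alias in node.names:
                if alias.name == "*":
                    continue  # star imports can't be checked this way
                # "import os.path" binds "os"; "import x as y" binds "y".
                name = alias.asname or alias.name.split(".")[0]
                imported[name] = node.lineno
        elif isinstance(node, ast.Name) and isinstance(node.ctx, ast.Load):
            used.add(node.id)
        elif isinstance(node, ast.Assign):
            # Flag string literals assigned to credential-like names.
            value = node.value
            if isinstance(value, ast.Constant) and isinstance(value.value, str):
                for target in node.targets:
                    if isinstance(target, ast.Name) and SECRET_NAME.search(target.id):
                        findings.append(
                            f"line {node.lineno}: possible hardcoded credential in '{target.id}'"
                        )

    for name, lineno in imported.items():
        if name not in used:
            findings.append(f"line {lineno}: unused import '{name}'")
    return findings


sample = 'import os\nimport sys\nAPI_KEY = "abc123"\nprint(sys.argv)\n'
for finding in find_issues(sample):
    print(finding)
```

On the sample, this reports the credential on line 3 and the unused `os` import on line 1. Real review tools layer far more context on top of rules like these, but the principle of catching mechanical issues before a human looks is the same.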

Providing Instant Feedback on Every Pull Request

Traditional reviews depend on human availability. If your reviewer is in meetings all morning, your PR sits untouched. If they're in a different timezone, you might wait overnight.

AI reviews typically finish within minutes. A developer opens a PR and soon sees comments highlighting potential issues. They can fix problems immediately, before a human reviewer even looks at the code. Instead of waiting for feedback, developers iterate in real time.

Catching Bugs and Security Issues Before Human Review

AI excels at pattern matching. It scans for known vulnerability patterns, insecure configurations, and common bug signatures faster than any human.

Static application security testing (SAST) tools, often built into AI review platforms, analyze code for security flaws without executing it. SAST catches issues like SQL injection risks, cross-site scripting vulnerabilities, and exposed secrets before code ever reaches production.
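To make the SQL injection case concrete, here is a minimal Python sketch using the standard-library `sqlite3` module and a hypothetical in-memory `users` table. The first function builds the query with string formatting, the pattern SAST tools typically flag; the second uses a parameterized query. Function and table names are illustrative assumptions.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # Vulnerable: user input is spliced into the SQL text, so input such as
    # "x' OR '1'='1" changes the query's logic. This is what SAST flags.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # Safe: the "?" placeholder makes sqlite3 bind the value as data,
    # never as SQL syntax.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # every row in the table
    print(find_user_safe(conn, payload))    # []
```

The fix a reviewer would suggest is exactly the switch from the first function to the second, which is why automated tools can propose it with high confidence.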

When AI pre-filters obvious problems, human reviewers focus on higher-level concerns: Does this approach make sense? Will it scale? Does it align with our architecture?

Benefits of AI Code Review for Developer Productivity

Beyond faster cycles, AI code review delivers compounding benefits that improve how teams work day-to-day.

Improved Code Quality and Consistency

Large codebases with multiple contributors tend to drift. Different developers have different styles, different habits, different blind spots. Over time, the codebase becomes inconsistent, harder to read, harder to maintain.

AI enforces consistent standards across every PR, every contributor, every repository. The result is a cleaner, more maintainable codebase that's easier for new team members to understand.

Faster Bug Detection and Resolution

The earlier you catch a bug, the cheaper it is to fix. A bug found during code review costs a fraction of what it costs to fix in production, both in engineering time and customer impact.

AI shifts bug detection left in the development lifecycle. Issues surface during the PR stage, when context is fresh and fixes are simple.

Stronger Security Coverage Without Slowing Down

Security reviews often create friction. They add another step, another reviewer, another potential delay. Teams sometimes skip security checks under deadline pressure, and pay the price later.

AI security scanning runs in parallel with other checks. Every PR gets scanned for vulnerabilities, secrets, and misconfigurations automatically. Security coverage improves without adding friction to the workflow.

Better Developer Experience and Morale

Developers don't enjoy waiting. They don't enjoy nitpicky comments about semicolons. They don't enjoy feeling like their work is stuck in a queue.

When AI handles the tedious parts of review, developers get faster feedback, fewer frustrating comments, and more time for meaningful work.

Limitations and Challenges of AI Code Review

AI code review isn't perfect. Understanding its limitations helps you use it effectively.

Contextual Misinterpretation Risks

AI models don't understand your business. They don't know why you made a particular architectural decision or what edge cases matter most for your users.

Sometimes AI flags code that's perfectly appropriate for your context. Other times, it misses issues that require domain knowledge to spot. Human oversight remains essential.

CodeAnt AI is built to narrow this gap. Because the platform is context-aware, it doesn't just scan code; it analyzes each change in the context of your codebase. It reviews pull requests in real time, suggests actionable fixes, and can reduce manual review effort by up to 80%.

False Positives and False Negatives

False positives occur when AI flags acceptable code as problematic. False negatives occur when AI misses actual issues.

Both happen. Tuning AI tools to your codebase reduces false positives over time, but you'll never eliminate them entirely. Teams that treat AI suggestions as conversation starters, rather than final verdicts, get the best results.
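One way to tell whether tuning is paying off is to triage a sample of AI comments each sprint and compute precision (what share of flags were real issues) and recall (what share of real issues were flagged). Precision rises as false positives fall; recall rises as false negatives fall. This is a minimal Python sketch, assuming your team labels each comment itself; the function name and sample counts are hypothetical.

```python
def review_accuracy(true_pos: int, false_pos: int, false_neg: int) -> tuple[float, float]:
    """Return (precision, recall) for a triaged sample of AI review findings.

    true_pos:  AI comments that pointed at a real issue
    false_pos: AI comments that flagged acceptable code
    false_neg: real issues (found later by humans or in production) the AI missed
    """
    flagged = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall


# Hypothetical triage of one sprint's AI comments.
precision, recall = review_accuracy(true_pos=40, false_pos=10, false_neg=10)
print(f"precision={precision:.0%} recall={recall:.0%}")  # precision=80% recall=80%
```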

Overreliance on Automated Feedback

The biggest risk with AI code review is complacency. If developers assume AI catches everything, they stop thinking critically about their own code. If reviewers assume AI already checked for bugs, they skim instead of scrutinize.

AI augments human review. It doesn't replace it.

Best Practices for Implementing AI Code Review

Getting value from AI code review requires thoughtful implementation. Here's what works.

1. Treat AI Feedback as a First-Pass Filter

Configure your workflow so developers see AI feedback before requesting human review. They can fix obvious issues immediately, which means human reviewers see cleaner PRs with fewer distractions.

2. Combine AI Insights With Human Judgment

Establish clear guidelines for what AI handles versus what requires human sign-off. AI catches syntax, style, and common bugs. Humans evaluate architecture, business logic, and strategic decisions.

3. Embed AI Checks Into Your CI/CD Pipeline

Continuous Integration/Continuous Deployment (CI/CD) pipelines automate build, test, and deployment processes. Adding AI code review to your pipeline ensures every commit gets analyzed automatically—no manual steps required.

Platforms like CodeAnt AI integrate directly with GitHub, GitLab, Bitbucket, and Azure DevOps, adding automated feedback to your existing workflow.

4. Train Your Team on Reviewing AI Suggestions

Not every AI suggestion is correct. Teams benefit from guidance on when to accept, modify, or reject AI feedback. A brief onboarding session helps developers understand how to interpret AI comments and when to push back.

Metrics to Measure AI Code Review Success

How do you know if AI code review is working? Track a few key metrics.

| Metric | What It Measures | Why It Matters |
|---|---|---|
| Average PR review time | Hours from PR open to approval | Shows direct cycle time improvement |
| PR throughput | Number of PRs merged per sprint | Indicates team velocity |
| Defect escape rate | Bugs found in production vs. review | Measures review effectiveness |
| Developer satisfaction | Team feedback on review process | Tracks adoption and morale |

Average Pull Request Review Time

Measure the time from PR creation to final approval. If AI review is working, this number should drop within the first few sprints.
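As a rough sketch of the calculation, the Python below averages open-to-approval time in hours. The record format (ISO 8601 timestamp pairs, with `None` for PRs not yet approved) is an assumption; in practice the data would come from your Git host's export or API.

```python
from datetime import datetime
from statistics import mean
from typing import Optional

# Hypothetical sample data: (opened_at, approved_at) as ISO 8601 strings.
# approved_at is None for PRs that are still waiting for approval.
PRS = [
    ("2025-12-01T09:00", "2025-12-02T15:00"),  # 30 hours
    ("2025-12-03T10:00", "2025-12-03T14:00"),  # 4 hours
    ("2025-12-04T08:00", None),                # still open, excluded
]


def average_review_hours(records: list[tuple[str, Optional[str]]]) -> Optional[float]:
    """Mean hours from PR open to approval, ignoring unapproved PRs."""
    hours = [
        (datetime.fromisoformat(approved) - datetime.fromisoformat(opened)).total_seconds() / 3600
        for opened, approved in records
        if approved is not None
    ]
    return mean(hours) if hours else None


print(average_review_hours(PRS))  # 17.0
```

A few stalled PRs can pull the mean up sharply, so some teams also track the median (`statistics.median`) alongside it.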

PR Throughput and Merge Frequency

Higher throughput means faster feature delivery. Track how many PRs your team merges per week or sprint, and watch for improvement after AI adoption.
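Counting merges per week is simple to script. This minimal Python sketch groups hypothetical merge dates by ISO year and week; for sprint-based tracking, you would swap the ISO week key for your own sprint boundaries.

```python
from collections import Counter
from datetime import date


def merges_per_week(merge_dates: list[date]) -> dict[tuple[int, int], int]:
    """Count merged PRs per ISO (year, week), sorted chronologically."""
    # Keying on the ISO year as well as the week keeps week numbers from
    # different years (for example, week 1 of two years) from colliding.
    counts = Counter(tuple(d.isocalendar())[:2] for d in merge_dates)
    return dict(sorted(counts.items()))


# Hypothetical merge dates exported from your Git host.
merged = [date(2025, 12, 1), date(2025, 12, 3), date(2025, 12, 8)]
print(merges_per_week(merged))
```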

Defect Escape Rate

This metric tracks bugs that reach production versus bugs caught during review. A lower escape rate indicates AI is catching more issues before they ship.
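One common way to express this metric is production defects divided by all known defects (review plus production). The Python sketch below assumes that definition; the function name and the sample counts are hypothetical.

```python
def defect_escape_rate(found_in_review: int, found_in_production: int) -> float:
    """Share of all known defects that escaped review and reached production."""
    total = found_in_review + found_in_production
    if total == 0:
        return 0.0  # no defects recorded yet
    return found_in_production / total


# Hypothetical quarter: 45 defects caught in review, 5 reported in production.
print(f"{defect_escape_rate(45, 5):.0%}")  # 10%
```

Comparing this figure for the quarters before and after AI adoption gives a rough view of whether review is catching more issues earlier.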

Developer Satisfaction and Review Quality Scores

Survey your team periodically. Are reviews faster? Less frustrating? More useful? Satisfaction correlates with retention and long-term productivity.

AI Code Review Tools Worth Evaluating

Several tools bring AI-powered review to your existing workflow. Here's a quick comparison.

| Tool | AI Review | Security Scanning | CI/CD Integration | Best For |
|---|---|---|---|---|
| CodeAnt AI | Yes | Yes | Yes | Teams wanting unified code health |
| GitHub Copilot for PRs | Yes | Limited | GitHub only | GitHub-native teams |
| CodeRabbit | Yes | Limited | Yes | Fast PR summaries |
| SonarQube | Limited | Yes | Yes | Enterprise static analysis |
| Amazon CodeGuru | Yes | Yes | AWS only | AWS-centric organizations |

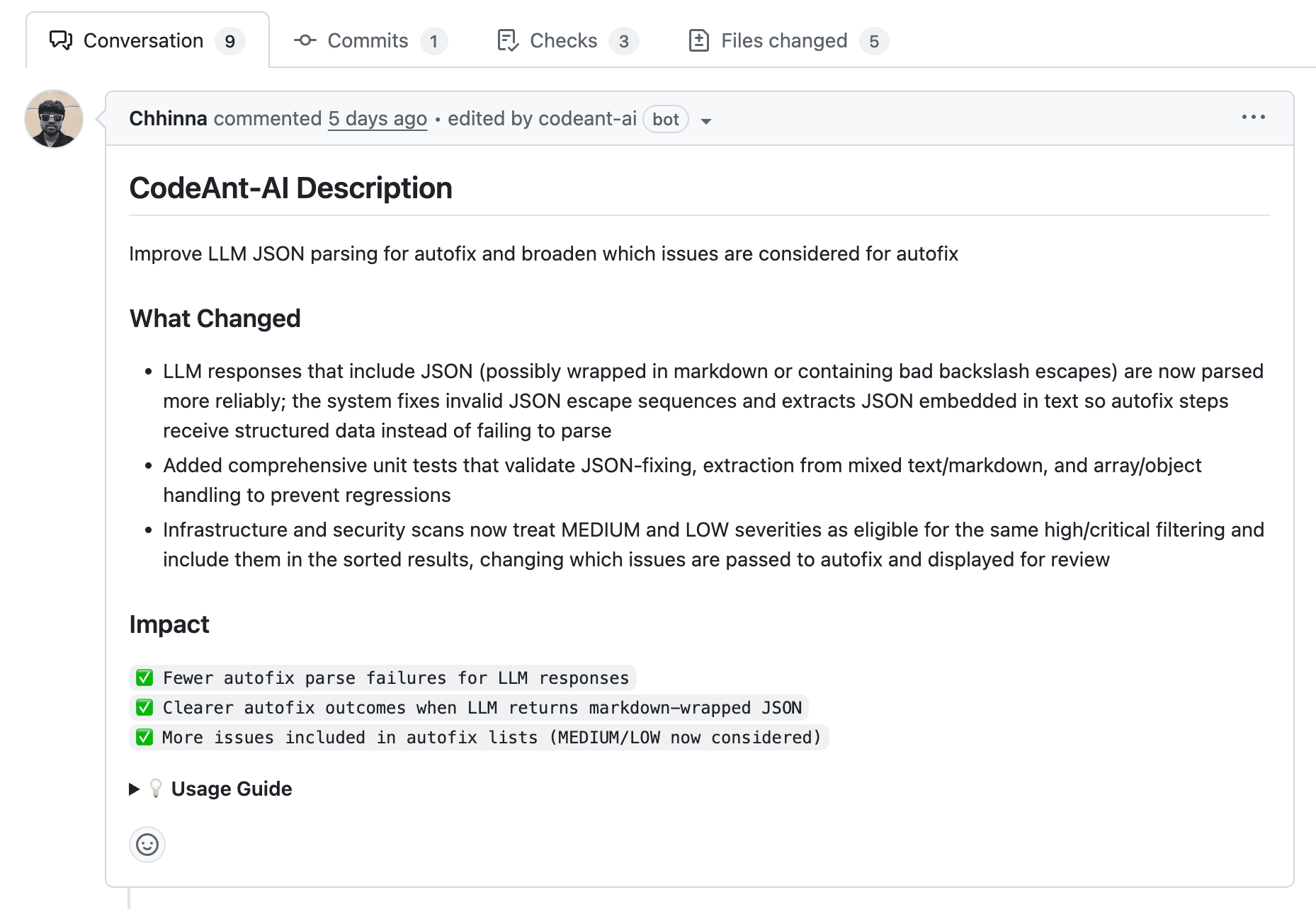

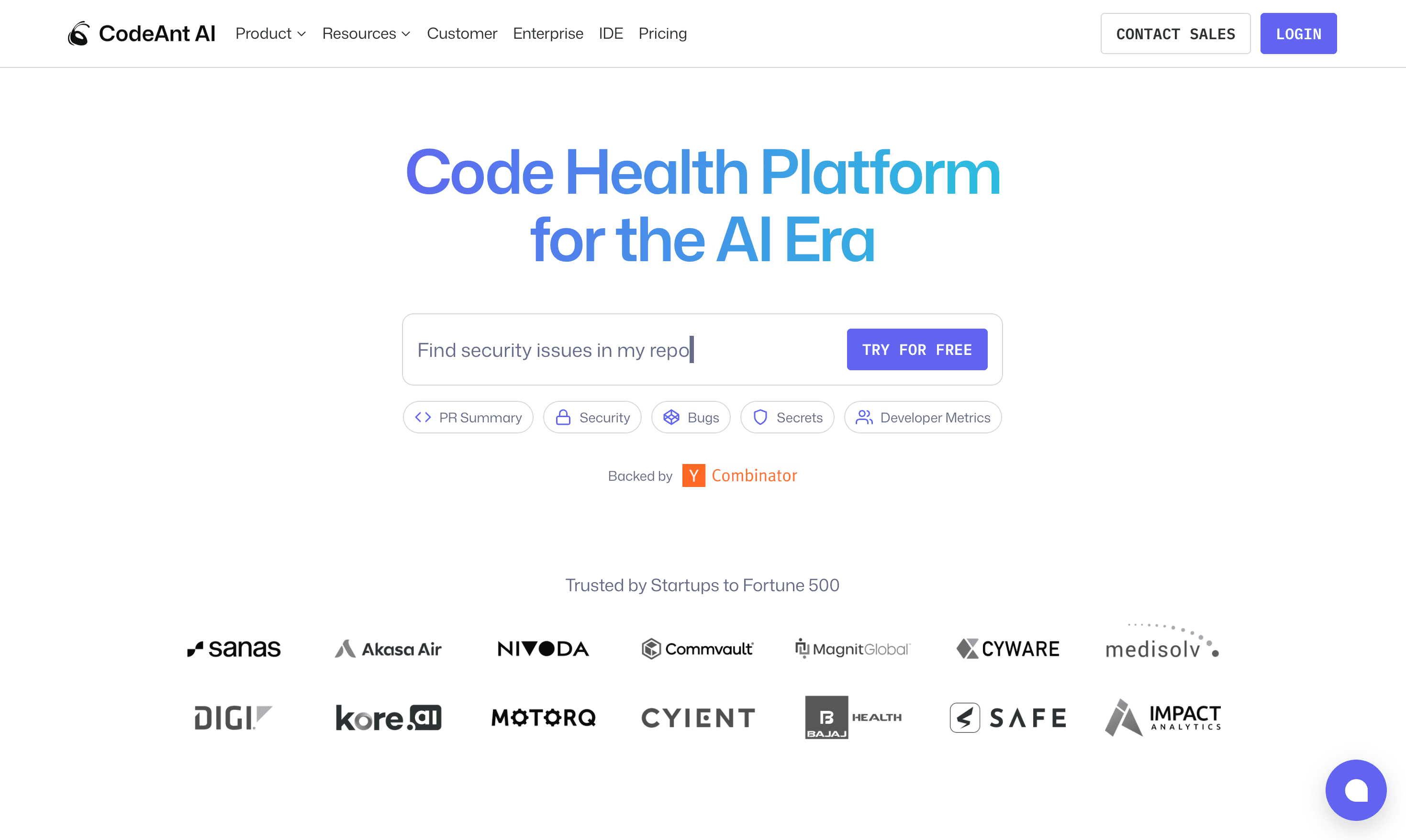

CodeAnt AI

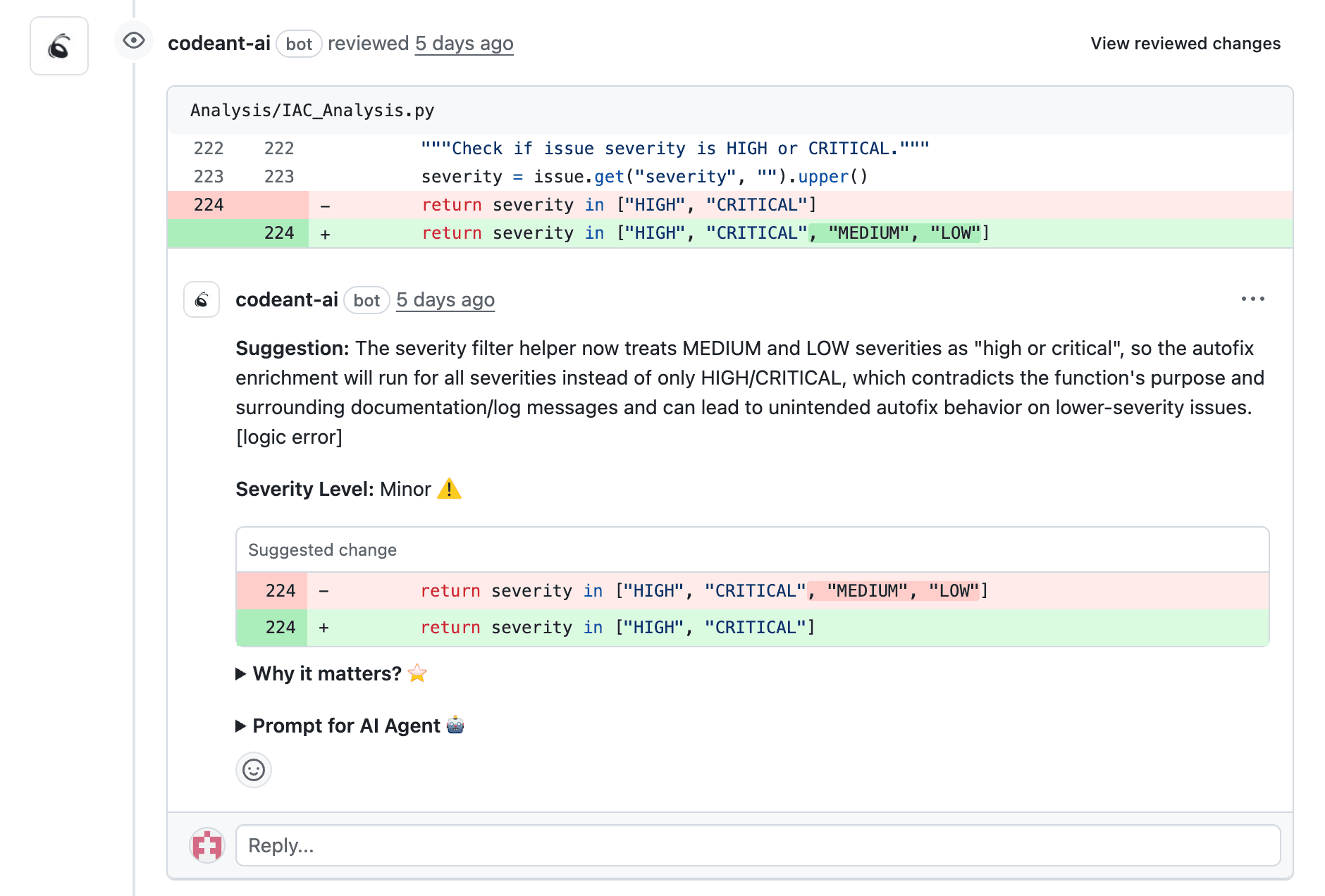

CodeAnt AI combines AI-powered code review, security scanning, and quality metrics in a single platform. It reviews every PR with line-by-line suggestions, scans for vulnerabilities and secrets, and tracks technical debt indicators like complexity and duplication.

Features:

Automatic PR reviews with actionable suggestions

Security scanning for vulnerabilities, secrets, and misconfigurations

Support for 30+ languages

Integration with GitHub, GitLab, Bitbucket, and Azure DevOps

DORA metrics and engineering insights

Pricing: 14-day free trial, no credit card required. Plans start at $10/user/month.

GitHub Copilot for Pull Requests

GitHub's AI assistant provides PR summaries and code suggestions directly within GitHub. It works well for teams already committed to the GitHub ecosystem, though security scanning capabilities are limited compared to dedicated tools.

Check out this GitHub Copilot alternative.

CodeRabbit

CodeRabbit focuses on fast, automated PR summaries and feedback. It's lightweight and quick to set up, making it a good option for teams that want AI review without extensive configuration.

Check out this CodeRabbit alternative.

SonarQube

SonarQube offers strong static analysis and quality gates, particularly for enterprise teams. Its AI capabilities are more limited than dedicated AI review tools, but it excels at enforcing code quality standards.

Check out this SonarQube alternative.

Amazon CodeGuru

CodeGuru provides ML-powered code recommendations for teams building on AWS. It integrates tightly with AWS services but offers limited value for teams using other cloud providers.

How to Balance AI Automation With Human Code Review

The goal isn't to remove humans from code review—it's to make human review more valuable.

Use AI for volume: Let AI make the first pass on every PR so humans can focus on flagged issues and the changes that need judgment

Reserve humans for judgment calls: Architecture decisions, business logic, and edge cases require human expertise

Set clear boundaries: Define which issues AI can auto-approve versus those requiring human sign-off

Review AI suggestions together: Use AI feedback as a conversation starter in team reviews
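"Set clear boundaries" can be written down as an explicit policy. The Python sketch below splits findings into those an AI reviewer may resolve and those that need human sign-off. The category labels, the `Finding` type, and `split_findings` are all hypothetical; a real policy would use whatever labels your review tool emits.

```python
from dataclasses import dataclass

# Hypothetical policy: categories the AI reviewer may resolve without a human.
# Everything else (security, architecture, business logic, unknown labels)
# is routed to a human reviewer for sign-off.
AI_RESOLVABLE = {"style", "formatting", "unused-code", "docs"}


@dataclass
class Finding:
    category: str
    message: str


def split_findings(findings: list[Finding]) -> tuple[list[Finding], list[Finding]]:
    """Split findings into (ai_resolvable, needs_human_signoff)."""
    auto: list[Finding] = []
    human: list[Finding] = []
    for finding in findings:
        (auto if finding.category in AI_RESOLVABLE else human).append(finding)
    return auto, human


auto, human = split_findings([
    Finding("formatting", "Line exceeds 120 characters"),
    Finding("security", "Query built from user input"),
])
print(len(auto), len(human))  # 1 1
```

Defaulting unknown categories to human review is a deliberate choice: a new or mislabeled finding type fails safe rather than slipping through.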

Faster Code Reviews Start With Smarter Automation

Long review cycles don't have to slow your team down. AI handles the repetitive checks, catches bugs and security issues early, and frees your senior engineers to focus on work that requires human judgment.

The teams shipping fastest aren't choosing between speed and quality. They're using AI to get both.

Ready to cut your review cycle time? Book your 1:1 with our experts today!