Dec 18, 2025

8 Ways to Maintain Code Review Quality During High-Pressure Sprints

Sonali Sood

Founding GTM, CodeAnt AI

It's the final days of a sprint, PRs are stacking up, and your team is torn between shipping on time and actually reviewing code properly. Something has to give, and usually it's review quality.

The tension between velocity and thoroughness isn't a character flaw. It's a process problem with practical solutions. This guide covers eight strategies that help teams maintain meaningful code reviews even when backlogs are high and deadlines are tight.

Why Code Review Quality Drops During High-Pressure Sprints

When backlogs pile up, code review quality drops first. Teams facing deadline pressure often rubber-stamp PRs, skip reviews entirely, or rush through feedback without catching critical issues. You've probably seen this before: a sprint deadline looms, PRs stack up, and suddenly "LGTM" becomes the default response.

The pattern is predictable. Reviewers skim instead of scrutinize. Authors merge without addressing comments. The backlog clears, but bugs slip into production, security vulnerabilities go unnoticed, and technical debt compounds quietly in the background.

Several factors drive this breakdown:

Backlog accumulation: PRs pile up faster than reviewers can process them

Context switching: Developers juggle coding and reviewing at the same time

Deadline anxiety: Teams prioritize shipping over catching issues

Reviewer bottlenecks: Senior developers become single points of failure

The good news? Thoughtful reviews and fast delivery aren't mutually exclusive. With the right practices and automation, your team can maintain quality even when pressure peaks.

What Makes a Good Code Review When Time Is Limited

A quality code review doesn't mean examining every line with equal intensity. When time is tight, effective reviewers focus on what matters most and let automation handle the rest.

Business Logic and Critical Path Validation

Start with the "why" behind the change. Does this code actually solve the intended problem? Understanding business context helps you catch logical errors that syntax checks miss entirely.

Ask yourself: if this code runs in production, will it behave as the author expects? This question surfaces issues that automated tools can't detect.

Security and Compliance Verification

Security checks can't be skipped regardless of time pressure. Authentication flows, data handling, and external integrations deserve careful attention because vulnerabilities hide in exactly those places.

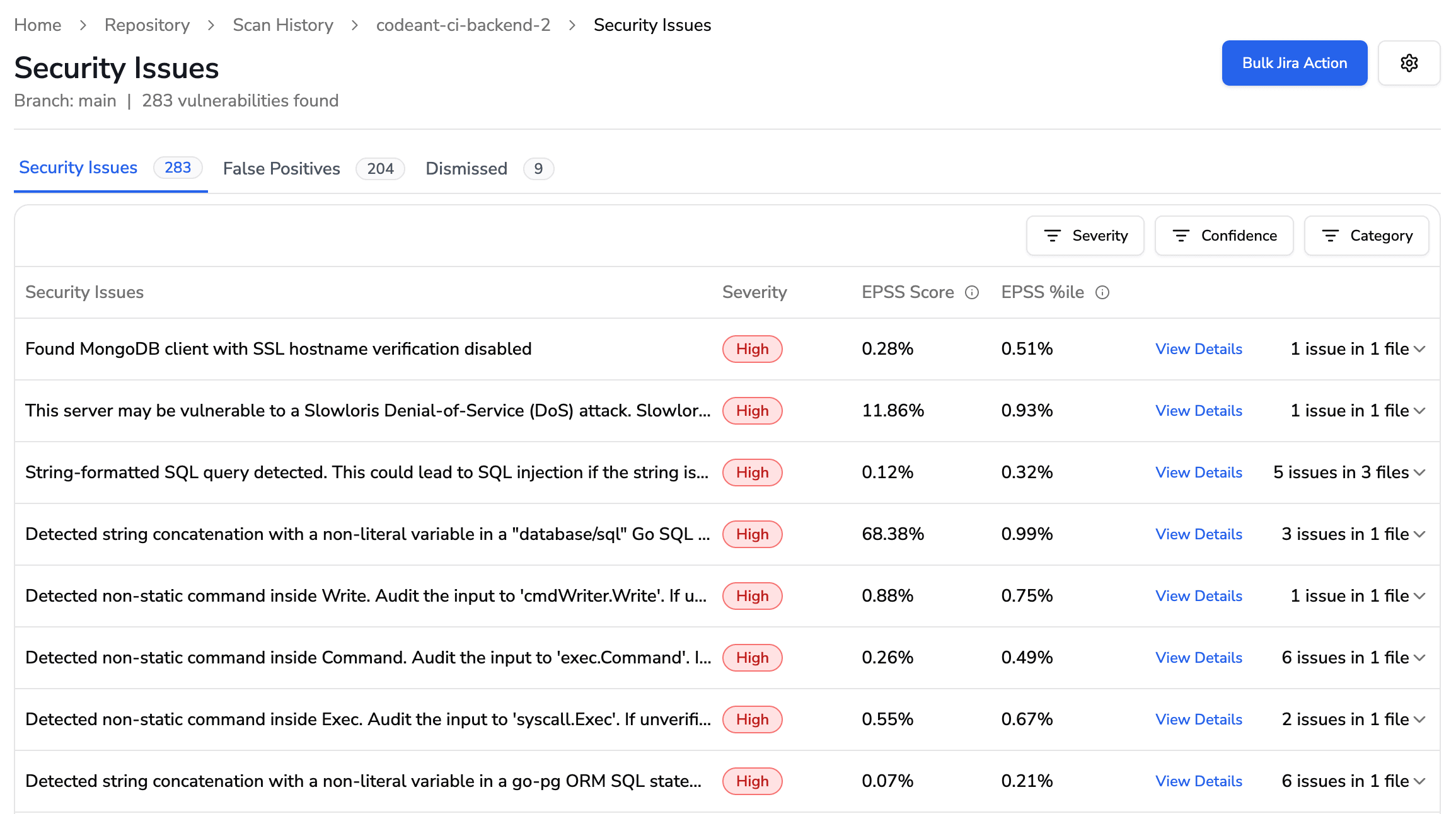

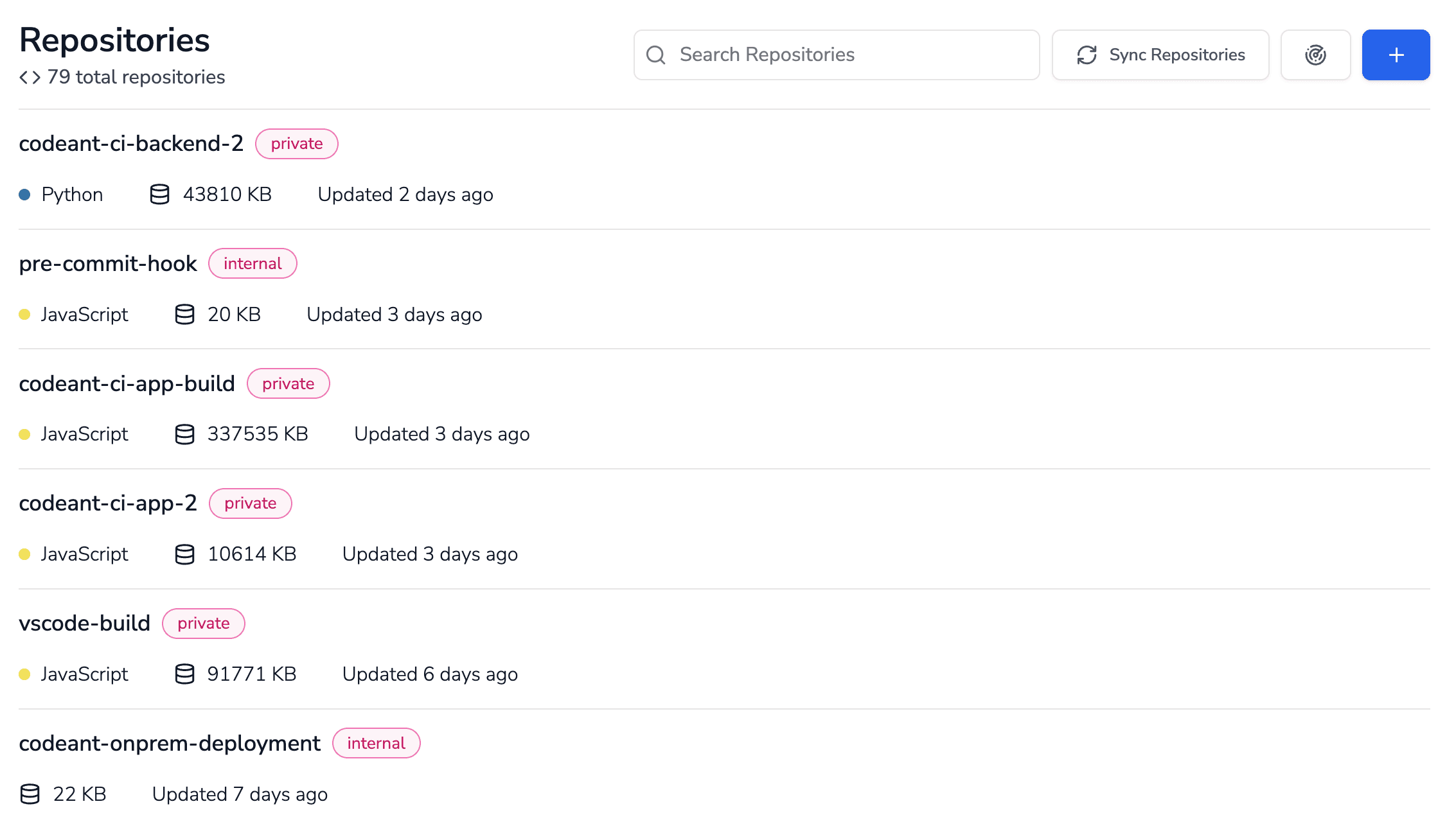

SAST tools scan code for known vulnerability patterns automatically. CodeAnt AI performs continuous security scanning across your codebase, catching issues before human review even begins.

Architectural Consistency Checks

New code that breaks established patterns creates maintenance headaches later. Verify that changes follow existing conventions and don't introduce unnecessary complexity.

Look for code that "works" but doesn't fit. A clever solution that ignores team standards often costs more to maintain than it saves in development time.

Test Coverage Assessment

Check that tests exist for new functionality. Edge cases matter, especially in code paths that handle errors, authentication, or financial calculations.

Missing tests aren't just a quality issue. They signal that the author may not fully understand how their code behaves under stress.

8 Best Practices for Thoughtful Code Reviews During Backlog Crunch

1. Set Clear Review SLAs for Your Team

A Service Level Agreement (SLA) for reviews creates accountability without forcing rushed feedback. Define expectations like first response within 4 hours and final approval within 24 hours.

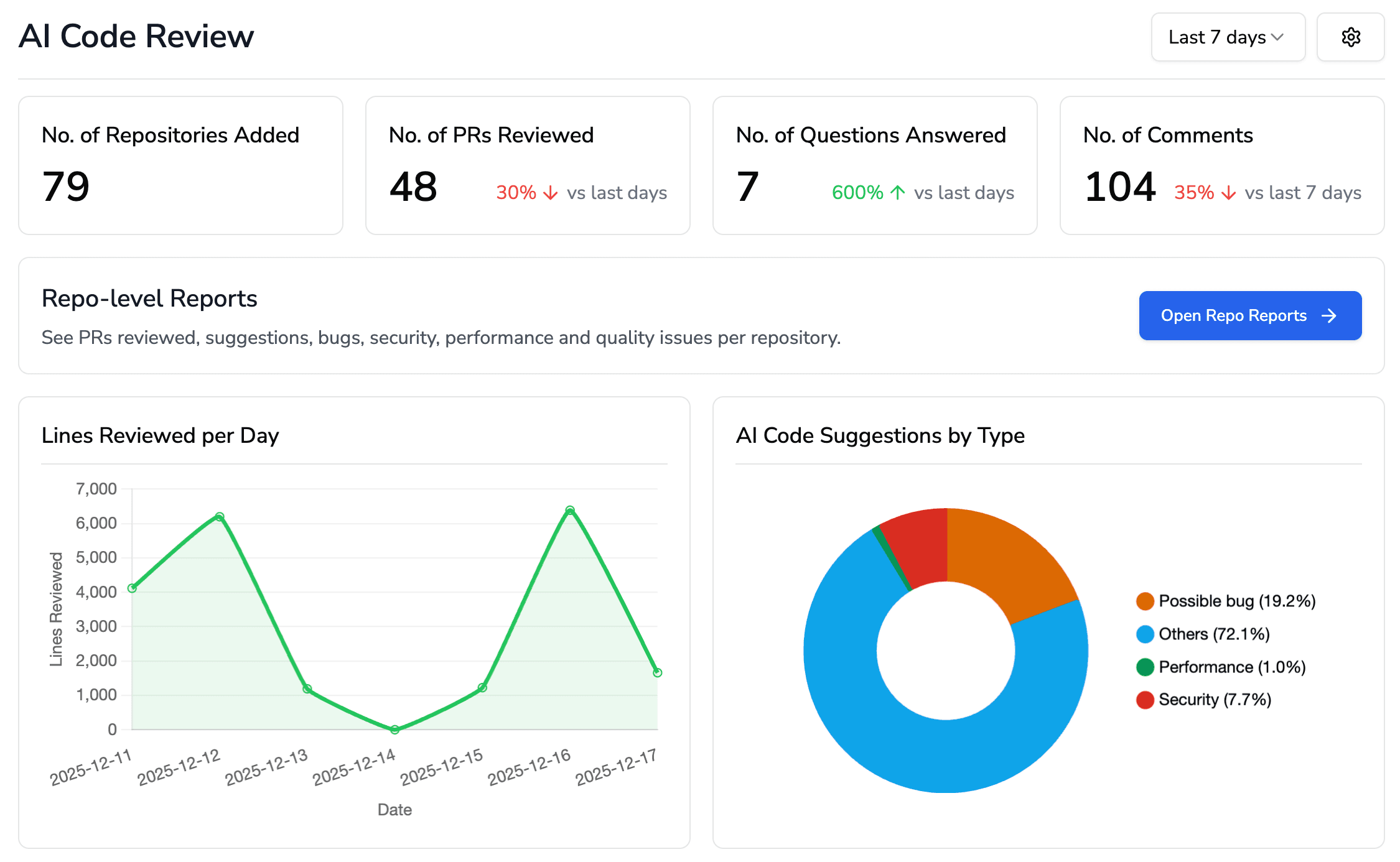

Time-bound commitments prevent PR stagnation while giving reviewers permission to take the time they actually need. CodeAnt AI tracks review cycle times automatically, helping you measure SLA compliance across your team.
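As a rough illustration, the example thresholds above could be checked with a few lines of Python, assuming you can export PR timestamps from your Git host. The `PullRequest` record and the `sla_breaches` helper are hypothetical names for this sketch, not part of any tool's API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Thresholds taken from the example SLA in the text; tune them for your team.
FIRST_RESPONSE_SLA = timedelta(hours=4)
APPROVAL_SLA = timedelta(hours=24)


@dataclass
class PullRequest:
    # Hypothetical record; populate it from your Git host's export or API.
    number: int
    opened_at: datetime
    first_response_at: Optional[datetime] = None
    approved_at: Optional[datetime] = None


def sla_breaches(pr: PullRequest, now: datetime) -> list[str]:
    """Return the SLA targets this PR has missed as of `now`."""
    breaches = []
    # A PR with no response yet is measured against the current time.
    responded = pr.first_response_at or now
    if responded - pr.opened_at > FIRST_RESPONSE_SLA:
        breaches.append("first_response")
    # Likewise, an unapproved PR is measured against the current time.
    approved = pr.approved_at or now
    if approved - pr.opened_at > APPROVAL_SLA:
        breaches.append("approval")
    return breaches
```

Running a check like this on a schedule makes stalled PRs visible before they block a release, without pushing reviewers to approve faster than they should.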

2. Break Large Pull Requests into Reviewable Chunks

Smaller PRs get faster, more thorough reviews. A 50-line change receives focused attention, while a 500-line change gets skimmed.

Aim for PRs that address a single concern:

Single responsibility: Each PR addresses one feature or fix

Logical boundaries: Split by component, layer, or functional area

Draft PRs: Open early for incremental feedback before final review
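One way to make PR size visible is to total the changed lines before requesting review. The sketch below assumes the input is the text produced by `git diff --numstat` (tab-separated added count, deleted count, and path per file). The 200-line soft limit and the function names are assumptions for illustration, not a recommendation from the source.

```python
def changed_lines(numstat: str) -> int:
    """Sum added and deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat.splitlines():
        parts = line.split("\t")
        if len(parts) < 3:
            continue  # Skip blank or malformed lines.
        added, deleted = parts[0], parts[1]
        # Binary files are reported as "-" and carry no line counts.
        if added.isdigit() and deleted.isdigit():
            total += int(added) + int(deleted)
    return total


def size_label(total: int, soft_limit: int = 200) -> str:
    """Classify a PR by size; `soft_limit` is an illustrative default."""
    return "ok" if total <= soft_limit else "consider splitting"
```

A label like this works best as a nudge in the PR description or a CI comment, since some large changes (generated files, renames) are legitimately hard to split.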

3. Use Standardized Code Review Checklists

Checklists ensure consistency when cognitive load is high. Reviewers don't waste mental energy remembering what to check because they follow a proven process instead.

A basic checklist might include:

Security vulnerabilities addressed

Error handling implemented

Breaking changes documented

Tests added or updated

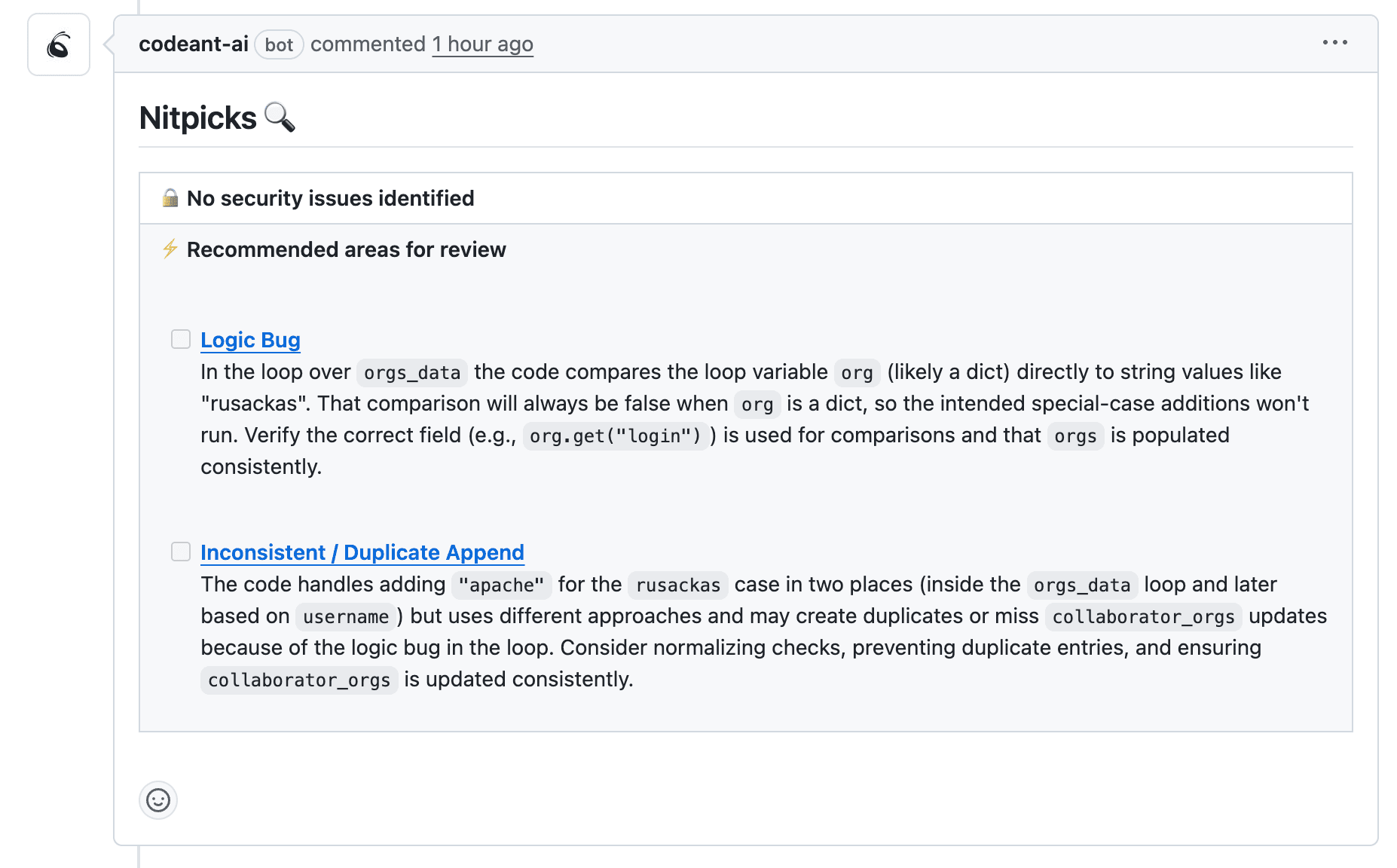

AI-powered tools like CodeAnt AI can automate checklist enforcement, flagging missing items before human review begins.
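For teams without such tooling, a simple version of checklist enforcement can be scripted. The sketch below assumes the PR description contains the checklist as Markdown task-list items (for example `- [x] Tests added or updated`) and reports which required items are still unchecked. It is an illustration only and does not reflect how CodeAnt AI or any other product implements this; `unchecked_items` is a hypothetical helper.

```python
import re

# The four items from the basic checklist in the text.
REQUIRED_ITEMS = [
    "Security vulnerabilities addressed",
    "Error handling implemented",
    "Breaking changes documented",
    "Tests added or updated",
]

# Matches Markdown task-list lines such as "- [x] Tests added or updated".
TASK_LINE = re.compile(r"^\s*[-*]\s+\[([ xX])\]\s+(.*?)\s*$")


def unchecked_items(description: str) -> list[str]:
    """Return required checklist items that are missing or not ticked."""
    checked = set()
    for line in description.splitlines():
        match = TASK_LINE.match(line)
        if match and match.group(1).lower() == "x":
            checked.add(match.group(2).lower())
    return [item for item in REQUIRED_ITEMS if item.lower() not in checked]
```

A CI step could fail, or just post a comment, when the returned list is non-empty.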

4. Prioritize High-Risk and Security-Critical Sections

Not every line deserves equal scrutiny. Triage your attention toward authentication logic, data validation, payment processing, and external API integrations.

Low-risk changes like formatting updates, documentation fixes, and simple refactors can receive lighter reviews. Save your deep focus for code that could cause real damage if it fails.
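The triage described above can be approximated by sorting changed files by path so that risky areas are reviewed first. This is a minimal sketch: the glob patterns are hypothetical and would need to match your repository's actual layout, and path matching is only a rough proxy for real risk.

```python
from fnmatch import fnmatchcase

# Hypothetical patterns; adjust them to your repository's layout.
HIGH_RISK_PATTERNS = ["*auth*", "*payment*", "*validat*", "*integrations/*"]
LOW_RISK_PATTERNS = ["*.md", "docs/*"]


def risk_level(path: str) -> str:
    """Classify a changed file as 'high', 'normal', or 'low' risk by path."""
    lowered = path.lower()
    # High-risk patterns are checked first so they win over low-risk ones.
    if any(fnmatchcase(lowered, pattern) for pattern in HIGH_RISK_PATTERNS):
        return "high"
    if any(fnmatchcase(lowered, pattern) for pattern in LOW_RISK_PATTERNS):
        return "low"
    return "normal"


def review_order(paths: list[str]) -> list[str]:
    """Order files so high-risk ones are reviewed first (stable sort)."""
    rank = {"high": 0, "normal": 1, "low": 2}
    return sorted(paths, key=lambda path: rank[risk_level(path)])
```

Checking high-risk patterns first is a deliberately conservative choice: a documentation file under an auth directory still gets a careful look.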

5. Automate Routine Checks with AI-Powered Review Tools

Automation handles repetitive tasks so human reviewers focus on logic and design. Linting, formatting, and basic security scans don't need to consume reviewer bandwidth.

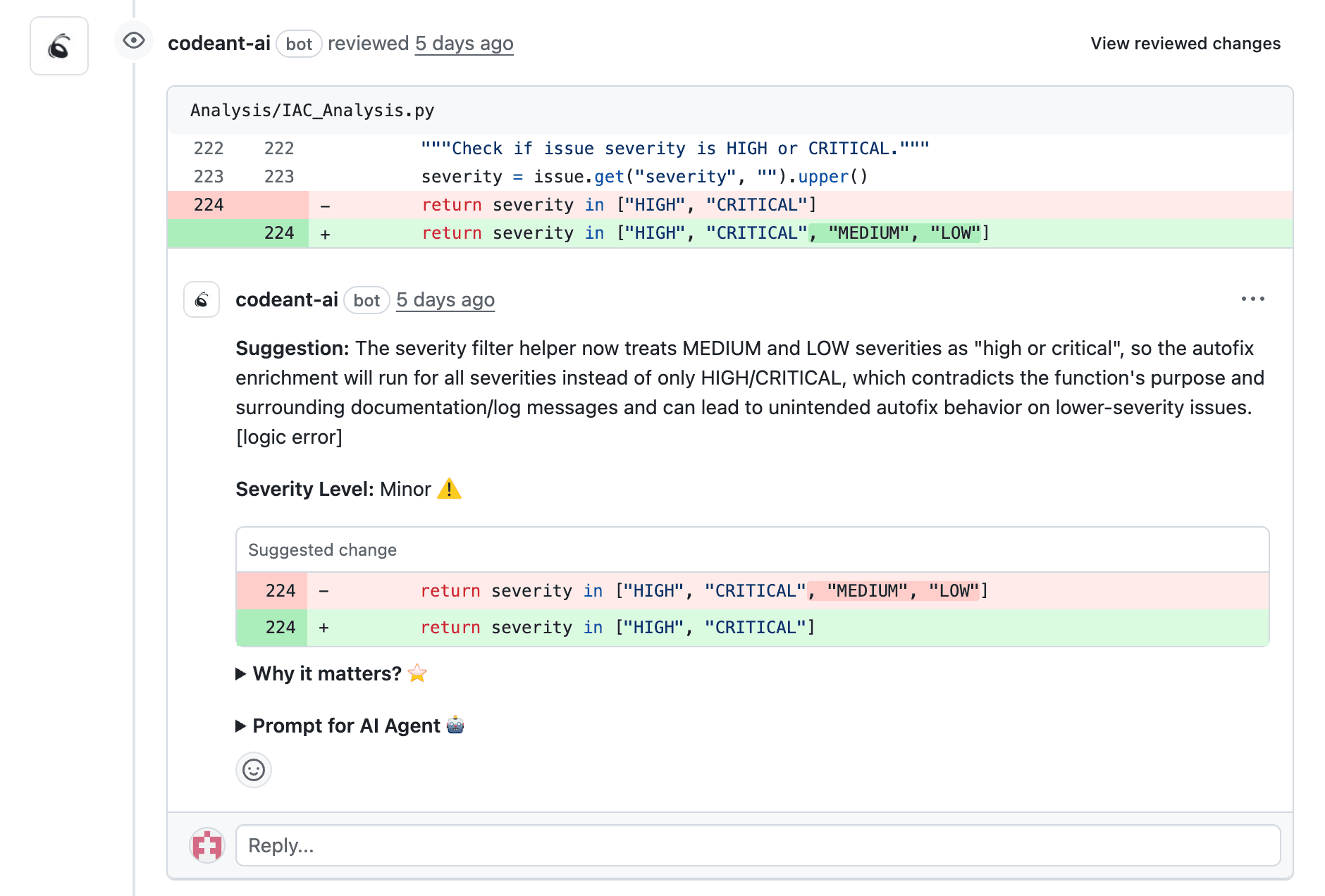

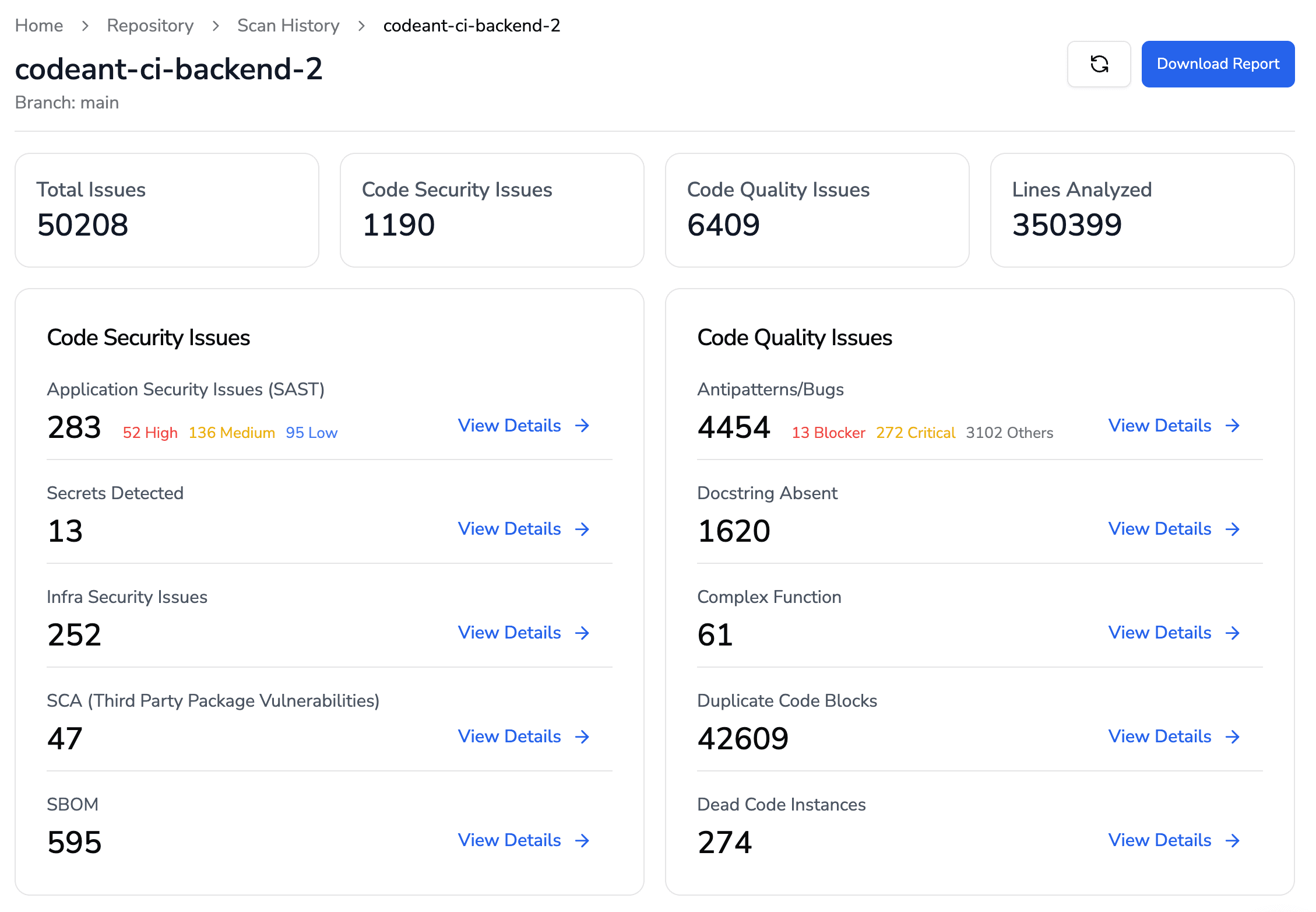

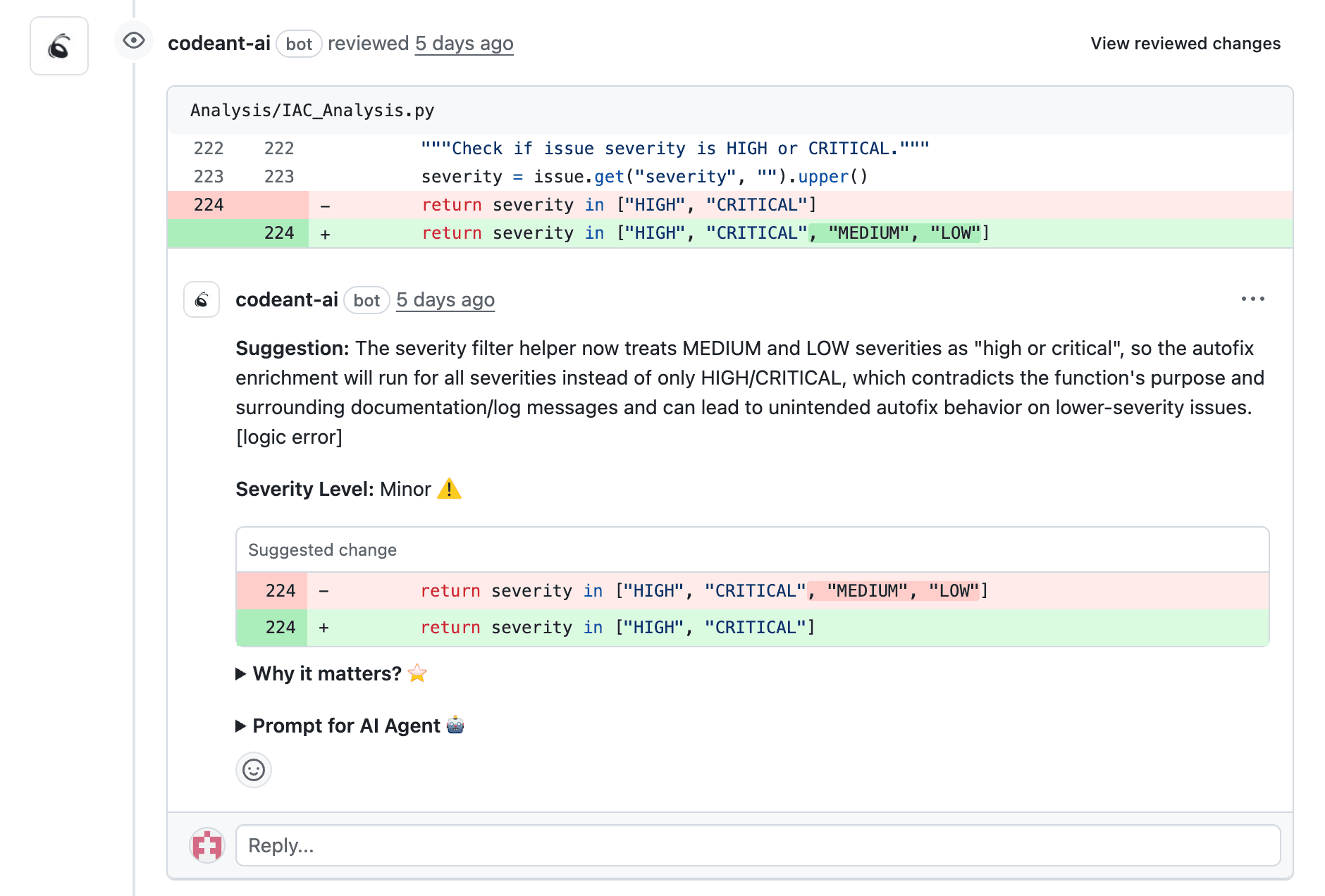

CodeAnt AI provides line-by-line AI reviews, security scanning, and fix suggestions automatically on every PR. The platform is context-aware: it doesn't just scan code but also accounts for coding patterns, team standards, and architectural decisions.

Tip: Start with automated checks that block merges on critical issues (security vulnerabilities, failing tests) while allowing warnings for style preferences.
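The block-versus-warn split in the tip can be expressed as a small gate function. In this sketch, the `Finding` record and the category names are assumptions for illustration; real tools report findings in their own formats.

```python
from dataclasses import dataclass

# Categories that should block a merge, per the tip above (names are illustrative).
BLOCKING = {"security", "failing_test"}


@dataclass(frozen=True)
class Finding:
    # Hypothetical shape for a result from any automated check.
    category: str
    message: str


def gate(findings: list[Finding]) -> tuple[bool, list[Finding], list[Finding]]:
    """Return (can_merge, blockers, warnings) for a set of findings."""
    blockers = [f for f in findings if f.category in BLOCKING]
    warnings = [f for f in findings if f.category not in BLOCKING]
    # Warnings are surfaced to the author but never prevent the merge.
    return (len(blockers) == 0, blockers, warnings)
```

Keeping the blocking set short matters: if style nits can block a merge during a crunch, people tend to start bypassing the gate altogether.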

6. Open Draft PRs Early for Incremental Feedback

Don't wait until code is "done" to request feedback. Draft PRs let authors get directional input early, preventing major rewrites late in the sprint.

Early feedback catches architectural misalignment before it becomes expensive. A 5-minute conversation on day one saves hours of rework on day five.

7. Distribute Review Load Across Team Members

Code ownership files (CODEOWNERS) and rotation strategies prevent bottlenecks. When one senior developer reviews everything, they become a single point of failure.

Spread reviews across the team. Junior developers learn faster when they review code, and their fresh perspective often catches issues that experienced eyes miss.
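A simple rotation strategy is to assign each new PR to the teammate with the fewest open reviews. The sketch below assumes you already have a count of open review requests per person; `pick_reviewer` and the sample names are hypothetical.

```python
def pick_reviewer(author: str, open_reviews: dict[str, int]) -> str:
    """Pick the least-loaded reviewer, never the PR's own author."""
    candidates = {
        name: count for name, count in open_reviews.items() if name != author
    }
    if not candidates:
        raise ValueError("no eligible reviewer")
    # Sort by load, then by name, so ties resolve deterministically.
    return min(candidates, key=lambda name: (candidates[name], name))
```

For example, with `{"ana": 3, "ben": 1, "chen": 1}` and `ben` as the author, the PR goes to `chen` rather than adding a fourth review to `ana`'s queue. In practice this would be combined with ownership rules, so that security-critical paths still reach someone qualified.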

8. Block Dedicated Time for Deep Code Reviews

Context switching destroys review quality. A reviewer who checks PRs between meetings produces shallow feedback.

Calendar blocking works. Reserve 30- to 60-minute blocks specifically for uninterrupted review sessions. Focused time produces better feedback than scattered attention throughout the day.

Code Review Metrics That Reveal Quality Issues Before They Escalate

Tracking metrics helps teams identify process breakdowns early. Without data, you're guessing whether reviews are actually effective.

| Metric | What It Measures | Warning Sign |
| --- | --- | --- |
| Review Cycle Time | Hours from PR open to merge | Increasing delays indicate bottlenecks |
| First Response Time | Time until first reviewer comment | Long waits signal capacity issues |
| Defect Escape Rate | Bugs found post-merge vs. in review | Rising rate means reviews miss issues |
| Review Coverage Ratio | Percentage of PRs receiving review | Low coverage suggests skipped reviews |

CodeAnt AI tracks review metrics automatically and surfaces them in dashboards. The platform also delivers developer-level insights like commits and PR sizes per developer, review velocity and response times, and security issues mapped to contributors.

Review Cycle Time

Review cycle time measures total time from PR submission to merge. Long cycle times hurt developer flow and indicate process problems. If PRs sit for days, authors lose context and motivation.

First Response Time

First response time tracks how quickly reviewers acknowledge PRs. Fast initial response keeps authors engaged and unblocked, even if full review takes longer.

Defect Escape Rate

Defect escape rate is the ratio of bugs caught in production versus during review. High escape rates signal that reviews aren't catching what they're supposed to catch.

Review Coverage Ratio

Review coverage ratio shows what percentage of code changes receive peer review. Dropping coverage during sprints signals teams are cutting corners under pressure.
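The metrics above reduce to simple arithmetic once the raw counts and timestamps are available. The sketch below makes some assumptions: defect escape rate is expressed as the share of all found bugs that were found after merge (one common formulation; the text describes it only as a ratio), coverage is reviewed PRs over merged PRs, and cycle time is summarized with a median. All function names are illustrative.

```python
from datetime import datetime
from statistics import median


def median_cycle_hours(prs: list[tuple[datetime, datetime]]) -> float:
    """Median hours from PR open to merge, given (opened, merged) pairs."""
    return median(
        (merged - opened).total_seconds() / 3600 for opened, merged in prs
    )


def defect_escape_rate(found_after_merge: int, found_in_review: int) -> float:
    """Share of all found bugs that escaped review (assumed definition)."""
    total = found_after_merge + found_in_review
    return found_after_merge / total if total else 0.0


def review_coverage(reviewed_prs: int, merged_prs: int) -> float:
    """Fraction of merged PRs that received at least one review."""
    return reviewed_prs / merged_prs if merged_prs else 0.0
```

A median is used for cycle time so that one PR left open over a holiday doesn't distort the picture. Comparing these numbers sprint over sprint is more informative than any single reading.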

How Automation Supports Thoughtful Reviews at Scale

When human bandwidth is limited, automated tooling maintains quality baselines. The goal isn't replacing human judgment but freeing humans to focus on decisions that require judgment.

Static Analysis and Security Scanning

Static analysis examines code without executing it, catching vulnerabilities, secrets, and misconfigurations automatically. These checks run before human review begins, filtering out obvious issues.
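To make "without executing it" concrete, here is a toy static check built on Python's standard `ast` module. It parses source text into a syntax tree and flags calls to `eval`/`exec` and string literals assigned to secret-looking names. The rule set and the `scan_source` helper are assumptions for illustration; production SAST tools apply far larger rule sets plus data-flow analysis.

```python
import ast

RISKY_CALLS = {"eval", "exec"}
# Illustrative name fragments that suggest a hardcoded credential.
SECRET_HINTS = ("password", "secret", "token", "api_key")


def scan_source(source: str) -> list[tuple[int, str]]:
    """Return (line, message) findings; the source is parsed, never run."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in RISKY_CALLS:
                findings.append((node.lineno, f"call to {node.func.id}()"))
        elif isinstance(node, ast.Assign) and isinstance(node.value, ast.Constant):
            # Only non-empty string literals count as possible secrets.
            if isinstance(node.value.value, str) and node.value.value:
                for target in node.targets:
                    if isinstance(target, ast.Name) and any(
                        hint in target.id.lower() for hint in SECRET_HINTS
                    ):
                        findings.append(
                            (node.lineno, f"hardcoded string in {target.id}")
                        )
    # ast.walk does not guarantee source order, so sort by line number.
    return sorted(findings)
```

Because the check only inspects the syntax tree, it is safe to run on untrusted PR content, which is what lets checks like this run before any human looks at the change.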

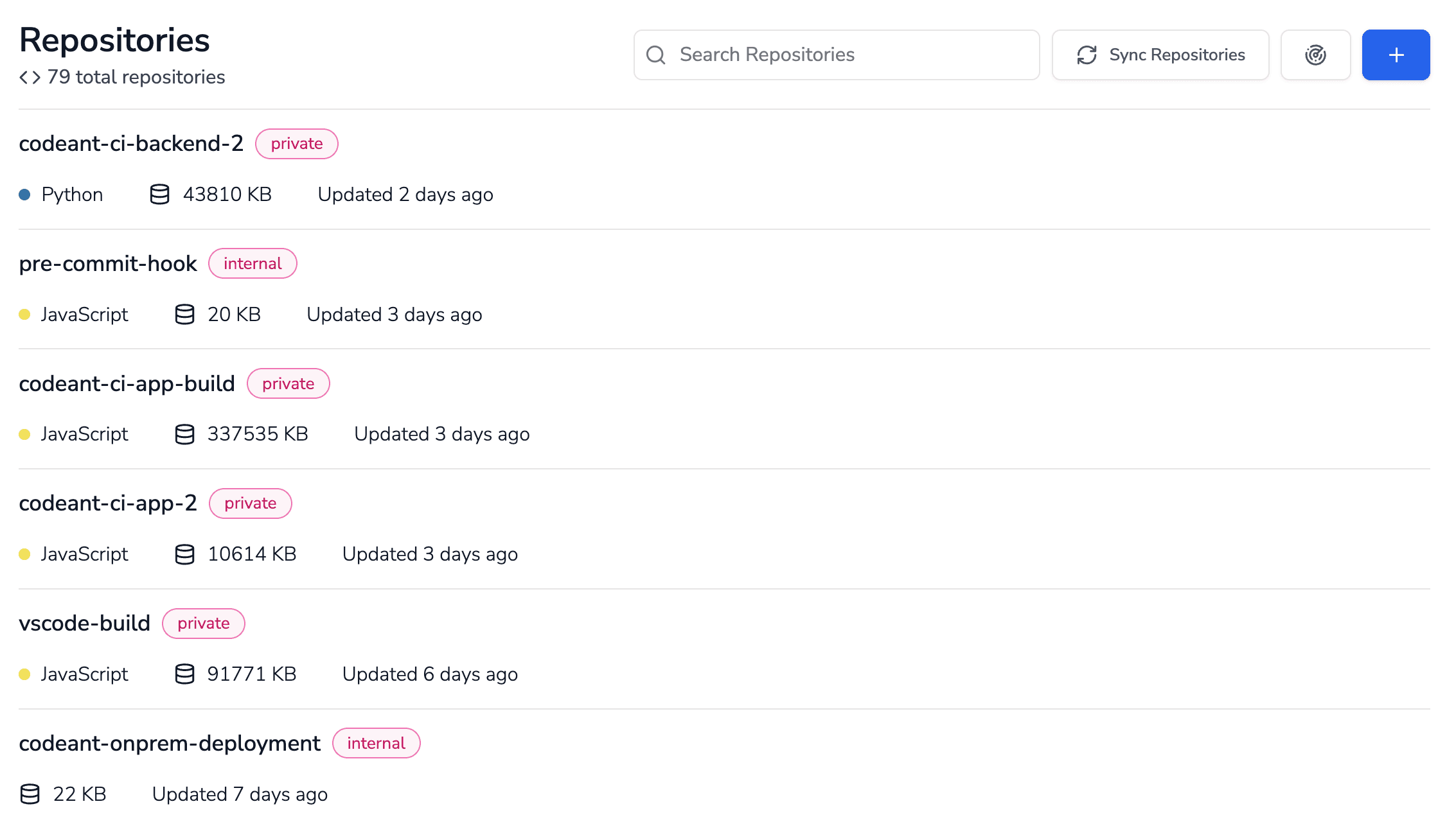

CodeAnt AI performs continuous security scanning across your entire codebase, not just new PRs. For existing code, it scans every repository, every branch, and every commit to uncover critical quality and security issues.

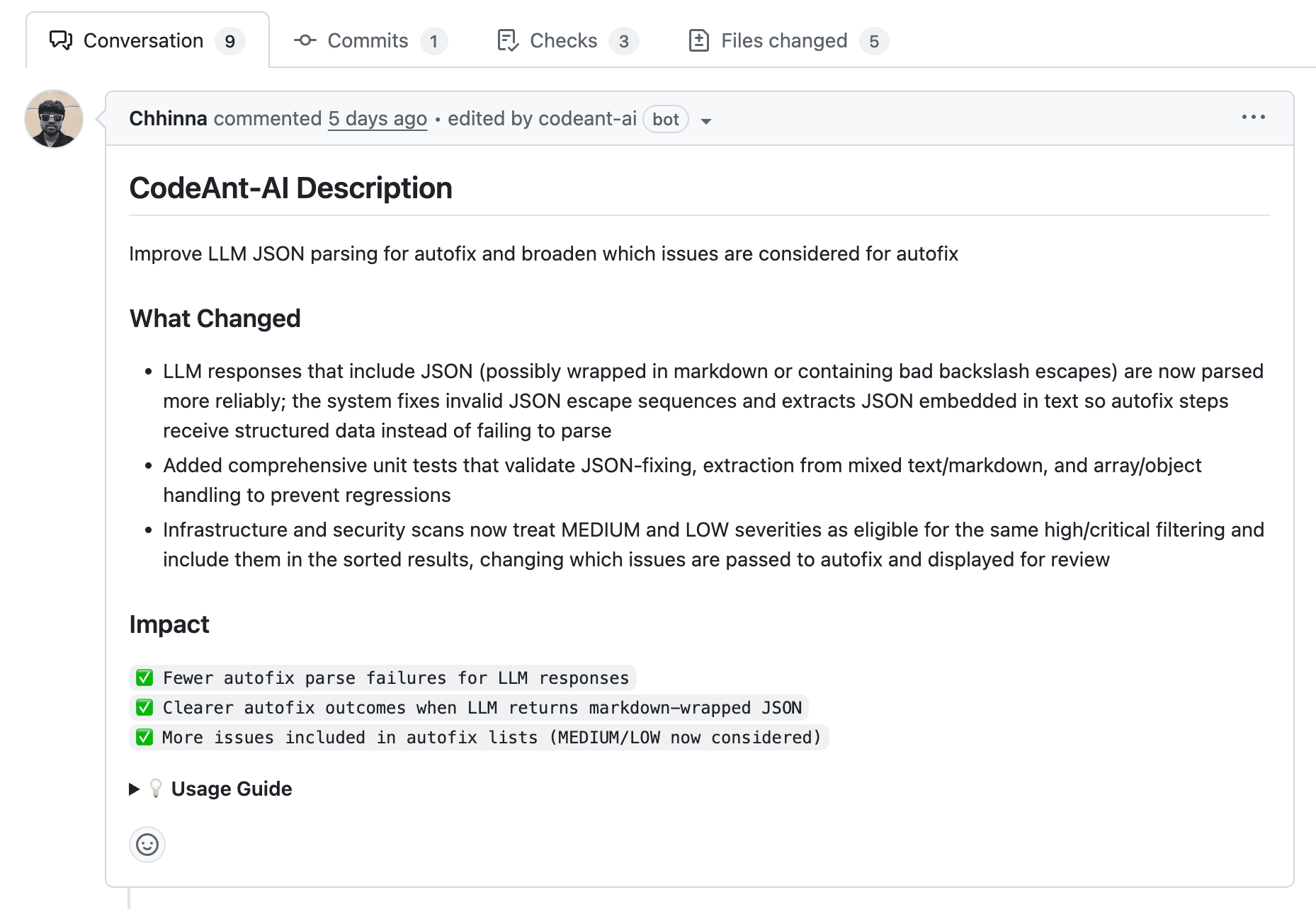

AI-Generated Summaries and Fix Suggestions

AI summarizes PR changes and suggests fixes, reducing cognitive load for reviewers. Instead of parsing hundreds of lines to understand intent, reviewers start with context.

CodeAnt AI reviews pull requests in real time and offers one-click fixes for common issues, reducing manual review effort.

Automated Standards Enforcement

Automation enforces organization-specific coding standards consistently. Human reviewers no longer waste time on style debates when tools handle enforcement automatically.

Consistency matters especially during high-pressure sprints, when reviewers might otherwise let style issues slide to save time.

Building a Code Review Culture That Holds Up Under Pressure

Process alone isn't enough. Team culture determines whether quality survives sprint pressure or becomes the first casualty.

Deliver Feedback That Is Specific and Actionable

Vague criticism wastes everyone's time. Specific guidance helps authors improve quickly.

Compare the difference:

Vague: "This could be better"

Specific: "Extract this logic into a helper function to improve testability"

The second comment tells the author exactly what to do and why. That's feedback they can act on immediately.

Make Clarification Requests Normal

Reviewers who ask questions aren't admitting ignorance. They're improving the codebase. If code requires explanation, it probably requires better documentation or clearer naming.

Encourage authors to welcome questions as opportunities to improve code clarity, not as criticism of their work.

Recognize Quality Reviews, Not Just Fast Merges

What you celebrate, you reinforce. If your team only recognizes shipping speed, quality will suffer under pressure.

Acknowledge thorough reviews that catch issues before production. Recognize reviewers who provide constructive, educational feedback. Over time, these behaviors compound.

Ship Faster Without Cutting Corners on Code Quality

Thoughtful reviews and fast delivery aren't mutually exclusive. Teams that combine the right practices, metrics, and automation maintain quality even during high-pressure sprints.

The key is working smarter, not just harder. Automate routine checks. Prioritize high-risk code. Track metrics that reveal problems early. Build a culture that values quality alongside velocity.

CodeAnt AI unifies code review, security, and quality in a single platform. The platform provides context-aware AI reviews, continuous security scanning, and engineering metrics that surface bottlenecks before they derail your sprint. Unlike tools that force you into separate add-ons for analytics, CodeAnt provides a complete picture out of the box, including DORA metrics, developer metrics, and test coverage.

Ready to maintain review quality under pressure? Book your 1:1 with our experts today!