AI Code Review

Jan 29, 2026

Top 5 No-Impact Severity Labels to Watch in Early 2026

Sonali Sood

Founding GTM, CodeAnt AI

As we settle into early 2026, the software development landscape has shifted. AI-generated code is now the norm, increasing both the volume of pull requests and the speed of deployment. However, this velocity brings a hidden challenge: the accumulation of "No-Impact" or "Minor" severity issues. These are code health warnings that don't crash the system or trigger PagerDuty, but they silently degrade maintainability and scalability.

Many engineering teams ignore these labels, focusing only on Critical or Major alerts that affect customers directly. But here's the thing: in an AI-accelerated environment, these minor issues compound quickly. What starts as a harmless duplication or a slight configuration drift can become technical debt that paralyzes your team's ability to ship new features. Understanding which of these "quiet" alerts to watch is essential for maintaining a healthy codebase this year.

What Are No-Impact Severity Labels?

In technical terms, a "No-Impact" or Minor severity label represents a risk score compressed into a category where the immediate blast radius is zero. In most severity schemes, these labels flag maintainability improvements, refactors, or low-risk cleanup tasks. Unlike Critical issues that signal security exposure or Major issues that indicate broken business logic, "No-Impact" labels tell you the code works, but it isn't healthy.

These labels essentially highlight "silent" failures. The application returns the correct response, and the user is happy, but the underlying structure is weakening. They often include dead code, minor style violations, or logic that is technically correct but unnecessarily expensive to compute. While they lack immediate consumer-facing consequences, they increase the cost-of-inaction, eventually leading to regressions or compute spikes.

Why Prioritize Them in Early 2026

The urgency to address these labels in 2026 stems from the sheer scale of code production. With AI tools acting as force multipliers, a single developer can generate significantly more lines of code than just two years ago. If your AI tools introduce minor inefficiencies or duplications at scale, you aren't just adding a few bad lines; you are systematizing technical debt.

Ignoring these labels creates a "death by a thousand cuts" scenario. A codebase riddled with minor severity issues becomes impossible to audit or refactor. Furthermore, as systems become more autonomous, "No-Impact" issues like confusing variable naming or lack of comments make it harder for both humans and AI agents to understand the system's intent later. Prioritizing these labels now ensures your infrastructure remains robust enough to handle the next wave of innovation.

Top 5 No-Impact Severity Labels to Watch

1. Low-CVSS Dependency Vulnerabilities

Not every security alert is a fire. Low-severity dependency warnings (a CVSS base score below 4.0) often get ignored because they lack a clear exploit path. However, these "sleeping" vulnerabilities are dangerous. A library with a minor flaw today can become a Critical vector when chained with a new feature tomorrow. In 2026, supply chain attacks often leverage these overlooked, low-priority entry points to move laterally through a system. Watching these ensures you aren't building on a crumbling foundation.
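To make "low-CVSS" concrete, here is a minimal sketch that maps a CVSS v3.x base score to its qualitative rating and keeps Low findings on a watchlist instead of discarding them. The rating bands follow the published CVSS v3.x scale; the package names and scores are invented for illustration.

```python
def cvss_rating(score: float) -> str:
    """Map a CVSS v3.x base score (0.0 to 10.0) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS base score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


# Hypothetical scanner output: (dependency name, CVSS base score).
findings = [
    ("example-http-lib", 3.1),
    ("example-yaml-parser", 7.5),
    ("example-image-utils", 2.4),
]

# Keep Low findings visible so they can be re-checked when new features land.
watchlist = [name for name, score in findings if cvss_rating(score) == "Low"]
print(watchlist)
```

The point of the watchlist is not to fix every Low finding today, but to make sure someone re-evaluates it when the surrounding code changes.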

2. Minor Configuration Drifts

Configuration drift usually starts small: a feature flag left on in a staging environment, or a timeout setting that differs slightly between services. These are classic "No-Impact" labels because the app still runs. However, they destroy determinism. When an incident occurs, these drifts make it hard to reproduce the failure, and "it works on my machine" becomes a valid, yet unhelpful, defense. Catching these drifts early prevents the chaos of debugging non-deterministic environments.
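A drift check can be as simple as comparing the same settings across environments. The sketch below assumes each environment's configuration has already been loaded into a plain dictionary; the environment names, keys, and values are hypothetical.

```python
from typing import Any


def find_drift(configs: dict[str, dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Return every key whose value differs (or is missing) across environments."""
    all_keys: set[str] = set()
    for settings in configs.values():
        all_keys.update(settings)

    missing = "<missing>"
    drift: dict[str, dict[str, Any]] = {}
    for key in sorted(all_keys):
        values = {env: settings.get(key, missing) for env, settings in configs.items()}
        # Compare by repr so unhashable values (lists, dicts) are handled too.
        if len({repr(value) for value in values.values()}) > 1:
            drift[key] = values
    return drift


# Hypothetical settings for two environments.
configs = {
    "staging": {"request_timeout_s": 30, "new_checkout_flag": True, "log_level": "info"},
    "production": {"request_timeout_s": 25, "new_checkout_flag": False, "log_level": "info"},
}

for key, values in find_drift(configs).items():
    print(key, values)
```

Some differences between environments are intentional, so in practice a check like this would need an allowlist of keys that are expected to differ.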

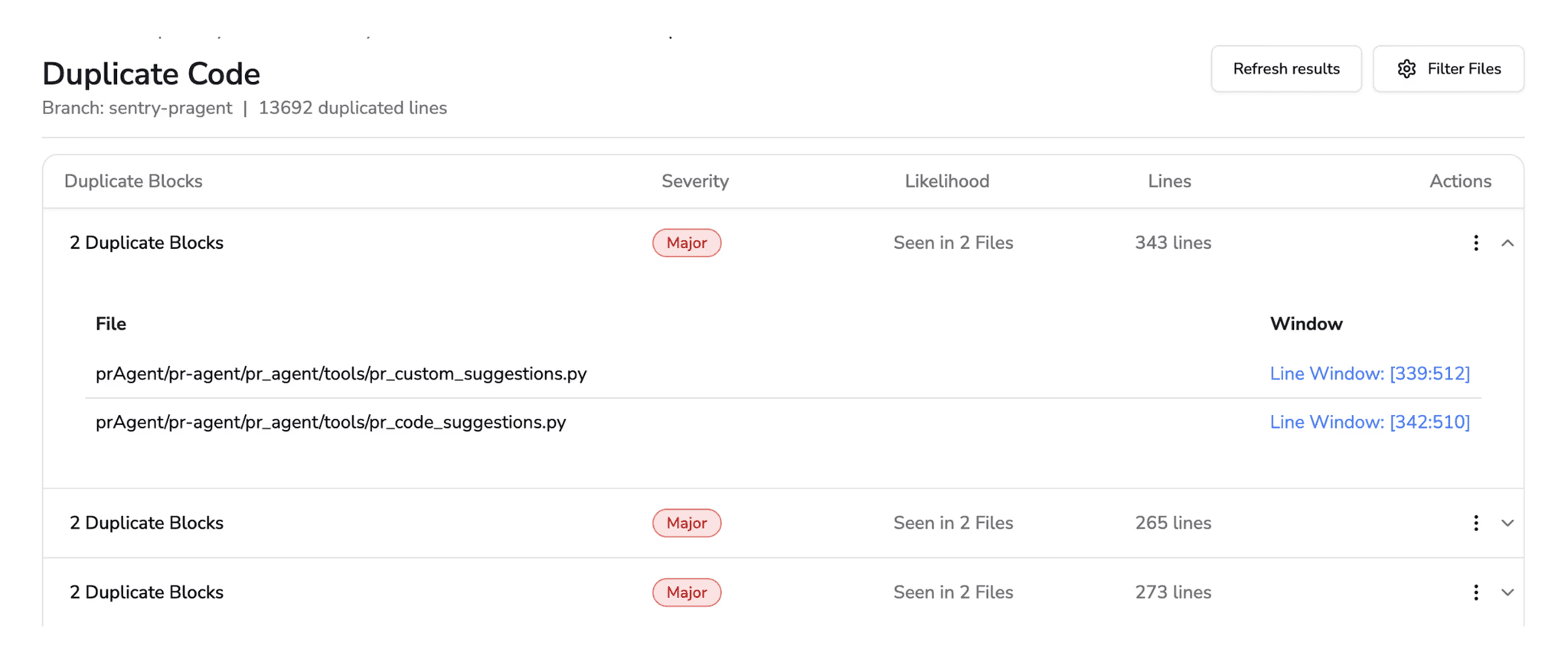

3. Code Duplication Alerts

AI coding assistants are notorious for generating duplicate logic. They solve a problem in one file and copy-paste that solution to another. While this doesn't break the build, it violates the DRY (Don't Repeat Yourself) principle. This is a maintenance nightmare. If a bug is found in one instance, it must be fixed in every other copy. If you miss one, you have a regression. These alerts are the best indicator of future maintenance costs.
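As a rough illustration of how a duplication alert might be produced, the sketch below fingerprints each function body with Python's `ast` module and groups functions whose bodies are structurally identical. It only catches exact structural copies (same statements, same variable names); real duplication detectors are considerably more tolerant. The sample functions are invented.

```python
import ast
import hashlib
from collections import defaultdict


def find_duplicate_functions(source: str) -> list[list[str]]:
    """Group function names whose bodies are structurally identical."""
    groups: dict[str, list[str]] = defaultdict(list)
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.FunctionDef):
            # Dump only the body, so the function's own name does not
            # affect the fingerprint. Line numbers are excluded by default.
            body_dump = "".join(ast.dump(stmt) for stmt in node.body)
            digest = hashlib.sha256(body_dump.encode()).hexdigest()
            groups[digest].append(node.name)
    return [sorted(names) for names in groups.values() if len(names) > 1]


SAMPLE = '''
def normalize_email(value):
    return value.strip().lower()

def clean_username(value):
    return value.strip().lower()

def double(value):
    return value * 2
'''

print(find_duplicate_functions(SAMPLE))
```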

4. Elevated Cyclomatic Complexity

Cyclomatic complexity measures the number of linearly independent paths through a program's source code. A function with high complexity might work perfectly (Zero Impact), but it is fragile. It indicates that the logic is too convoluted to be easily tested or understood. If you see this label, it means your code is becoming a "black box" that developers will be afraid to touch, slowing down future feature development.
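For a single function, a common approximation is one plus the number of decision points. The sketch below counts those with Python's `ast` module; it is a simplified estimate (it ignores `match` statements and comprehension filters, for example) rather than a full implementation of the metric, and the sample function is hypothetical.

```python
import ast

# Node types treated as one decision point each in this approximation.
_BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Estimate complexity of a snippet as 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds two extra paths, one per operator.
            complexity += len(node.values) - 1
    return complexity


SAMPLE = '''
def shipping_cost(order):
    if order.total > 100 and order.is_member:
        return 0
    for item in order.items:
        if item.oversized:
            return 25
    return 5
'''

print(cyclomatic_complexity(SAMPLE))  # 1 + if + and + for + if = 5
```

Teams typically set a threshold per function and flag anything above it for refactoring; the right threshold is a team decision, not something this sketch prescribes.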

5. Suboptimal Test Coverage

This label often appears when tests pass, but coverage metrics fall slightly below target. It's easy to dismiss as noise. However, "suboptimal" often means the "happy path" is tested, but edge cases are ignored. In 2026, where systems are increasingly complex and distributed, edge cases are where the bugs live. This label is a warning that your safety net has holes, leaving you vulnerable to silent failures during refactors.

How No-Impact Labels Emerge and Escalate

No-impact labels rarely stay that way. They emerge from the gap between "working code" and "correct code." A developer might skip a tenant filter because it's an internal tool (Minor severity), or an AI might hallucinate a retry loop that works but wastes compute (Performance impact). Initially, these are harmless.

However, without a Trace + Attack Path analysis, these minor issues escalate. A missing filter becomes a data leak when the internal tool is exposed publicly. A retry loop becomes a self-inflicted denial of service on your own database during high traffic. The issue propagates through the system, from entry point to downstream calls, turning a "cleanup" task into a Major incident. Understanding this propagation is key to realizing why "No-Impact" is often a temporary state.
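The retry-loop case is worth a concrete example. An unbounded, immediate retry hammers a struggling database exactly when it can least afford it. One common mitigation, sketched here under assumptions, is to cap the number of attempts and back off exponentially with jitter. The function name, defaults, and the choice of `ConnectionError` as the retryable error are illustrative, not taken from any particular library.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 4,
    base_delay_s: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run operation, retrying on ConnectionError with capped, jittered backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # Give up instead of retrying forever.
            # Upper bound doubles each attempt; the random jitter keeps many
            # clients from retrying in lockstep.
            delay = random.uniform(0, base_delay_s * 2 ** (attempt - 1))
            sleep(delay)
    raise AssertionError("unreachable")
```

The `sleep` parameter exists only so the behavior can be tested without real waiting; callers would normally leave it at the default.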

Best Practices for Detection and Remediation

Prioritize Risk-Based Triage

You cannot fix everything, nor should you. The goal is to use Impact Areas to categorize failures. Is this minor issue affecting reliability, security, or just style? Use a severity matrix that weighs the likelihood of failure against the cost of inaction. If a "No-Impact" label involves data validation or authentication logic, treat it as a latent Critical risk and prioritize it immediately.
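A severity matrix like this can be sketched in a few lines. The version below multiplies likelihood by cost of inaction and escalates anything touching authentication or data validation, as described above. The 1-to-3 scales, the thresholds, and the bucket names are assumptions chosen for illustration; each team would calibrate its own.

```python
from dataclasses import dataclass

# Areas where a "No-Impact" label is treated as a latent Critical risk.
SENSITIVE_AREAS = {"authentication", "data_validation"}


@dataclass(frozen=True)
class Finding:
    title: str
    label: str             # severity label assigned by the scanner
    area: str              # impact area, e.g. "style" or "reliability"
    likelihood: int        # 1 (rare) to 3 (likely)
    cost_of_inaction: int  # 1 (cosmetic) to 3 (expensive)


def triage_priority(finding: Finding) -> str:
    """Return a triage bucket: "fix-now", "schedule", or "backlog"."""
    if finding.area in SENSITIVE_AREAS:
        return "fix-now"
    score = finding.likelihood * finding.cost_of_inaction
    if score >= 6:
        return "fix-now"
    if score >= 3:
        return "schedule"
    return "backlog"


# Hypothetical findings, all labeled Minor by the scanner.
findings = [
    Finding("Unused import", "Minor", "style", 1, 1),
    Finding("Duplicated tax calculation", "Minor", "reliability", 2, 2),
    Finding("Missing length check on signup form", "Minor", "data_validation", 1, 1),
]

for finding in findings:
    print(f"{finding.title}: {triage_priority(finding)}")
```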

Integrate Automated Scanning

Manual review is too slow for modern velocity. You need automated tools that act as a quality gate, not just a second opinion. These tools should continuously scan for maintainability issues, complexity spikes, and configuration drifts. By integrating these checks into your CI/CD pipeline, you catch "No-Impact" issues before they merge. This shifts the focus from "finding bugs" to "preventing rot."

Foster Developer-Led Fixes

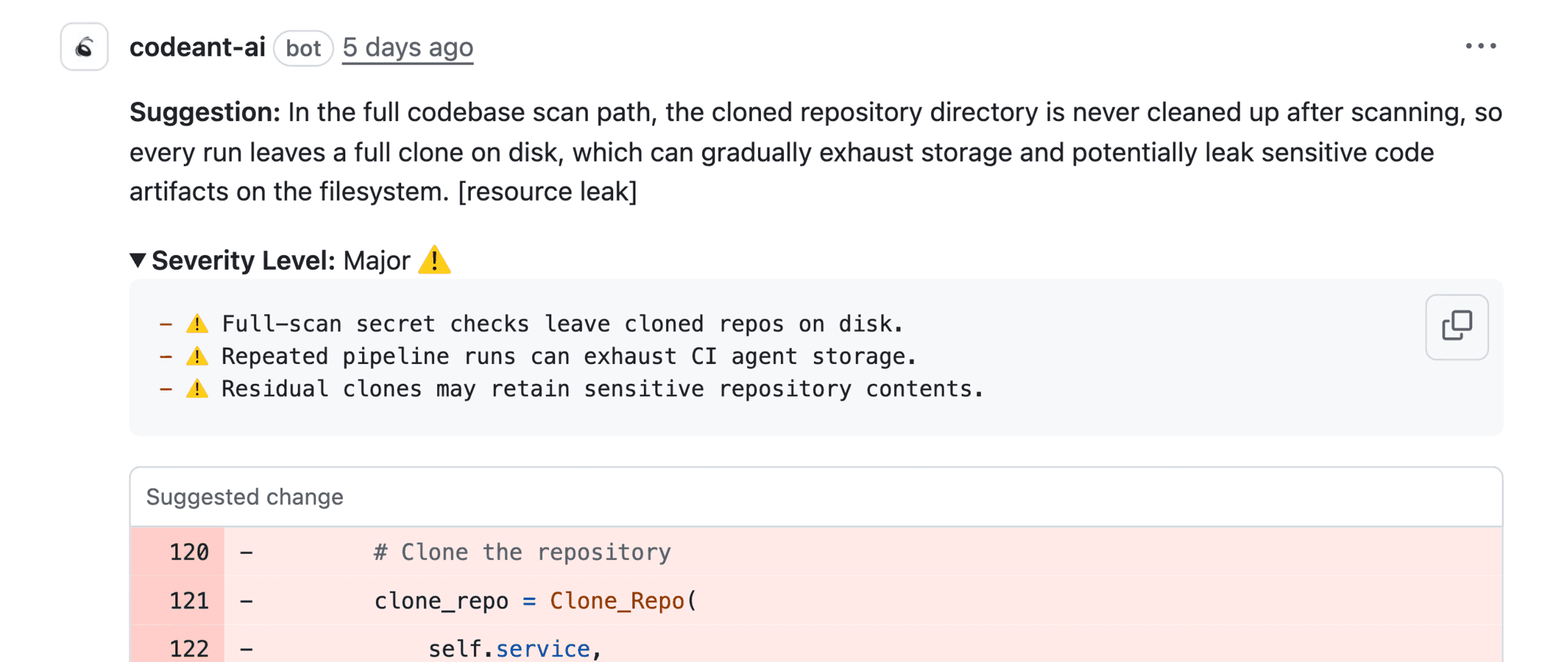

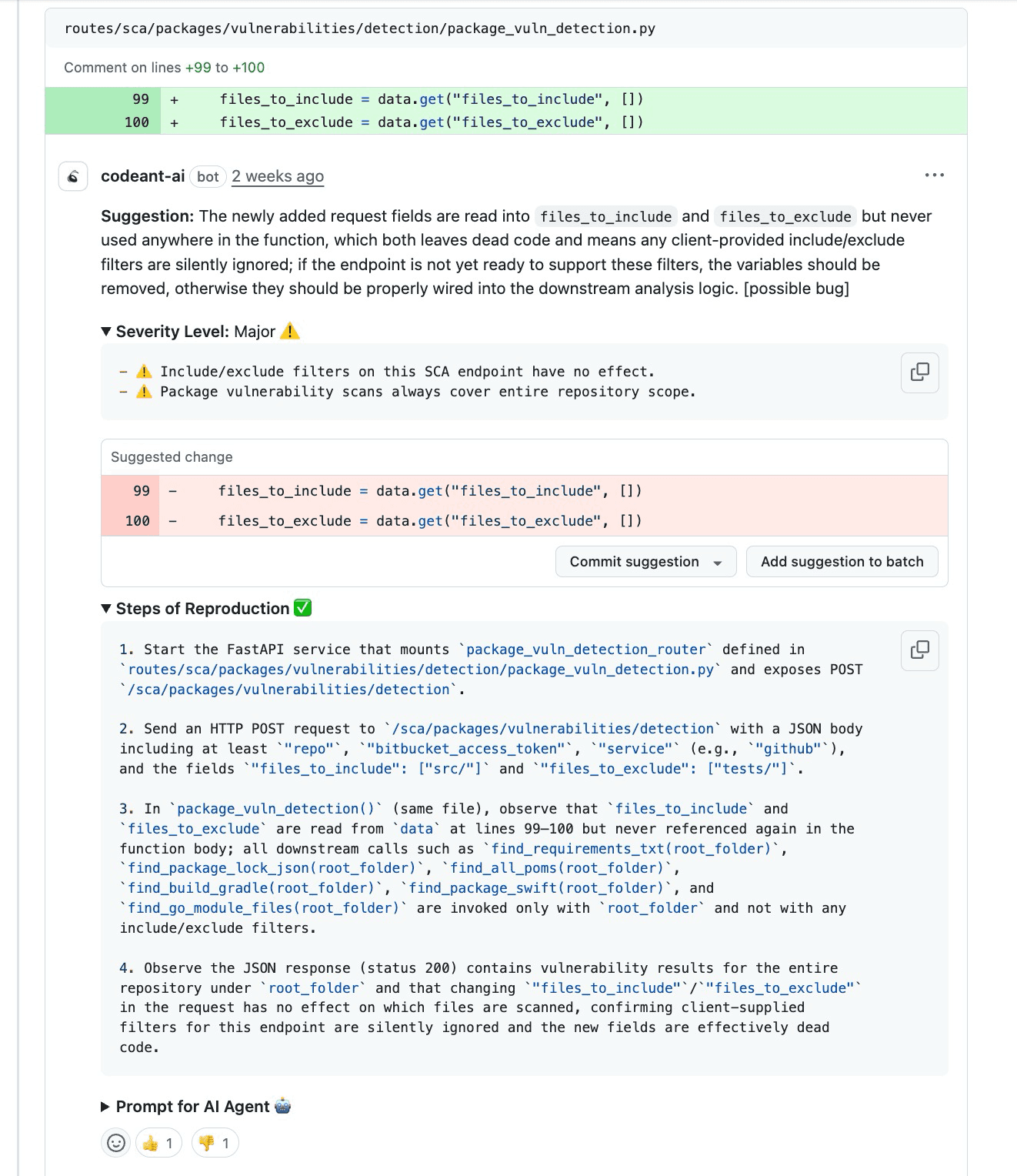

The best way to handle minor severity labels is to empower developers to fix them as they go. This requires Steps of Reproduction that prove the issue exists.

When a tool provides a deterministic checklist (entry point, trigger condition, and expected vs. actual output), developers can verify and fix the issue quickly. This moves the conversation from arguing about opinions to validating engineering statements.
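One way to picture such a checklist is as a small, executable record. The sketch below is a hypothetical structure, not the output format of any specific tool: it stores the entry point, the triggering input, and the expected output, then runs the entry point to capture the actual output. The `apply_discount` function and its bug are invented for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Reproduction:
    entry_point: Callable[..., Any]  # where the issue is reachable
    trigger_args: tuple[Any, ...]    # input that triggers it
    expected: Any                    # what correct behavior would return

    def run(self) -> tuple[bool, Any]:
        """Return (issue_reproduced, actual_output)."""
        actual = self.entry_point(*self.trigger_args)
        return actual != self.expected, actual


def apply_discount(price_cents: int, percent: int) -> int:
    # Hypothetical bug: percent is never clamped to the 0..100 range.
    return price_cents * (100 - percent) // 100


repro = Reproduction(entry_point=apply_discount, trigger_args=(10_000, 110), expected=0)
reproduced, actual = repro.run()
print(f"reproduced={reproduced}, expected={repro.expected}, actual={actual}")
```

Because the record is runnable, the same check can confirm the fix: once the percentage is clamped, `run()` reports that the issue no longer reproduces.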

Common Mistakes to Avoid

The biggest mistake is treating "No-Impact" as "No-Action." Teams often silence these alerts to reduce noise, only to find themselves drowning in technical debt months later. Conversely, treating every minor linting error as a blocker causes alert fatigue, leading developers to ignore the tools entirely.

Another pitfall is relying on severity alone. A Minor severity on a payment gateway is different from a Minor severity on a readme file. Context matters. Failing to correlate severity with Impact Areas (like Consumer-facing vs. Internal) leads to poor prioritization. Finally, avoid vague feedback. Telling a developer "this is complex" without showing the Trace of how it complicates the system ensures the advice will be ignored.

Level Up Code Health with CodeAnt AI

Managing severity labels effectively requires more than just a linter; it demands a platform that understands context. CodeAnt AI moves beyond simple suggestions by acting as a true quality gate for your codebase. Unlike standard tools that flood you with unprioritized claims, CodeAnt AI provides evidence-first reviews.

For every issue, CodeAnt AI assigns a Severity Level based on risk and Impact Areas to explain exactly what breaks, whether it's security, reliability, or cost. It goes further by generating Steps of Reproduction and a Trace + Attack Path, showing you exactly how a bug propagates through your system. This transforms code review from a guessing game into a verifiable engineering process, helping you catch both critical bugs and the "no-impact" issues that threaten your long-term velocity.

Conclusion

In 2026, the difference between a high-performing engineering team and a stagnant one often lies in how they handle the small stuff. "No-Impact" severity labels are not just noise; they are early warning signals of technical debt, security risks, and maintainability nightmares. By keeping a close watch on dependencies, configuration drifts, duplication, complexity, and coverage, you can maintain a codebase that is not just functional, but healthy. Prioritize these silent risks today to prevent the loud failures of tomorrow.