AI Code Review

Feb 17, 2026

Leading Platforms That Surface Bugs Before Code Is Merged

Sonali Sood

Founding GTM, CodeAnt AI

Manual code reviews catch only 60% of defects. AI-generated code introduces bugs at 1.7x the rate of human-written code. When breaking changes slip through to production, whether it's a refactored API that breaks three downstream services or a security vulnerability in Copilot-generated code, the cost isn't just technical debt. It's incident response, customer trust, and engineering hours spent firefighting instead of shipping features.

This guide evaluates the leading platforms that surface bugs pre-merge. You'll learn what separates effective pre-merge detection from basic linting and which capabilities actually reduce production incidents for teams managing 100+ developers or microservices complexity.

What Defines a "Leading" Pre-Merge Detection Platform

Not all pre-merge tools are created equal. The difference between a rule-based linter and a context-aware AI platform is the difference between catching syntax errors and preventing architectural drift.

Context Awareness

Leading platforms understand your codebase as a system, not isolated files. They analyze cross-file dependencies, track how changes propagate through microservices, and flag breaking changes that ripple across service boundaries. File-level analysis catches code smells; architectural analysis prevents production incidents.
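To make "changes propagate through microservices" concrete, here is a minimal sketch of transitive impact analysis over a service dependency graph. The service names and the `DEPENDS_ON` map are hypothetical, and this is a plain breadth-first traversal for illustration, not a description of how any particular product builds or queries its graph.

```python
from collections import deque

# Hypothetical service graph: each key depends on the services in its list.
DEPENDS_ON = {
    "checkout": ["payments", "auth"],
    "payments": ["auth"],
    "notifications": ["payments"],
    "auth": [],
}


def impacted_services(changed, depends_on):
    """Return every service that directly or transitively depends on `changed`."""
    # Invert the graph: service -> services that depend on it.
    dependents = {name: [] for name in depends_on}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(service)

    # Breadth-first walk outward from the changed service.
    seen = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, []):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    return sorted(seen)


print(impacted_services("auth", DEPENDS_ON))
# prints ['checkout', 'notifications', 'payments']
```

A file-level tool would look only at the files changed inside `auth`; the traversal shows why `notifications` is also at risk even though it never imports `auth` directly.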

Signal Quality Over Noise

False positive rates matter more than raw issue counts. A platform that surfaces 200 issues per PR, 90% of them irrelevant, trains developers to ignore alerts. The best platforms learn from your codebase, adapt to your standards, and surface only actionable findings.

Integration Depth

Pre-merge detection requires seamless CI/CD integration. Leading platforms run automated checks on every PR, block merges when critical issues are found, and provide inline comments developers can act on without context switching.

Remediation Workflows

Identifying bugs is half the battle. The best platforms suggest fixes, generate patches, and in some cases auto-commit corrections. One-click remediation turns detection into resolution.
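As a rough illustration of what "generate patches" means in practice, the sketch below renders a proposed fix as a unified diff using Python's standard `difflib` module. The file path and the before/after snippets are made up for the example; real platforms produce their suggestions through their own pipelines.

```python
import difflib


def suggest_patch(path, original, fixed):
    """Render a proposed fix as a unified diff a reviewer can apply or reject."""
    diff = difflib.unified_diff(
        original.splitlines(keepends=True),
        fixed.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return "".join(diff)


# Hypothetical finding: a query built by string concatenation.
original = (
    'query = "SELECT * FROM users WHERE id = " + user_id\n'
    "cursor.execute(query)\n"
)
# Hypothetical fix: a parameterized query.
fixed = (
    'query = "SELECT * FROM users WHERE id = %s"\n'
    "cursor.execute(query, (user_id,))\n"
)

print(suggest_patch("app/db.py", original, fixed))
```

Presenting the fix as a diff keeps the developer in control: the patch can be reviewed inline and accepted with one action instead of being rewritten by hand.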

Custom Standards Enforcement

Every organization has unique requirements. Leading platforms let you codify naming conventions, architectural patterns, and security policies as custom guidelines that run alongside built-in checks.
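A custom guideline is ultimately a check that runs against the code in a PR. As a minimal sketch, assuming the organization's rule is "Python function names must be snake_case", the check below uses the standard `ast` module. The rule and the sample source are illustrative; each platform has its own way of declaring such guidelines.

```python
import ast
import re

# Allows leading underscores (including dunder methods such as __init__).
SNAKE_CASE = re.compile(r"^_*[a-z][a-z0-9_]*$")


def naming_violations(source):
    """Return (line, name) pairs for function definitions that are not snake_case."""
    violations = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if not SNAKE_CASE.match(node.name):
                violations.append((node.lineno, node.name))
    return sorted(violations)


sample = "def getUser():\n    pass\n\ndef get_user():\n    pass\n"
print(naming_violations(sample))
# prints [(1, 'getUser')]
```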

CodeAnt AI: Context-Aware Leader in Pre-Merge Detection

CodeAnt AI sets the standard for pre-merge bug detection by combining a semantic dependency graph with AI-powered review automation. While competitors analyze individual files or PRs in isolation, CodeAnt's Codegraph maps your entire codebase, understanding how changes in one service affect dependent systems.

How the Codegraph Catches Cross-Service Breaking Changes

The Codegraph analyzes codebases of 400,000+ files to build a semantic map, tracking function calls, API contracts, database schemas, and service dependencies. When a developer refactors an authentication service, CodeAnt doesn't just check the modified files; it identifies every downstream service that depends on that auth contract.

Real-world example: A fintech team refactored their payment processing API, changing a response field from transaction_id to txn_id. GitHub Copilot and SonarQube flagged no issues because both tools analyzed the PR in isolation. CodeAnt's Codegraph identified that 3 microservices and 12 frontend components depended on the original field name, preventing a production outage.
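The field rename above can be expressed as a simple contract check: compare the old and new response fields, then look up which consumers still read a field that disappeared. The consumer names and their field usage below are hypothetical stand-ins, and the set comparison is only a sketch of the idea, not CodeAnt's implementation.

```python
# Hypothetical data: fields each consumer reads from the payment API response.
CONSUMER_FIELDS = {
    "billing-service": {"transaction_id", "amount"},
    "ledger-service": {"transaction_id"},
    "receipt-widget": {"amount", "currency"},
}


def broken_consumers(old_fields, new_fields, consumer_fields):
    """Map each consumer to the fields it reads that the new response no longer has."""
    removed = set(old_fields) - set(new_fields)
    broken = {}
    for consumer, used in consumer_fields.items():
        missing = sorted(used & removed)
        if missing:
            broken[consumer] = missing
    return broken


old = {"transaction_id", "amount", "currency"}
new = {"txn_id", "amount", "currency"}
print(broken_consumers(old, new, CONSUMER_FIELDS))
# prints {'billing-service': ['transaction_id'], 'ledger-service': ['transaction_id']}
```

The hard part in a real system is building the consumer map, which requires indexing code outside the PR's diff. That is the information a diff-only review does not have.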

This architectural awareness delivers measurable impact:

80% reduction in manual review time by surfacing only high-signal issues

65% fewer security vulnerabilities reaching production

Cross-service impact analysis that prevents breaking changes from merging

Unified Platform vs. Fragmented Toolchains

Most teams cobble together pre-merge detection: SonarQube for quality, Snyk for security, manual reviews for architecture. CodeAnt consolidates this into a single platform:

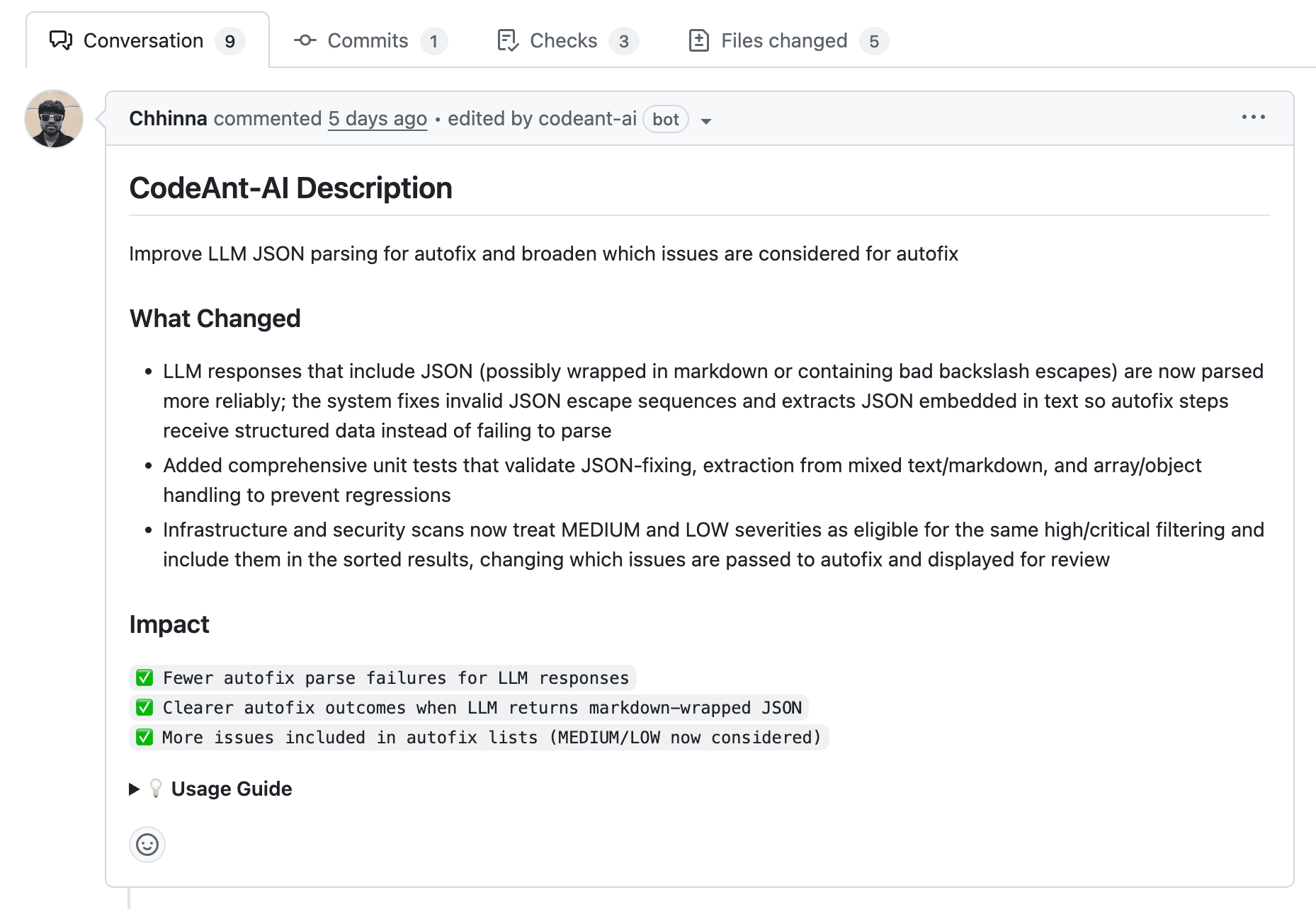

PR review automation with AI-generated summaries and inline suggestions

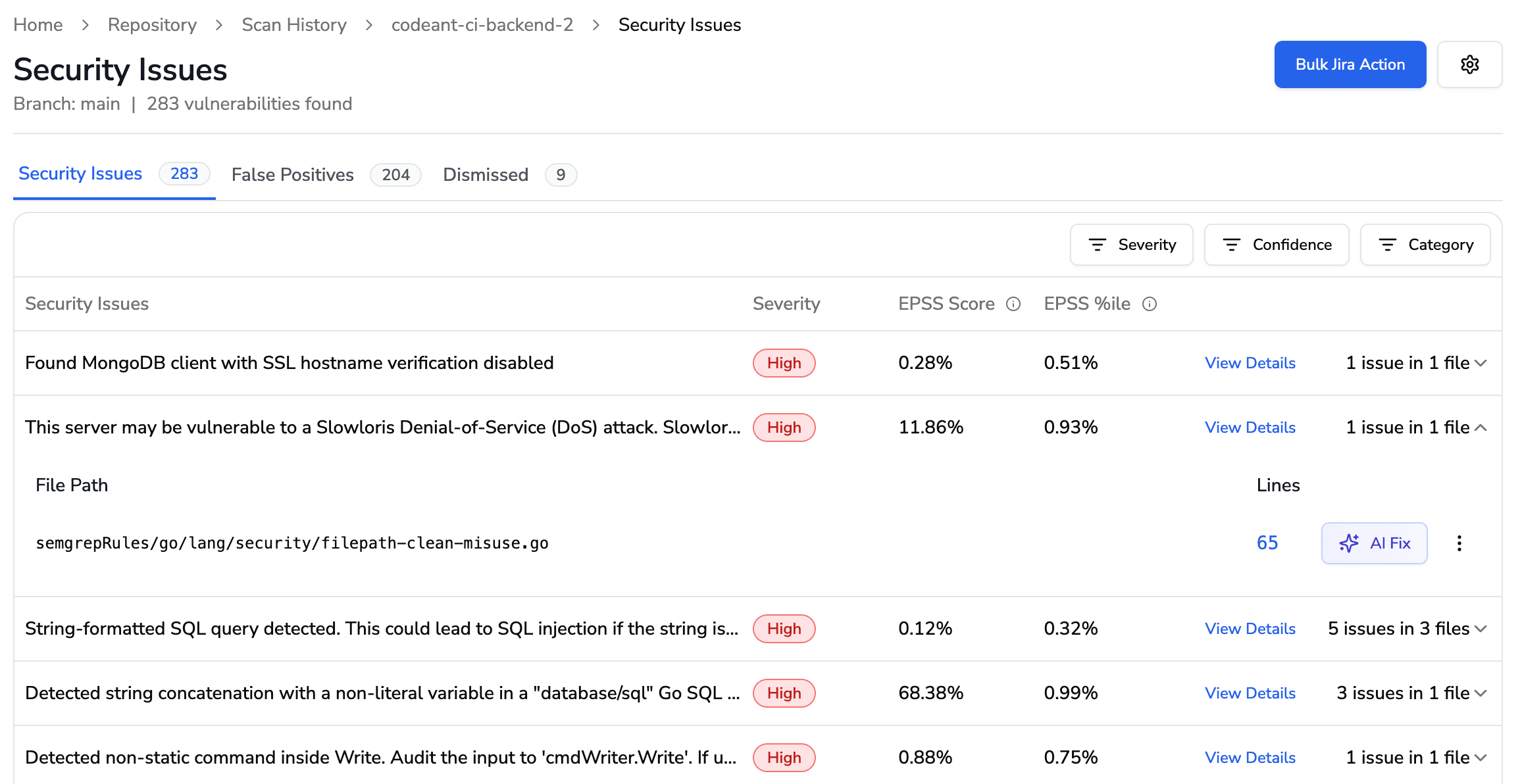

Security scanning for vulnerabilities, secrets, and misconfigurations

Quality gates enforcing complexity, duplication, and coverage thresholds

Custom guidelines codifying organization-specific standards

Engineering analytics tracking DORA metrics and code health trends

The difference isn't just convenience; it's context. A fragmented toolchain analyzes your code through multiple disconnected lenses. CodeAnt's unified platform understands how security findings relate to quality issues and how individual PRs affect system-wide health.

One-click fixes take this further. When CodeAnt flags a security vulnerability or quality issue, it generates a patch and can auto-commit the fix as a suggestion or merge request, eliminating back-and-forth that extends review cycles.

Book your 1:1 with our experts today to see how CodeAnt fits your workflow.

Alternative Platforms: Specialized Use Cases + Trade-offs

While CodeAnt leads in comprehensive pre-merge detection, specific teams may evaluate alternatives based on niche requirements or existing toolchain investments.

GitHub Copilot for Pull Requests: Generation Without Validation

What it does well:

Copilot excels at code generation and IDE integration. It suggests completions, generates boilerplate, and speeds up initial development. For teams already using GitHub, it's a natural extension with zero setup.

Where it falls short:

Copilot generates code; it doesn't validate it. There are no quality gates, no cross-service analysis, no security scanning, and no enforcement of custom standards. It reviews PRs at a surface level without understanding architectural impact.

CodeAnt's advantage: Copilot writes code. CodeAnt ensures it's production-ready. Use them together: let Copilot accelerate development, then run CodeAnt's pre-merge checks to validate security, quality, and architectural consistency.

Check out the best GitHub Copilot alternative.

CodeRabbit: PR-Focused Review Without Codebase-Wide Context

What it does well:

CodeRabbit provides fast PR reviews with learning capabilities, adapting to your team's feedback. It integrates cleanly with GitHub and GitLab, offering inline comments and review summaries.

Where it falls short:

CodeRabbit reviews new code only; it doesn't scan your existing codebase for vulnerabilities or technical debt. It lacks the architectural understanding to catch cross-service breaking changes and doesn't provide engineering analytics like DORA metrics.

CodeAnt's advantage: CodeAnt's Codegraph analyzes your entire codebase, not just the diff. It tracks how changes affect dependent services and surfaces technical debt that accumulates over time.

SonarQube Community Edition: Rule-Based Quality, Not AI-Powered Insight

What it does well:

SonarQube is mature, supports 30+ languages, and provides comprehensive rule-based quality checks. It's widely adopted, well-documented, and offers a free Community Edition.

Where it falls short:

SonarQube's rule-based approach is architecturally blind. It analyzes files in isolation, missing cross-service dependencies and breaking changes that span multiple components. For teams with microservices or distributed architectures, file-level analysis isn't enough.

CodeAnt's advantage: SonarQube catches code smells. CodeAnt catches architectural drift. By combining AI-powered analysis with the Codegraph's semantic understanding, CodeAnt identifies issues that rule-based tools fundamentally cannot detect.

Check out the best SonarQube alternative.

Snyk Code: Security-First, Quality-Second

What it does well:

Snyk Code excels at security scanning, identifying vulnerabilities in dependencies and application code. It integrates with CI/CD pipelines and provides detailed remediation guidance.

Where it falls short:

Snyk is security-focused, not a comprehensive code review platform. It doesn't enforce quality standards, track technical debt, or provide engineering analytics.

CodeAnt's advantage: CodeAnt unifies security and quality in a single platform. You get vulnerability scanning, secret detection, and misconfiguration checks alongside complexity analysis, duplication tracking, and custom guidelines, all with the architectural context that Snyk lacks.

Platform Comparison: Core Capabilities

| Capability | CodeAnt AI | GitHub Copilot | CodeRabbit | SonarQube | Snyk Code |
| --- | --- | --- | --- | --- | --- |
| Cross-service breaking change detection | ✅ Yes (Codegraph) | ❌ No | ❌ No | ❌ No | ❌ No |
| One-click fixes / auto-remediation | ✅ Yes | ❌ No | ⚠️ Limited | ⚠️ Limited | ⚠️ Limited |
| Custom guidelines enforcement | ✅ Yes | ❌ No | ⚠️ Basic | ✅ Yes | ❌ No |
| Engineering analytics (DORA metrics) | ✅ Yes | ❌ No | ❌ No | ⚠️ Limited | ❌ No |
| Existing codebase scanning | ✅ Yes | ❌ No | ❌ No | ✅ Yes | ✅ Yes |
| AI-powered review automation | ✅ Yes | ✅ Yes | ✅ Yes | ❌ No | ❌ No |
| Security + quality unified | ✅ Yes | ❌ No | ❌ No | ⚠️ Quality only | ⚠️ Security only |

Key takeaway: CodeAnt is the only platform combining architectural understanding, one-click remediation, and unified security + quality + analytics. Competitors excel in narrow domains but require fragmented toolchains to match CodeAnt's comprehensive capabilities.

Choosing the Right Platform for Your Team

The best pre-merge bug detection platform depends on your team's size, codebase complexity, and workflow requirements.

When CodeAnt Is the Clear Choice

Ideal for:

Teams with 100+ developers managing microservices or distributed architectures

Organizations with polyglot codebases (multiple languages, frameworks, and services)

Engineering teams validating AI-generated code from Copilot, Cursor, or similar tools

Companies with security or compliance requirements (SOC2, HIPAA, PCI-DSS)

Teams seeking unified code health visibility across review, security, quality, and productivity

ROI comparison: CodeAnt costs ~$24/user/month, or roughly $1,200/month for a 50-developer team. A fragmented toolchain (SonarQube + Snyk + manual reviews) costs $800-1,500/month for a team of the same size, before counting integration overhead and the context-switching tax. CodeAnt consolidates this into a single platform with deeper insights and faster remediation.

How to Evaluate Platforms in Your Environment

Don't rely on marketing claims; test platforms on your real codebase:

Test on a complex PR: Choose a PR touching multiple services or introducing architectural changes. Run it through each platform and compare what they catch.

Measure false positive rate: Count actionable issues vs. noise. A platform surfacing 50 high-signal issues beats one surfacing 200 low-signal issues.

Assess integration complexity: How long does setup take? Does it work out of the box?

Validate custom guidelines support: Can you enforce your organization's specific standards?

Check remediation workflows: Does the platform just identify issues, or suggest fixes and generate patches?

Start with CodeAnt's 14-day trial: Try CodeAnt on your most complex PR and see the difference context-aware AI makes. Book your 1:1 with our experts today to discuss your specific requirements.

Practical Evaluation Checklist

Select Representative Test Cases

Choose 2-3 PRs that reflect your team's real work patterns:

A refactor PR: Tests cross-file context awareness and breaking change detection

A dependency bump: Validates security and compatibility detection

A security-sensitive change: Evaluates threat modeling capabilities

Define Measurement Criteria

| Metric | Target Threshold |
| --- | --- |
| True Positives | ≥70% of flagged issues should be actionable |
| False Positives | ≤30% of total findings |
| Time-to-Fix | <5 min per issue for common patterns |
| CI Runtime Impact | <2 min for typical PR analysis |
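The first two thresholds can be computed from a simple triage log. The sketch below assumes each finding from the trial PRs has been hand-labeled as either "actionable" or "noise"; the label names and function names are illustrative choices, not part of any tool.

```python
def triage_metrics(labels):
    """Summarize triaged findings; each label is 'actionable' or 'noise'."""
    total = len(labels)
    if total == 0:
        return {"total": 0, "actionable_share": None, "noise_share": None}
    actionable = sum(1 for label in labels if label == "actionable")
    return {
        "total": total,
        "actionable_share": actionable / total,
        "noise_share": (total - actionable) / total,
    }


def meets_targets(metrics, min_actionable=0.70, max_noise=0.30):
    """Check the true-positive and false-positive thresholds from the table."""
    if metrics["total"] == 0:
        return False  # nothing was flagged, so there is nothing to judge
    return (
        metrics["actionable_share"] >= min_actionable
        and metrics["noise_share"] <= max_noise
    )


labels = ["actionable"] * 7 + ["noise"] * 3
summary = triage_metrics(labels)
print(summary, meets_targets(summary))
```

Because every finding is either actionable or noise in this model, the two thresholds are complements of each other; tracking both mainly guards against labeling mistakes.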

Tune Policies and Suppressions

Every codebase has unique patterns. Example CodeAnt configuration:
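As a tool-agnostic illustration of what such tuning does (this is not CodeAnt's configuration format, which should be taken from its documentation), the sketch below applies a hypothetical policy of ignored paths, disabled rules, and a minimum severity to a list of findings. Every key, rule name, and path here is an assumption made for the example.

```python
from fnmatch import fnmatchcase

# Hypothetical policy; keys and rule names are illustrative, not a real tool's schema.
POLICY = {
    "ignore_paths": ["vendor/*", "*_pb2.py", "migrations/*"],
    "disabled_rules": {"line-too-long"},
    "min_severity": "medium",
}
SEVERITY_ORDER = ["low", "medium", "high", "critical"]


def apply_policy(findings, policy):
    """Drop findings in ignored paths, from disabled rules, or below the severity floor."""
    threshold = SEVERITY_ORDER.index(policy["min_severity"])
    kept = []
    for finding in findings:
        if any(fnmatchcase(finding["path"], p) for p in policy["ignore_paths"]):
            continue  # generated or vendored code
        if finding["rule"] in policy["disabled_rules"]:
            continue  # rule the team has opted out of
        if SEVERITY_ORDER.index(finding["severity"]) < threshold:
            continue  # below the reporting floor
        kept.append(finding)
    return kept


findings = [
    {"path": "vendor/lib/util.py", "rule": "sql-injection", "severity": "high"},
    {"path": "app/api.py", "rule": "line-too-long", "severity": "medium"},
    {"path": "app/api.py", "rule": "unused-import", "severity": "low"},
    {"path": "app/db.py", "rule": "sql-injection", "severity": "critical"},
]
print(apply_policy(findings, POLICY))
```

Only the `app/db.py` finding survives. Reviewing what a policy filters out during the pilot is as important as reviewing what it keeps, since an over-broad suppression can hide real issues.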

Integrate with Branch Protection

Once you've validated accuracy, enforce checks at the merge gate:
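Assuming the repository is hosted on GitHub, one way to do this is through branch protection with required status checks. The sketch below only builds the request body for GitHub's "update branch protection" REST endpoint (`PUT /repos/{owner}/{repo}/branches/{branch}/protection`); it sends nothing. The check name `code-review-bot` is a placeholder and must be replaced with the exact status check name your review tool reports on PRs.

```python
import json


def branch_protection_payload(required_checks, approvals=1):
    """Build a request body for GitHub's "update branch protection" endpoint."""
    return {
        "required_status_checks": {
            "strict": True,  # branch must be up to date with base before merging
            "contexts": list(required_checks),
        },
        "enforce_admins": True,  # admins are subject to the same checks
        "required_pull_request_reviews": {
            "required_approving_review_count": approvals,
        },
        "restrictions": None,  # no restrictions on who can push
    }


# "code-review-bot" is a placeholder status check name.
payload = branch_protection_payload(["code-review-bot"])
print(json.dumps(payload, indent=2))
```

Other hosts such as GitLab and Bitbucket expose equivalent merge checks through their own settings and APIs.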

Recommended rollout:

Week 1-2: Advisory mode (checks run, but don't block)

Week 3-4: Soft block on security/critical issues only

Week 5+: Full enforcement with tuned policies
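The phased rollout can be written down as a small decision function, which makes the policy easy to review and test. This is a sketch under stated assumptions: findings are reduced to category labels, "security" and "critical" are the only labels that trigger the soft block, and full enforcement means any finding that survives your tuned policy blocks the merge.

```python
BLOCKING_IN_SOFT_PHASE = {"security", "critical"}


def should_block(week, finding_labels):
    """Decide whether a PR is blocked under the phased rollout."""
    if week <= 2:
        return False  # advisory mode: report only
    if week <= 4:
        # soft block: only security/critical findings stop the merge
        return any(label in BLOCKING_IN_SOFT_PHASE for label in finding_labels)
    # full enforcement: any remaining finding blocks
    return len(finding_labels) > 0


print(should_block(1, ["critical"]))           # prints False
print(should_block(3, ["style", "security"]))  # prints True
print(should_block(5, ["style"]))              # prints True
```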

Make Pre-Merge Detection a Required Gate

The leading platforms that surface bugs pre-merge share four defining traits: context-aware detection that understands your architecture, high-precision signals that reduce alert fatigue, enforceable gates that block risky changes, and fast remediation workflows that keep velocity high.

Run a Focused 2-Week Pilot

Before rolling out platform-wide:

Select 3 representative PRs: a refactor touching multiple services, a dependency upgrade, and a security-sensitive change

Measure precision: Track true positives vs. false positives, and aim for more than 80% of findings being actionable

Benchmark time-to-fix: Compare remediation speed against your current review process

Enable as a required check: Start with high-risk repos before expanding

Assess Workflow Fit with CodeAnt AI

If you're managing 100+ developers or dealing with microservices complexity, CodeAnt AI unifies pre-merge review, security scanning, and code quality enforcement in a single platform. It integrates directly into your PR workflow, enforces org-specific standards, and delivers actionable fixes that don't slow down shipping.

Start a 14-day free trial to run the pilot on your actual codebase and evaluate integrations, policy enforcement, and detection accuracy against your team's real PRs, not synthetic demos.