Feb 9, 2026

How to Prevent AI Code Review from Overwhelming Developers

Sonali Sood

Founding GTM, CodeAnt AI

AI code generation has unlocked unprecedented velocity: your team ships features faster than ever. But there's a hidden cost. PR queues are exploding, AI review tools flag hundreds of issues per week, and senior engineers drown in noise instead of focusing on architecture.

The promise was "AI speeds up reviews." For many teams, the reality is review paralysis.

The problem isn't AI itself; it's how most tools operate. They treat every change equally, lack codebase context, and generate mountains of generic feedback that developers learn to ignore. When 90% of AI comments are false positives or style nitpicks, the 10% that matter (security gaps, architectural risks) get lost in the noise.

The solution isn't adding more tools or hiring more reviewers. It's implementing a framework that prioritizes by risk, learns your conventions, and automates fixes instead of just flagging problems. This guide shows how engineering teams handle AI-generated code velocity without burning out reviewers.

The AI Review Paradox: Why Automation Creates Burnout

Your team adopted GitHub Copilot six months ago. Developer velocity shot up: PRs jumped from 40 to 150 per week. You added an AI code reviewer to keep pace. Instead of relief, you got chaos.

The typical breakdown:

A senior engineer opens their review queue Monday morning to find 23 PRs waiting. The AI reviewer left 187 comments. Twelve flag the same async/await pattern your team standardized two years ago. Fifteen suggest refactors that break existing integrations. Eight identify legitimate security issues—buried in noise. The engineer spends 90 minutes triaging AI feedback before reviewing a single line of logic.

By Wednesday, the team rubber-stamps approvals. The AI cried wolf too many times. A credentials leak makes it to production because the warning was comment #43 in a PR with 60 AI-generated nitpicks about variable naming.

What Overload Actually Means

Review overload shows up in measurable workflow degradation:

Comment explosion: 200-400 AI comments per week, with 70-90% ignored as false positives

Time-to-merge inflation: Median PR merge time increases from 6 hours to 2-3 days

Ignored feedback: When tools flag everything equally, developers dismiss all feedback, including the 10% that matters

Rework cycles: Context-free comments trigger three-round debates instead of one-cycle fixes

Misallocated expertise: Senior engineers spend 40% of review time triaging AI noise instead of evaluating logic

The paradox is complete: AI was supposed to free senior engineers. Instead, it created a new category of toil: managing the AI itself.

Four Failure Modes That Create Review Overload

1. Context-Blind Feedback

Generic AI tools analyze code in isolation, applying universal rules without understanding your architectural decisions or conventions. A PR refactoring your authentication layer gets the same boilerplate "consider adding error handling" as a README typo fix.

This happens because most tools operate on static rules or LLMs trained on public repos, not your codebase. They can't distinguish between legitimate architectural patterns you've adopted and genuine anti-patterns.

Consequence: Developers learn to dismiss AI feedback entirely. When 80% of comments are irrelevant, the 20% of legitimate issues get ignored too.

2. No Prioritization

Tools that treat a missing semicolon with the same urgency as an exposed API key create a prioritization crisis. Without risk-based scoring, developers face an impossible choice: spend hours addressing every flag (and miss deadlines), or ignore the noise and hope nothing important was buried in it.

When AI code generation increases PR volume 3-5x, a tool flagging 40 issues per PR passes 200 comments after only five PRs, and the weekly total climbs from there. No human can process that volume while maintaining quality judgment.

Consequence: Review queues grow despite AI "assistance." Senior engineers become bottleneck managers, not force multipliers.

3. Detection Without Remediation

Many tools excel at finding issues but provide zero help fixing them. "Potential SQL injection vulnerability detected" without context, examples, or fixes creates a research task. Developers must understand why it's flagged, research remediation, implement the fix, verify functionality, and wait for re-review.

Consequence: "AI toil," where automation creates more manual work instead of less. Fix cycles lengthen from one round to three or four.

4. Tool Sprawl and Duplicate Alerts

When AI review exists as a separate dashboard disconnected from your PR workflow, it introduces context-switching overhead. Developers check GitHub for human comments, a separate SonarQube dashboard for quality, another Snyk interface for security. Each tool operates in a silo, reporting the same issue three different ways.

Consequence: Review fatigue. Developers batch PR checks instead of addressing feedback immediately, delaying merges and increasing conflict risk.

Five Strategies to Prevent Overload

Strategy 1: Prioritize by Risk, Not Volume

Implement risk-based review routing that automatically categorizes PRs by impact. Authentication changes and API modifications get deep AI + human scrutiny. Documentation updates and formatting fixes fast-track through automated checks.

Why it works: Uniform review depth is the fastest path to cognitive overload. When every PR demands equal attention, developers either burn out or rubber-stamp everything—and critical issues slip through.

How to roll it out:

Define risk categories in your CI config:
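As a minimal sketch of what such categories might look like, here is a small Python script a CI job could run against a PR's changed files. It is not CodeAnt AI's implementation or any CI system's config syntax: the three tier names, the path patterns, the keyword list, and the ChangedFile type are all illustrative assumptions.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase

# Hypothetical path patterns per tier; adjust to your repository layout.
HIGH_RISK_PATHS = ["auth/*", "api/*", "*/migrations/*"]
LOW_RISK_PATHS = ["docs/*", "*.md"]

# Hypothetical content signals that escalate a change regardless of its path.
HIGH_RISK_KEYWORDS = ("password", "token", "authenticate", "secret")


@dataclass
class ChangedFile:
    path: str
    added_lines: list[str]


def classify_file(changed: ChangedFile) -> str:
    """Return 'high', 'medium', or 'low' for a single changed file."""
    text = "\n".join(changed.added_lines).lower()
    # Content is checked before paths, so a sensitive keyword escalates any file.
    if any(word in text for word in HIGH_RISK_KEYWORDS):
        return "high"
    if any(fnmatchcase(changed.path, pat) for pat in HIGH_RISK_PATHS):
        return "high"
    if any(fnmatchcase(changed.path, pat) for pat in LOW_RISK_PATHS):
        return "low"
    return "medium"


def classify_pr(files: list[ChangedFile], manual_escalation: bool = False) -> str:
    """A PR takes the highest tier of any file; a reviewer can always escalate."""
    if manual_escalation:
        return "high"
    order = {"low": 0, "medium": 1, "high": 2}
    tiers = [classify_file(f) for f in files] or ["low"]
    return max(tiers, key=order.__getitem__)
```

Because content is checked before paths, a one-line authentication change that lands in a documentation directory is still routed to the high tier, and the manual_escalation flag keeps a human override available.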

Integrate with branch protection rules and use platforms like CodeAnt AI that automatically analyze PR content, not just file paths, to assess risk. A one-line change to an authentication function gets flagged as high-risk even in a "low-risk" directory.

Avoid: Over-categorization (start with 3 levels), static rules only (file paths miss context), no manual escalation option.

Strategy 2: Eliminate False Positives Through Learning

Deploy AI that learns your architectural patterns, conventions, and historical decisions, not just generic best practices. The goal is to move AI comments from "90% ignored" to "90% actioned."

Why it works: Generic tools treat every codebase like strangers wrote it. They flag your deliberate architectural choices as "issues." After 50 irrelevant comments, developers stop reading, and real bugs get missed.

How to roll it out:

Connect your AI platform to your repo history (3-6 months of commits)

Start conservative (fewer comments, higher confidence), increase coverage as trust builds

Create a feedback loop: when developers dismiss comments, capture that signal

Document team conventions explicitly:

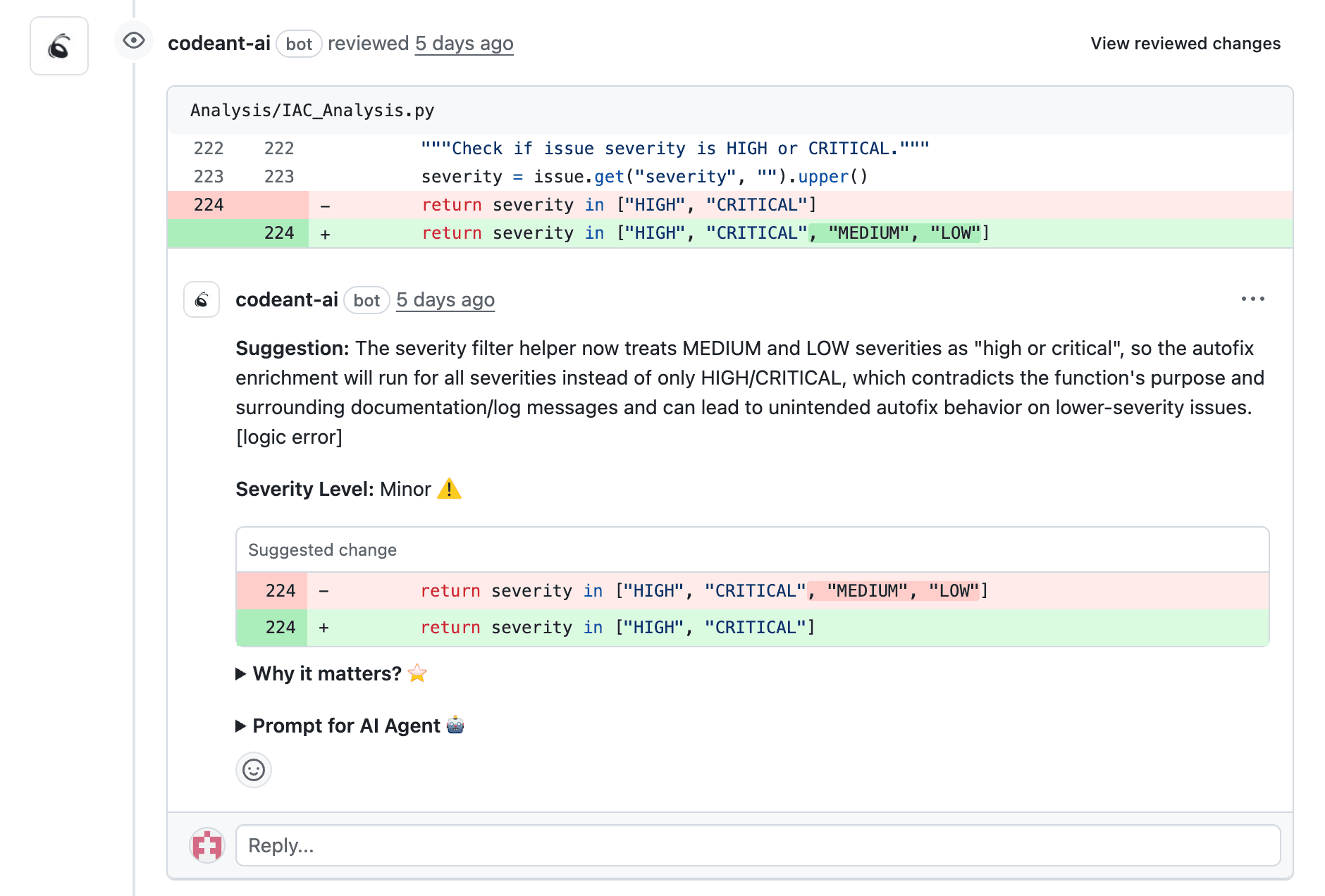
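The feedback loop in the steps above can be sketched in a few lines of Python. This is a hypothetical illustration rather than how any particular tool stores dismissals: the DismissalTracker class, the rule IDs, and both thresholds (at least 20 samples, more than 80% dismissed) are assumptions you would tune.

```python
from collections import defaultdict


class DismissalTracker:
    """Counts how often each rule's comments are dismissed vs. acted on."""

    def __init__(self, min_samples: int = 20, max_dismiss_rate: float = 0.8):
        self.min_samples = min_samples          # assumed: wait for enough evidence
        self.max_dismiss_rate = max_dismiss_rate  # assumed cut-off for "noise"
        self._counts = defaultdict(lambda: {"dismissed": 0, "actioned": 0})

    def record(self, rule_id: str, dismissed: bool) -> None:
        """Record one developer decision on a comment produced by rule_id."""
        key = "dismissed" if dismissed else "actioned"
        self._counts[rule_id][key] += 1

    def _totals(self, rule_id: str) -> tuple[int, int]:
        c = self._counts.get(rule_id, {"dismissed": 0, "actioned": 0})
        return c["dismissed"], c["dismissed"] + c["actioned"]

    def dismiss_rate(self, rule_id: str) -> float:
        dismissed, total = self._totals(rule_id)
        return dismissed / total if total else 0.0

    def should_suppress(self, rule_id: str) -> bool:
        """Suppress a rule only once enough decisions show it is mostly ignored."""
        _, total = self._totals(rule_id)
        return total >= self.min_samples and self.dismiss_rate(rule_id) > self.max_dismiss_rate
```

Requiring a minimum sample size keeps a rule from being silenced after a handful of dismissals, which matches the advice to start conservative and expand as trust builds.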

Competitive context: SonarQube and traditional linters flag violations against fixed rulesets; they can't learn whether your team's patterns are intentional. CodeAnt AI analyzes your entire codebase to understand your conventions, reducing false positives by 70-80%.

Strategy 3: Provide Context, Not Just Criticism

Ensure every AI comment includes codebase context explaining why it matters and where it impacts the system. Replace "This function is too complex" with "This function is called by 12 modules across 4 services; increasing complexity will make debugging harder in auth flows, payment processing, and user onboarding."

Why it works: Context-free comments create review ping-pong. A single unclear comment adds 2-3 days to review cycles. Context-rich comments enable one-pass understanding and fixes.

How to roll it out:

Require impact analysis in comments. Every comment should answer:

What's the issue?

Why does it matter in this codebase?

What's the blast radius?

Use AI that understands cross-file dependencies and automatically surfaces which functions call this code, which services depend on this API, and which tests will break.

Example:
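One way to enforce the three questions is to make them required fields, so a comment that lacks an answer cannot be posted. The sketch below is a hypothetical Python illustration; the ReviewComment class and its field names are assumptions, and the sample values reuse the wording from the complexity example earlier in this section.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewComment:
    issue: str                 # What's the issue?
    why_it_matters: str        # Why does it matter in this codebase?
    blast_radius: list[str] = field(default_factory=list)  # What depends on it?

    def is_complete(self) -> bool:
        """A comment is postable only if all three questions are answered."""
        return bool(self.issue.strip() and self.why_it_matters.strip() and self.blast_radius)

    def render(self) -> str:
        if not self.is_complete():
            raise ValueError("comment is missing issue, rationale, or blast radius")
        affected = ", ".join(self.blast_radius)
        return (
            f"Issue: {self.issue}\n"
            f"Why it matters: {self.why_it_matters}\n"
            f"Blast radius: {affected}"
        )


comment = ReviewComment(
    issue="This function is too complex",
    why_it_matters="It is called by 12 modules across 4 services",
    blast_radius=["auth flows", "payment processing", "user onboarding"],
)
print(comment.render())
```

A comment with an empty blast radius raises ValueError instead of rendering, which is the point: context-free criticism is rejected before it reaches the PR.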

Integration point: CodeAnt's comments automatically include codebase context by analyzing repo structure in real-time, showing where code is used, what depends on it, and why the change matters.

Strategy 4: Automate Fixes, Not Just Detection

Deploy AI tools that generate one-click fixes for detected issues. Shift from "here's what's wrong" to "here's what's wrong and here's the fix, approve to apply."

Why it works: Detection-only tools shift work; they don't eliminate it. If AI flags 50 issues and developers manually fix all 50, you've added 50 tasks to their queue. Auto-remediation flips the equation: AI detects the issue, generates a fix, and presents it for review. The developer approves in seconds.

How to roll it out:

Start with low-risk, high-volume fixes:

Adding null checks

Updating deprecated API calls

Fixing formatting violations

Applying security patches

Integrate with PR workflow:

Require human approval for high-risk fixes. Track fix acceptance rates—low acceptance signals the AI needs tuning.
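The approval rule and the acceptance-rate signal can be sketched as follows. This is a hypothetical Python illustration, not a real PR-integration API: the FixProposal type, the "low"/"high" risk labels, and the 50% tuning threshold are assumptions.

```python
from dataclasses import dataclass


@dataclass
class FixProposal:
    rule_id: str
    risk: str                  # "low" or "high"; labels are illustrative
    tests_pass: bool           # test result on the branch with the fix applied
    human_approved: bool = False


def can_apply(fix: FixProposal) -> bool:
    """Never apply over failing tests; anything above low risk needs a human."""
    if not fix.tests_pass:
        return False
    if fix.risk != "low":
        return fix.human_approved
    return True


def acceptance_rate(decisions: list[bool]) -> float:
    """Share of proposed fixes that developers accepted (True) vs. rejected."""
    return sum(decisions) / len(decisions) if decisions else 0.0


def needs_tuning(decisions: list[bool], threshold: float = 0.5) -> bool:
    """Flag a rule whose fixes are rejected more often than the assumed threshold."""
    return bool(decisions) and acceptance_rate(decisions) < threshold
```

Checking test results first means a failing suite blocks even an approved fix, which covers the "ignoring test failures" pitfall listed in this section.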

Avoid: Auto-applying breaking changes, no diff visibility, ignoring test failures.

Competitive context: SonarQube, Snyk, and most linters detect but don't fix. CodeAnt AI generates one-click fixes directly in the PR interface: 80% of issues come with auto-remediation, cutting manual effort by 75%.

Strategy 5: Measure with Developer Analytics

Implement continuous monitoring of review metrics to diagnose overload before burnout. Track PR velocity, review time distribution, bottleneck reviewers, and comment-to-action ratios.

Why it works: You can't fix what you can't see. Most teams don't realize they have overload until developers complain or disengage. Analytics surface the problem early: "Review queue grew 40%," "Sarah reviews 3x more than anyone," "60% of AI comments dismissed."

How to roll it out:

Define baseline metrics:

PR velocity (opened vs. merged per week)

Review time (time to first review and time to final merge)

Review distribution per team member

Comment quality (actioned vs. dismissed)

DORA metrics (deployment frequency, lead time, change failure rate)

Set up automated dashboards and review weekly with leads. Act on the data: redistribute workloads, tune thresholds, adjust risk categorization.

Avoid: Vanity metrics without outcomes, dashboards without follow-up, blaming individuals instead of fixing systems.
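Three of the baseline metrics above are simple to compute once PR data is exported. The sketch below is a minimal Python illustration under assumed inputs: the PullRequest record and its field names are hypothetical and do not correspond to any specific platform's export format.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class PullRequest:
    opened_at: datetime
    merged_at: Optional[datetime]  # None while the PR is still open
    reviewer: str
    ai_actioned: int               # AI comments that led to a change
    ai_dismissed: int              # AI comments dismissed without action


def median_hours_to_merge(prs: list[PullRequest]) -> Optional[float]:
    """Median open-to-merge time in hours, over merged PRs only."""
    hours = [
        (p.merged_at - p.opened_at).total_seconds() / 3600
        for p in prs
        if p.merged_at is not None
    ]
    return median(hours) if hours else None


def dismissal_rate(prs: list[PullRequest]) -> float:
    """Fraction of all AI comments that developers dismissed."""
    dismissed = sum(p.ai_dismissed for p in prs)
    total = dismissed + sum(p.ai_actioned for p in prs)
    return dismissed / total if total else 0.0


def review_share(prs: list[PullRequest]) -> dict[str, float]:
    """Fraction of PRs handled by each reviewer, to spot bottlenecks."""
    counts = Counter(p.reviewer for p in prs)
    return {name: n / len(prs) for name, n in counts.items()}
```

Reporting shares per reviewer rather than ranking individuals keeps the focus on load distribution, in line with the warning against blaming people instead of fixing systems.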

| Strategy | Impact Timeline | Implementation Effort |
| --- | --- | --- |
| Risk-based prioritization | 1-2 weeks | Medium |
| Codebase learning | 2-4 weeks | Low |
| Context-rich comments | Immediate | Low |
| Auto-remediation | 1 week | Medium |
| Developer analytics | Ongoing | Low |

How CodeAnt AI Prevents Overload by Design

CodeAnt isn't another AI code review tool contributing to noise; it's architected to handle AI-generated code velocity without overwhelming teams.

Codebase Context and History Awareness

CodeAnt ingests your entire codebase history, architectural patterns, team conventions, and cross-module dependencies to deliver comments that make sense in your specific context.

What it analyzes:

Change surface area and sensitive module detection

Dependency diff intelligence for CVEs and breaking changes

IaC and configuration misconfigurations

Context-aware secrets pattern matching

Instead of "Potential SQL injection risk," you get:

⚠️ High Risk: SQL query construction without parameterization

This endpoint accepts user input (req.query.userId) and builds raw SQL.

Similar endpoints in /api/v2/users use parameterized queries via db.query().

Blast radius: 3 public API routes

Suggested fix: Use parameterized query pattern from v2 endpoints
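To make the suggested fix concrete, here is a minimal sketch of a parameterized query. It uses Python's standard sqlite3 module rather than the stack in the sample comment (db.query() and req.query.userId belong to that example, not to this code), and the users table and find_user helper are hypothetical.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, user_id: str):
    # The ? placeholder makes the driver bind user_id as data, never as SQL text.
    cur = conn.execute("SELECT id, name FROM users WHERE id = ?", (user_id,))
    return cur.fetchone()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id TEXT PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users VALUES (?, ?)", ("42", "Ada"))

print(find_user(conn, "42"))             # ('42', 'Ada')
print(find_user(conn, "42' OR '1'='1"))  # None: the payload is compared as a literal string
```

Had the query been built by string concatenation, the second input would have turned the WHERE clause into a condition that is always true; with binding, it simply matches no row.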

Risk-Based Prioritization and Intelligent Filtering

CodeAnt's risk scoring automatically triages every PR into priority tiers, ensuring senior engineers spend time on high-impact reviews while low-risk changes flow through quickly.

Risk dimensions:

Security surface (auth, secrets, input validation) → +3 levels

Architectural impact (breaking changes, API contracts) → +2 levels

Deployment risk (IaC, environment config) → +2 levels

Code complexity and test coverage → +1 level each
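The additive scheme above can be sketched in Python. The weights are taken from the list; everything else is an assumption made for illustration, including the signal names, the tier labels, and the cut-offs that map a level to a tier, none of which the source specifies.

```python
# Weights copied from the risk dimensions listed above.
WEIGHTS = {
    "security_surface": 3,       # auth, secrets, input validation
    "architectural_impact": 2,   # breaking changes, API contracts
    "deployment_risk": 2,        # IaC, environment config
    "high_complexity": 1,
    "low_test_coverage": 1,
}


def risk_level(signals: set[str]) -> int:
    """Sum the levels contributed by every signal detected on a PR."""
    unknown = signals - set(WEIGHTS)
    if unknown:
        raise ValueError(f"unknown risk signals: {sorted(unknown)}")
    return sum(WEIGHTS[s] for s in signals)


def priority_tier(level: int) -> str:
    """Map a level to a review tier; these thresholds are assumed, not documented."""
    if level >= 5:
        return "critical"
    if level >= 3:
        return "high"
    if level >= 1:
        return "medium"
    return "low"


pr_signals = {"security_surface", "deployment_risk"}
print(risk_level(pr_signals), priority_tier(risk_level(pr_signals)))  # 5 critical
```

With these cut-offs, a security-sensitive change always lands in at least the high tier on its own, while a PR that only raises complexity stays at medium.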

Intelligent filtering reduces comment volume 70% by eliminating false positives, consolidating duplicates, suppressing low-impact suggestions, and tracking already-addressed issues.

Remediation Suggestions and One-Click Fixes

When CodeAnt AI detects a hardcoded API key:

🔒 Critical: Production API key hardcoded in config file

This key has write access to Stripe.

Your team uses environment variables (see .env.example).

One-click fix:

1. Move to: STRIPE_API_KEY

2. Update config to: ENV['STRIPE_API_KEY']

3. Add to .gitignore

4. Rotate exposed key

[Apply Fix] [Dismiss]

Clicking "Apply Fix" automatically refactors the code, updates .gitignore, creates a follow-up task, and commits the changes.
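The sample fix uses ENV['STRIPE_API_KEY']; for a Python codebase, an equivalent pattern might look like the sketch below. The load_stripe_key helper is hypothetical, and failing fast when the variable is missing is a design choice made here, not something the source prescribes.

```python
import os


def load_stripe_key() -> str:
    """Read the key from the environment instead of a committed config file."""
    key = os.environ.get("STRIPE_API_KEY")
    if not key:
        # Fail at startup rather than sending unauthenticated requests later.
        raise RuntimeError("STRIPE_API_KEY is not set")
    return key
```

Moving the key out of the repository does not undo the exposure, which is why rotating the leaked key remains a separate step in the fix.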

Unified Code Health View

CodeAnt consolidates review, security, quality, and productivity into a single platform. Every PR shows:

Review status with AI summary and risk score

Security findings (vulnerabilities, secrets, misconfigurations)

Quality metrics (complexity, duplication, coverage)

Productivity impact (estimated review time, DORA contribution)

This prevents context-switching tax across multiple tools.

Getting Started: 2-Week Implementation

Week 1: Connect and Baseline

Day 1-2: OAuth setup, select 2-3 high-velocity repos, CodeAnt AI scans history and conventions

Day 3-5: Establish a baseline: review cycle time, manual review load, security findings, developer sentiment

Day 6-7: Observe CodeAnt in learning mode without changing team behavior

Week 2: Tune and Measure

Day 8-10: Adjust risk scoring, auto-approval rules, false positive tuning

Day 11-14: Compare baseline to current metrics:

40-60% review cycle reduction (13 hours → 6 hours)

70% less triage time, 70% more architectural review

3x more vulnerabilities caught in PR vs. production

80%+ AI fix acceptance rate

Beyond Week 2

Monitor monthly:

DORA metrics correlation

Contributor balance (is review load distributed?)

Technical debt trends

CodeAnt's built-in analytics surface these patterns automatically.

From Noise to Signal

The shift from "review everything" to "focus human attention where it matters" is a fundamental rethinking of how AI should support code review. By implementing risk-based prioritization, contextual filtering, auto-fixes, and continuous calibration, you transform AI from a noise generator into a force multiplier.

Your execution checklist:

Establish risk lanes: Route critical changes to senior reviewers, routine changes to automation

Set a noise budget: Cap AI comments at 5 actionable items per PR

Calibrate false positives: Review dismissed suggestions weekly, tune to your codebase

Enforce context-rich comments: Require AI to explain why and link to standards

Automate fixes: Apply one-click remediation for 80% of issues

Measure continuously: Track noise rate, time-to-merge, risk distribution
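The noise budget in the checklist can be sketched as a small filter that removes duplicates and keeps only the most severe findings. This is a hypothetical Python illustration: the Finding type, the severity labels, and the choice to deduplicate on rule, file, and line are assumptions; only the cap of 5 comes from the checklist.

```python
from dataclasses import dataclass

# Lower rank sorts first; labels are illustrative.
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass(frozen=True)
class Finding:
    rule_id: str
    file: str
    line: int
    severity: str
    message: str


def apply_noise_budget(findings: list[Finding], budget: int = 5) -> list[Finding]:
    """Drop duplicate findings, then keep the `budget` most severe ones."""
    seen = set()
    unique = []
    for f in findings:
        key = (f.rule_id, f.file, f.line)
        if key not in seen:
            seen.add(key)
            unique.append(f)
    # sorted() is stable, so findings of equal severity keep their original order.
    ranked = sorted(unique, key=lambda f: SEVERITY_RANK[f.severity])
    return ranked[:budget]
```

In practice the findings that fall outside the budget are better collapsed into a single summary line than silently discarded, so a PR with more than five serious issues still signals that more remain.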

Ready to reclaim your team's focus? Start your 14-day free trial and see how context-aware AI code review eliminates overwhelm while catching the issues that matter.