Dec 21, 2025

How to Reduce Code Review Fatigue: A 7-Step Guide for Development Teams

Sonali Sood

Founding GTM, CodeAnt AI

Code reviews are supposed to protect quality, not drain the life out of your engineering team. Yet as organizations scale, reviewers often find themselves stuck in a loop of repetitive checks, long PR queues, unclear expectations, and constant context-switching. The result is code review fatigue: a state of mental exhaustion that lowers feedback quality, slows delivery, and quietly hurts engineering culture.

If your team is rubber-stamping approvals, leaving PRs open for days, or complaining about “too many reviews,” you’re not alone, and you’re not stuck. This guide provides a practical, actionable system any engineering team can use to reduce review fatigue and create a more sustainable, high-quality code review process.

What Is Code Review Fatigue?

Code review fatigue is the mental and cognitive strain developers experience when they face high-volume, repetitive, or unclear pull request review expectations. Fatigue leads to:

rushed or shallow reviews

inconsistent feedback

overlooked bugs

growing PR queues

reviewer burnout

slower release cycles

Before fixing it, teams need to recognize the early signs.

Warning Signs of Reviewer Burnout

Fatigue rarely appears suddenly—it grows invisibly inside your development workflow. Here’s how to spot it early.

Rubber-Stamping Approvals Without Meaningful Comments

When developers start approving PRs in seconds with no actual feedback, it’s a sign they’re trying to move PRs out of their queue—not review them. This leads to lower quality and higher defect escape rates.

Growing Review Cycle Times

Review cycle time increases when reviewers subconsciously deprioritize PRs because they feel overloaded. Slow reviews cause PR queues to back up, creating frustration for authors and reviewers.

Reviewer Complaints About PR Volume

When team members frequently say things like:

“Too many PRs”

“Why am I always reviewing?”

“We need more reviewers”

…it’s a clear signal that workload distribution isn’t sustainable.

Inconsistent Feedback Quality Across the Team

Some reviews are thorough; others barely skim the surface. Variability indicates fatigue, unclear standards, or overloaded reviewers.

Knowledge Silos Around Senior Developers

If senior engineers handle all complex reviews while others remain underutilized, the imbalance eventually leads to burnout and delays.

Step 1: Keep Pull Requests Small and Focused

Small PRs are the foundation of healthy reviews. Cognitive load grows with every additional file, dependency, or change, so large diffs are one of the most reliable causes of reviewer fatigue.

Why Large PRs Lead to Shallow Reviews

Reviewers facing a massive PR naturally skim or skip sections just to get through the volume. Critical issues hide inside noise. The chance of missing bugs rises sharply with PR size.

How to Enforce PR Size Limits in Your Workflow

Here are three effective techniques:

Branch by feature: Each PR should reflect one logical change, not a batch of unrelated updates.

Set soft limits: Agree on thresholds (e.g., 200–400 LOC) to nudge authors toward smaller, cleaner changes.

Use merge gates: Tools like CodeAnt AI flag oversized PRs early so authors split them before reviewers suffer.
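As a rough illustration of a soft limit, the sketch below totals the changed lines reported by `git diff --numstat` and flags a PR that exceeds a threshold. The names `SOFT_LIMIT`, `PrSize`, and `measure_pr` are hypothetical, and the 400-line value is simply the upper end of the range suggested above, not a recommendation from any particular tool.

```python
from dataclasses import dataclass

# Assumption: upper end of the 200-400 LOC soft limit; tune per team.
SOFT_LIMIT = 400


@dataclass
class PrSize:
    files: int
    lines_changed: int

    @property
    def oversized(self) -> bool:
        return self.lines_changed > SOFT_LIMIT


def measure_pr(numstat: str) -> PrSize:
    """Parse `git diff --numstat` output: 'added<TAB>deleted<TAB>path' per line."""
    files = 0
    lines = 0
    for row in numstat.splitlines():
        parts = row.split("\t")
        if len(parts) != 3:
            continue  # skip blank or malformed rows
        added, deleted, _path = parts
        files += 1
        # Binary files report "-" for both counts: count the file, not lines.
        if added.isdigit() and deleted.isdigit():
            lines += int(added) + int(deleted)
    return PrSize(files, lines)


# Hypothetical numstat output for a three-file PR.
sample = "120\t30\tsrc/api.py\n300\t10\tsrc/models.py\n-\t-\tdocs/logo.png\n"
size = measure_pr(sample)
print(size, "oversized" if size.oversized else "ok")
```

A check like this could run in CI and post a warning rather than block the merge, which keeps the limit "soft" and leaves room for legitimate large changes such as generated files.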

Step 2: Standardize Code Review Expectations with Checklists

Checklists reduce cognitive load by giving reviewers a repeatable, predictable framework for what to look for—no decision paralysis, no inconsistency.

What to Include in a Code Review Checklist

A strong checklist covers:

Functionality → Does the code do what it claims?

Security → Are secrets protected? Inputs validated?

Readability → Is logic clear, naming consistent?

Testing → Are tests present, meaningful, and passing?

Performance → Any obvious bottlenecks?

How Checklists Reduce Cognitive Load

Instead of “figure out what to check,” reviewers start with a ready-made list. When the mental overhead drops, review quality increases—even under time pressure.

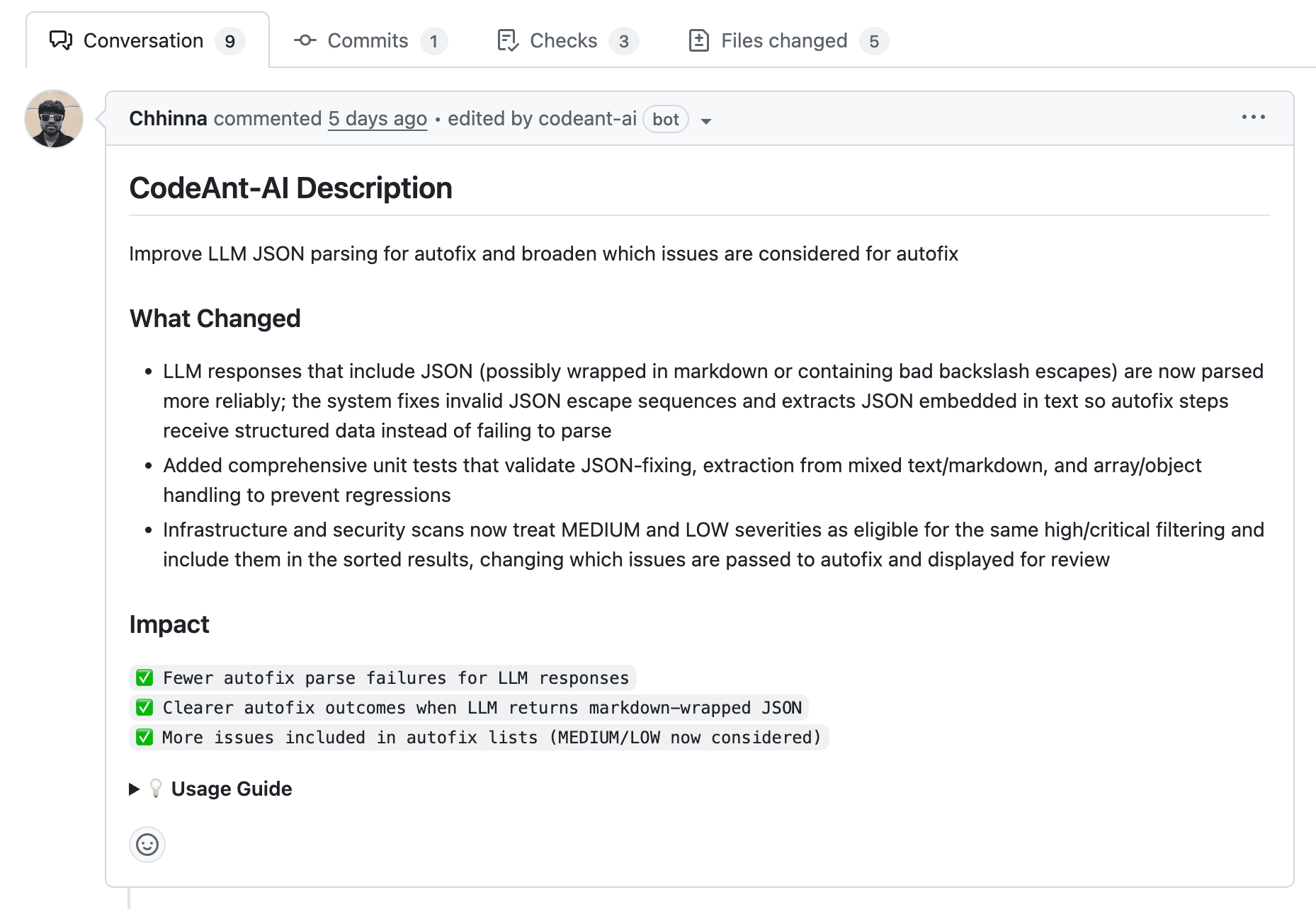

Step 3: Require Author Self-Review Before Submission

The easiest way to reduce reviewer fatigue? Authors catching their own mistakes before reviewers see them.

Encourage authors to:

step away for a few minutes

reopen their diff with fresh eyes

read comments out loud

verify naming clarity

run automated checks

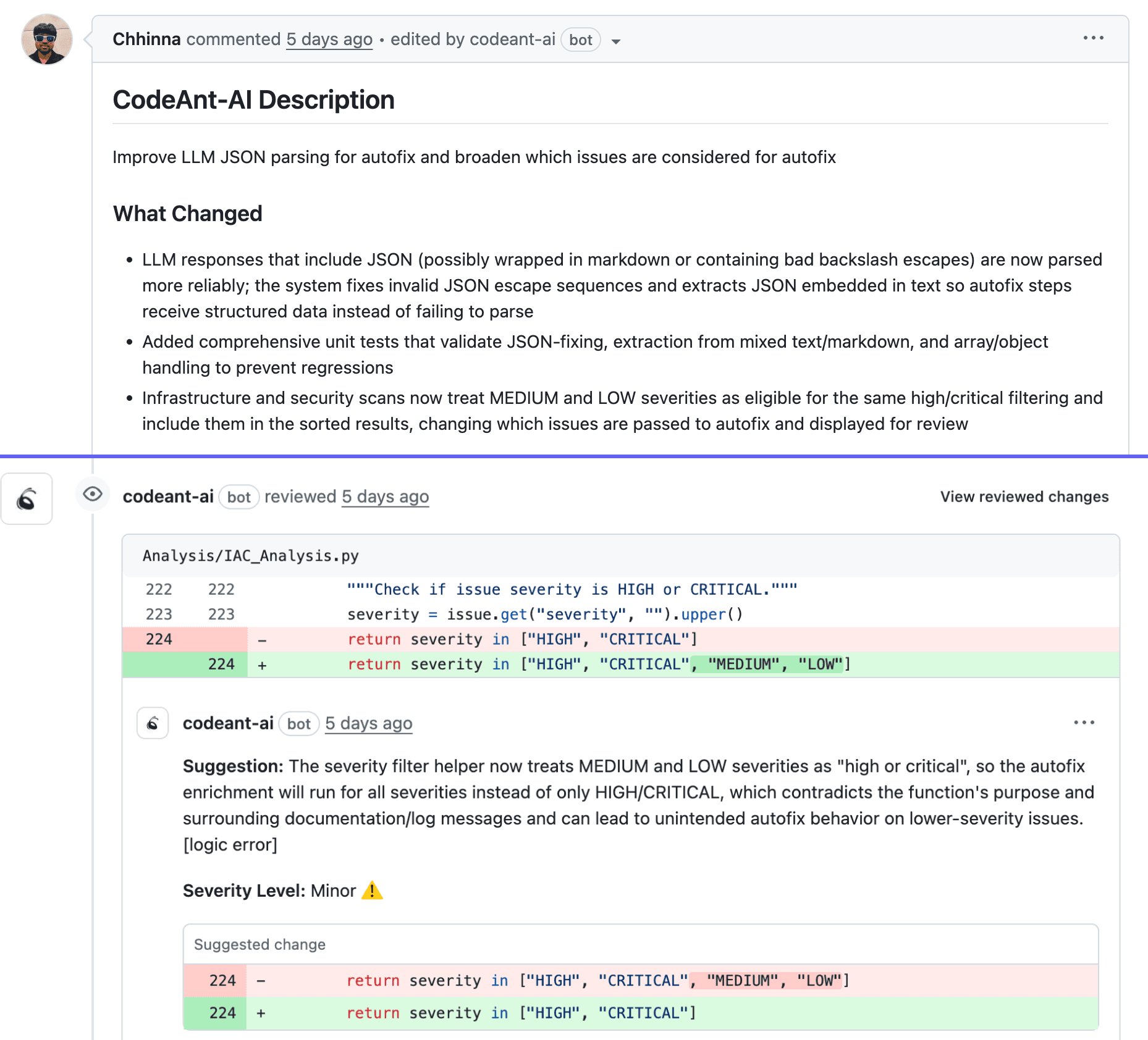

Tools like CodeAnt AI also generate PR summaries and flag issues proactively, giving authors an automated “pre-review” before they hit Request Review.

This cuts down on unnecessary back-and-forth and shows respect for reviewer time.

Step 4: Set Time Limits for Code Review Sessions

Even the best reviewers degrade after long periods of focused scanning. Timeboxing prevents mental fatigue and keeps reviews thoughtful.

How Long Should a Peer Code Review Take?

Commonly cited review studies suggest that accuracy drops sharply after 60–90 minutes of continuous review. Most teams benefit from short, frequent review blocks instead of long, draining sessions.

Timeboxing Reviews to Prevent Mental Fatigue

Implement:

Dedicated review windows: Blocked calendar time avoids random interruptions.

Limit consecutive reviews: Alternate between coding and reviewing to reset mental state.

Prioritize by urgency: Review complex or critical PRs when reviewers are most alert.

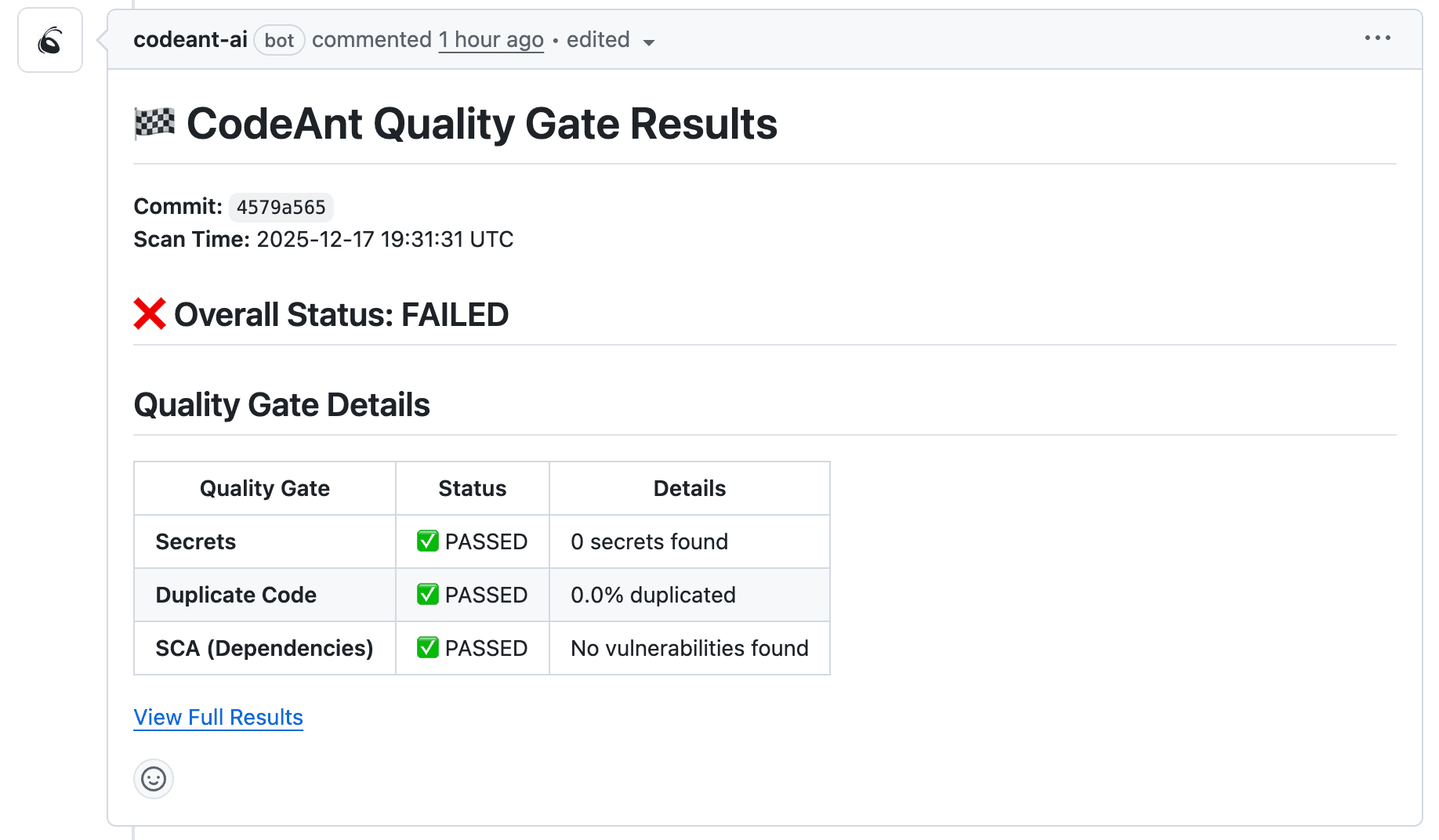

Step 5: Automate Objective Quality Checks

Humans should not perform repetitive checks. Automation handles mechanical tasks; humans handle logic, design, and the “why” behind changes.

Static Analysis and Linting Automation

Static analysis tools catch:

unused variables

unreachable code

complexity issues

formatting problems

Automated linting can eliminate most “nitpick” comments, by some estimates 70% or more, though the exact share varies by team and codebase.

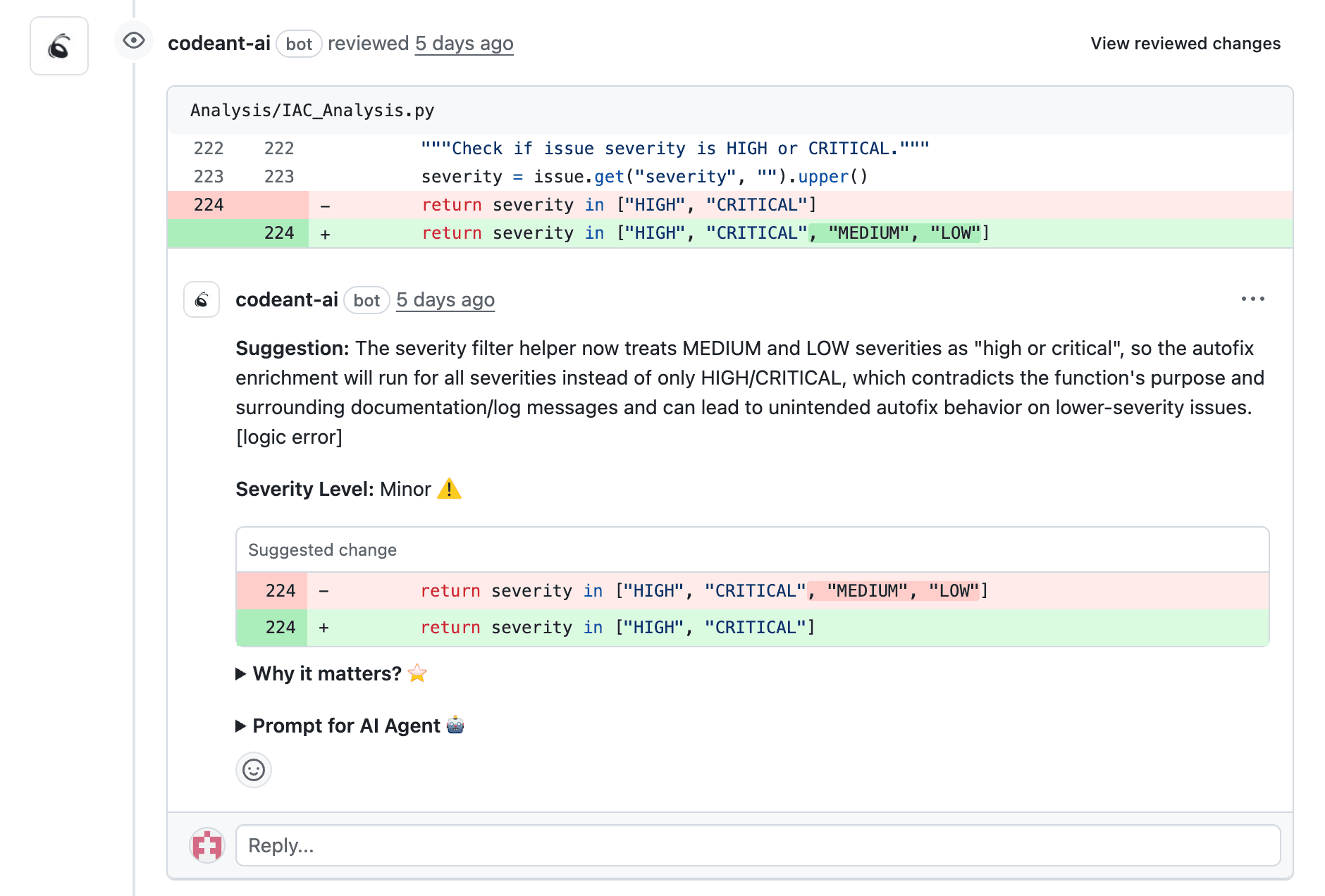

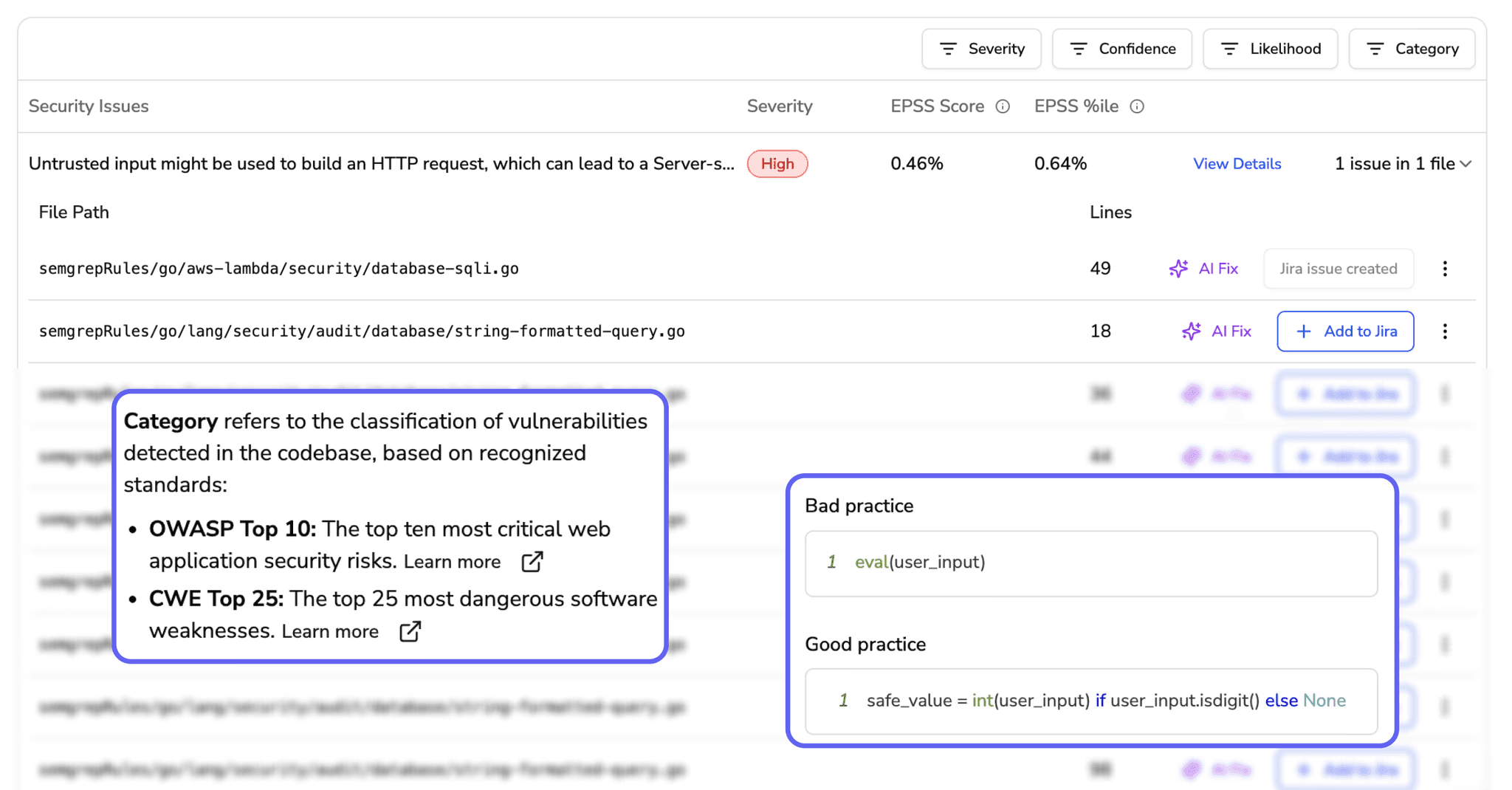

Security Scanning and Vulnerability Detection

AI and SAST scanners detect:

injection vectors

dependency vulnerabilities

insecure configurations

secret exposures

CodeAnt AI integrates security scanning directly into the PR so reviewers don’t manually hunt for risky patterns.
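To make the idea concrete, here is a minimal sketch of the kind of check a secret scanner performs on a PR: it inspects only the lines a diff adds and matches them against a few patterns. This is not how CodeAnt AI or any specific scanner is implemented; the rule names and the `scan_added_lines` function are hypothetical, and the three patterns are illustrative rather than complete.

```python
import re

# Illustrative patterns only; real scanners ship far larger, tuned rule sets.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hardcoded_password": re.compile(r"(?i)\bpassword\s*=\s*['\"][^'\"]+['\"]"),
}


def scan_added_lines(diff: str) -> list[tuple[int, str]]:
    """Return (diff_line_number, rule_name) for each added line matching a rule."""
    findings = []
    for number, line in enumerate(diff.splitlines(), start=1):
        # Only inspect additions; skip the '+++ b/file' header line.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((number, rule))
    return findings


# Hypothetical unified diff with two risky additions (fake credentials).
sample_diff = """\
--- a/settings.py
+++ b/settings.py
@@ -1,2 +1,3 @@
 DEBUG = False
+password = "hunter2"
+AWS_KEY = "AKIAABCDEFGHIJKLMNOP"
"""
findings = scan_added_lines(sample_diff)
for line_number, rule in findings:
    print(f"diff line {line_number}: {rule}")
```

Scanning only added lines keeps the noise down: reviewers see findings introduced by this PR, not every historical issue in the file.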

Automated Style and Formatting Enforcement

Formatters and linters such as Prettier, ESLint, Black, and gofmt settle style questions automatically, so reviewers don’t waste time on styling opinions.

Automation = fewer distractions, fewer mental hops, better focus on meaningful review.

Step 6: Build a Reviewer Rotation to Distribute Load

Even distribution prevents burnout and avoids creating bottlenecks around a few experienced engineers.

Creating a Fair Review Rotation Schedule

Options include:

Round-robin assignment: Automatically assign reviewers in order.

Domain-based rotation: Review within areas of expertise, but rotate periodically.

Track reviewer load: Simple dashboards or PR analytics show who is overloaded.
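The options above can be combined. The sketch below, under the assumption that you can count each person's open reviews, assigns the eligible teammate with the lightest load and falls back to team order on ties, which behaves like round-robin when loads are equal. The function name `assign_reviewer` and the sample team are hypothetical.

```python
from collections import Counter


def assign_reviewer(author: str, team: list[str], open_reviews: Counter) -> str:
    """Pick the eligible teammate with the fewest open reviews.

    Ties fall back to team order, which approximates round-robin
    when everyone's load is equal.
    """
    candidates = [member for member in team if member != author]
    if not candidates:
        raise ValueError("no eligible reviewer other than the author")
    chosen = min(candidates, key=lambda member: open_reviews[member])
    open_reviews[chosen] += 1  # record the new assignment
    return chosen


# Hypothetical team where "ana" already has two open reviews.
team = ["ana", "ben", "chloe"]
load = Counter({"ana": 2})
assignments = [assign_reviewer(a, team, load) for a in ["ana", "ben", "ana", "chloe"]]
print(assignments)
print(dict(load))
```

A domain-based variant would simply pass a smaller `team` list per code area and rotate its membership periodically.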

Preventing Knowledge Silos Through Rotation

Rotation improves the team’s bus factor, spreads domain knowledge, and reduces dependency on senior engineers, which lowers the long-term risk of burnout.

Step 7: Coach Reviewers on Giving Actionable Feedback

Reviewer fatigue isn’t just about workload. It also comes from the effort of writing feedback, so reviewers need to know how to give high-quality feedback efficiently.

What Makes Code Review Feedback Constructive

Good feedback is:

specific → “variable name unclear because…”

explanatory → why it matters

solution-oriented → propose a fix

prioritized → must-fix vs. nice-to-have

respectful → critique code, not people

Training Reviewers to Balance Thoroughness with Speed

Thorough ≠ slow. Effective reviewers prioritize:

correctness

security

maintainability

…and deprioritize personal stylistic preferences.

Code Review Metrics That Reveal Reviewer Health

Metrics turn “I think we’re tired” into facts.

Review Cycle Time

How long a PR stays open. A sustained increase points to fatigue or process issues.

Average PR Size and Review Depth

Large PRs + few comments = shallow reviews.

Reviewer Load Distribution

Too many assigned PRs = clear burnout trigger.

Comment-to-Approval Ratio

Bare-minimum comments before approval = rubber-stamping.

Here’s how these metrics map to what they reveal and the warning signs to watch for:

| Metric | What It Reveals | Warning Sign |
| --- | --- | --- |
| Review cycle time | Bottlenecks | Time increasing consistently |
| Average PR size | Reviewer cognitive load | PRs consistently large |
| Reviewer load distribution | Workload fairness | Same people reviewing everything |
| Comment-to-approval ratio | Feedback depth | Approvals with no comments |
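As a sketch of how these four metrics might be computed from exported PR data, the example below uses a hypothetical `ReviewedPr` record. The field names are assumptions, each PR is assumed to have a single reviewer, and cycle time is measured from open to merge. Real data would come from your Git host's API or analytics export.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class ReviewedPr:  # hypothetical record shape
    opened: datetime
    merged: datetime
    lines_changed: int
    reviewer: str
    comments: int


def review_health(prs: list[ReviewedPr]) -> dict:
    """Summarize the four review-health metrics for a non-empty list of PRs."""
    hours = [(pr.merged - pr.opened).total_seconds() / 3600 for pr in prs]
    per_reviewer: dict[str, int] = {}
    for pr in prs:
        per_reviewer[pr.reviewer] = per_reviewer.get(pr.reviewer, 0) + 1
    silent = sum(1 for pr in prs if pr.comments == 0)
    return {
        "median_cycle_hours": median(hours),
        "avg_lines_changed": sum(pr.lines_changed for pr in prs) / len(prs),
        "reviews_per_reviewer": per_reviewer,
        "no_comment_approval_share": silent / len(prs),
    }


# Made-up sample data for illustration.
prs = [
    ReviewedPr(datetime(2025, 1, 6, 9), datetime(2025, 1, 6, 13), 120, "ana", 3),
    ReviewedPr(datetime(2025, 1, 6, 10), datetime(2025, 1, 8, 10), 900, "ana", 0),
    ReviewedPr(datetime(2025, 1, 7, 9), datetime(2025, 1, 7, 19), 180, "ben", 0),
]
report = review_health(prs)
print(report)
```

The median is used for cycle time so that a single long-running PR does not dominate the picture. Tracking these numbers week over week matters more than any single snapshot.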

Code Review Tools That Reduce Manual Review Burden

Modern teams use a blend of tools to reduce fatigue:

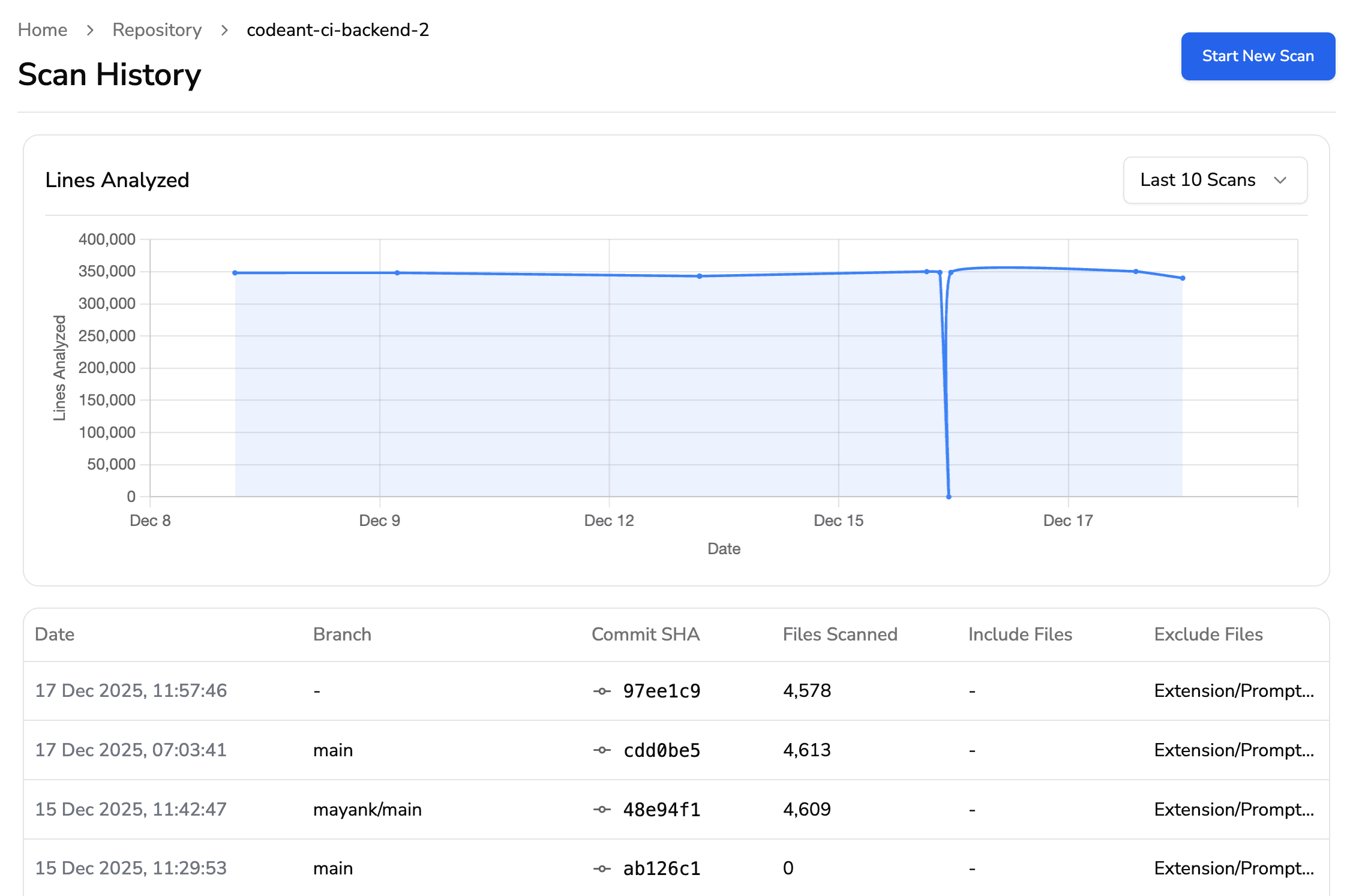

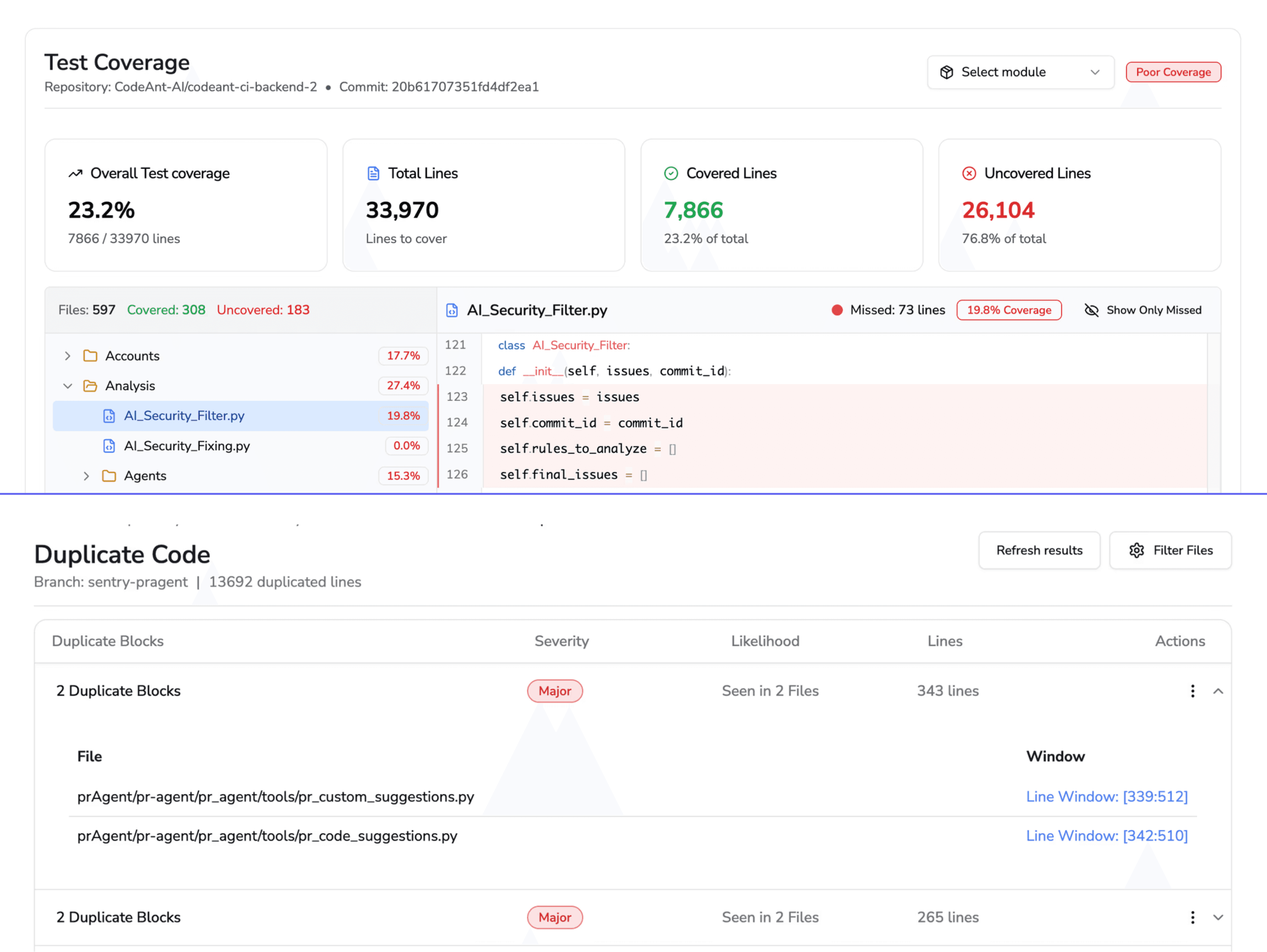

AI code review platforms → automated suggestions, inline comments, PR summaries (CodeAnt AI)

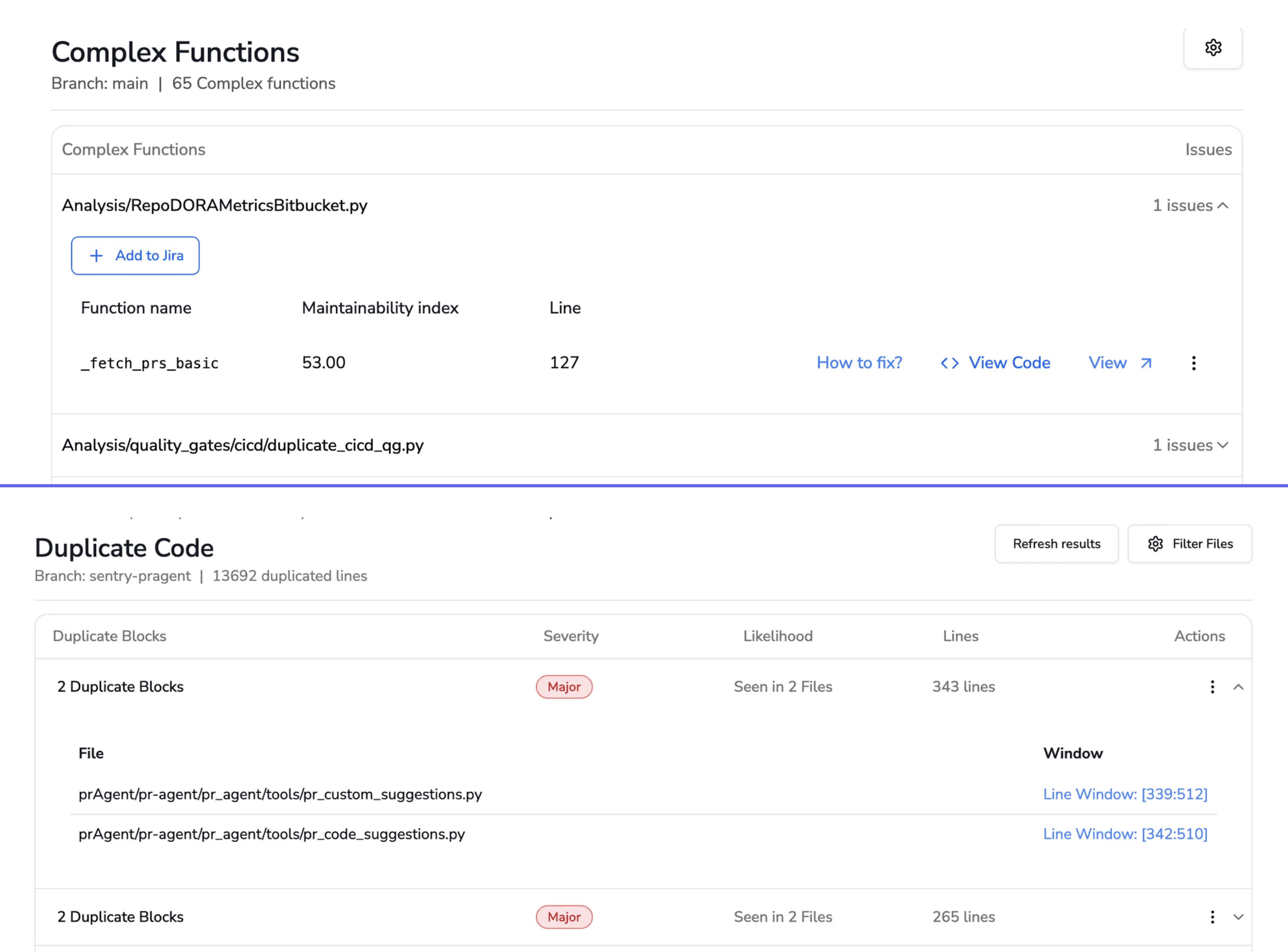

Static analysis tools → bug patterns, complexity, code smells

Security scanners → secrets, CVEs, insecure patterns

Quality dashboards → maintainability, duplication, test coverage

CodeAnt AI combines these in a single automated PR review layer, reducing the manual load on human reviewers significantly.

Build a Sustainable Code Review Process That Scales

Reducing reviewer fatigue isn’t about fixing one thing—it’s about reinforcing a system of practices:

keep PRs small

automate what humans shouldn’t do

rotate reviewers

timebox reviews

train authors and reviewers

monitor review health

use AI to reduce mechanical checks

Teams using tools like CodeAnt AI see faster PR turnaround, better feedback quality, and more predictable release cycles, all without increasing reviewer load.

Want to reduce reviewer fatigue and automate 80% of repetitive review tasks? Book a 1:1 with CodeAnt AI experts today.