
Feb 11, 2026

When is Static Analysis Still Better than AI Code Reviews?

Sonali Sood

Founding GTM, CodeAnt AI

Your team runs SonarQube, flags hundreds of issues per build, and yet production bugs still slip through: API misuse, architectural anti-patterns, and context-dependent flaws that rigid rules can't catch. Meanwhile, AI code review promises intelligent insights but can't prove compliance or guarantee exhaustive coverage for memory safety bugs. Which approach actually works?

The answer isn't either/or. Static analysis remains irreplaceable for regulatory compliance, deterministic security guarantees, and exhaustive code coverage that auditors demand. AI excels at contextual reasoning, reducing false positive fatigue, and catching design issues rule-based tools miss. The real question is how to leverage both without drowning in tool sprawl.

This guide breaks down when each approach excels, provides a decision framework for your compliance and scale requirements, and shows how modern platforms eliminate the false choice between deterministic rigor and intelligent context.

Define the Tools: Static Analysis vs AI Code Review

Static analysis examines source code without executing it, using techniques that range from pattern matching to formal methods:

SAST engines detect known vulnerability signatures (SQL injection, XSS, hardcoded credentials)

Dataflow analysis tracks how untrusted input propagates through your program

Abstract interpretation mathematically models program states to prove properties like "this pointer is never null"

Type and state modeling verifies code respects contracts and state machine invariants
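Here is a minimal C++ sketch of abstract interpretation using the interval domain. The interval domain is a standard textbook abstract domain; this is not how any particular tool is implemented.

Each variable is summarized as a range [lo, hi] that covers every value it could hold on any execution. A bounds check that holds for the whole range therefore holds for all inputs. The function names and array sizes are illustrative.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// An abstract value: every concrete value the variable may take on ANY run
// lies within [lo, hi]. (Integer overflow is ignored for brevity.)
struct Interval {
    std::int64_t lo;
    std::int64_t hi;
};

// Transfer function for +: exact for intervals.
Interval add(Interval a, Interval b) { return {a.lo + b.lo, a.hi + b.hi}; }

// Transfer function for *: the extremes are among the four corner products.
Interval mul(Interval a, Interval b) {
    const std::int64_t p[] = {a.lo * b.lo, a.lo * b.hi, a.hi * b.lo, a.hi * b.hi};
    return {*std::min_element(p, p + 4), *std::max_element(p, p + 4)};
}

// Join (least upper bound): used where two control-flow paths merge.
Interval join(Interval a, Interval b) {
    return {std::min(a.lo, b.lo), std::max(a.hi, b.hi)};
}

// The "proof": an index is safe only if EVERY value in its range is in bounds.
bool index_always_in_bounds(Interval idx, std::int64_t array_size) {
    return idx.lo >= 0 && idx.hi < array_size;
}
```

Suppose x comes from input known to lie in [0, 10]. Then 2 * x + 1 lies in [1, 21]. An access into a 32-element array is proven safe for every input. An access into a 16-element array cannot be proven safe, so an analyzer would report it.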

The critical characteristic: determinism. Given the same code and ruleset, tools like SonarQube, Coverity, and CodeQL produce identical results every time. Sound analyzers, typically built on abstract interpretation, go further and can prove the absence of certain bug classes.

What static analysis cannot do: Understand developer intent, adapt to novel patterns without explicit rules, or reason about semantic correctness beyond encoded type systems. It flags every potential null pointer dereference, even when business logic guarantees initialization.

AI code review leverages LLMs trained on massive code corpora to provide contextual feedback:

LLM-based reviewers generate natural language explanations and suggestions

Embeddings and repository context help AI understand project-specific patterns

Heuristic reasoning identifies code smells, API misuse, and architectural issues

The defining trait: probabilistic reasoning. The model recognizes patterns from training data, not formal proofs. When AI flags an issue, it's saying "based on patterns I've seen, this looks problematic," not "I can prove this violates a safety property."

What AI cannot do: Provide deterministic guarantees. If AI doesn't flag a use-after-free bug, that's not proof the bug doesn't exist; the pattern may simply be underrepresented in the training data. AI outputs can also vary from run to run and are difficult to audit.

The explainability and auditability gap

| Dimension | Static Analysis | AI Code Review |
|---|---|---|
| Repeatability | Identical results on identical code | Non-deterministic |
| Explainability | Traceable to specific rules/standards | "Black box" pattern matching |
| Audit trail | Maps findings to CWE, CERT, MISRA | Natural language explanation only |
| Proof capability | Sound analyzers can prove absence of bug classes | Can only suggest likely issues |
| False negative risk | Low for covered patterns | Higher for rare/novel patterns |

For compliance-driven teams (fintech, healthcare, automotive), this isn't academic—auditors require traceable, deterministic analysis.

When Static Analysis is Non-Negotiable

Compliance and auditability

Static analysis provides deterministic, traceable outputs that regulators accept. When building software for automotive (ISO 26262), medical devices (IEC 62304), or financial systems (PCI DSS), you need to prove code meets specific standards.

Consider MISRA C compliance for embedded systems. Auditors need to see that MISRA C:2012 Rule 21.3 (the memory allocation and deallocation functions of <stdlib.h> shall not be used) has been checked exhaustively across the entire codebase. Static analyzers generate audit trails showing:

Which rules were checked

Line-by-line coverage of the entire codebase

Deterministic, reproducible results

Formal proof that certain vulnerability classes are absent (for sound analyzers)
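The following toy C++ sketch illustrates what "every line checked, every hit recorded" means for Rule 21.3. For brevity it scans raw text with a regular expression. Real MISRA checkers analyze the parsed program and would not, for example, flag a function name inside a comment. The AuditEntry layout and the function name are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <regex>
#include <sstream>
#include <string>
#include <vector>

// One audit-trail entry: which rule, where, and what matched.
struct AuditEntry {
    std::string rule;
    std::size_t line;
    std::string match;
};

// Toy, text-based approximation of a Rule 21.3 check: report every call to a
// <stdlib.h> allocation or deallocation function, line by line.
std::vector<AuditEntry> check_rule_21_3(const std::string& source) {
    static const std::regex alloc_call(R"(\b(malloc|calloc|realloc|free)\s*\()");
    std::vector<AuditEntry> trail;
    std::istringstream in(source);
    std::string text;
    std::size_t line_no = 0;
    while (std::getline(in, text)) {  // every line is visited, in order
        ++line_no;
        for (std::sregex_iterator it(text.cbegin(), text.cend(), alloc_call), end;
             it != end; ++it) {
            trail.push_back({"MISRA C:2012 Rule 21.3", line_no, (*it)[1].str()});
        }
    }
    return trail;  // identical input always yields an identical trail
}
```

Because the scan is deterministic, rerunning it on the same commit reproduces the same trail. That reproducibility is what lets an auditor verify the evidence.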

AI models have no built-in, verifiable mapping to MISRA C, CERT C, or other industry compliance frameworks. They might flag obvious issues but provide no audit trail. More critically, AI can't prove the absence of a vulnerability class.

Memory safety and concurrency

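Memory corruption and race conditions are rare, subtle, and catastrophic, which is exactly where AI's probabilistic approach falls short. Static analyzers use formal methods to reason systematically about program properties.

Consider a function that copies len bytes, where len is supplied by the caller, into a fixed 256-byte stack buffer. A static analyzer tracks the possible values of len along every execution path and reports that any path with len > 256 overflows the buffer. It relies on formal reasoning about memory bounds, not resemblance to previously seen bugs.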
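Here is a minimal C++ sketch of the buffer overflow described above. It assumes a fixed 256-byte buffer and a caller-supplied length; the function names are hypothetical. The unsafe version exists only to show its shape and is never called.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

constexpr std::size_t kBufferSize = 256;

// Vulnerable pattern: nothing constrains len, so any path where len > 256
// writes past the end of buffer. Illustration only; never called.
void copy_packet_unsafe(const char* data, std::size_t len) {
    char buffer[kBufferSize];
    std::memcpy(buffer, data, len);  // overflows when len > kBufferSize
}

// Fixed version: the guard bounds len on every path that reaches memcpy,
// which is exactly the fact a bounds-tracking analyzer needs to prove safety.
bool copy_packet_safe(const char* data, std::size_t len, char (&out)[kBufferSize]) {
    if (len > kBufferSize) {
        return false;  // reject oversized input rather than truncating silently
    }
    std::memcpy(out, data, len);
    return true;
}
```

On every path that reaches memcpy in the safe version, len is at most 256. That is the property an analyzer proves, rather than guessing from how the code looks.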

AI models struggle with edge cases underrepresented in training data. Use-after-free bugs, double-free vulnerabilities, and subtle race conditions appear infrequently in public repositories, so AI learns only the most obvious patterns.

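Static analyzers with concurrency checkers, such as Coverity, flag unsynchronized access to shared state without running the program. ThreadSanitizer is a runtime race detector rather than a static analyzer. It catches the same class of bug when the racy path actually executes under test. A typical target is a lazily initialized singleton whose shared pointer is checked and then assigned without a lock.

AI reviewers often miss this because the pattern looks like idiomatic C++ singleton initialization.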
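The racy lazy-initialization pattern can be sketched in C++ as follows; the Config type and function names are hypothetical. Called from a single thread, both functions behave identically. That is why unit tests and pattern-based review can pass over the first one.

```cpp
#include <cassert>

struct Config {
    int max_connections = 64;
};

// Racy: two threads can both observe nullptr, both construct a Config, and
// write the shared pointer concurrently (a data race plus a leak).
Config& racy_instance() {
    static Config* instance = nullptr;
    if (instance == nullptr) {    // check ...
        instance = new Config();  // ... then act, with no synchronization
    }
    return *instance;
}

// Safe: since C++11, a function-local static is initialized exactly once,
// even when several threads make the first call concurrently.
Config& safe_instance() {
    static Config instance;
    return instance;
}
```

A concurrency checker reports the unguarded write in racy_instance no matter how familiar the code looks. The fix is the function-local static, which the language itself guarantees is initialized safely.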

Exhaustive coverage requirements

Static analysis is exhaustive by design, analyzing every function, every branch, every loop. This matters in domains where a single missed vulnerability is catastrophic:

Embedded systems: Any code path may eventually execute in the field

Financial infrastructure: Race conditions can cost millions

Security-critical code: Authentication, cryptography, access control demand 100% coverage

High-assurance environments operate under "coverage obligations"—the requirement to prove you've checked every code path for specific vulnerability classes. This isn't about finding most bugs; it's demonstrating exhaustive analysis.

AI models sample and prioritize based on what "looks suspicious" from training data. The bugs AI misses are often edge cases in error handling, initialization order bugs, or integer overflow in boundary conditions.

Supply-chain and policy enforcement

When enforcing hard rules such as secrets exposure, license violations, and banned dependencies, static analysis is the gold standard. These are binary compliance checks requiring exhaustive, auditable coverage.

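A regex-based scanner catches every string matching the AWS access key ID pattern AKIA[0-9A-Z]{16} across your codebase, with zero false negatives for that exact pattern. Secret access keys have a different, 40-character format and need their own rule. AI models can't guarantee they will catch every instance.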
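Here is a minimal C++ sketch of such a deterministic scan using std::regex. It reports every substring that matches the access key ID pattern and nothing else. Its guarantee covers only the pattern it encodes. The function name is hypothetical, and the test input uses the placeholder key ID from the AWS documentation.

```cpp
#include <cassert>
#include <regex>
#include <string>
#include <vector>

// Report every substring shaped like an AWS access key ID:
// "AKIA" followed by exactly 16 uppercase letters or digits.
std::vector<std::string> find_access_key_ids(const std::string& text) {
    static const std::regex key_id(R"(AKIA[0-9A-Z]{16})");
    std::vector<std::string> hits;
    for (std::sregex_iterator it(text.cbegin(), text.cend(), key_id), end;
         it != end; ++it) {
        hits.push_back(it->str());  // every match is recorded, none sampled
    }
    return hits;
}
```

Completeness here is a property of the pattern, not of judgment. Each additional credential format needs its own rule.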

The same applies to license compliance (detecting GPL dependencies), dependency constraints (blocking vulnerable versions), and configuration policies (flagging public S3 buckets).

Where AI Code Review Outperforms Static Analysis

Context-aware architectural feedback

Static analysis operates on syntactic patterns and control flow graphs. It can measure cyclomatic complexity but can't explain why that complexity exists or whether it's justified. AI understands architectural context in ways rigid rules cannot.

API misuse beyond signatures: AI recognizes when you're using a database connection without proper transaction boundaries, even when the code compiles. It understands framework conventions: Django ORM queries inside loops create N+1 query problems, and React hooks called conditionally violate the Rules of Hooks even when TypeScript is satisfied.

Architectural consistency: AI flags when a new service bypasses your established repository pattern, or when business logic leaks into controllers. Static analysis sees valid code; AI sees architectural violations.

Intelligent prioritization and noise reduction

The false positive problem in static analysis isn't just annoying; it's a productivity killer. Industry studies report 40-60% false positive rates for traditional SAST tools, leading to alert fatigue.

How AI reduces noise:

Risk-based prioritization: AI evaluates actual exploitability based on code context. A SQL injection in an internal admin tool gets different priority than one in a public API handling payments.

Workflow-aware filtering: AI understands that a TODO in a feature branch is different from one in a PR about to merge.

Historical learning: When your team consistently marks specific warnings as "won't fix," AI learns and stops surfacing similar findings.
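Risk-based prioritization and historical learning can be pictured as a small scoring sketch in C++. The severity and exposure weights, the 90% won't-fix ratio, and the 10-sample minimum are illustrative assumptions. They are not CodeAnt's or any vendor's actual model.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical weights: higher means more severe / more reachable.
enum class Severity { Low = 1, Medium = 2, High = 3, Critical = 4 };
enum class Exposure { Internal = 1, Public = 2, PublicPayments = 3 };

struct Finding {
    std::string rule;
    Severity severity;
    Exposure exposure;
};

// How the team has historically resolved findings from one rule.
struct RuleHistory {
    int fixed;
    int wont_fix;
};

// Risk grows with both severity and how exposed the affected code is.
int risk_score(const Finding& f) {
    return static_cast<int>(f.severity) * static_cast<int>(f.exposure);
}

// Stop surfacing a rule once the team overwhelmingly marks it "won't fix",
// but only after enough decisions have been recorded.
bool suppressed_by_history(const std::string& rule,
                           const std::map<std::string, RuleHistory>& history,
                           double max_wont_fix_ratio = 0.9, int min_samples = 10) {
    auto it = history.find(rule);
    if (it == history.end()) return false;
    int total = it->second.fixed + it->second.wont_fix;
    if (total < min_samples) return false;  // not enough evidence yet
    return static_cast<double>(it->second.wont_fix) / total > max_wont_fix_ratio;
}
```

With these weights, a critical SQL injection in a public payments API scores 12, and the same finding in an internal admin tool scores 4.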

| Metric | Traditional Static Analysis | AI Code Review | Hybrid (CodeAnt AI) |
|---|---|---|---|
| False positive rate | 40-60% | 15-25% | 8-12% |
| Findings per PR | 20-50 | 5-10 | 3-7 |
| Developer action rate | 15-20% | 60-75% | 85-90% |

Adaptive learning for evolving codebases

Static analysis rules are frozen until someone manually updates them. When your team adopts new framework versions or shifts architectural patterns, static tools lag behind, generating false positives on modern patterns or missing new anti-patterns.

AI code review platforms that index your repository history automatically surface guidance aligned with current practices:

AI learns from your organization's merged PRs and specific idioms, not generic GitHub trends. When 80% of new PRs adopt a pattern, AI guidance updates automatically.
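Here is a hypothetical C++ sketch of that adoption threshold. Given whether each recent merged PR used a new pattern, the pattern is treated as the team convention once at least 80% of those PRs use it. How a real platform detects that a PR "uses the pattern" is not covered here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// recent_prs_use_pattern[i] is true if the i-th recent merged PR (among those
// touching relevant code) used the new pattern instead of the old one.
bool is_team_convention(const std::vector<bool>& recent_prs_use_pattern,
                        std::size_t threshold_percent = 80) {
    if (recent_prs_use_pattern.empty()) return false;  // no evidence, no convention
    std::size_t adopters = 0;
    for (bool uses : recent_prs_use_pattern) {
        if (uses) ++adopters;
    }
    // Integer comparison avoids floating-point edge cases at exactly 80%.
    return adopters * 100 >= threshold_percent * recent_prs_use_pattern.size();
}
```

Four adopters out of five recent PRs meets the threshold; two out of four does not.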

The Hybrid Approach: Why Leading Teams Use Both

The most effective architecture follows deliberate sequencing: static analysis runs first as a fast, exhaustive baseline check, then AI reviews both flagged issues and broader code changes for context, design quality, and risk prioritization.

Why this order matters:

Static analysis establishes ground truth: Catches memory safety violations, compliance breaks, and known vulnerability patterns deterministically across every file, within the scope of its rules.

AI triages and enriches: Evaluates each static alert against actual risk, explains business impact, and suggests validated fixes, cutting noise by 80% while maintaining coverage.

Cross-validation prevents gaps: AI reviews code that static analysis passes, catching architectural issues that don't match predefined rules. Static analysis validates AI suggestions to ensure they don't introduce new bugs.
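The sequencing can be sketched in C++ with the static scan, AI triage, and AI fix steps passed in as functions. All types and the acceptance rule (a fix must leave fewer static findings than the original code) are assumptions for illustration. A real re-validation step would check far more than a count.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical finding and comment types.
struct Finding {
    std::string rule;
    int line;
    bool exploitable;  // filled in by the AI triage step
};

struct ReviewComment {
    Finding finding;
    std::string suggested_fix;  // empty if the fix failed re-validation
};

using StaticScan = std::function<std::vector<Finding>(const std::string& code)>;
using AiTriage = std::function<bool(const Finding&, const std::string& code)>;
using AiFix = std::function<std::string(const Finding&, const std::string& code)>;

std::vector<ReviewComment> hybrid_review(const std::string& code, const StaticScan& scan,
                                         const AiTriage& triage, const AiFix& fix) {
    const std::vector<Finding> baseline = scan(code);  // 1) deterministic scan first
    std::vector<ReviewComment> comments;
    for (Finding f : baseline) {
        f.exploitable = triage(f, code);  // 2) AI judges real-world risk
        if (!f.exploitable) continue;     // not surfaced as a PR comment
        std::string patched = fix(f, code);
        // 3) static re-validation of the AI fix (crude rule: fewer findings).
        bool fix_accepted = scan(patched).size() < baseline.size();
        comments.push_back({f, fix_accepted ? patched : std::string{}});
    }
    return comments;
}
```

The static scan runs before AI triage and again after each AI fix. So every AI suggestion passes through the same deterministic rules as the original code.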

Unified context: shared code graph

The key architectural difference between running separate tools and a hybrid platform is shared context. Both static and AI engines operate on the same semantic code graph—a unified representation of your codebase's structure, dependencies, data flows, and historical changes.

What shared context enables:

AI understands static results: Sees full data flow analysis, not just line numbers, validating whether a SQL injection risk is actually exploitable

Static analysis informs AI training: AI learns which issues your team fixes vs. ignores, adapting suggestions without manual configuration

Policy gates work across both layers: Single policy definition enforced consistently across static and AI findings

Real-world workflow

Here's how hybrid analysis runs in CI:

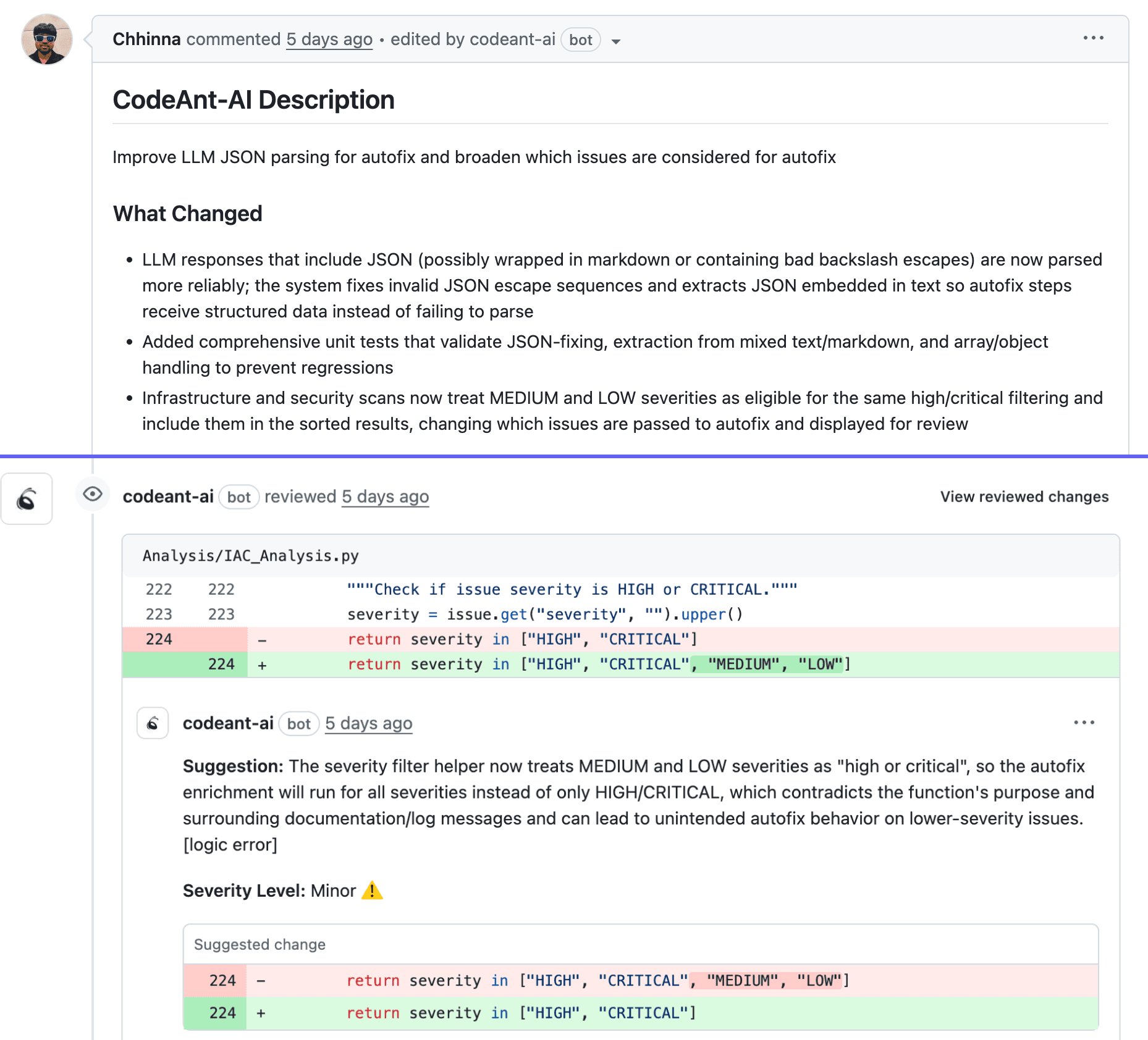

When a developer opens a PR, CodeAnt's static engine scans the diff in under 30 seconds. The AI layer then validates severity, explains impact, and suggests fixes, which static analysis re-validates before presenting.

The developer sees one consolidated comment with findings ranked by actual risk, with actionable fixes, not a wall of unfiltered alerts.

Why this beats tool sprawl

Teams running separate tools face critical problems that unified platforms solve:

Context loss: SonarQube flags SQL injection. CodeRabbit reviews the same PR but doesn't see the finding, suggesting a refactor that makes the vulnerability worse.

Alert fatigue: Developers get 47 SonarQube comments plus 12 CodeRabbit comments, many of them duplicates or contradictory.

Policy fragmentation: Security team configures SonarQube gates. The platform team configures CodeRabbit rules. Policies drift, gaps emerge.
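Consolidation, the cure for duplicate comments, can be sketched as grouping findings by file, line, and weakness category. The ToolFinding fields and the CWE-based key are assumptions. Matching findings that point at slightly different lines is harder and is not handled here.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <tuple>
#include <vector>

struct ToolFinding {
    std::string tool;  // e.g. "static" or "ai"
    std::string file;
    int line;
    std::string cwe;  // normalized weakness category, e.g. "CWE-89"
    std::string message;
};

struct Consolidated {
    std::string file;
    int line;
    std::string cwe;
    std::vector<std::string> sources;  // every tool that reported it
};

// Merge reports that point at the same file, line, and weakness category,
// so the developer sees one comment per issue instead of one per tool.
std::vector<Consolidated> consolidate(const std::vector<ToolFinding>& findings) {
    std::map<std::tuple<std::string, int, std::string>, Consolidated> merged;
    for (const auto& f : findings) {
        auto key = std::make_tuple(f.file, f.line, f.cwe);
        // emplace leaves an existing entry untouched and returns it either way.
        auto it = merged.emplace(key, Consolidated{f.file, f.line, f.cwe, {}}).first;
        it->second.sources.push_back(f.tool);
    }
    std::vector<Consolidated> out;
    for (const auto& entry : merged) out.push_back(entry.second);
    return out;  // std::map keeps the output order deterministic
}
```

A static SQL-injection alert and an AI comment on the same line collapse into one entry that credits both sources.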

CodeAnt eliminates these through unified analysis that shares context, cross-validates findings, and presents consolidated results.

Decision Matrix: Choosing the Right Approach

| Scenario | Static Analysis | AI-Only | Hybrid |
|---|---|---|---|
| Regulatory compliance (MISRA, CERT, ISO 26262) | ✅ Required | ❌ Non-deterministic | ✅ Compliant + intelligent |
| Memory-unsafe code (C, C++, unsafe Rust) | ✅ Formal guarantees | ⚠️ Misses edge cases | ✅ Exhaustive + contextual |
| Fast-moving cloud-native teams | ⚠️ High false positive rate | ✅ Context-aware | ✅ Low noise, high signal |
| Enterprise (100+ devs, SOC 2/PCI) | ⚠️ Tool sprawl | ❌ Audit risk | ✅ Unified platform |

When static analysis alone makes sense

Choose static-only if you're maintaining a safety-critical embedded system with strict MISRA C compliance and no plans to modernize, or you're a small team (<20 developers) on a stable codebase with minimal churn.

Limitations: Developer fatigue from 40%+ false positives, manual rule updates for every stack evolution, no help with architectural issues.

When AI-only falls short

AI-only tools can work for early-stage startups that prioritize velocity over compliance and have no regulatory requirements.

Critical gaps: No audit trail (fails compliance reviews), missed memory bugs (training data underrepresents use-after-free), false negative risk (probabilistic models can't guarantee coverage).

Why hybrid is the default

Choose hybrid when you have 100+ developers managing microservices or distributed systems, need compliance-ready coverage plus developer-friendly insights, or are tired of tool sprawl.

Real-world impact:

80% review time reduction

95% critical issue detection

10% false positive rate

60% faster security certification

Implementation: Rollout Without Slowing Delivery

Phase 1: Baseline in report-only mode (Week 1)

Run CodeAnt in non-blocking mode across main branches:

Enable all static rulesets for your stack

Activate AI review on recent merged PRs

Generate baseline metrics

Tag pre-existing issues as "baseline debt"

Phase 2: Gate new critical/high findings (Weeks 2-3)

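Shift to blocking mode for net-new issues only: a PR fails the check when it introduces a new CRITICAL or HIGH finding, while findings tagged as baseline debt remain advisory.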

You're protecting against regressions without forcing teams to remediate years of technical debt overnight.
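Here is a minimal C++ sketch of net-new gating. It assumes each finding carries a stable fingerprint and that the Phase 1 baseline is stored as a set of fingerprints. All names are hypothetical, not CodeAnt configuration. The threshold parameter covers both Phase 2 (HIGH) and the later move to MEDIUM.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

enum class Severity { Low, Medium, High, Critical };

struct Finding {
    std::string fingerprint;  // hypothetical stable ID, e.g. rule + file + function
    Severity severity;
};

// Fingerprints recorded as "baseline debt" during report-only mode.
using Baseline = std::set<std::string>;

// Block only findings that are new relative to the baseline AND at or above
// the blocking threshold; pre-existing debt never blocks a merge.
bool should_block(const std::vector<Finding>& current, const Baseline& baseline,
                  Severity threshold = Severity::High) {
    for (const auto& f : current) {
        bool is_new = baseline.count(f.fingerprint) == 0;
        if (is_new && f.severity >= threshold) return true;
    }
    return false;
}
```

An old CRITICAL finding in the baseline does not block, and a new MEDIUM finding blocks only after the threshold is lowered.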

Phase 3: Tune rules and governance (Weeks 4-6)

Review false positive patterns, disable noisy rules or adjust thresholds

Establish suppression policy with expiration windows

Define ownership mapping for different finding types

Customize AI review focus per team
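Suppressions with expiration windows can be sketched as a map from finding fingerprint to an owner and an expiry date. The record layout is hypothetical. Dates use ISO 8601 "YYYY-MM-DD" strings because those sort in date order as plain text.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical suppression record: who accepted the risk, and until when.
struct Suppression {
    std::string owner;
    std::string expires_on;  // "YYYY-MM-DD"; lexicographic order == date order
};

// A suppression hides a finding only until it expires; afterwards the finding
// resurfaces and its owner must fix it or renew the exception.
bool is_suppressed(const std::string& fingerprint, const std::string& today,
                   const std::map<std::string, Suppression>& suppressions) {
    auto it = suppressions.find(fingerprint);
    return it != suppressions.end() && today < it->second.expires_on;
}
```

Keeping an owner on every suppression ties this step to the ownership mapping: when an exception lapses, there is a named team to follow up.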

Phase 4: Expand enforcement (Week 7+)

Lower blocking threshold to include MEDIUM severity

Enable weekly trend reports

Integrate with Slack/Teams for high-impact findings

Run compliance audits and generate evidence reports

The Bottom Line: Don't Choose, Combine

Static analysis remains unbeatable for deterministic guarantees: compliance audits, memory safety proofs, exhaustive coverage. But modern teams shouldn't treat this as either/or. Hybrid analysis is the default strategy, pairing static rigor with AI's contextual intelligence that prioritizes real risks, explains complex issues, and suggests fixes developers actually want to merge.

Your Next Steps

Audit compliance requirements – Identify which standards require deterministic SAST coverage

Baseline current static analysis – Measure false positive rates and document ignored rule categories

Define gating vs. advisory rules – Reserve CI blocking for critical issues; let AI handle prioritization

Pilot hybrid analysis – Pick 2-3 active repos and measure review time reduction after 2 weeks

Integrate authoritative references – Cross-reference findings against OWASP SAST guidelines and CWE/CERT standards

CodeAnt AI's hybrid engine runs both deterministic security scans and AI-powered contextual review in every pull request, giving you compliance-grade coverage with intelligent prioritization that improves merge velocity. Start a 14-day free trial and run it on your most active repos. No credit card required.