Code reviews are leverage: they catch defects early, spread context, and keep quality high as codebases grow. Done poorly, the code review process stalls releases and drains developer productivity. The fix isn’t “more review”; it’s better review: fast, focused, and data-driven.

Why they matter

Catch issues before they become outages.

Share knowledge and raise the team’s baseline.

Improve maintainability and long-term velocity.

Where teams go wrong

Oversized PRs and rubber-stamp approvals.

Slow first responses and endless back-and-forth.

Humans nitpicking what tools could auto-fix.

What this guide covers (5 steps)

Set clear review objectives and standards (code health > perfection).

Keep reviews small, focused, and frequent.

Automate the basics with linters, CI, security scanning, and AI code review tools.

Write constructive, contextual feedback.

Continuously improve the process with metrics, iteration, and mentorship.

Follow these code review best practices to raise quality without sacrificing speed: keep humans on judgment, let automation handle the rest, and ship cleaner code faster.

Step 1: Establish Clear Objectives and Standards for Code Reviews

The first step to conducting effective code reviews is clarity. Every developer, author or reviewer, should know why reviews happen and what good looks like. Without shared objectives, reviews turn into opinion battles instead of quality checks.

Start by defining the primary goals of your code review process:

Maintainability & readability: Does this change make the codebase cleaner and easier to understand?

Security & compliance: Does it meet internal and external standards (e.g., SOC 2, OWASP Top 10)?

Architecture & design adherence: Does it align with your system’s design principles and long-term structure?

That said: approve a change once it improves overall code health, even if it isn’t perfect.

The goal is continuous improvement, not nitpicking over commas. For minor non-blocking issues, reviewers often use a “Nit:” prefix, a small signal that feedback is optional, not a blocker.

To make reviews consistent and objective, document a checklist or rubric tied to your team’s Definition of Done. Example items could include:

Names follow your project’s style guide.

Every new function has unit tests and clear documentation.

No critical security vulnerabilities or exposed secrets.

A shared checklist turns reviews from personal opinions into a standardized quality gate, something you can improve and automate later through AI code review tools like CodeAnt AI.
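One way to make such a checklist automatable is to store it as data rather than prose. The sketch below is only an illustration: the `ChecklistItem` type, the `unmet_blockers` helper, and the choice of which items block a merge are assumptions, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChecklistItem:
    description: str
    blocking: bool  # True if an unchecked item should block the merge


# Hypothetical rubric mirroring the example items above.
# Which items count as blocking is an assumption for illustration.
CHECKLIST = [
    ChecklistItem("Names follow the project's style guide", blocking=False),
    ChecklistItem("Every new function has unit tests and clear documentation", blocking=True),
    ChecklistItem("No critical security vulnerabilities or exposed secrets", blocking=True),
]


def unmet_blockers(checked: set[str]) -> list[str]:
    """Return the blocking items that the reviewer has not checked off."""
    return [
        item.description
        for item in CHECKLIST
        if item.blocking and item.description not in checked
    ]
```

A PR would be mergeable under this rubric only when `unmet_blockers(...)` returns an empty list; non-blocking items can still be reported as suggestions.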

Finally, measure how your process performs. Track metrics such as:

Time-in-review: How long does a pull request wait for feedback or merge?

Defect density: How many issues are caught during review versus after release?

Developer productivity metrics: Review throughput, PR size, and reviewer load.

These insights reveal where reviews get stuck and how they impact velocity. Many high-performing teams target an average review turnaround under one business day, balancing speed with depth.
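As a rough sketch of how time-in-review could be measured, the snippet below computes the median wait for a first review and lists PRs that missed a 24-hour target. The sample timestamps and function names are hypothetical, and the calculation uses wall-clock hours rather than business hours, which a real report would probably want to adjust.

```python
from datetime import datetime
from statistics import median


def hours_to_first_review(opened_at: datetime, first_review_at: datetime) -> float:
    """Wall-clock hours between opening a PR and its first review."""
    return (first_review_at - opened_at).total_seconds() / 3600


# Hypothetical sample data: (opened_at, first_review_at) pairs.
SAMPLE_PRS = [
    (datetime(2025, 3, 3, 9, 0), datetime(2025, 3, 3, 11, 30)),  # 2.5 hours
    (datetime(2025, 3, 3, 14, 0), datetime(2025, 3, 4, 10, 0)),  # 20 hours
    (datetime(2025, 3, 4, 9, 0), datetime(2025, 3, 6, 9, 0)),    # 48 hours
]


def median_turnaround_hours(prs) -> float:
    """Median hours to first review across a list of PRs."""
    return median(hours_to_first_review(opened, reviewed) for opened, reviewed in prs)


def slow_prs(prs, limit_hours: float = 24.0):
    """PRs whose first review took longer than the target (assumed: 24 hours)."""
    return [
        (opened, reviewed)
        for opened, reviewed in prs
        if hours_to_first_review(opened, reviewed) > limit_hours
    ]
```

The median is used instead of the mean so that one very slow PR does not hide an otherwise healthy turnaround.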

By setting clear objectives, measurable standards, and transparent metrics, you build a data-driven code review culture that consistently improves code quality and developer experience.

Step 2: Keep Code Reviews Small, Focused, and Frequent

One of the most impactful code review best practices is keeping reviews small and focused. Big, 2,000-line pull requests rarely get deep attention; they’re exhausting to review and easy to skim past. Industry data, most often cited from SmartBear’s study of code reviews at Cisco, suggests reviewers are most effective on changes of roughly 200 to 400 lines of code, with defect detection dropping sharply beyond that.

Smaller PRs mean:

Better accuracy: Reviewers catch subtle logic and design flaws they’d miss in a large diff.

Faster feedback loops: Authors get quicker responses, reducing merge delays.

Lower cognitive load: Reviewers stay focused and consistent, improving code health overall.

Think of each review as a single, clear unit of intent: one bug fix, one feature, or one code refactor.

Practical ways to keep reviews small:

Commit early, commit often: Push incremental updates behind feature flags instead of massive end-of-week commits.

Stack PRs: Break large features into small, self-contained branches that build on each other.

Separate refactors from new logic: Submit refactoring work as its own PR so the review scope stays tight and clear.

Use limits: Many teams set boundaries like ≤ 400 LOC or ≤ 1 hour of review time per PR; if a change exceeds that, split it.
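A size limit like this can be checked mechanically. The following sketch parses the tab-separated output of `git diff --numstat` (added lines, deleted lines, path) and compares the total against a limit. The function names and the 400-line default are illustrative assumptions; how the diff text is obtained in CI is left out.

```python
def changed_lines(numstat_output: str) -> int:
    """Sum added and deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat_output.splitlines():
        parts = line.split("\t")
        if len(parts) < 3:
            continue  # skip blank or malformed lines
        added, deleted = parts[0], parts[1]
        if added == "-" or deleted == "-":
            continue  # git prints "-" for binary files; they have no line counts
        total += int(added) + int(deleted)
    return total


def exceeds_limit(numstat_output: str, max_lines: int = 400) -> bool:
    """True if the change is larger than the team's limit (assumed: 400 lines)."""
    return changed_lines(numstat_output) > max_lines
```

For example, the output of `git diff --numstat main...HEAD` could be passed in as a string, and a CI job could post a "consider splitting this PR" note when `exceeds_limit` returns true.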

Smaller, frequent reviews make the code review process feel like a natural rhythm, not a chore. Reviewers stay engaged, developers iterate faster, and the team’s overall developer productivity rises.

If your team uses an AI code review tool like CodeAnt AI, it becomes even easier: the AI can pre-scan each PR, flag risks, and summarize changes instantly, helping reviewers stay fast and accurate without burnout.

The takeaway: big code changes slow down progress; small reviews build momentum.

Step 3: Automate the Basics with Tools and Save Human Effort

Great code reviews keep human attention on design, correctness, and long-term maintainability. Everything else? Automate it. Why? So reviewers can focus on what matters, boosting code quality and developer productivity.

Linters & formatters (baseline hygiene)

Enforce style and catch simple defects automatically (ESLint, Pylint, Prettier, Black).

Run in pre-commit/CI; fail fast so nitpicks never reach a reviewer.

Outcome: fewer trivial comments, cleaner diffs, tighter code review process.

Automated tests & CI (don’t review what CI can prove)

Execute the full test suite on every PR; surface breakage before humans review code.

Track coverage thresholds; consider mutation testing for critical modules.

Treat a red build as non-reviewable; save human cycles for logic and architecture.

Security scanning (shift-left safety)

Add SAST/secret scanners/dependency checks to PRs to flag OWASP-class issues, insecure configs, and leaked credentials.

Use policies (e.g., block on critical severity; warn on medium) for consistent, auditable enforcement.
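A severity policy of this kind can be expressed in a few lines. The sketch below assumes findings have already been normalized to a hypothetical `Severity` scale; treating high-severity findings as warnings rather than blockers is an assumption, since the policy above only names critical and medium.

```python
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


def evaluate(findings: list[Severity]) -> str:
    """Map scanner findings to a merge decision: 'block', 'warn', or 'pass'."""
    if any(severity >= Severity.CRITICAL for severity in findings):
        return "block"  # at least one critical finding stops the merge
    if any(severity >= Severity.MEDIUM for severity in findings):
        return "warn"   # medium or high: merge allowed, but flagged (assumption for HIGH)
    return "pass"
```

Keeping the policy in one small function makes the decision auditable: the same inputs always produce the same outcome, regardless of who reviews the PR.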

Platform workflow (make the path easy)

Use inline comments, suggested changes, side-by-side diffs, and PR checklists (tests, docs, risk notes).

Require passing checks and required reviewers before merge; integrate notifications (chat/email) for async teams.

AI co-reviewer (first pass at machine speed)

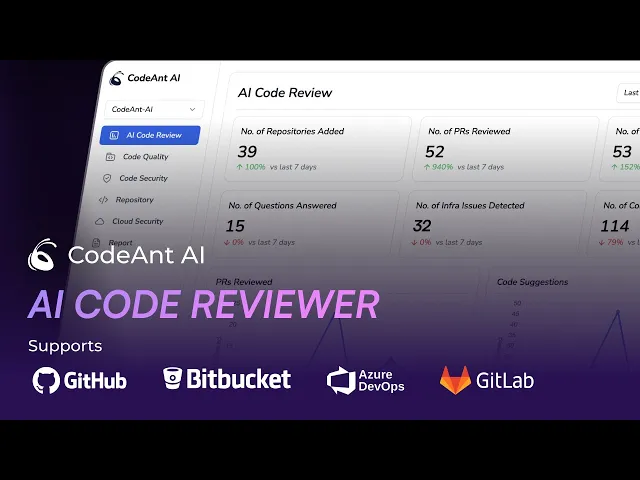

In 2025, AI code review tools like CodeAnt AI act as an always-on reviewer:

Instant PR summary + risk hotspots (complexity, duplication, churn, test gaps).

Security/quality findings with one-click fixes for common issues (null checks, safe codemods, dedupes).

Tunable signal-to-noise so only high-impact items reach humans.

Result: humans spend time on architecture, edge cases, and trade-offs—not on spacing, renames, or obvious defects.

Why this matters (the payoff)

Faster merges, fewer escaped defects, and consistent standards across teams.

Reviewers stop acting like linters; they add real judgment.

Teams report large cuts in manual review time when AI handles the first pass, freeing capacity without lowering the bar.

Bottom line: Automate everything repeatable. Let AI code review handle the first pass and policy gates. Keep people on judgment, not janitorial work, and your code review best practices will scale with your codebase.

Step 4: Provide Constructive and Contextual Feedback (Focus on the Code, Not the Coder)

Great code reviews are collaborative, not confrontational. Keep comments respectful, specific, and anchored in shared standards so the author knows what to change and why, and the team keeps momentum.

Related read: Give Code Review Feedback Without Pushback (2025)

Lead with empathy, aim for progress

Start from good intent and invite dialogue.

Instead of: “Why did you do it this way?”

Try: “I see the intent. Would extracting a helper here simplify the flow?”

Be specific and actionable

Vague: “Needs work.”

Concrete: “This function does parsing and DB writes, let’s split into parseInvoice() and persistInvoice() to improve testability.”

Offer examples/snippets or a failing test when helpful. Clarity shortens review cycles.
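A snippet attached to such a comment might look like the sketch below, written here in Python with snake_case equivalents of the two functions. The `Invoice` fields, the comma-separated input format, and the `InvoiceStore` interface are all hypothetical and only show the shape of the split.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Protocol


@dataclass(frozen=True)
class Invoice:
    invoice_id: str
    amount_cents: int


class InvoiceStore(Protocol):
    def save(self, invoice: Invoice) -> None: ...


def parse_invoice(raw: str) -> Invoice:
    """Pure parsing of a hypothetical 'ID,amount' line. No I/O, so it is easy to unit test."""
    invoice_id, amount = raw.strip().split(",")
    # Decimal avoids float rounding surprises when converting currency to cents.
    return Invoice(invoice_id=invoice_id.strip(), amount_cents=int(Decimal(amount.strip()) * 100))


def persist_invoice(invoice: Invoice, store: InvoiceStore) -> None:
    """Persistence only: the store is injected, so tests can pass in a fake."""
    store.save(invoice)


class InMemoryStore:
    """Test double standing in for a real database."""

    def __init__(self) -> None:
        self.saved: list[Invoice] = []

    def save(self, invoice: Invoice) -> None:
        self.saved.append(invoice)
```

With the split in place, parsing can be tested with plain strings, and persistence can be tested with `InMemoryStore` without touching a database.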

Keep a friendly, collaborative tone

Use “we” language and options, not commands:

“What do you think about handling the edge case with a strategy pattern?”

“Could we add a guard here to prevent null deref on customer?”

Anchor feedback in principles and data

Reference standards to de-personalize decisions:

“Per our style guide, avoid globals for maintainability.”

“OWASP recommends validating this input; an allowlist here would mitigate XSS.”

“This query is O(n²) with expected sizes, index on created_at should keep it under our SLA.”

Separate blockers from suggestions

Mark minor items as non-blocking (e.g., prefix with “Nit: …”). Reserve blocks for correctness, security, and maintainability. This keeps the code review process fast and high-signal.
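If a team follows the “Nit:” convention consistently, tooling can separate the two kinds of feedback. This is a minimal sketch under that assumption; the `is_blocking` and `split_comments` helpers are hypothetical and simply look at the comment prefix.

```python
def is_blocking(comment: str) -> bool:
    """A comment is non-blocking if it starts with 'Nit:' (case-insensitive)."""
    return not comment.lstrip().lower().startswith("nit:")


def split_comments(comments: list[str]) -> tuple[list[str], list[str]]:
    """Return (blocking, non_blocking) comments, preserving their original order."""
    blocking = [c for c in comments if is_blocking(c)]
    non_blocking = [c for c in comments if not is_blocking(c)]
    return blocking, non_blocking
```

A summary such as "2 blocking, 5 nits" at the top of a review helps the author see at a glance what must change before merge.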

Keep it a two-way conversation

Ask clarifying questions; authors may have context you don’t. If a thread gets long, do a quick call and summarize the decision in the PR for traceability.

Use AI to enrich, not replace, your feedback

AI code review tools (e.g., CodeAnt AI) can surface risk hotspots, complexity, and missing tests; cite those insights to add objectivity (“AI flagged high complexity here—splitting the method drops it below our threshold.”). You still provide judgment and trade-offs.

Positive reinforcement matters

Call out wins (“Great use of the Strategy pattern, this will make adding new channels trivial.”). Recognition builds trust and sets examples worth repeating.

(Remember: The purpose is to improve the codebase, not to prove who’s right. Everyone is on the same side, building great software.)

Step 5: Continuously Improve the Code Review Process

A great code review process isn’t static; it evolves with your team, your tooling, and your codebase. Continuous improvement ensures that reviews stay fast, fair, and effective as complexity grows.

1. Track key metrics and find bottlenecks

Use data to see how your reviews actually perform. Watch:

Review turnaround time – how long PRs wait for first feedback and merge.

Number of review cycles per PR – fewer loops usually mean clearer feedback.

PR size and merge rate – smaller PRs and faster merges correlate with better velocity.

Map these metrics to DORA indicators (Lead Time for Changes, Deployment Frequency). If PRs stall, investigate: reviewer overload, unclear ownership, or overly complex modules.
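To look beyond averages, it can help to report a tail percentile alongside the typical case. The sketch below computes the 75th percentile of time in review and the average number of review cycles per PR. The function names are assumptions, and the input lists are expected to come from your own PR data.

```python
from statistics import mean, quantiles


def p75(values: list[float]) -> float:
    """75th percentile using linear interpolation; needs at least two data points."""
    # quantiles(..., n=4) returns the three quartile cut points; index 2 is the 75th percentile.
    return quantiles(values, n=4, method="inclusive")[2]


def average_review_cycles(cycles_per_pr: list[int]) -> float:
    """Mean number of review round trips per PR."""
    return mean(cycles_per_pr)
```

A rising 75th percentile with a flat median usually means a subset of PRs is getting stuck, which points to the reviewer-overload or ownership questions above rather than a team-wide slowdown.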

2. Optimize for both speed and quality

Slow reviews kill momentum. Meta’s engineering team reported that time in review for the slowest quartile of changes (the 75th percentile) correlated with lower developer satisfaction, so long waits cost both morale and throughput.

Rotate reviewers or assign “review buddies” to prevent single-person bottlenecks.

Batch review time: block 2–3 focused sessions daily to clear queues.

Balance load automatically with reviewer suggestions or rotation policies.

This creates predictable flow and minimizes context switching, a big win for developer productivity.
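Load balancing can be as simple as assigning each new PR to the eligible reviewer with the fewest open reviews. The sketch below assumes a hypothetical `open_reviews` mapping of reviewer name to current queue size; real platforms offer their own assignment features, which this does not attempt to reproduce.

```python
def pick_reviewer(open_reviews: dict[str, int], author: str) -> str:
    """Pick the reviewer with the smallest queue, never the PR author."""
    candidates = {name: count for name, count in open_reviews.items() if name != author}
    if not candidates:
        raise ValueError("no eligible reviewer other than the author")
    # Sorting names first makes ties deterministic (alphabetical order).
    return min(sorted(candidates), key=candidates.get)
```

For instance, with queues of `{"ana": 3, "bo": 1, "cy": 1}` and a PR authored by `bo`, the function returns `cy`.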

3. Iterate based on data

Regular retrospectives help tune your code review best practices:

Are reviews catching major issues or just nits?

Are turnaround times improving or slipping?

Do certain areas keep producing post-release bugs?

Use insights to tweak checklists, automate repetitive rules, or refine AI scan thresholds. For instance, if you find that 70% of delays come from test coverage discussions, let your CI gate enforce coverage automatically so humans focus on logic, not thresholds.
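A coverage gate of this kind reduces to a single comparison. The sketch below is a minimal illustration: the `coverage_gate` name and the 80% default threshold are assumptions, and the line counts would come from whatever coverage tool your CI already runs.

```python
def coverage_gate(covered_lines: int, total_lines: int, threshold: float = 0.80) -> bool:
    """Return True if coverage meets the threshold (assumed default: 80%)."""
    if total_lines == 0:
        return True  # nothing measurable changed, so there is nothing to enforce
    return covered_lines / total_lines >= threshold
```

Once the gate is enforced in CI, the coverage discussion moves out of review threads: the build either passes or it does not.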

4. Encourage knowledge sharing and rotation

Mix reviewers to prevent silos. Have juniors review senior code (with mentorship) to spread context and surface fresh perspectives. Pair reviews or small-group reviews on critical modules foster learning and collaboration.

This cross-pollination strengthens collective ownership and resilience.

5. Mentor and upskill reviewers

Code reviewing is a skill. Offer training, shadow sessions, or “model review” walkthroughs showing great comments and tone. Recognize reviewers who provide high-signal, empathetic feedback; they set the cultural tone. Maintain an internal “review playbook” that outlines etiquette, tone, and quality standards.

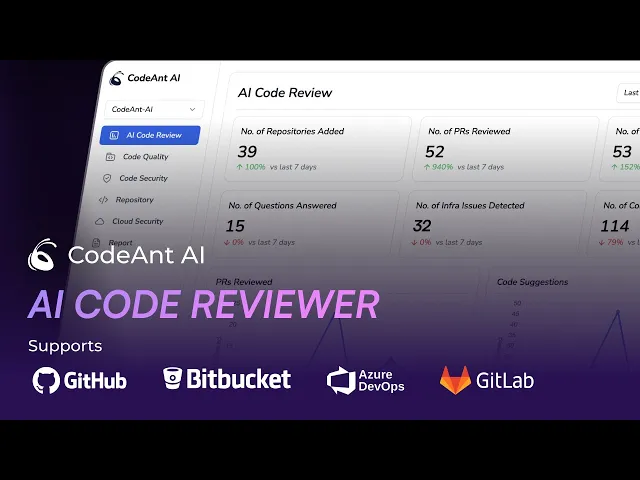

AI-assisted iteration

AI tools like CodeAnt AI can make continuous improvement measurable. CodeAnt’s analytics dashboard tracks PR cycle time, reviewer workload, test coverage shifts, and even identifies recurring bottlenecks.

You can see which teams review fastest, where merge delays happen, and which modules need cleanup. These insights feed your next round of process tweaks, turning intuition into hard data.

The bottom line: A high-performing code review culture doesn’t emerge from rules alone; it’s maintained by measurement, iteration, and mentorship. When you review the process as rigorously as you review the code, you build a team that learns faster, ships faster, and writes cleaner software.

Continuous feedback → Continuous improvement → Continuous delivery. That’s the CodeAnt AI way. 🚀

Ship Cleaner Code Faster with CodeAnt AI Code Review

Code reviews, when executed with discipline and care, become a powerful accelerant rather than a drag on development. They ensure code quality and security standards are upheld before code hits production, saving countless hours of firefighting later. They also serve as a forum for mentoring and sharing knowledge, which increases the collective expertise of the team.

By following these five steps, your team can make code reviews both efficient and impactful. The payoff is visible in the outcomes. Adopt these best practices and you’ll turn code reviews from a perceived hurdle into a competitive advantage for your engineering organization.

Try CodeAnt AI as your co-reviewer today, free for 14 days.

Happy reviewing!
