SWE-Bench scores dominate conversations about LLM coding ability. A model hits 50% on the leaderboard, and suddenly it's "ready for production." But here's the thing: passing tests on popular open-source repositories doesn't mean the model will perform well on your private codebase.

The benchmark uses real GitHub issues to evaluate bug-fixing ability, which makes it more realistic than older tests like HumanEval. It also has blind spots: memorization, security gaps, and zero coverage of enterprise codebases. This guide breaks down where SWE-Bench actually predicts real-world performance, where it falls short, and how to evaluate AI coding tools beyond the leaderboard.

What Is SWE-Bench and Why It Matters

SWE-Bench approximates real-world LLM performance by evaluating models on actual GitHub issues and the pull requests that resolved them. Models have to navigate large codebases and fix real bugs, which makes the benchmark relevant to daily development work. However, SWE-Bench can overstate capabilities because of data contamination (models recalling solutions seen during training rather than reasoning through problems), and it doesn't measure code quality or security.

For engineering teams evaluating AI coding tools, SWE-Bench offers a useful signal. But it's only one piece of the puzzle.

The problem SWE-Bench was designed to solve

Earlier benchmarks like HumanEval tested isolated coding tasks. Write a function, return the correct output. That's useful for measuring basic code synthesis, but it doesn't reflect how developers actually work.

Real software engineering involves understanding sprawling codebases, tracking down bugs across multiple files, and producing patches that pass existing test suites. SWE-Bench fills this gap by drawing from actual GitHub issues in popular open-source Python repositories. The result is a benchmark that looks a lot more like the bug-fixing work your team does every day.

How SWE-Bench differs from HumanEval and MBPP

| Benchmark | Task Type | Codebase Context | Real-World Relevance |
|---|---|---|---|
| HumanEval | Single-function generation | None | Low |
| MBPP | Basic programming problems | None | Low |
| SWE-Bench | Bug fixing from GitHub issues | Full repository | High |

HumanEval and MBPP measure whether a model can write correct code in isolation. SWE-Bench measures whether it can operate within a real project, which is a much harder test.

SWE-Bench Verified vs Lite vs Full

The benchmark comes in several variants:

SWE-Bench Full: The complete test set of 2,294 task instances drawn from 12 popular Python repositories

SWE-Bench Lite: A curated 300-task subset focused on self-contained functional bug fixes, designed for faster and cheaper evaluation

SWE-Bench Verified: A 500-task subset screened by human annotators to remove underspecified issues and unreliable tests, so each remaining task has a clear, achievable solution

SWE-Bench Verified matters most for serious evaluation because it filters out ambiguous issues and faulty tests.

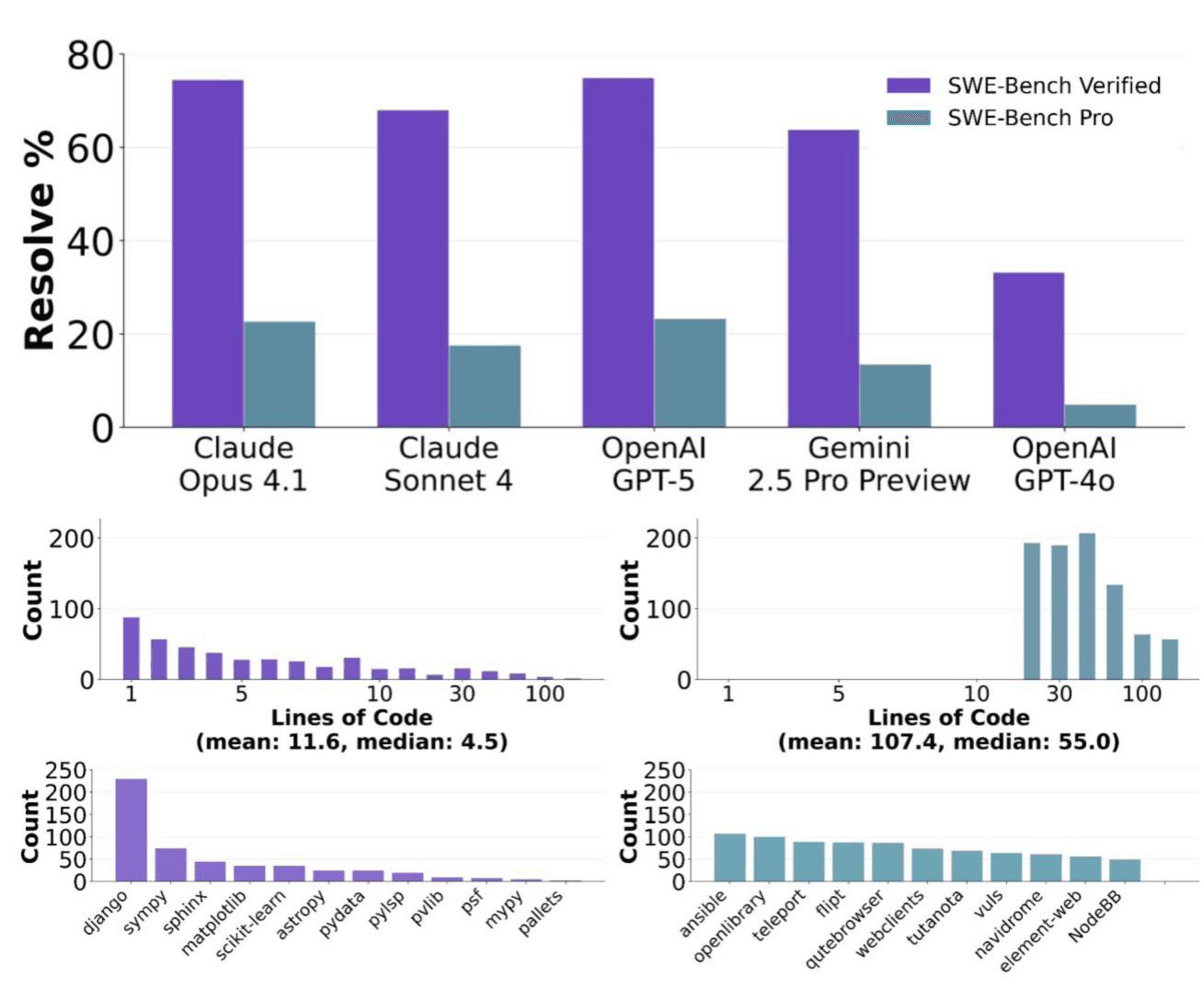

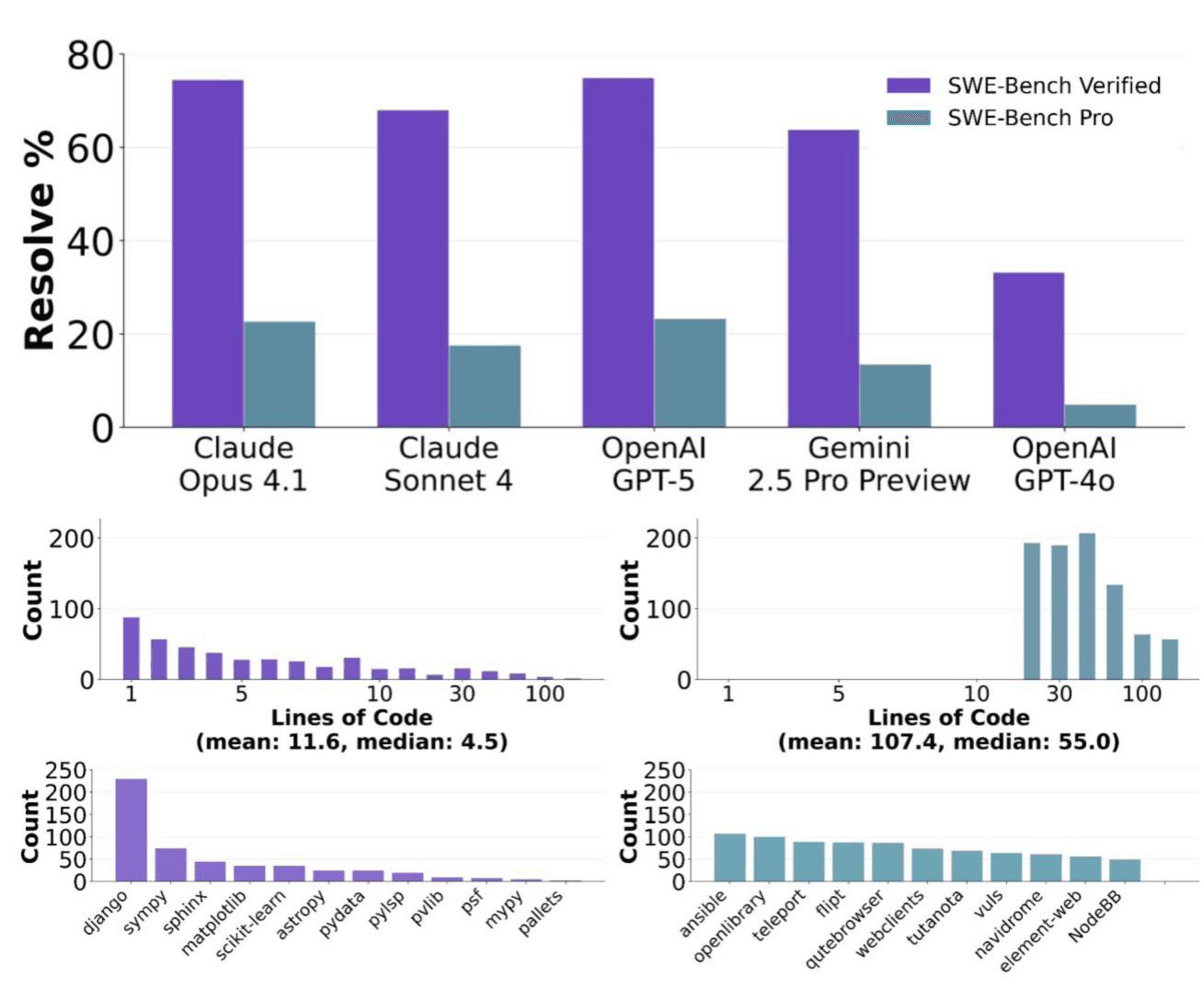

How SWE-Bench Pro Raises the Bar Beyond Vanilla SWE-Bench

SWE-Bench Pro, introduced by Scale AI in 2025, targets many of the limitations that surfaced as models began saturating earlier benchmark variants. Where the original SWE-Bench measures whether a model can go from issue to patch to passing tests, Pro adds structural safeguards and task design choices that make it a more discriminative and realistic benchmark for modern AI agents.

Three-way dataset split for contamination resistance

One of the biggest challenges with any public benchmark is training data leakage. SWE-Bench Pro explicitly tackles this by splitting tasks across three tiers: public repositories, held-out private GPL repositories reserved to prevent overfitting, and commercial codebases sourced from real startups. The commercial tier is never released publicly; only evaluation results are shared. This makes memorization far less effective as a strategy and improves confidence that scores reflect reasoning, not recall.

Long-horizon, non-trivial tasks by construction

Unlike earlier splits that include small or localized fixes, Pro filters out trivial issues by design. Tasks typically require substantial multi-file changes, with the paper reporting an average of roughly 107 lines of code across just over four files. This shifts the benchmark away from “find the obvious bug” and toward the kind of extended reasoning and coordination real engineering work demands.
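To make "trivial" concrete, here is a minimal sketch of a size-based filter over git-style unified diffs. It counts the files a reference patch touches and the lines it adds or removes, then keeps only tasks above a threshold. The helper names and the 10-line cutoff are illustrative assumptions, not the exact filtering criteria from the SWE-Bench Pro paper.

```python
from dataclasses import dataclass


@dataclass
class PatchStats:
    files: int
    lines_changed: int


def patch_stats(diff_text: str) -> PatchStats:
    """Count files touched and lines added or removed in a git-style unified diff."""
    files = 0
    changed = 0
    in_hunk = False
    for line in diff_text.splitlines():
        if line.startswith("diff --git "):
            files += 1
            in_hunk = False  # header lines (index, ---, +++) come next
        elif line.startswith("@@"):
            in_hunk = True
        elif in_hunk and line[:1] in ("+", "-"):
            changed += 1
    return PatchStats(files=files, lines_changed=changed)


def is_non_trivial(diff_text: str, min_lines: int = 10) -> bool:
    """Hypothetical filter: keep a task only if its reference patch changes min_lines or more."""
    return patch_stats(diff_text).lines_changed >= min_lines


SAMPLE = """\
diff --git a/pkg/a.py b/pkg/a.py
index 1111111..2222222 100644
--- a/pkg/a.py
+++ b/pkg/a.py
@@ -1,2 +1,2 @@
-x = 1
+x = 2
 y = 3
diff --git a/pkg/b.py b/pkg/b.py
index 3333333..4444444 100644
--- a/pkg/b.py
+++ b/pkg/b.py
@@ -5,1 +5,2 @@
 z = 0
+w = 4
"""

print(patch_stats(SAMPLE))     # PatchStats(files=2, lines_changed=3)
print(is_non_trivial(SAMPLE))  # False under the illustrative 10-line threshold
```

Tracking whether the parser is inside a hunk keeps the `---`/`+++` file headers from being miscounted as changed lines.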

Human augmentation to make tasks resolvable

SWE-Bench Pro uses a human-in-the-loop workflow to clarify ambiguous issues and add missing context without simplifying the underlying challenge. This reduces failure modes where agents fail simply because an issue is underspecified, allowing the benchmark to better measure true problem-solving ability rather than prompt interpretation luck.

Stronger verification through improved test recovery

Test quality is a quiet but critical factor in any benchmark. Pro introduces mechanisms to recover or strengthen unit tests so that correct fixes are less likely to fail due to flaky, missing, or overly strict verifiers. This reduces false negatives and makes pass rates more meaningful as signals of actual correctness.
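A common way to reduce false negatives from flaky verifiers is to rerun each test several times against the reference fix and keep only tests with a stable result. The sketch below assumes tests are plain Python callables returning True or False; it illustrates the general technique and is not SWE-Bench Pro's documented procedure.

```python
import itertools
from typing import Callable, Dict, List

TestFn = Callable[[], bool]


def classify_tests(tests: Dict[str, TestFn], runs: int = 5) -> Dict[str, str]:
    """Run each test `runs` times and label it stable-pass, stable-fail, or flaky."""
    labels = {}
    for name, fn in tests.items():
        outcomes = {fn() for _ in range(runs)}
        if outcomes == {True}:
            labels[name] = "stable-pass"
        elif outcomes == {False}:
            labels[name] = "stable-fail"
        else:
            labels[name] = "flaky"
    return labels


def usable_verifiers(tests: Dict[str, TestFn], runs: int = 5) -> List[str]:
    """Keep only tests that pass every time against the reference fix."""
    labels = classify_tests(tests, runs)
    return [name for name, label in labels.items() if label == "stable-pass"]


# Deterministic stand-in for a flaky test: alternates pass, fail, pass, ...
alternating = itertools.cycle([True, False])
tests = {
    "test_ok": lambda: True,
    "test_broken": lambda: False,
    "test_flaky": lambda: next(alternating),
}
print(usable_verifiers(tests))  # ['test_ok']
```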

Broader, enterprise-style repository mix

Instead of focusing heavily on a small set of popular open-source libraries, SWE-Bench Pro draws problems from 41 actively maintained repositories spanning business applications, B2B software, and developer tools. This diversity better reflects the kinds of systems engineering teams actually run, rather than just the most commonly forked Python projects on GitHub.

Harder and more discriminative today

Even under a unified evaluation scaffold, strong models and agents remain far from solving Pro. Baseline results reported in the paper show pass@1 rates below 25%, making Pro significantly more discriminative than easier variants like Verified. As a result, Pro currently does a better job of separating incremental capability gains from benchmark saturation effects.
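Pass@1 is the chance that a single attempt solves a task. When several attempts are sampled per task, a widely used unbiased estimator for pass@k, introduced alongside HumanEval (Chen et al., 2021), is 1 - C(n - c, k) / C(n, k), where n is the number of attempts and c the number that passed. A minimal Python sketch follows; how a given leaderboard samples attempts is an assumption you should check against its own reporting rules.

```python
from math import comb
from typing import Iterable, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one task: n attempts sampled, c of them passed."""
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Any k attempts must include at least one passing attempt.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: Iterable[Tuple[int, int]], k: int) -> float:
    """Average pass@k over (n, c) pairs, one pair per task."""
    scores = [pass_at_k(n, c, k) for n, c in results]
    return sum(scores) / len(scores)


# One attempt per task: pass@1 is simply the fraction of tasks solved.
print(mean_pass_at_k([(1, 1), (1, 0), (1, 0), (1, 1)], k=1))  # 0.5
```

With a single attempt per task, the estimator collapses to the plain resolve rate, which is why pass@1 and "% resolved" are often used interchangeably.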

The table below summarizes how SWE-Bench Pro compares with earlier benchmarks across task type, codebase scope, contamination resistance, task complexity, and enterprise relevance.

| Benchmark | Primary Task Type | Codebase Scope | Contamination Resistance | Task Complexity | Enterprise Relevance |
|---|---|---|---|---|---|
| HumanEval | Function generation | None | Low | Low | Very Low |
| MBPP | Basic programming problems | None | Low | Low | Very Low |
| SWE-Bench | Issue-based bug fixing | Popular OSS repos | Medium | Medium | Limited |
| SWE-Bench Verified | Bug fixing with validated tasks | Popular OSS repos | Medium | Medium | Limited |
| SWE-Bench Pro | Long-horizon issue resolution | OSS + private + commercial | High | High | Higher (still partial) |

SWE-Bench Pro is a meaningful step forward, but it still evaluates models in isolation from the review workflows, security constraints, and organizational standards that define real-world engineering environments.

How SWE-Bench Evaluates LLM Coding Performance

Understanding what a SWE-Bench score actually measures helps you interpret leaderboard results and recognize their limits.

Real GitHub issues as test cases

Each task comes from an actual pull request merged into a popular open-source Python repository. The model receives the issue description and repository context, then attempts to locate and fix the problem. This mirrors how developers approach bug reports in practice.

Bug localization and patch generation tasks

The challenge has two parts. First, the model identifies which files and functions contain the bug. Then it generates a code patch that resolves the issue. Both steps require understanding the codebase structure, not just writing syntactically correct code.
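Localization can be scored separately from patch correctness. A minimal sketch, assuming both the model's patch and the reference (gold) patch are git-style diffs: extract the files each one touches and compute file-level precision and recall. The helper names and the precision/recall framing are illustrative; the official SWE-Bench harness scores only the final test outcome.

```python
import re
from typing import Set, Tuple

# Matches git diff headers such as "diff --git a/src/mod.py b/src/mod.py".
# Simplification: paths containing spaces are not handled.
DIFF_HEADER = re.compile(r"^diff --git a/(\S+) b/(\S+)$", re.MULTILINE)


def touched_files(diff_text: str) -> Set[str]:
    """Post-change paths of every file a git-style diff modifies."""
    return {m.group(2) for m in DIFF_HEADER.finditer(diff_text)}


def localization_scores(model_diff: str, gold_diff: str) -> Tuple[float, float]:
    """File-level (precision, recall) of the model's edits against the gold patch."""
    predicted = touched_files(model_diff)
    gold = touched_files(gold_diff)
    hits = len(predicted & gold)
    precision = hits / len(predicted) if predicted else 0.0
    recall = hits / len(gold) if gold else 0.0
    return precision, recall


# Hunk bodies omitted; only the headers matter for localization.
gold = (
    "diff --git a/app/models.py b/app/models.py\n"
    "diff --git a/app/views.py b/app/views.py\n"
)
model = (
    "diff --git a/app/models.py b/app/models.py\n"
    "diff --git a/app/utils.py b/app/utils.py\n"
)
print(localization_scores(model, gold))  # (0.5, 0.5)
```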

Pass rate scoring and leaderboard rankings

The pass rate (reported on the leaderboard as "% Resolved") is the percentage of tasks where the model's patch passes all associated tests. Leaderboards rank models by this metric. A model scoring 40% on SWE-Bench Verified resolves 200 of its 500 tasks, at least as judged by the test suite.
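In the SWE-Bench dataset, "associated tests" has a specific shape: each task lists FAIL_TO_PASS tests, which fail before the reference fix and must pass after the patch, and PASS_TO_PASS tests, which must keep passing. The sketch below applies that rule to score a run; the TaskResult class is a hypothetical container for illustration, not the harness's own data format.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TaskResult:
    """Hypothetical per-task record of test outcomes after applying a model's patch."""
    instance_id: str
    fail_to_pass: Dict[str, bool] = field(default_factory=dict)
    pass_to_pass: Dict[str, bool] = field(default_factory=dict)

    def resolved(self) -> bool:
        # Every targeted test must now pass, and no previously passing test may regress.
        return (
            bool(self.fail_to_pass)
            and all(self.fail_to_pass.values())
            and all(self.pass_to_pass.values())
        )


def resolve_rate(results: List[TaskResult]) -> float:
    """Percentage of tasks resolved."""
    if not results:
        return 0.0
    return 100.0 * sum(r.resolved() for r in results) / len(results)


runs = [
    TaskResult("demo-1", {"test_bug": True}, {"test_existing": True}),
    TaskResult("demo-2", {"test_bug": True}, {"test_existing": False}),  # regression
    TaskResult("demo-3", {"test_bug": False}, {"test_existing": True}),  # bug not fixed
    TaskResult("demo-4", {"test_bug": True}, {}),
]
print(resolve_rate(runs))  # 50.0
```

The PASS_TO_PASS check is what stops a patch from "fixing" a bug by breaking something else, which a single targeted test would miss.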

Where SWE-Bench Predicts Real-World LLM Performance

High SWE-Bench scores do correlate with practical coding ability in certain scenarios. Here's where the benchmark genuinely predicts performance.

Bug fixing and issue resolution

Models that score well on SWE-Bench typically handle straightforward bug fixes effectively. If your team uses AI assistants for triaging and patching known issues, benchmark performance offers a reasonable signal.

Codebase navigation and context understanding

SWE-Bench tests a model's ability to read and understand large codebases. This skill transfers to real development work. Models that struggle here will likely struggle when you ask them to work with your repositories.

Multi-file code changes

Some tasks require coordinated edits across more than one file or function, so benchmark results offer a signal about how well an LLM handles interconnected changes. This matters for feature development and refactoring tasks, not just isolated fixes.

Where SWE-Bench Falls Short

Now for the critical limitations. The gaps below explain why you can't rely on benchmark scores alone when selecting AI coding tools.

Memorization vs genuine problem-solving

Recent research suggests LLMs may recall solutions from training data rather than reason through problems. This happens when benchmark tasks and their fixes leak into training data, a problem known as benchmark contamination, and it inflates scores without reflecting true capability. A model might "solve" an issue simply because it saw the fix during training.

Repository bias toward popular open-source projects

SWE-Bench draws from well-known repositories like Django, Flask, and scikit-learn. Popular codebases are likely overrepresented in LLM training data. Performance on familiar projects doesn't guarantee performance on your private codebase with custom frameworks and internal conventions.

No evaluation of code quality or security

SWE-Bench only checks if tests pass. It doesn't assess whether the code is maintainable, secure, or follows best practices. A patch that introduces a security vulnerability still counts as a success if the tests pass. For enterprise teams, this blind spot matters enormously.
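To make this blind spot concrete, here is a small sketch using Python's built-in sqlite3 module. Both versions of the hypothetical find_user function pass the same functional test, but the first builds its SQL with string formatting and is open to injection. A grader that only checks test outcomes would score them identically.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is interpolated directly into the SQL string.
    query = f"SELECT email FROM users WHERE name = '{name}'"
    return [row[0] for row in conn.execute(query)]


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized query: the driver treats `name` strictly as data.
    return [row[0] for row in conn.execute(
        "SELECT email FROM users WHERE name = ?", (name,))]


conn = make_db()
# The functional test a benchmark might run: both versions pass it.
assert find_user_unsafe(conn, "alice") == ["alice@example.com"]
assert find_user_safe(conn, "alice") == ["alice@example.com"]

# A crafted input exposes the difference the tests never checked.
payload = "x' OR '1'='1"
print(find_user_unsafe(conn, payload))  # leaks every email in the table
print(find_user_safe(conn, payload))    # []
```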

Limited coverage of enterprise and private codebases

The benchmark cannot predict LLM performance on proprietary code, internal frameworks, or languages beyond Python. If your stack includes Java, TypeScript, or Go, SWE-Bench scores tell you less than you might hope.

Evidence That LLMs Memorize SWE-Bench Solutions

Microsoft researchers and others have investigated whether high scores reflect genuine reasoning or memorization. The findings are sobering.

File path identification experiments

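Researchers tested whether models could identify the correct file paths to modify from the issue description alone, without access to the repository structure. Some models succeeded at rates suggesting they had memorized repository structures from training data, and their accuracy dropped noticeably on repositories outside SWE-Bench.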
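Results from this kind of experiment come down to a comparison of accuracies: how often a model names the right file for tasks from benchmark repositories versus repositories the benchmark never used. A minimal sketch, assuming you already have each model's predicted path and the gold paths per task; the record layout is hypothetical and the data below is a toy input, not experimental results.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, Iterable, Tuple

# Hypothetical record layout: (group, predicted_path, gold_paths).
# `group` separates tasks from benchmark repositories and from outside repositories.
Record = Tuple[str, str, FrozenSet[str]]


def path_accuracy_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Share of tasks per group where the predicted path is one of the gold paths."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for group, predicted, gold_paths in records:
        totals[group] += 1
        hits[group] += int(predicted in gold_paths)
    return {group: hits[group] / totals[group] for group in totals}


# Toy inputs for illustration only, not experimental results.
records = [
    ("benchmark", "repo_a/core/query.py", frozenset({"repo_a/core/query.py"})),
    ("benchmark", "repo_b/utils/io.py", frozenset({"repo_b/utils/io.py"})),
    ("external", "repo_c/billing/invoice.py", frozenset({"repo_c/billing/tax.py"})),
    ("external", "repo_d/auth/session.py", frozenset({"repo_d/auth/session.py"})),
]
accuracy = path_accuracy_by_group(records)
gap = accuracy["benchmark"] - accuracy["external"]
print(accuracy, gap)  # {'benchmark': 1.0, 'external': 0.5} 0.5
```

A large, consistent gap between the two groups, with no access to repository structure in either, is the memorization signal; a small gap is more consistent with genuine reasoning from the issue text.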

Function reproduction tests

In another experiment, models were asked to reproduce function implementations given only the issue description and the surrounding file context. High verbatim overlap with the original code pointed to potential training data leakage rather than reasoning ability.
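One way to quantify reproduction is token n-gram overlap between a model's output and the original function. The sketch below computes the fraction of the reference's 5-grams (over whitespace-separated tokens) that also appear in the model's output. Whitespace tokenization and n = 5 are illustrative choices; the researchers' exact metric may differ.

```python
from typing import List, Set, Tuple


def ngrams(tokens: List[str], n: int) -> Set[Tuple[str, ...]]:
    """All contiguous n-token windows, as a set."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def ngram_overlap(reference: str, generated: str, n: int = 5) -> float:
    """Fraction of the reference's n-grams that also appear in the generated code."""
    ref = ngrams(reference.split(), n)
    gen = ngrams(generated.split(), n)
    if not ref:
        return 0.0
    return len(ref & gen) / len(ref)


reference = "def area(r):\n    return 3.14159 * r * r"
verbatim = "def area(r):\n    return 3.14159 * r * r"
rewritten = "def area(r):\n    return math.pi * r ** 2"
print(ngram_overlap(reference, verbatim))   # 1.0
print(ngram_overlap(reference, rewritten))  # 0.0
```

A functionally equivalent rewrite scores low while a verbatim copy scores high, which is why high overlap on benchmark functions is treated as a leakage signal rather than a correctness signal.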

Benchmark contamination research findings

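Multiple studies have found evidence of memorization, particularly for older, widely circulated issues. Data contamination occurs when test data appears in a model's training set, causing inflated scores. SWE-Bench Verified improves task quality by screening out underspecified issues and unreliable tests, but its tasks come from the same public repositories, so it does not solve contamination. Designs like SWE-Bench Pro's held-out and commercial sets target that problem more directly.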

How SWE-Bench Compares to Other LLM Coding Benchmarks

SWE-Bench isn't the only benchmark worth watching. Here's how it fits into the broader landscape.

HumanEval for function-level code generation

HumanEval tests isolated function writing. It's useful for measuring basic code synthesis but lacks the complexity of real software engineering. Think of it as a baseline, not a ceiling.

MBPP for basic programming problems

MBPP (Mostly Basic Programming Problems) evaluates simple programming tasks. It serves as another baseline but doesn't test codebase navigation or multi-file changes.

Agentic benchmarks for tool use and automation

Newer agentic benchmarks test how well LLMs use external tools, browse documentation, and carry out multi-step tasks. They come closer to how AI coding assistants actually operate and may prove more predictive for production use cases.

How to Evaluate LLM Coding Tools Beyond Benchmarks

Benchmark scores provide a starting point. Here's how to go further when selecting AI code review or coding assistant tools.

1. Test on your private codebase

Run candidate tools against your actual repositories. Performance on open-source Python projects doesn't guarantee results on your proprietary code, internal frameworks, or polyglot stack.
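A minimal harness sketch for a private-codebase trial, assuming each task gives you a git checkout of your repository, a candidate patch from the tool, and your own test command, and that git is on the PATH. The helpers apply_patch, run_tests, and evaluate_candidate are hypothetical names; a real pilot would also reset the checkout between tasks and log outputs.

```python
import subprocess
import sys
from typing import Sequence


def apply_patch(repo_dir: str, patch_text: str) -> bool:
    """Apply a unified diff from stdin with `git apply`; False if it does not apply."""
    proc = subprocess.run(
        ["git", "apply", "--whitespace=nowarn", "-"],
        cwd=repo_dir,
        input=patch_text,
        text=True,
        capture_output=True,
    )
    return proc.returncode == 0


def run_tests(repo_dir: str, test_cmd: Sequence[str], timeout_s: int = 900) -> bool:
    """Run the project's own test command; exit code 0 counts as passing."""
    try:
        proc = subprocess.run(
            list(test_cmd), cwd=repo_dir, capture_output=True, timeout=timeout_s
        )
    except subprocess.TimeoutExpired:
        return False  # treat hangs as failures
    return proc.returncode == 0


def evaluate_candidate(repo_dir: str, patch_text: str, test_cmd: Sequence[str]) -> bool:
    """A task passes only if the patch applies cleanly and the tests then pass."""
    return apply_patch(repo_dir, patch_text) and run_tests(repo_dir, test_cmd)


# Smoke-test the runner alone with the current interpreter.
print(run_tests(".", [sys.executable, "-c", "pass"]))  # True
```

For a real task, test_cmd might be something like ["python", "-m", "pytest", "tests/"]; passing the command as a list avoids shell quoting issues.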

2. Measure code quality and maintainability metrics

Track complexity, duplication, and maintainability. Benchmarks ignore all of this. Platforms like CodeAnt AI surface code quality metrics automatically, giving you visibility into whether AI-generated code meets your standards.
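Dedicated tools measure this more thoroughly, but a rough cyclomatic-complexity proxy needs only Python's standard ast module: start at 1 and add one per decision point. The set of counted nodes below is a simplified assumption, not the exact rule set of any particular linter, so treat the numbers as relative rather than absolute.

```python
import ast
from typing import Dict

# Nodes that add a decision point in this simplified McCabe-style count.
BRANCH_NODES = (ast.If, ast.IfExp, ast.For, ast.AsyncFor, ast.While,
                ast.ExceptHandler, ast.comprehension)


def complexity(func: ast.AST) -> int:
    """Approximate cyclomatic complexity: 1 plus one per decision point.

    Nested functions are walked too, so their branches count toward the parent.
    """
    score = 1
    for node in ast.walk(func):
        if isinstance(node, BRANCH_NODES):
            score += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two short-circuit paths.
            score += len(node.values) - 1
    return score


def complexity_report(source: str) -> Dict[str, int]:
    """Map each function defined in `source` to its approximate complexity."""
    tree = ast.parse(source)
    return {
        node.name: complexity(node)
        for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    }


SAMPLE = '''
def simple(x):
    return x + 1

def branchy(items, flag):
    total = 0
    for item in items:
        if item > 0 and flag:
            total += item
        elif item < 0:
            total -= 1
    return total
'''
print(complexity_report(SAMPLE))  # {'simple': 1, 'branchy': 5}
```

Running a report like this on AI-generated patches versus human-written ones in the same repository gives a quick, comparable signal that pass/fail benchmarks never capture.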

3. Assess security vulnerability detection

Evaluate whether the tool catches security issues, secrets, and misconfigurations. SWE-Bench doesn't test this at all, yet it's critical for enterprise environments with compliance requirements.
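As a minimal illustration of secret detection at the diff level, the sketch below scans only the added lines of a unified diff against two rules: long-term AWS access key IDs (the AKIA prefix followed by 16 uppercase letters or digits) and a hypothetical hard-coded-password heuristic. The sample key is the example value from AWS documentation; production scanners use far larger rule sets plus entropy checks.

```python
import re
from typing import List, Tuple

RULES = {
    # Long-term AWS access key IDs: "AKIA" plus 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Hypothetical heuristic: a quoted literal of 6+ chars assigned to a password-like name.
    "hardcoded_password": re.compile(
        r"(?i)(password|passwd|secret)\w*\s*[:=]\s*['\"][^'\"]{6,}['\"]"
    ),
}


def scan_added_lines(diff_text: str) -> List[Tuple[int, str]]:
    """Return (line number in the diff, rule name) for each added line matching a rule."""
    findings = []
    for lineno, line in enumerate(diff_text.splitlines(), start=1):
        # Only added content matters; skip removed lines, context, and the +++ header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


DIFF = """\
--- a/settings.py
+++ b/settings.py
@@ -1,1 +1,3 @@
 DEBUG = False
+AWS_ACCESS_KEY_ID = "AKIAIOSFODNN7EXAMPLE"
+db_password = "hunter2hunter2"
"""
print(scan_added_lines(DIFF))  # [(5, 'aws_access_key_id'), (6, 'hardcoded_password')]
```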

4. Evaluate review accuracy on real pull requests

Pilot AI code review tools on actual PRs. Measure false positive rates, actionable suggestions, and developer satisfaction. Real-world performance on your team's workflow matters more than any leaderboard position.
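Defining the metrics before the pilot keeps results comparable across tools. A minimal sketch, assuming developers label each AI review comment as accepted (acted on) or dismissed: the accepted share is precision, and the dismissed share is what tools usually call the false positive rate, though strictly it is a false discovery rate. The labels and field names are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class ReviewComment:
    pr_id: int
    label: str  # hypothetical labels: "accepted" (acted on) or "dismissed"


def review_metrics(comments: Iterable[ReviewComment]) -> Dict[str, float]:
    """Precision and dismissed share of AI review comments labeled during a pilot."""
    counts = Counter(c.label for c in comments)
    labeled = counts["accepted"] + counts["dismissed"]
    if labeled == 0:
        raise ValueError("no labeled comments")
    return {
        "precision": counts["accepted"] / labeled,
        # Often called the false positive rate; strictly it is a false discovery rate.
        "dismissed_share": counts["dismissed"] / labeled,
    }


pilot = [
    ReviewComment(101, "accepted"),
    ReviewComment(101, "dismissed"),
    ReviewComment(102, "accepted"),
    ReviewComment(103, "accepted"),
]
print(review_metrics(pilot))  # {'precision': 0.75, 'dismissed_share': 0.25}
```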

👉 Try CodeAnt AI to see how AI-driven reviews perform on your codebase, not just benchmarks.

What SWE-Bench Scores Mean for AI-Powered Code Review

SWE-Bench provides a useful signal, but it's not the whole story. The benchmark measures bug-fixing ability on familiar open-source projects. It doesn't assess security scanning, quality enforcement, or organization-specific standards.

When evaluating AI coding tools, treat SWE-Bench scores as one data point among many. A model that tops the leaderboard might still miss security vulnerabilities, generate unmaintainable code, or struggle with your private repositories.

CodeAnt AI combines AI-driven reviews with static analysis, security scanning, and quality metrics in a single platform. Instead of relying on benchmark performance alone, you get visibility into what actually matters: clean, secure, maintainable code.

Ready to see real-world performance? Book your 1:1 with our experts today!


