AI Code Review

Feb 6, 2026

How Developers Learn to Trust AI Code Review Suggestions

Sonali Sood

Founding GTM, CodeAnt AI

An AI code reviewer flags a potential bug in your PR. Your first reaction isn't relief; it's skepticism. Is this real, or just noise? Does it understand our architecture, or is it pattern-matching generic rules?

That hesitation is the "trust tax": the productivity lost to manually verifying AI suggestions because the tool can't explain its reasoning or show that it understands your codebase. Here's the disconnect: 81% of developers report quality improvements with AI code review, yet most teams treat AI suggestions like output from a junior engineer: potentially useful, but requiring full verification before merge.

The gap isn't accuracy. It's context. When AI analyzes diffs in isolation without understanding your repo's architecture, team standards, or historical patterns, even correct suggestions feel like guesswork. And guesswork doesn't ship to production.

This guide breaks down how developers actually learn to trust AI code review: a measurable progression from skepticism to confident reliance. You'll learn the three stages of trust-building, why context-awareness matters more than accuracy alone, and how to accelerate your team's journey from "verify everything" to "ship with confidence" in weeks, not months.

The Trust Gap: Why Developer Skepticism Is Rational

Developer skepticism toward AI suggestions isn't an irrational fear of automation; it's a calibration problem rooted in risk management and verification cost.

The trust equation is simple: will this AI catch issues I'd miss without flooding me with false positives that waste more time than manual review would?

Here's what's actually happening when developers say they "don't trust AI review":

Black-box reasoning: AI flags a potential bug but doesn't explain why it matters in your specific codebase. You're left manually tracing data flows to verify whether the suggestion is relevant or noise.

False positive fatigue: Tools that analyze diffs in isolation generate suggestions that look plausible but ignore your team's architectural patterns, creating noise that trains you to ignore alerts.

Standards mismatch: Generic AI models don't know your org's coding conventions, security policies, or compliance requirements, so suggestions feel like advice from someone who's never read your engineering handbook.

These aren't vibes-based concerns. They're measurable friction points that slow PR velocity and erode confidence in automation.

The Trust Tax: When Verification Costs More Than the Insight

Every AI suggestion carries an implicit cost: the time developers spend verifying whether it's worth acting on. Consider the typical workflow:

AI flags a potential null pointer dereference in a service method

Developer opens the PR but lacks context: Is this a real risk? Does this code path execute in production? What's the blast radius?

Developer spends 10-15 minutes tracing data flows, checking call sites, reviewing logs

Conclusion: the flag was technically correct but operationally irrelevant; the method is only called with validated inputs from an upstream service

Multiply this by 20-30 AI comments per PR, and teams waste 15+ hours per week manually verifying suggestions that should have been self-evident.
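The trust-tax arithmetic is easy to reproduce with your own numbers. The sketch below is a back-of-the-envelope calculator using the low end of the ranges above (10 minutes for each of 20 comments); the volume of five PRs per week is an illustrative assumption, not a measured figure.

```python
def weekly_verification_hours(minutes_per_comment: float,
                              comments_per_pr: int,
                              prs_per_week: int) -> float:
    """Hours per week spent verifying AI review comments."""
    return minutes_per_comment * comments_per_pr * prs_per_week / 60


# Low end of the ranges above: 10 minutes for each of 20 comments.
# PRS_PER_WEEK is an illustrative assumption, not a measured figure.
PRS_PER_WEEK = 5

diff_only = weekly_verification_hours(10, 20, PRS_PER_WEEK)
# Same volume, but each comment verifiable in about 30 seconds.
context_aware = weekly_verification_hours(0.5, 20, PRS_PER_WEEK)

print(f"{diff_only:.1f} h/week vs {context_aware:.1f} h/week")
# 16.7 h/week vs 0.8 h/week
```

Even at the low end of both ranges, five PRs a week is enough to pass 15 hours.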

Why context-awareness eliminates the trust tax:

CodeAnt AI analyzes your entire repository, not just the diff. When it flags that null pointer issue, it also shows:

The three upstream call sites and their input validation logic

Similar patterns in other services where this was a production incident

Team-specific standards (e.g., "We always validate at the API boundary, not in business logic")

The developer sees the why immediately. Verification time drops from 15 minutes to 30 seconds.

What Developers Actually Mean By "I Don't Trust AI"

When senior engineers push back, they're objecting to tool design choices that create friction:

| Developer Objection | Root Cause | CodeAnt Solution |
|---|---|---|
| "It's a black box, I can't explain suggestions to my team" | No reasoning transparency | AI reasoning panel shows detection logic, references similar patterns, links to team standards |
| "Too many false positives, I've learned to ignore it" | Diff-only analysis lacks codebase context | Full-repo understanding filters noise; 80% reduction in manual review effort |
| "It doesn't understand our architecture" | Generic models trained on public code | Team-specific learning adapts to org patterns, coding standards, historical decisions |
| "I spend more time verifying than reviewing" | Missing context forces manual investigation | Inline explanations with codebase-specific examples accelerate verification |

These aren't AI problems; they're trust design problems. Many tools optimize for coverage (flag everything) rather than signal (flag what matters). The result? Developers tune out, and adoption stalls at 30-40% suggestion acceptance.

CodeAnt AI flips this: instead of maximizing alerts, we maximize actionable insights. Teams report 95%+ suggestion acceptance after 30 days because context is built-in, reasoning is transparent, and false positives are negligible.

The Three Stages of Learning to Trust AI Code Review

Trust isn't a switch you flip; it's a journey developers take as they move from skeptical verification to confident reliance.

Stage 1 – Verification: "Show Me It Works on My Code"

Developers treat AI suggestions like output from a junior engineer: potentially useful, but requiring thorough validation before merge. This skepticism is healthy; it's the same instinct that prevents shipping untested code.

What developers do:

Manual testing every suggestion: Run the code locally, check edge cases, verify the AI's reasoning

Demand reproducible evidence: Need to see the data flow, understand the context, trace the issue to root cause

Cross-reference with documentation: Compare against internal standards and architectural decision records

Treat AI comments as hypotheses: Validate through peer review and automated tests

The trust-building artifacts that matter:

At this stage, developers need transparent reasoning more than raw accuracy. A 95% accurate suggestion without explanation loses to an 85% accurate suggestion with clear justification and codebase-specific examples.

CodeAnt AI's AI reasoning panel shows:

Why the issue was flagged: "This variable is null in 3 similar patterns across your auth service, causing 12% of login failures in production"

Codebase-specific context: Links to similar code patterns in your repo

Fix rationale with trade-offs: Why the suggested approach works for your stack, including performance implications

Week 1-2 behavior shift:

Developers move from "I need to test everything" to "I can spot-check high-risk areas because the AI shows its work." When developers can independently verify AI reasoning, trust compounds faster than with black-box suggestions.

Stage 2 – Validation: "Prove It Catches What Humans Miss"

Verification answers "does this work?"; validation answers "does this add value?" Developers stop comparing AI to junior engineers and start evaluating it as a specialized reviewer with pattern recognition across the entire codebase.

What developers look for:

Incremental value beyond human review: Catching integration bugs, architectural anti-patterns, security gaps that slip through manual review

Cross-service context awareness: Identifying issues requiring understanding of multiple repos or historical changes

Systemic issue detection: Flagging patterns that repeat across the codebase

The context gap that kills validation:

Generic AI tools analyze diffs in isolation, missing architectural context. A suggestion to "add input validation" means nothing if the AI doesn't know your API gateway already handles sanitization upstream.

CodeAnt AI's full-repository analysis provides context that diff-only tools lack:

Issue: Redundant database query in user service

Context:

- Similar pattern in 3 other services (auth, billing, notifications)

- Causes 40% of P95 latency spikes in production

- Team standard: cache user lookups for 5 minutes

Suggestion: Add Redis cache layer with TTL=300s

Impact: Reduces query load by 60%, improves P95 by 200ms
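The suggestion in this example, a cache layer with a 300-second TTL, is the cache-aside pattern. The sketch below shows that pattern with a small in-memory class standing in for Redis so it runs without a server; `TTLCache`, `get_user`, and the `user:<id>` key format are hypothetical names. With the redis-py client, the write would typically be `r.set(key, payload, ex=300)`, where `ex` sets the expiry in seconds.

```python
import time
from typing import Callable, Dict, Optional, Tuple

USER_CACHE_TTL_SECONDS = 300  # the example's team standard: 5 minutes


class TTLCache:
    """In-memory stand-in for a Redis cache with per-key expiry."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store: Dict[str, Tuple[float, dict]] = {}

    def get(self, key: str) -> Optional[dict]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: evict and report a miss
            return None
        return value

    def set(self, key: str, value: dict) -> None:
        self._store[key] = (self.clock() + self.ttl, value)


def get_user(user_id: str, cache: TTLCache,
             load_from_db: Callable[[str], dict]) -> dict:
    """Cache-aside lookup: try the cache first, fall back to the database."""
    key = f"user:{user_id}"
    user = cache.get(key)
    if user is None:
        user = load_from_db(user_id)
        cache.set(key, user)
    return user


# Usage with a fake clock and a fake database that records each query.
now = [0.0]
cache = TTLCache(USER_CACHE_TTL_SECONDS, clock=lambda: now[0])
db_calls = []


def fake_db(user_id: str) -> dict:
    db_calls.append(user_id)
    return {"id": user_id}


get_user("42", cache, fake_db)  # miss: queries the database
get_user("42", cache, fake_db)  # hit: served from cache
now[0] = 301.0                  # just past the 5-minute TTL
get_user("42", cache, fake_db)  # expired: queries the database again
print(len(db_calls))            # 2
```

Injecting the clock keeps the expiry logic testable without sleeping for five minutes.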

Week 3-4 behavior shift:

Developers transition from "I'll verify this suggestion" to "I trust this suggestion because it understands our system." When AI catches a critical bug that slipped through human review, trust grows quickly.

Stage 3 – Confidence: "I Trust It Enough to Ship"

Confidence doesn't mean blind trust; it means conditional reliance, where developers know which suggestions to accept immediately and which require human judgment.

What confidence looks like:

Automated enforcement for routine checks: Teams configure CodeAnt to block PRs with security vulnerabilities, test coverage below 70%, or code complexity above configured thresholds

Selective human review for high-stakes code: Developers still manually review auth logic, payment flows, infrastructure changes, but trust AI for everything else

AI suggestions become team norms: New engineers learn coding standards through AI feedback
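A blocking quality gate like the one described above reduces to a few threshold checks. The sketch below is not CodeAnt's configuration format: the `PRReport` fields and the complexity limit of 15 are assumptions, and only the 70% coverage floor comes from the text.

```python
from dataclasses import dataclass
from typing import List

MIN_COVERAGE = 0.70   # from the text: block PRs below 70% test coverage
MAX_COMPLEXITY = 15   # hypothetical complexity threshold; tune per team


@dataclass
class PRReport:
    """Hypothetical summary of the checks run on one pull request."""
    security_vulnerabilities: int
    test_coverage: float          # fraction of lines covered, 0.0 to 1.0
    max_function_complexity: int  # e.g. highest cyclomatic complexity in the diff


def gate_failures(report: PRReport) -> List[str]:
    """Return the reasons to block the PR; an empty list means it may merge."""
    failures = []
    if report.security_vulnerabilities > 0:
        failures.append(
            f"security vulnerabilities found: {report.security_vulnerabilities}")
    if report.test_coverage < MIN_COVERAGE:
        failures.append(
            f"coverage {report.test_coverage:.0%} is below {MIN_COVERAGE:.0%}")
    if report.max_function_complexity > MAX_COMPLEXITY:
        failures.append(
            f"complexity {report.max_function_complexity} exceeds {MAX_COMPLEXITY}")
    return failures


print(gate_failures(PRReport(0, 0.82, 9)))  # []
print(gate_failures(PRReport(1, 0.65, 22)))
# ['security vulnerabilities found: 1', 'coverage 65% is below 70%', 'complexity 22 exceeds 15']
```

Returning every failure, rather than stopping at the first, gives the developer the full list in one review cycle.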

The trust infrastructure that enables confidence:

CodeAnt AI provides:

Audit trails: Every suggestion, acceptance, and override is logged, which is critical for SOC 2, ISO 27001, and NIST compliance

Quality gates with override policies: Define when AI can auto-merge vs. when human sign-off is required

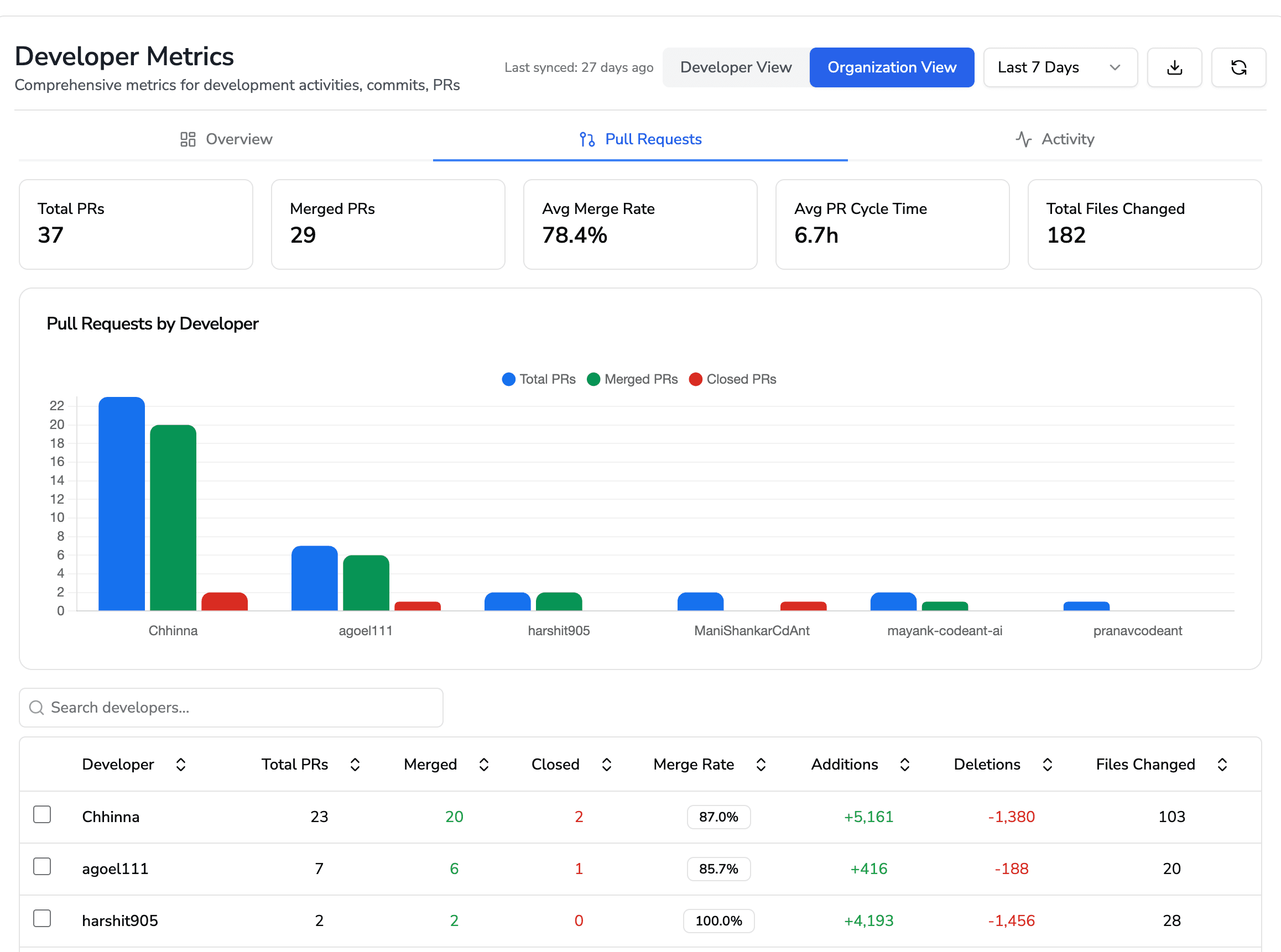

360° analytics dashboard: Tracks PR velocity, suggestion acceptance rates, post-merge incidents, and DORA metrics

The 30-day trust journey:

Week 1: Read-only mode on non-critical repos. Developers observe, validate against mental models. Acceptance: 40-60%.

Week 2: Opt-in enforcement for security checks. AI catches first integration bug human review missed. Acceptance: 60-75%.

Week 3: Expand to all repos. Developers rely on AI for routine checks. Acceptance: 75-85%.

Week 4: Auto-merge policies for low-risk changes. AI feedback becomes the first line of review. Acceptance: 85-95%.
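The week-by-week plan can be tracked with a small lookup table. The sketch below encodes the acceptance ranges from the list above; the stage labels and the `assess_week` helper are hypothetical, and treating a below-range week as a signal to hold the current stage rather than expand is an assumption, not a rule from the text.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Stage:
    mode: str
    acceptance_range: Tuple[float, float]  # expected (low, high) acceptance rate


# Week-by-week plan and acceptance ranges from the 30-day journey above.
SCHEDULE: Dict[int, Stage] = {
    1: Stage("read-only on non-critical repos", (0.40, 0.60)),
    2: Stage("opt-in enforcement for security checks", (0.60, 0.75)),
    3: Stage("all repos, routine checks", (0.75, 0.85)),
    4: Stage("auto-merge for low-risk changes", (0.85, 0.95)),
}


def assess_week(week: int, accepted: int, total: int) -> str:
    """Compare observed acceptance with the expected range for that week."""
    if total == 0:
        raise ValueError("no suggestions recorded this week")
    low, high = SCHEDULE[week].acceptance_range
    rate = accepted / total
    if rate < low:
        return "behind"  # assumed policy: hold this stage, review noisy rules
    if rate > high:
        return "ahead"
    return "on track"


print(assess_week(1, 52, 100))  # on track
print(assess_week(3, 61, 100))  # behind
```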

Why Context-Awareness Builds Trust Faster Than Accuracy Alone

Developers don't distrust AI because it's wrong; they distrust it because it doesn't explain why it's right.

The Context Gap: When Accurate Suggestions Still Feel Like Noise

Your AI reviewer flags a potential null pointer dereference. Technically accurate. But here's what's missing:

Multi-service flow understanding: Does this method get called by three microservices, or is it deprecated?

Configuration-driven behavior: Is this null case actually impossible given your feature flags?

Shared library implications: Are there 12 other instances needing the same fix?

Security context: Is this user-facing with PII exposure risk?

Without this context, you're stuck doing archaeology: grepping for call sites, checking configs, reviewing similar patterns. That's 15-20 minutes for a two-line fix.

This is why diff-only analysis fails the trust test. Tools analyzing changed lines in isolation can't answer "does this matter to us?"

How Full-Repository Understanding Changes the Equation

Context-aware systems analyze your entire codebase, building a dependency graph and combining it with configuration and incident history to show how code behaves in production:

Example: Risky Change Detection

Diff-only tool: "This can return None, add a null check."

Context-aware system shows:

Call site analysis: Invoked in 47 places across 8 services

Critical paths: 12 call sites in payment processing with no null handling

Config correlation: Staging has ALLOW_ANONYMOUS=true, making nulls common in test but rare in prod

Historical incidents: Similar bugs caused 3 production incidents, avg MTTR 2.3 hours

Pattern matching: 5 other auth utilities raise exceptions instead of returning None
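Call-site analysis, the first item in that list, is the easiest to illustrate. The sketch below uses Python's standard `ast` module to find every call to a function by name across a set of source files. It is a deliberate simplification that assumes Python sources and matches on the bare function name; a real context-aware reviewer would also need to resolve imports, aliases, and other languages. The two service files are hypothetical.

```python
import ast
from typing import Dict, List, Optional, Tuple


def _callee_name(call: ast.Call) -> Optional[str]:
    """Name of the called function for `f(...)` or `module.f(...)` calls."""
    if isinstance(call.func, ast.Name):
        return call.func.id
    if isinstance(call.func, ast.Attribute):
        return call.func.attr
    return None


def find_call_sites(sources: Dict[str, str], func_name: str) -> List[Tuple[str, int]]:
    """Return sorted (file, line) pairs for every call to func_name."""
    sites = []
    for path, code in sources.items():
        for node in ast.walk(ast.parse(code)):
            if isinstance(node, ast.Call) and _callee_name(node) == func_name:
                sites.append((path, node.lineno))
    return sorted(sites)


# Two hypothetical services that both call auth.get_user.
sources = {
    "payments/charge.py": (
        "from auth import get_user\n"
        "\n"
        "def charge(token):\n"
        "    user = get_user(token)\n"
        "    return user.id\n"
    ),
    "billing/invoice.py": (
        "import auth\n"
        "\n"
        "def invoice(token):\n"
        "    user = auth.get_user(token)\n"
        "    if user is None:\n"
        "        return None\n"
        "    return user.id\n"
    ),
}

sites = find_call_sites(sources, "get_user")
services = {path.split("/")[0] for path, _ in sites}
print(f"{len(sites)} call sites across {len(services)} services")
# 2 call sites across 2 services
```

Note that the payments caller dereferences `user.id` with no `None` check: exactly the kind of critical-path gap the list describes.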

Now the suggestion isn't just "add a null check." It's "this is a high-risk inconsistency that has already caused outages, and here's how to align with your team's patterns."
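Aligning with that team pattern might look like the sketch below. `AuthenticationError`, `get_user`, and the in-memory session store are hypothetical stand-ins; the convention of raising instead of returning `None` is the one the example attributes to the other auth utilities.

```python
from dataclasses import dataclass
from typing import Dict, Optional


class AuthenticationError(Exception):
    """Raised when a request has no valid session (hypothetical team convention)."""


@dataclass
class User:
    id: str


SESSIONS: Dict[str, User] = {"token-abc": User("u1")}  # stand-in session store


# Before: returns None, so every caller must remember a null check.
def get_user_or_none(token: str) -> Optional[User]:
    return SESSIONS.get(token)


# After: raises like the other auth utilities, so a missing session fails
# loudly at the source instead of surfacing later as an AttributeError.
def get_user(token: str) -> User:
    user = SESSIONS.get(token)
    if user is None:
        raise AuthenticationError("no active session for token")
    return user


print(get_user("token-abc").id)  # u1
try:
    get_user("expired-token")
except AuthenticationError as exc:
    print(f"rejected: {exc}")  # rejected: no active session for token
```

The design choice is to move the failure to one place: callers on the payment path no longer need their own null handling.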

That's the difference between verification and validation. You're not testing whether the AI is right; you're confirming it understands your system.

Practical Playbook: Accelerating Trust in 30 Days

Trust is earned through systematic verification and measurable outcomes. This playbook provides a framework for rolling out AI code review while maintaining quality standards.

Phase 1: Establish the Baseline (Days 1–7)

Start by treating AI review as an observational layer, not an enforcement mechanism.

Implementation steps:

1. Deploy in read-only mode on low-risk repositories: Enable CodeAnt on internal tools or documentation repos, and configure it to comment without blocking merges.

2. Define risk categories and acceptance thresholds:

| Risk Category | Examples | Auto-apply? | Human Review? |
|---|---|---|---|
| Low | Formatting, unused imports | Yes (after observation) | No |
| Medium | Code smells, complexity warnings | No | Yes (spot-check) |
| High | Security vulnerabilities, architectural changes | No | Yes (mandatory) |
| Critical | Secrets exposure, SQL injection, auth bypasses | No | Yes (security sign-off) |

3. Require evidence for every suggestion: Configure CodeAnt to include test impact analysis, reproduction steps, and reference links to OWASP guidelines or internal docs.
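The risk table maps naturally onto a routing policy. The sketch below encodes it as data; the rule-category names are hypothetical examples drawn from the table's Examples column, and defaulting unknown categories to High risk is a conservative assumption rather than documented CodeAnt behavior.

```python
from enum import Enum
from typing import Dict, NamedTuple


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"
    CRITICAL = "critical"


class Policy(NamedTuple):
    auto_apply: bool   # in POLICIES: eligible once observation is complete
    human_review: str


# The risk table, encoded as data.
POLICIES: Dict[Risk, Policy] = {
    Risk.LOW: Policy(True, "none"),
    Risk.MEDIUM: Policy(False, "spot-check"),
    Risk.HIGH: Policy(False, "mandatory"),
    Risk.CRITICAL: Policy(False, "security sign-off"),
}

# Hypothetical rule categories mapped to the table's examples.
CATEGORY_RISK: Dict[str, Risk] = {
    "formatting": Risk.LOW,
    "unused-import": Risk.LOW,
    "code-smell": Risk.MEDIUM,
    "complexity": Risk.MEDIUM,
    "security-vulnerability": Risk.HIGH,
    "architecture": Risk.HIGH,
    "secret-exposure": Risk.CRITICAL,
    "sql-injection": Risk.CRITICAL,
    "auth-bypass": Risk.CRITICAL,
}


def route(category: str, observation_complete: bool) -> Policy:
    """Decide how a suggestion is handled; unknown categories default to HIGH."""
    risk = CATEGORY_RISK.get(category, Risk.HIGH)
    policy = POLICIES[risk]
    # Even low-risk fixes stay manual until the observation week is over.
    return Policy(policy.auto_apply and observation_complete, policy.human_review)


print(route("unused-import", observation_complete=True))
# Policy(auto_apply=True, human_review='none')
print(route("sql-injection", observation_complete=True).human_review)
# security sign-off
```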

Success metrics (Week 1):

Suggestion acceptance: >60% low-risk, >40% medium-risk

False positive rate: <20% overall

Time to verify: <2 min low-risk, <10 min high-risk
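The Week 1 targets can be computed from a simple log of suggestions. In the sketch below the `Suggestion` record is a hypothetical export format, not a CodeAnt schema; using the median for time-to-verify (so a few long investigations don't skew it) and treating missing data as a failed check are assumptions.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class Suggestion:
    risk: str              # "low", "medium", or "high"
    outcome: str           # "accepted", "rejected", or "false_positive"
    verify_minutes: float  # time a developer spent checking it


def acceptance_rate(items: List[Suggestion], risk: str) -> float:
    subset = [s for s in items if s.risk == risk]
    if not subset:
        return 0.0  # no data counts as a failed check
    return sum(s.outcome == "accepted" for s in subset) / len(subset)


def median_verify_minutes(items: List[Suggestion], risk: str) -> float:
    times = [s.verify_minutes for s in items if s.risk == risk]
    return median(times) if times else float("inf")  # no data fails the check


def week1_checks(items: List[Suggestion]) -> Dict[str, bool]:
    """Evaluate the Week 1 success metrics listed above."""
    if not items:
        raise ValueError("no suggestions logged")
    fp_rate = sum(s.outcome == "false_positive" for s in items) / len(items)
    return {
        "low-risk acceptance > 60%": acceptance_rate(items, "low") > 0.60,
        "medium-risk acceptance > 40%": acceptance_rate(items, "medium") > 0.40,
        "false positives < 20%": fp_rate < 0.20,
        "low-risk verify < 2 min": median_verify_minutes(items, "low") < 2,
        "high-risk verify < 10 min": median_verify_minutes(items, "high") < 10,
    }


log = [
    Suggestion("low", "accepted", 1.0),
    Suggestion("low", "accepted", 0.5),
    Suggestion("low", "rejected", 1.5),
    Suggestion("medium", "accepted", 4.0),
    Suggestion("medium", "false_positive", 6.0),
    Suggestion("high", "accepted", 8.0),
]
for check, passed in week1_checks(log).items():
    print(f"{'PASS' if passed else 'FAIL'}  {check}")
```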

Phase 2: Build Feedback Loops (Days 8–14)

Trust accelerates when developers see AI learning from their corrections.

Implementation steps:

Label false positives systematically

Add feedback directly in PR comments: 👍 Helpful | 👎 False Positive | 🤔 Needs Context

Tune rules based on team standards

Use the first week's data to customize CodeAnt:

Suppress noise: If 80% of a rule's warnings flag intentional code, disable that rule

Elevate signal: Add custom patterns for architectural violations

Capture exceptions: Document legitimate rule violations

Implement multi-signal checks

Layer static analysis, dependency scanning, secret detection, and context analysis
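A feedback tally like the one below turns the 👍/👎/🤔 labels into the "suppress noise" rule. It assumes the labels can be exported as `(rule_id, label)` pairs, treats a 👎 False Positive label as meaning the flagged code was intentional, and adds a hypothetical minimum sample size so a rule isn't disabled after two or three votes. The rule IDs are illustrative.

```python
from collections import Counter
from typing import Iterable, List, Tuple

SUPPRESS_THRESHOLD = 0.80  # from the text: 80% of a rule's warnings were intentional
MIN_SAMPLES = 10           # hypothetical: don't judge a rule on a handful of labels


def rules_to_suppress(labels: Iterable[Tuple[str, str]]) -> List[str]:
    """labels are (rule_id, label) pairs; label is 'helpful', 'false_positive', or 'needs_context'."""
    totals = Counter()
    false_positives = Counter()
    for rule_id, label in labels:
        totals[rule_id] += 1
        if label == "false_positive":
            false_positives[rule_id] += 1
    return sorted(
        rule for rule, n in totals.items()
        if n >= MIN_SAMPLES and false_positives[rule] / n >= SUPPRESS_THRESHOLD
    )


labels = (
    [("no-print-statements", "false_positive")] * 9
    + [("no-print-statements", "helpful")]
    + [("sql-injection", "helpful")] * 12
    + [("magic-number", "false_positive")] * 5  # too few labels to judge yet
)
print(rules_to_suppress(labels))  # ['no-print-statements']
```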

Success metrics (Week 2):

Feedback response rate: >50% developer engagement

Rule adjustments: 3-5 tuning changes per week

Multi-signal catch rate: 10-15% of issues caught by layered checks

Phase 3: Graduate to Conditional Enforcement (Days 15–21)

Shift from observation to selective enforcement.

Implementation steps:

1. Set quality gates for medium-risk issues: Require acknowledgment but don't block merges. Developer must refactor or justify complexity.

2. Require security team sign-off for critical findings before merge.
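The two enforcement rules in this phase can be expressed as a single merge check. In the sketch below, the `Finding` fields and rule names are hypothetical; high-severity findings are left out because this phase doesn't specify how they are handled.

```python
from dataclasses import dataclass
from typing import List, NamedTuple


@dataclass
class Finding:
    rule: str
    severity: str                   # "low", "medium", "high", or "critical"
    acknowledged: bool = False      # developer refactored or justified it
    security_signoff: bool = False  # security team approved it


class Decision(NamedTuple):
    can_merge: bool
    blockers: List[str]
    warnings: List[str]


def merge_decision(findings: List[Finding]) -> Decision:
    blockers, warnings = [], []
    for f in findings:
        if f.severity == "critical" and not f.security_signoff:
            blockers.append(f"{f.rule}: awaiting security sign-off")
        elif f.severity == "medium" and not f.acknowledged:
            # Medium-risk findings need acknowledgment but don't block the merge.
            warnings.append(f"{f.rule}: needs acknowledgment (refactor or justify)")
    return Decision(not blockers, blockers, warnings)


d = merge_decision([Finding("complexity", "medium"),
                    Finding("hardcoded-secret", "critical")])
print(d.can_merge, d.blockers)
# False ['hardcoded-secret: awaiting security sign-off']
```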

Success metrics (Week 3):

Auto-apply accuracy: >95%

Security review SLA: <4 hours for critical findings

Developer friction: "AI helps me ship faster" sentiment

Phase 4: Scale and Optimize (Days 22–30)

Prove trust translates to measurable outcomes.

Implementation steps:

Expand to production-critical repositories

Apply a phased rollout with tighter thresholds.

Establish weekly success reviews

Track PR merge time (target: -30%), post-merge incidents (target: -20%), and hours saved (target: 10+ per week).

Document the trust playbook

Capture what worked for future teams.
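The weekly review boils down to comparing each metric with its pre-rollout baseline. The sketch below reads the figures in parentheses as targets; the metric names and sample numbers are illustrative only.

```python
from typing import Dict

# Targets from the weekly success review above (negative = reduction).
TARGET_CHANGE = {"pr_merge_hours": -30.0, "post_merge_incidents": -20.0}
MIN_HOURS_SAVED = 10.0


def pct_change(baseline: float, current: float) -> float:
    return (current - baseline) / baseline * 100


def weekly_review(baseline: Dict[str, float], current: Dict[str, float],
                  hours_saved: float) -> Dict[str, bool]:
    """True for each metric that meets its target versus the pre-rollout baseline."""
    results = {
        name: pct_change(baseline[name], current[name]) <= target
        for name, target in TARGET_CHANGE.items()
    }
    results["hours_saved"] = hours_saved >= MIN_HOURS_SAVED
    return results


baseline = {"pr_merge_hours": 20.0, "post_merge_incidents": 10.0}
current = {"pr_merge_hours": 13.0, "post_merge_incidents": 7.0}
print(weekly_review(baseline, current, hours_saved=12))
# {'pr_merge_hours': True, 'post_merge_incidents': True, 'hours_saved': True}
```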

Measuring Trust Through Outcomes

Trust isn't about sentiment; it's about measurable impact on velocity, quality, and security.

Signal Quality Metrics

Suggestion acceptance rate, segmented by severity:

Critical security: 90%+ after 30 days (low acceptance signals false positives)

Architectural issues: 70-85% (CodeAnt's full-repo analysis detects integration bugs)

Code quality: 50-70% (selective acceptance shows critical thinking)

Track trends over time. If critical suggestions plateau below 80%, you have context gaps or noisy alerts.
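One way to make "plateau" concrete is a flat, sub-80% trend over the last few weeks. The three-week window and the 0.02 tolerance (two percentage points) below are assumptions to tune, not published thresholds.

```python
from typing import List

PLATEAU_FLOOR = 0.80  # from the text: critical acceptance stuck below 80% is a warning sign


def critical_plateaued(weekly_rates: List[float], window: int = 3,
                       tolerance: float = 0.02) -> bool:
    """True if the last `window` weekly acceptance rates for critical suggestions
    stayed within `tolerance` of each other while remaining below the floor."""
    if len(weekly_rates) < window:
        return False  # not enough history to call it a plateau
    recent = weekly_rates[-window:]
    is_flat = max(recent) - min(recent) < tolerance
    return is_flat and max(recent) < PLATEAU_FLOOR


print(critical_plateaued([0.55, 0.70, 0.74, 0.75, 0.75]))  # True
print(critical_plateaued([0.60, 0.70, 0.80, 0.90]))        # False
```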

Velocity Metrics

PR cycle time reduction:

Teams using CodeAnt see a 40-70% reduction after the verification stage completes. Track:

Review comment density: Human comments should decrease for routine PRs, concentrate on high-stakes decisions

Time-to-first-review: AI summaries and context reduce this metric

Quality Metrics

Post-merge indicators:

Rework rate: PRs requiring follow-up fixes within 7 days (should trend down)

Escaped vulnerabilities: Security issues in production that passed AI review (should approach zero)

Post-merge incidents: 30% reduction proves trust is justified

MTTR: AI-generated context reduces resolution time
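Of these, rework rate benefits most from a precise definition. The sketch below assumes each follow-up fix can be linked to the PR it repairs (for example, through a hypothetical "Fixes #101"-style reference), which is usually the hard part in practice; the PR IDs and dates are illustrative.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

REWORK_WINDOW = timedelta(days=7)  # from the text: follow-up fixes within 7 days


def rework_rate(merged_at: Dict[str, datetime],
                fixes: List[Tuple[str, datetime]]) -> float:
    """Share of merged PRs that needed a follow-up fix within the window.

    merged_at maps PR id -> merge time; fixes lists (original PR id, fix merge time).
    """
    if not merged_at:
        return 0.0
    reworked = {
        pr for pr, fixed_at in fixes
        if pr in merged_at
        and timedelta(0) <= fixed_at - merged_at[pr] <= REWORK_WINDOW
    }
    return len(reworked) / len(merged_at)


merged_at = {
    "101": datetime(2026, 1, 5),
    "102": datetime(2026, 1, 6),
    "103": datetime(2026, 1, 7),
    "104": datetime(2026, 1, 8),
}
fixes = [
    ("101", datetime(2026, 1, 9)),   # 4 days later: counts as rework
    ("103", datetime(2026, 1, 20)),  # 13 days later: outside the window
]
print(rework_rate(merged_at, fixes))  # 0.25
```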

The Trust Calibration Dashboard

CodeAnt's 360° analytics consolidate these into a single view:

| Metric Category | Key Indicator | Trust Signal |
|---|---|---|
| Signal Quality | Acceptance rate by severity | >85% critical, >70% architectural |
| Velocity | PR cycle time reduction | 40-70% improvement after 30 days |
| Quality | Post-merge rework rate | Trending down |
| Security | Escaped vulnerabilities | Near-zero for known classes |
| Code Health | Complexity & duplication trends | Decreasing |

Case Study: Akasa Air’s Trust-Building Adoption Journey with CodeAnt AI

Akasa Air, an Indian airline operating a large, distributed GitHub ecosystem, strengthened developer confidence in AI-assisted code review by adopting CodeAnt AI through a progressive, low-friction rollout across mission-critical aviation systems.

Rather than disrupting existing workflows, the team introduced CodeAnt AI in stages, allowing engineers to observe value, validate accuracy, and gradually rely on automated security and quality enforcement at scale.

Phase 1: Read-Only Observation

CodeAnt AI was introduced as an always-on code health layer inside GitHub, providing non-blocking feedback on security risks, secrets exposure, infrastructure misconfigurations, and code quality issues.

Developers were able to:

See AI findings directly in pull requests

Review flagged issues without merge disruption

Compare AI feedback against existing manual checks

Engineering leads used this phase to understand coverage consistency and identify where risks were concentrating across repositories, without changing day-to-day development flow.

Phase 2: Security-Focused Enforcement

As confidence grew, Akasa Air relied on CodeAnt AI’s automated detection to surface high-impact risks early, including:

Hard-coded secrets and credentials

Critical and high-severity dependency vulnerabilities

Insecure code patterns and unsafe APIs

These findings provided faster feedback loops and reduced late-stage surprises, allowing teams to address security issues earlier in the development lifecycle.

Phase 3: Expanded Quality and Risk Visibility

With continuous scanning in place, CodeAnt AI enabled broader visibility across:

Infrastructure-as-Code risks in Kubernetes, Docker, and YAML

Code quality issues such as dead code, duplication, and anti-patterns

Risk hot-spots across services through centralized dashboards

Senior engineers used this visibility to validate changes against architectural expectations and maintain consistent standards across a rapidly growing codebase.

Phase 4: Organization-Wide Coverage

CodeAnt AI became Akasa Air’s system of record for Code Health, delivering:

Continuous security, dependency, and quality coverage across 1M+ lines of code

Organization-wide dashboards for leadership visibility

GitHub-native workflows that kept developers productive

Human reviewers increasingly focused on architecture, design decisions, and system behavior, while CodeAnt AI handled continuous detection and enforcement.

Measured Outcomes

After adopting CodeAnt AI across its GitHub ecosystem, Akasa Air achieved:

900+ security issues automatically flagged

150+ Infrastructure-as-Code risks detected earlier

20+ critical and high-severity CVEs surfaced

20+ secrets instantly identified

100,000+ code quality issues detected

Centralized visibility into security and quality risk across services

Why It Worked

Akasa Air’s approach emphasized progressive trust-building rather than forced automation. By introducing CodeAnt AI as an always-on, GitHub-native layer, the team was able to:

Validate AI findings without workflow disruption

Reduce blind spots caused by fragmented tools

Gain consistent, organization-wide risk visibility

Improve review focus without slowing delivery

CodeAnt AI’s full-repository context, continuous scanning, and unified dashboards helped transform skepticism into confidence, supporting secure, scalable engineering practices for aviation-grade systems.


Conclusion: Build Trust with Guardrails, Evidence, and Feedback Loops

Trust in AI code review is an engineered outcome built through clear stages, measurable checkpoints, and continuous feedback.

The Framework

Verification (Weeks 1-2): Test AI suggestions manually. CodeAnt's inline reasoning shows why suggestions matter.

Validation (Weeks 3-4): Track if AI catches what humans miss. CodeAnt's full-repository context detects architectural issues diff-only tools ignore.

Confidence (Week 5+): Rely on AI for routine checks. Teams report 95%+ acceptance after 30 days.

Three Trust Accelerators

Context-awareness eliminates verification tax. CodeAnt's 80% reduction in false positives means less time validating noise.

Transparency turns skeptics into advocates. Explainable AI builds trust through visible reasoning.

Metrics prove trust is earned. Track PR velocity, acceptance rate, and incident reduction weekly.

Your Next Steps

Week 1: Deploy read-only

Enable CodeAnt on non-critical repos. Build familiarity without friction.

Week 2: Define risk-based rules

Auto-merge low-risk suggestions, require review for security/architecture.

Week 3-4: Instrument and iterate

Track time-to-merge, acceptance rate, incidents. Adjust CodeAnt's sensitivity based on patterns.

Ongoing: Use AI to verify AI

Let AI-generated tests act as a trust backstop. Reserve human judgment for high-stakes architectural decisions.

Build Trust at Scale

Teams that move fastest build trust systematically. CodeAnt accelerates this journey through context, transparency, and metrics that turn skepticism into confidence in weeks.

Start your 14-day free trial and experience full-repository context, explainable AI reasoning, and measurable ROI. Or book a 1:1 with our team to see how organizations achieve 3x faster PR merges while reducing incidents by 30%.

Trust isn't about believing the AI; it's about building a system where trust is the inevitable outcome of evidence, guardrails, and continuous validation.