
Jan 7, 2026

How Visual PR Artifacts Cut Code Review Time by 80%

Sonali Sood

Founding GTM, CodeAnt AI

Code reviews shouldn't feel like a game of telephone. Yet most teams spend more time asking clarifying questions and waiting for responses than actually reviewing code.

Visual PR artifacts (auto-generated summaries, diagrams, and annotated diffs) give reviewers the context they need upfront, cutting back-and-forth by surfacing what changed and why before the first comment. This guide covers the types of visual artifacts that accelerate reviews, how they solve common bottlenecks, and how to implement them in your workflow.

Why PR Review Back-and-Forth Slows Your Entire Team

Visual PR artifacts such as diagrams, AI-generated summaries, and annotated diffs reduce review back-and-forth by giving reviewers immediate context about what changed and why. Instead of asking clarifying questions and waiting hours for responses, reviewers see the full picture upfront. That single shift removes much of the ping-pong that drags out review cycles.

The real cost goes beyond the PR itself. Every time a reviewer asks "what does this change affect?" or "why did you approach it this way?", both parties context-switch. The author stops their current work to respond. The reviewer moves on to something else and loses momentum. When the response finally arrives, everyone has to reload the mental context they had before.

Here's what typically triggers delays:

Unclear change scope: Reviewers can't quickly see which files or modules are affected

Missing rationale: No explanation of why changes were made

Hidden dependencies: Impacts on other parts of the codebase aren't visible

Ambiguous diffs: Raw code diffs lack the context to understand intent

Elite engineering teams complete reviews in under six hours. Teams stuck in clarification loops often take days. The difference usually comes down to how much context the PR provides upfront.

What Are Visual PR Artifacts

Visual PR artifacts are automatically generated representations—summaries, diagrams, annotated diffs, and graphs—that accompany pull requests. They translate raw code changes into formats that communicate intent quickly.

Think of it like the difference between handing someone a stack of blueprints and walking them through a 3D model. Both contain the same information, but one requires far less effort to understand.

Artifacts typically include plain-language summaries of what changed, visual maps showing affected files and their relationships, and inline annotations highlighting potential issues. The key word here is automatic—developers don't create artifacts manually. The tooling generates them when a PR opens.

Types of Visual Artifacts That Speed Up Reviews

AI-Generated PR Summaries

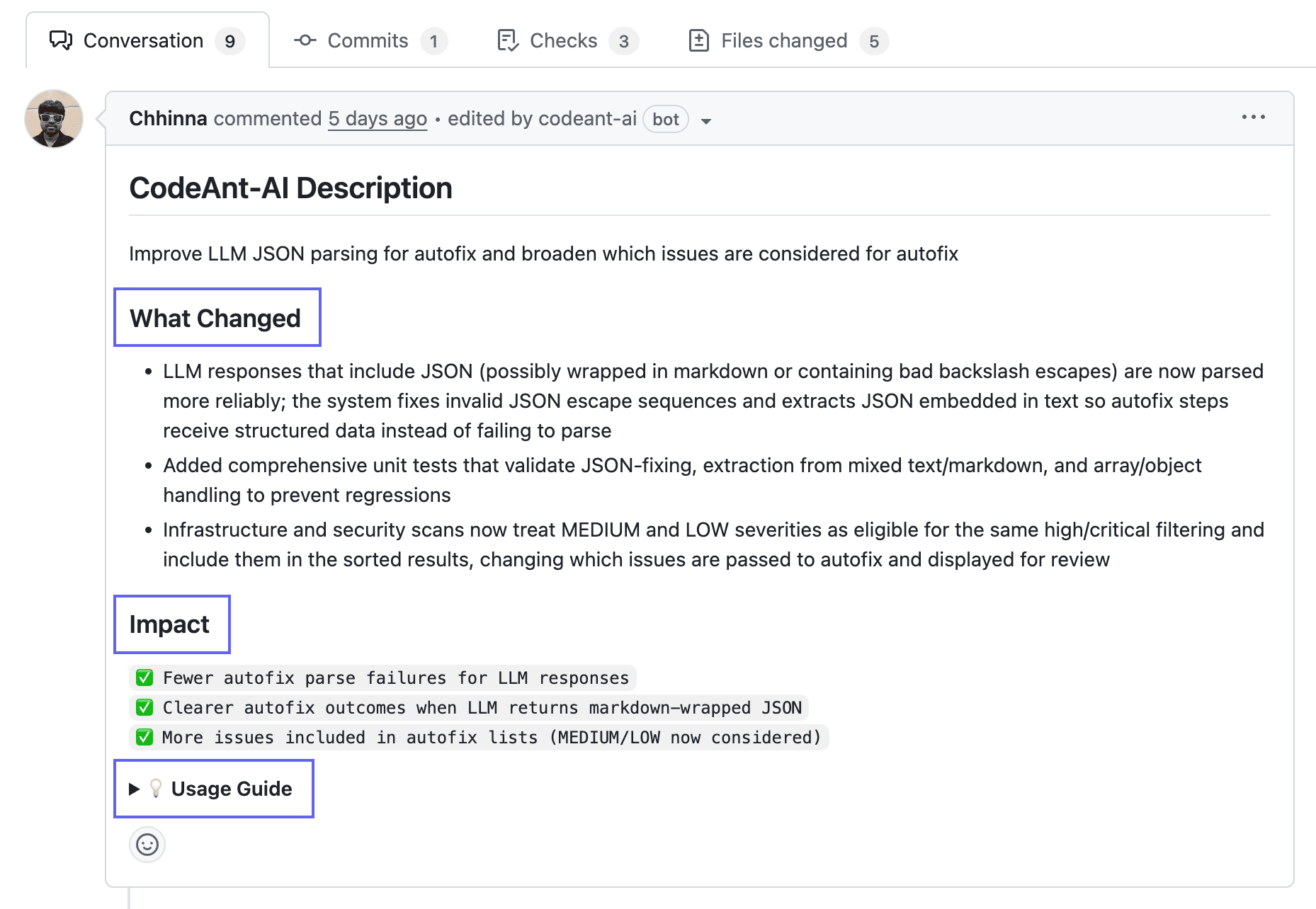

AI-generated summaries explain what changed and why in plain language. Instead of scanning through dozens of files, reviewers read a concise description of the PR's purpose, the approach taken, and the key areas to focus on.

CodeAnt AI produces summaries automatically on every PR. The summary captures the core intent: what problem the change solves, which components it touches, and what reviewers should pay attention to.

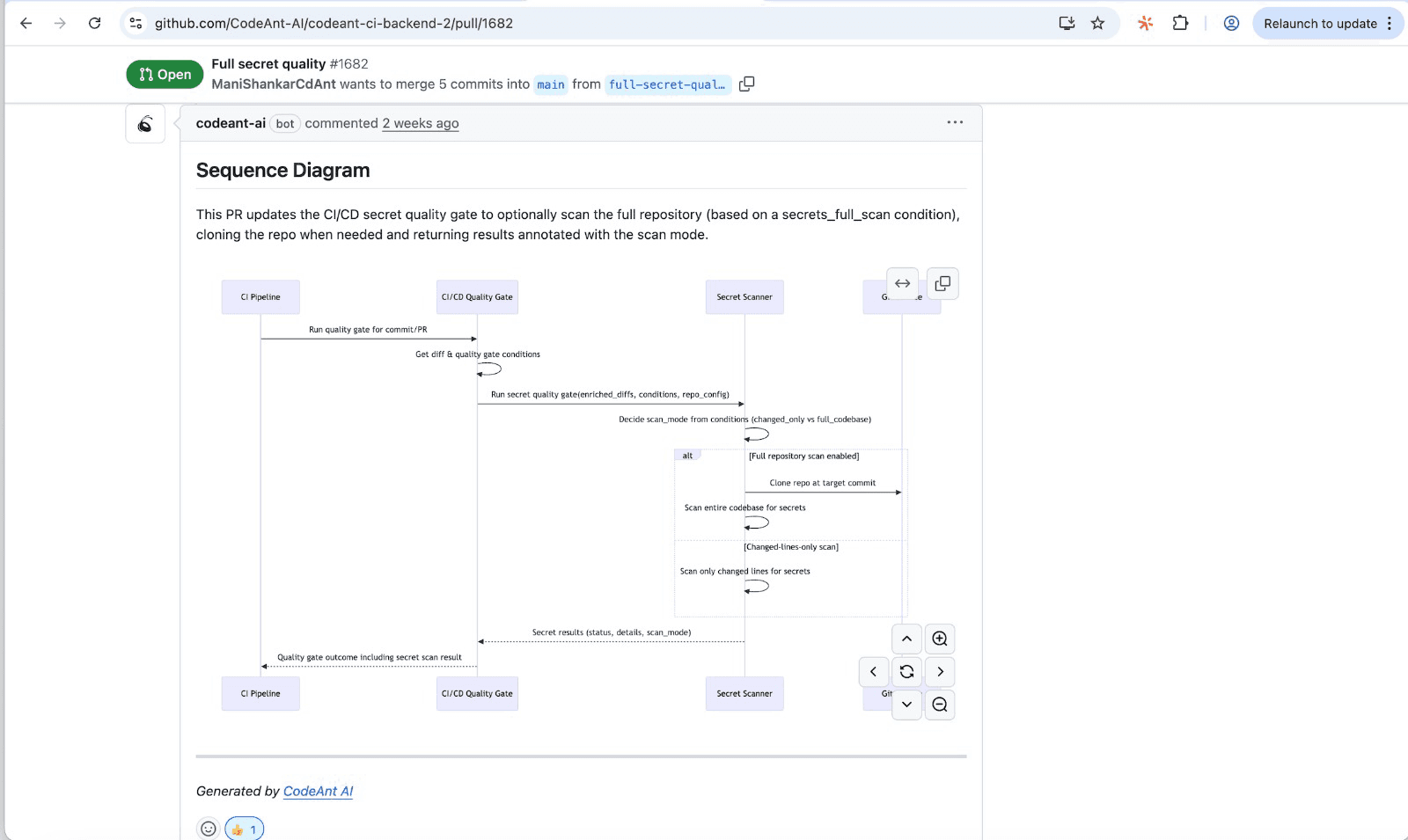
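To make that structure concrete, here is a minimal Python sketch that renders the four parts of a summary (intent, problem, components, reviewer focus) into a Markdown PR comment. The `PRSummary` dataclass and `render_summary` function are hypothetical illustrations of the format, not CodeAnt AI's actual output schema.

```python
from dataclasses import dataclass, field


@dataclass
class PRSummary:
    """Hypothetical structure for an AI-generated PR summary."""
    intent: str
    problem: str
    components: list[str] = field(default_factory=list)
    focus_areas: list[str] = field(default_factory=list)


def render_summary(s: PRSummary) -> str:
    """Render the summary as Markdown suitable for posting as a PR comment."""
    lines = [
        "## Summary",
        f"**Intent:** {s.intent}",
        f"**Problem solved:** {s.problem}",
        "",
        "**Components touched:**",
    ]
    # Fall back to a placeholder bullet when a list is empty.
    lines += [f"- `{c}`" for c in s.components] or ["- (none listed)"]
    lines += ["", "**Reviewer focus:**"]
    lines += [f"- {f}" for f in s.focus_areas] or ["- (none listed)"]
    return "\n".join(lines)
```

Keeping the fields separate, rather than generating one free-form paragraph, is what lets every PR present the same sections in the same order.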

Change Impact Diagrams

Change impact diagrams show which files, functions, or services a PR touches and how they connect. For every PR, a well-designed diagram captures the core runtime flow introduced or modified by the change.

Diagrams focus on the most relevant touchpoints:

Primary entry point: API handler, job, or event consumer

Downstream modules: Functions and services the change invokes

External dependencies: Database, cache, queue, or third-party APIs

Critical transitions: Validations, authorization checks, and error paths

Instead of drawing the entire system, the view compresses to the PR's "happy path" plus the highest-risk branches.

![Example of a change impact diagram showing module relationships]
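One common way to produce such a diagram is to emit Mermaid `sequenceDiagram` text, which GitHub and GitLab render inline in Markdown. The sketch below assumes the PR's runtime flow has already been extracted as an ordered list of caller/callee hops; the `Call` type, the `to_mermaid_sequence` helper, and the example participant names are hypothetical, and participant names are assumed to be simple identifiers without spaces.

```python
from typing import NamedTuple


class Call(NamedTuple):
    """One hop in the PR's runtime flow (caller -> callee)."""
    caller: str
    callee: str
    label: str


def to_mermaid_sequence(calls: list[Call]) -> str:
    """Emit Mermaid sequenceDiagram text for an ordered list of calls."""
    # Declare participants in first-seen order so the diagram reads left to right.
    participants: list[str] = []
    for c in calls:
        for name in (c.caller, c.callee):
            if name not in participants:
                participants.append(name)
    lines = ["sequenceDiagram"]
    lines += [f"    participant {p}" for p in participants]
    lines += [f"    {c.caller}->>{c.callee}: {c.label}" for c in calls]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical "happy path" for a login change.
    flow = [
        Call("LoginHandler", "RateLimiter", "check(ip)"),
        Call("RateLimiter", "Redis", "INCR attempts"),
        Call("LoginHandler", "UserService", "authenticate()"),
    ]
    print(to_mermaid_sequence(flow))
```

Compressing the flow to an ordered hop list is what keeps the diagram focused on the PR's touchpoints instead of the whole system.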

Annotated Code Diffs with Inline Context

Standard diffs show red and green lines. Annotated diffs add AI-powered inline comments, security flags, and quality annotations directly in the PR view.

Reviewers see potential issues—security vulnerabilities, complexity warnings, standards violations—before they even start reviewing. The diff becomes a conversation starter rather than a puzzle to decode.
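Under the hood, an annotated diff needs to know which lines in the new version of a file were actually added, so a finding can be pinned to a line the reviewer sees. A minimal sketch, assuming a single-file unified diff and findings keyed by new-file line number; the `findings` format and both function names are hypothetical.

```python
import re

# Matches hunk headers such as "@@ -1,3 +1,4 @@" and captures the new-file start line.
HUNK_RE = re.compile(r"^@@ -\d+(?:,\d+)? \+(\d+)(?:,\d+)? @@")


def added_lines(diff: str) -> dict[int, str]:
    """Map new-file line numbers to the text of lines added in a single-file unified diff."""
    result: dict[int, str] = {}
    new_line = 0
    in_hunk = False
    for raw in diff.splitlines():
        m = HUNK_RE.match(raw)
        if m:
            new_line = int(m.group(1))
            in_hunk = True
            continue
        if not in_hunk:
            continue  # skip the "---" / "+++" file headers
        if raw.startswith("+"):
            result[new_line] = raw[1:]
            new_line += 1
        elif raw.startswith("-") or raw.startswith("\\"):
            continue  # removed line or "\ No newline at end of file": no new-file position
        else:
            new_line += 1  # context line exists in both versions
    return result


def annotate(diff: str, findings: dict[int, str]) -> list[str]:
    """Keep only findings that land on added lines, formatted as inline comments."""
    added = added_lines(diff)
    return [
        f"L{n}: {msg} -> {added[n].strip()}"
        for n, msg in sorted(findings.items())
        if n in added
    ]
```

Dropping findings on untouched lines keeps the annotations scoped to what the PR actually changed.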

Dependency and Architecture Graphs

Dependency graphs visualize how changes affect imports, packages, or microservice connections. When a PR modifies a shared utility, the graph shows every component that depends on it.

This prevents the classic "I didn't realize that would break X" scenario. Reviewers can assess blast radius at a glance.
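Assessing blast radius amounts to a reverse-dependency walk: invert the import graph, then run a breadth-first search from the changed modules. A minimal sketch, assuming the import graph has already been extracted into a dict keyed by module name (how that graph is built is language-specific and not shown; `blast_radius` is a hypothetical helper).

```python
from collections import deque


def blast_radius(imports: dict[str, set[str]], changed: set[str]) -> set[str]:
    """Return every module that transitively imports any changed module.

    `imports` maps each module to the modules it imports directly.
    """
    # Invert the graph: module -> modules that import it.
    dependents: dict[str, set[str]] = {}
    for mod, deps in imports.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(mod)

    # Breadth-first search upward from the changed modules; `seen` also guards against cycles.
    seen: set[str] = set()
    queue = deque(changed)
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, ()):
            if parent not in seen and parent not in changed:
                seen.add(parent)
                queue.append(parent)
    return seen
```

Returning the transitive set, not just direct importers, is what surfaces the "I didn't realize that would break X" cases two or three hops away.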

Security and Quality Annotations

Security annotations highlight vulnerabilities, code smells, complexity hotspots, and standards violations directly in the PR view. Visual markers draw attention to what matters most.

| Artifact Type | What It Shows | Review Benefit |
|---|---|---|
| AI-generated summary | Plain-language description of changes | Quick understanding before reading the code |
| Change impact diagram | Files and modules affected | Quick scope assessment |
| Annotated diff | Inline comments and flags | Issues surfaced before review starts |
| Dependency graph | Upstream/downstream impacts | Prevents missed side effects |
| Security annotations | Vulnerabilities and risks | Flags risky changes; can block merges when enforced by a quality gate |

How Visual Artifacts Fix Common Review Bottlenecks

Large PRs That Overwhelm Reviewers

Large PRs create cognitive overload. Reviewers don't know where to start, so they either skim superficially or delay the review entirely.

Visual summaries and impact diagrams break down complexity. Reviewers see the PR's structure at a glance—which components changed, how they relate, and where to focus attention. A 50-file PR transforms from overwhelming to navigable.
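A simple version of this "where to start" view can be computed from the list of changed files alone. The sketch below groups files by top-level directory and orders areas by lines changed; treating the top-level directory as a component is an assumption that fits many, but not all, repository layouts, and `review_map` is a hypothetical helper.

```python
from collections import defaultdict
from pathlib import PurePosixPath


def review_map(changes: list[tuple[str, int]]) -> list[tuple[str, int, int]]:
    """Group (path, lines_changed) pairs by top-level directory.

    Returns (area, file_count, total_lines) sorted by total_lines descending,
    so reviewers can start with the largest area.
    """
    file_counts: dict[str, int] = defaultdict(int)
    line_totals: dict[str, int] = defaultdict(int)
    for path, changed in changes:
        parts = PurePosixPath(path).parts
        # Files at the repository root get their own bucket.
        area = parts[0] if len(parts) > 1 else "(root)"
        file_counts[area] += 1
        line_totals[area] += changed
    return sorted(
        ((a, file_counts[a], line_totals[a]) for a in file_counts),
        key=lambda t: (-t[2], t[0]),
    )
```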

Missing Context That Triggers Clarification Loops

Most back-and-forth stems from missing context. "What was the reason for this approach?" "Does this affect the payment flow?" "Why didn't you use the existing utility?"

AI-generated summaries and annotated diffs answer many of these questions upfront. The "what" and "why" appear alongside the code, reducing the need for reviewers to ask and for authors to stop and explain.

Scope Creep and Nitpicking

Without clear boundaries, reviewers often drift into unrelated suggestions. "While you're in here, could you also refactor this?" "This naming convention doesn't match what we use elsewhere."

Visual artifacts define the PR's scope explicitly. Reviewers see exactly what the PR intends to accomplish, making it easier to stay focused on the actual changes rather than adjacent concerns.

Context Switching Between Reviews and Coding

Every review interrupts flow. The faster reviewers can grasp changes, the sooner they return to their own work.

Visual artifacts reduce the cognitive load of switching contexts. Reviewers can grasp a change in a few minutes instead of spending half an hour reconstructing what happened.

What Review Cycle Time Looks Like After Visual Artifacts

Before visual artifacts, a typical review cycle looks like this: reviewer opens PR, scans the diff, asks three clarifying questions, waits for responses, re-reviews, requests changes, waits again, approves. Multiple rounds, multiple days.

After visual artifacts, the cycle compresses: reviewer opens PR, reads the summary, scans the impact diagram, reviews the annotated diff, approves or requests specific changes. Often a single pass.

Before: Multiple rounds of clarifying questions, delayed approvals, blocked merges

After: Single-pass reviews, immediate context, faster time-to-merge

The difference shows up in metrics. Teams that adopt visual artifacts often report review times dropping from days to hours. CodeAnt AI surfaces cycle time metrics alongside PR artifacts, so you can track the improvement directly.

Tip: Track your PR review time before and after implementing visual artifacts. Many teams see a significant reduction in cycle time within the first month.

How to Implement Visual PR Artifacts in Your Workflow

1. Automate Artifact Generation in Your CI/CD Pipeline

The key to adoption is automation. If developers have to manually create artifacts, they won't.

Configure your tooling to trigger artifact generation on every PR. CodeAnt AI integrates with GitHub, GitLab, Azure DevOps, and Bitbucket; artifacts appear automatically when a PR opens.
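If you wire generation up yourself, the trigger logic is small. A minimal sketch for GitHub webhooks, assuming the handler receives the `X-GitHub-Event` header value and the parsed JSON payload; `should_generate_artifacts` is a hypothetical name, skipping draft PRs is a policy choice made here for illustration, and GitLab, Azure DevOps, and Bitbucket use different event names and payloads.

```python
# Actions on GitHub's `pull_request` webhook event that change what reviewers will see.
TRIGGER_ACTIONS = {"opened", "reopened", "synchronize", "ready_for_review"}


def should_generate_artifacts(event_name: str, payload: dict) -> bool:
    """Decide whether a webhook delivery should trigger artifact generation.

    `event_name` is the value of the X-GitHub-Event header.
    Draft PRs are skipped until they are marked ready for review.
    """
    if event_name != "pull_request":
        return False
    if payload.get("action") not in TRIGGER_ACTIONS:
        return False
    return not payload.get("pull_request", {}).get("draft", False)
```

Including `synchronize` (new commits pushed) keeps the artifacts in step with the latest revision instead of describing only the first push.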

2. Standardize Visual Summaries for Every Pull Request

Set organization-wide standards so every PR includes consistent visual context. This removes ambiguity about what reviewers can expect.

When every PR follows the same format (summary, impact diagram, annotated diff), reviewers develop a rhythm. They know exactly where to look and what to check.
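A standard format is easy to check mechanically. This sketch reports which required sections are missing from a PR description, treating Markdown headings as section titles; `missing_sections` and the example titles (`Summary`, `Impact diagram`) are hypothetical and should match whatever your team's template uses.

```python
import re

# A Markdown ATX heading: 1-6 '#' characters, whitespace, then the title text.
HEADING_RE = re.compile(r"^#{1,6}\s+(.+?)\s*$", re.MULTILINE)


def missing_sections(body: str, required: list[str]) -> list[str]:
    """Return required section titles not present as headings in `body` (case-insensitive)."""
    present = {m.group(1).strip().lower() for m in HEADING_RE.finditer(body)}
    return [title for title in required if title.lower() not in present]
```

A check like this could run in CI and post the missing titles as a comment, so authors fix the format before a reviewer ever opens the PR.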

3. Integrate Artifacts into Review Checklists

Embed artifact review into your existing process. Add a checklist item: "Review AI-generated summary for accuracy." This ensures reviewers actually use the artifacts rather than skipping straight to the diff.

Quality gates can enforce this automatically. Block merges until the summary has been acknowledged or until security annotations have been addressed.
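The gate itself reduces to a pure function over the PR's state. A minimal sketch, where the `Annotation` type, the severity scale, the acknowledgement flag, and `merge_allowed` are all assumptions; in practice the result would typically be reported as a status check that branch protection requires before merging.

```python
from dataclasses import dataclass


@dataclass
class Annotation:
    """Hypothetical security/quality finding attached to a PR."""
    severity: str  # assumed scale: "low" | "medium" | "high" | "critical"
    resolved: bool


# Severities that block a merge while unresolved (an assumed policy).
BLOCKING = {"high", "critical"}


def merge_allowed(summary_acknowledged: bool, annotations: list[Annotation]) -> tuple[bool, list[str]]:
    """Return (allowed, reasons) for a simple quality gate."""
    reasons: list[str] = []
    if not summary_acknowledged:
        reasons.append("AI-generated summary not yet acknowledged by a reviewer")
    open_blocking = [a for a in annotations if a.severity in BLOCKING and not a.resolved]
    if open_blocking:
        reasons.append(f"{len(open_blocking)} unresolved high/critical finding(s)")
    return (not reasons, reasons)
```

Returning the reasons alongside the verdict lets the status check explain exactly what is blocking the merge.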

4. Track Cycle Time Metrics Before and After

Measure PR review time and back-and-forth frequency to validate improvements. Without data, you're guessing.

CodeAnt AI provides built-in metrics dashboards that show review cycle time, comment frequency, and time-to-merge. Compare your baseline to post-implementation numbers to quantify the impact.
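If you want to compute a baseline yourself, median open-to-merge time is a reasonable starting metric because a few stalled PRs do not skew it the way they skew a mean. A minimal sketch, assuming you have exported (opened, merged) ISO-8601 timestamp pairs for each period; `review_hours` and `compare` are hypothetical helpers. Note that `datetime.fromisoformat` only accepts a trailing `Z` from Python 3.11 onward, so use `+00:00` offsets on older versions.

```python
from datetime import datetime
from statistics import median


def review_hours(opened: str, merged: str) -> float:
    """Hours between PR open and merge, from ISO-8601 timestamps."""
    delta = datetime.fromisoformat(merged) - datetime.fromisoformat(opened)
    return delta.total_seconds() / 3600


def compare(before: list[tuple[str, str]], after: list[tuple[str, str]]) -> dict[str, float]:
    """Median open-to-merge hours for two periods, plus the percent change."""
    b = median(review_hours(o, m) for o, m in before)
    a = median(review_hours(o, m) for o, m in after)
    return {"before_h": b, "after_h": a, "change_pct": (a - b) / b * 100}
```

A negative `change_pct` means reviews got faster; pairing it with a count of comment rounds per PR would also capture the back-and-forth frequency mentioned above.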


Tools That Generate Visual PR Artifacts Automatically

AI Code Review Platforms

Platforms like CodeAnt AI combine AI-driven summaries, inline suggestions, and visual context in a single solution. Every PR gets an automatic summary, security annotations, and quality insights—no developer effort required.

CodeAnt AI generates sequence diagrams that capture the core runtime flow for each PR.

Reviewers see which modules interact, in what order, and where the key decision points happen. Over time, diagrams become a living "change log" of how core flows evolve.

Static Analysis with Visual Reporting

Static analysis tools provide visual dashboards for code quality, complexity, and security findings. Dashboards complement PR-level artifacts by showing trends across the codebase.

Look for tools that surface findings directly in the PR view rather than requiring a separate dashboard visit.

Diagramming and Documentation Generators

Some tools auto-generate architecture diagrams and dependency graphs from code changes. Generators work well for teams that want visual context without adopting a full code review platform.

The tradeoff is integration depth. Standalone diagramming tools often require manual configuration and don't provide the AI-powered summaries and annotations that accelerate reviews most.

Accelerate Code Reviews with Automated Visual Context

Visual PR artifacts remove the guesswork that causes review delays. When reviewers understand changes quickly, back-and-forth shrinks. When security issues surface automatically, risky code is much less likely to merge. When cycle time metrics are visible, teams can continuously improve.

CodeAnt AI brings AI-generated summaries, sequence diagrams, security annotations, quality insights, and cycle time metrics into one unified platform. Every PR gets the context it deserves—automatically.

Ready to cut your review time? Book your 1:1 with our experts today!