Code reviews are supposed to catch bugs and spread knowledge. Instead, they often become the slowest part of your pipeline: PRs stack up, reviewers context-switch constantly, and feedback quality varies wildly depending on who's available.

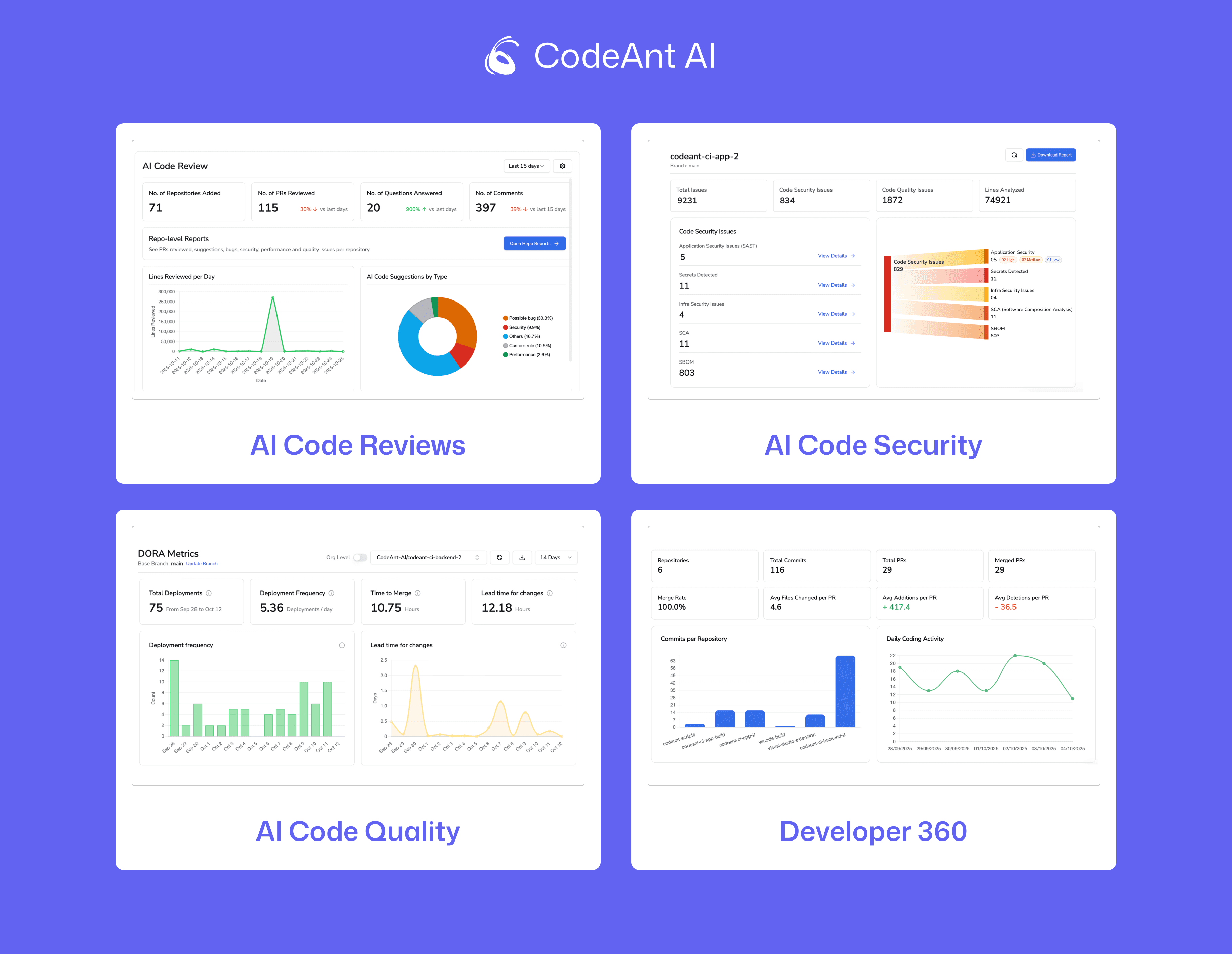

Visual PR artifacts change that equation. AI-generated summaries, sequence diagrams, and inline annotations give reviewers instant context without deep code diving. This article covers how these artifacts eliminate bottlenecks, improve defect detection, and deliver measurable ROI for engineering organizations.

Why Traditional Code Reviews Create Engineering Bottlenecks

Visual PR artifacts (screenshots, AI-generated summaries, and sequence diagrams embedded directly in pull requests) cut review time and help catch bugs that text-only diffs miss. Reviewers see what changed without checking out code locally. Clear visuals remove ambiguity, and teams spot UI regressions or behavioral issues before they reach production.

But why do teams look for solutions like this in the first place? The answer comes down to how traditional code reviews break at scale.

Context Switching and Cognitive Overload

Every time a reviewer jumps between PRs, they lose focus. Research suggests regaining deep concentration takes 15–25 minutes after an interruption. Multiply that across five or six PRs waiting in queue, and you've lost hours.

Reviewers often juggle multiple codebases, languages, and architectural patterns in a single day. Without visual context, they mentally trace code execution across files—a slow process that drains cognitive energy and invites mistakes.

Manual Review Delays and PR Queue Buildup

Async review cycles compound quickly. A PR submitted Monday morning might not get attention until Tuesday afternoon. By then, the author has moved on to new work, and merging the original change requires rebasing and re-testing.

Large teams often see PR queues grow faster than reviewers can clear them. Deployments stall, developers get frustrated, and merge conflicts multiply.

Inconsistent Feedback Quality Across Reviewers

Different reviewers catch different things. One engineer focuses on performance; another flags naming conventions. A third might approve without deep inspection because they're overloaded.

Bugs slip through unpredictably when review quality varies by who happens to be available.

Limited Visibility into Code Review Metrics

Most teams don't track review cycle time, defect escape rates, or reviewer workload. Without data, you can't identify bottlenecks or measure improvement. You might feel like reviews are slow, but you can't prove it or pinpoint where the slowdown happens.

What Are Visual PR Artifacts?

Visual PR artifacts are non-code elements attached to a pull request that help reviewers understand changes faster. They range from simple screenshots to AI-generated sequence diagrams mapping runtime behavior.

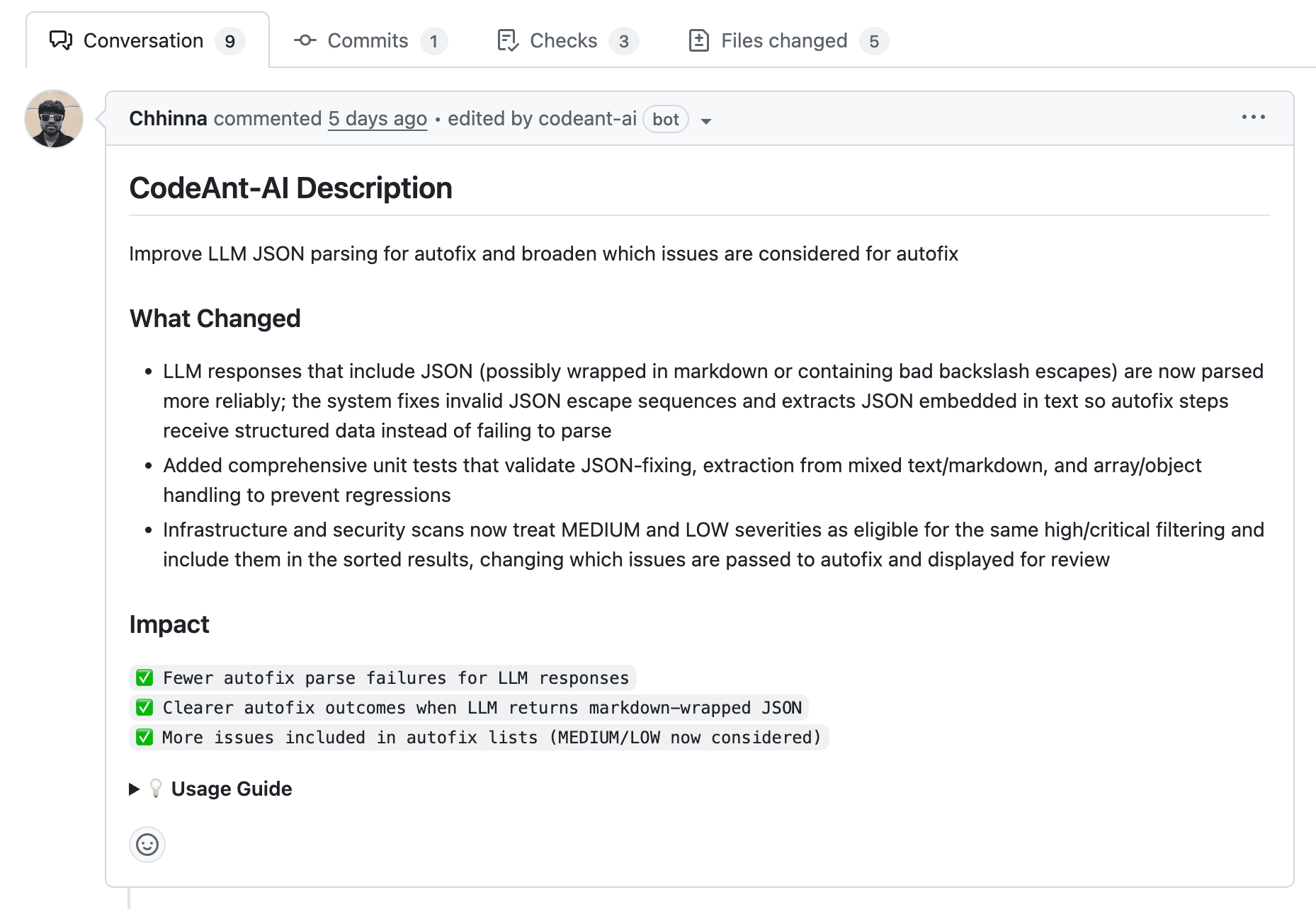

AI-Generated PR Summaries and Changelogs

AI tools scan the diff and produce plain-language summaries explaining what changed and why. Instead of reading 500 lines of code, a reviewer reads a paragraph capturing the intent.

Common summary elements:

New functionality: Features or endpoints added

Refactors: Structural changes without behavior modification

Bug fixes: Specific issues addressed

Dependencies: New libraries or version updates

Visual Diff Highlighting and Code Impact Maps

Standard diffs show line-by-line changes. Visual impact maps go further—they show which modules, services, or functions a PR affects and how changes propagate through the system.
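One way to picture how an impact map might be derived is a walk over a reverse dependency graph. The sketch below is illustrative only: the module names and the `DEPENDS_ON` graph are hypothetical, and a real tool would build the graph from imports, build metadata, or service manifests rather than a hand-written dictionary.

```python
from collections import deque

# Hypothetical dependency graph: each module maps to the modules it imports.
DEPENDS_ON = {
    "api.orders": ["services.billing", "services.inventory"],
    "services.billing": ["lib.tax"],
    "services.inventory": ["lib.db"],
    "lib.tax": [],
    "lib.db": [],
}


def impacted_modules(changed, depends_on):
    """Return modules that directly or transitively depend on a changed module."""
    # Invert the graph so we can walk from a module to the modules that use it.
    dependents = {}
    for module, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(module)

    impacted = set()
    queue = deque(changed)
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, ()):
            if parent not in impacted:
                impacted.add(parent)
                queue.append(parent)
    # Report only the downstream modules, not the changed ones themselves.
    return impacted - set(changed)


print(sorted(impacted_modules(["lib.tax"], DEPENDS_ON)))
# prints ['api.orders', 'services.billing']
```

A breadth-first walk keeps the result ordered by distance from the change, which is convenient if the map should highlight direct dependents first.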

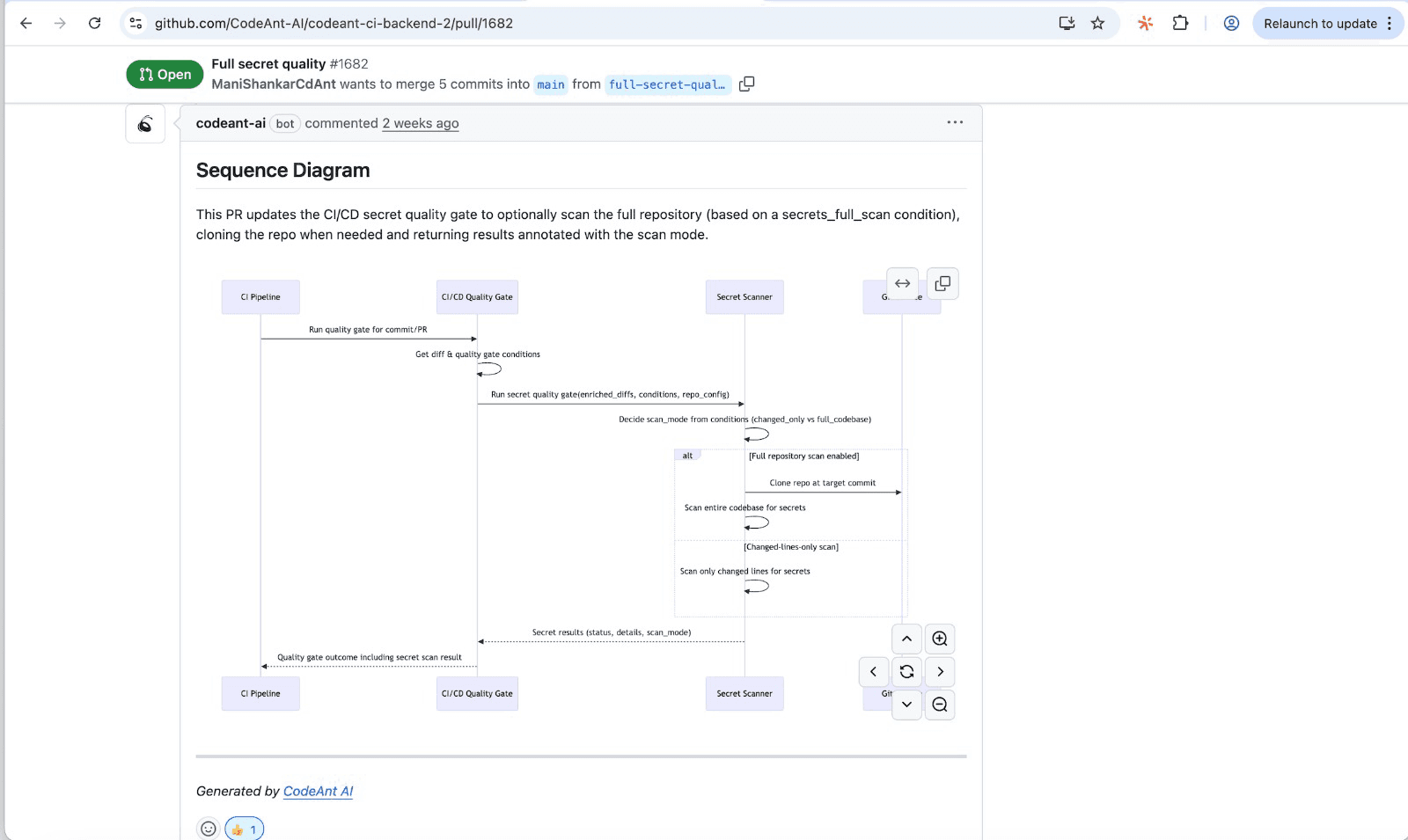

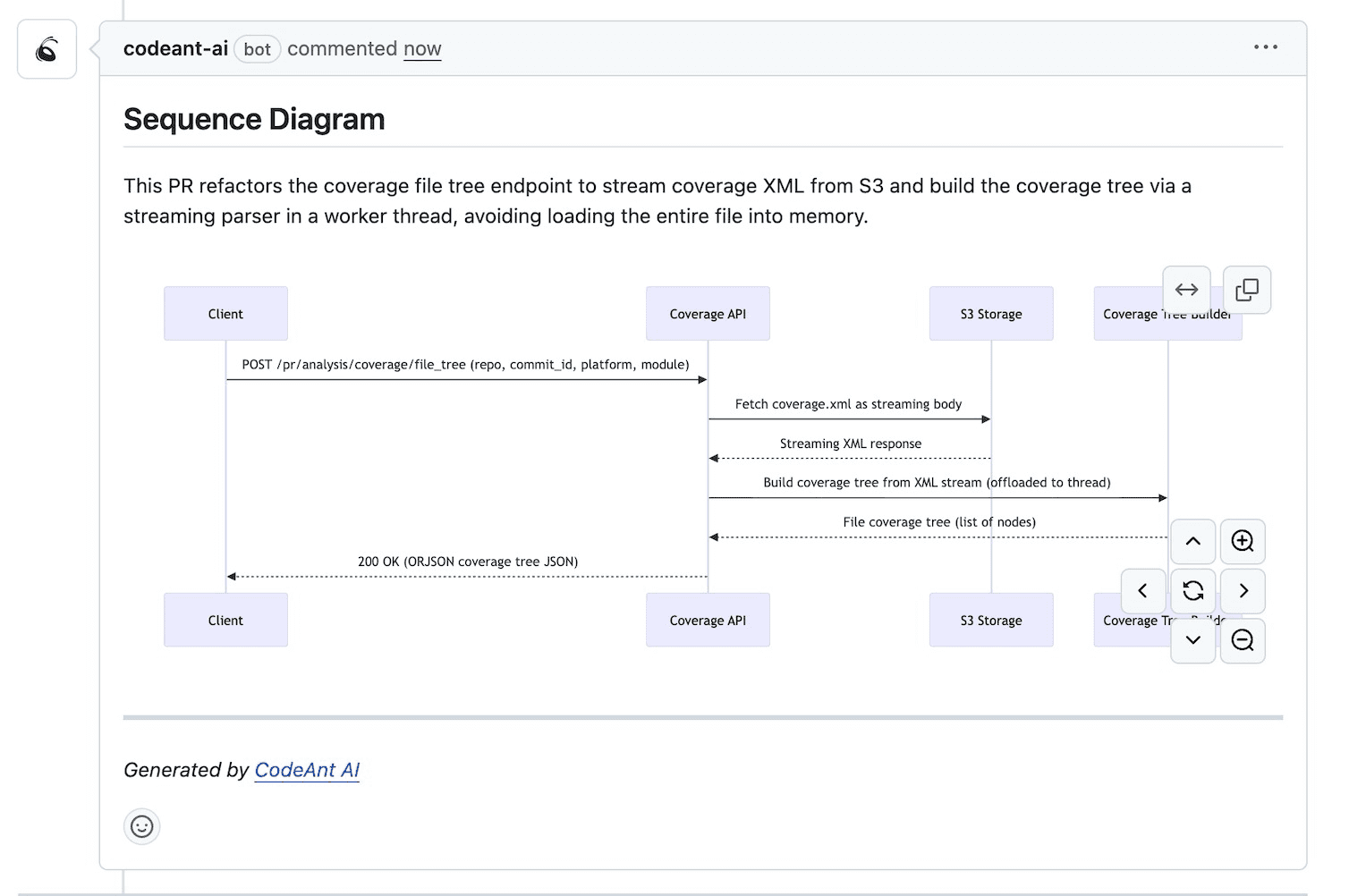

For every PR, CodeAnt AI generates a sequence diagram capturing the core runtime flow introduced or modified.

The diagram shows which modules interact, in what order, and where key decision points occur. Instead of drawing the entire system, the view compresses to the PR's "happy path" plus high-risk branches.

Inline Code Quality and Security Annotations

Automated tools overlay quality scores, vulnerability flags, and style violations directly in the PR view. Reviewers see issues highlighted in context rather than hunting through separate reports.

| Artifact Type | What It Shows | Primary Benefit |
| --- | --- | --- |
| AI-generated summary | Plain-language changeset overview | Faster reviewer onboarding |
| Sequence diagram | Runtime flow and module interactions | Quick architectural assessment |
| Visual diff map | Impacted files and dependencies | Scope assessment at a glance |
| Inline annotations | Security, quality, and style flags | Immediate issue visibility |

How Visual PR Artifacts Speed Up Code Reviews

The "faster reviews" promise translates to measurable time savings per PR and per developer.

Instant Context Without Deep Code Diving

A well-generated summary lets reviewers understand a PR's purpose in seconds. They can decide immediately whether they're the right reviewer or if the change aligns with sprint goals.

Sequence diagrams compress what might take 20 minutes of mental simulation into a single visual.

Reviewers see the happy path plus high-risk branches without tracing through multiple files.

Faster Reviewer Onboarding for Complex Changesets

When a reviewer isn't familiar with a particular service or module, visual artifacts bridge the knowledge gap. They don't need to understand the entire codebase—just the flow the PR modifies.

Junior engineers can provide meaningful feedback on senior engineers' code because visual context levels the playing field.

Reduced Async Communication and Back-and-Forth

Ambiguous PRs generate clarifying questions. "What does this function do?" "Why did you change this file?" Each question adds a round-trip delay.

Clear visual artifacts answer questions preemptively. Fewer comments, faster approvals, shorter cycle times.

How Visual Artifacts Help Catch More Bugs Before Production

Speed without quality is just faster failure. Visual artifacts improve defect detection alongside review velocity.

Automated Detection of Logic Errors and Edge Cases

AI-powered analysis flags potential bugs inline: null pointer risks, unhandled exceptions, incorrect conditionals. Issues appear as annotations directly in the PR, not buried in a separate tool.

Common bug types caught:

Logic errors: Missing null checks, off-by-one errors

Security flaws: Injection risks, hardcoded credentials

Performance issues: N+1 queries, unbounded loops

Style violations: Inconsistent naming, code duplication

Real-Time Security Vulnerability Flagging

Visual annotations surface OWASP Top 10 vulnerabilities, secrets exposure, and dependency risks during review—not after deployment. Static Application Security Testing (SAST) runs automatically on every changeset.

Issues that would cost far more to fix in production get caught when they're cheap to address.

Pattern Recognition from Historical Bug Data

AI learns from past defects. If your codebase has a history of null pointer exceptions in a particular module, the system flags similar patterns in new code.

This institutional memory persists even as team members rotate. The AI never forgets a bug pattern it's seen before.

Calculating the Organizational ROI of Visual PR Tools

Engineering leaders often ask: "How do I justify this investment?" The math is straightforward once you identify the right inputs.

Estimating Time Saved Per Code Review

Measure your current average review cycle time (PR open to merge), then measure it again after implementing visual artifacts. Multiply the time saved per PR by your weekly PR volume to estimate the hours recovered across the team.

For example, if you save 30 minutes per PR across 100 PRs per week, that's 50 hours of engineering time recovered weekly.
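The arithmetic behind that example can be written as a short calculation. This is a minimal sketch: the function names are made up for illustration, and the hourly cost of 90 is a placeholder assumption, not a figure from this article.

```python
def weekly_hours_saved(minutes_saved_per_pr, prs_per_week):
    """Convert per-PR minutes saved into engineering hours recovered per week."""
    return minutes_saved_per_pr * prs_per_week / 60


def weekly_value(hours_saved, loaded_hourly_cost):
    """Translate recovered hours into a cost figure (hourly cost is an assumption)."""
    return hours_saved * loaded_hourly_cost


hours = weekly_hours_saved(minutes_saved_per_pr=30, prs_per_week=100)
print(hours)  # prints 50.0

# Placeholder hourly cost of 90; substitute your own loaded engineering rate.
print(weekly_value(hours, loaded_hourly_cost=90))  # prints 4500.0
```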

Quantifying Bug Prevention and Rework Savings

Fixing a bug in production costs significantly more than fixing it during development. Track your defect escape rate before and after adoption.

Even a modest improvement—catching 10 additional bugs per month pre-merge—translates to meaningful cost avoidance.

Measuring Developer Productivity Gains

Less time reviewing means more time building. There's also a qualitative dimension: developers report higher satisfaction when reviews feel efficient rather than frustrating.

Key ROI factors to track:

Review time reduction: Hours saved per developer per week

Defect escape prevention: Cost of bugs caught early vs. late

Developer satisfaction: Retention and morale improvements

Key Metrics to Track for PR Review Efficiency

You can't improve what you don't measure. The following metrics prove ROI and guide continuous improvement.

Review Cycle Time and PR Throughput

Review cycle time measures the duration from PR creation to merge. Throughput counts PRs merged per sprint or week. Both matter: fast reviews on only a few PRs still leave a bottleneck.

Aim for cycle times under 24 hours for most PRs. Longer times signal process or capacity issues.
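As a rough illustration of how these two metrics could be computed, the sketch below works from a list of PR timestamps. The `PRS` records are invented sample data; in practice they would come from your Git host's API or an export, which is not shown here.

```python
from datetime import datetime, timedelta
from statistics import median

# Hypothetical PR records: (created_at, merged_at).
PRS = [
    (datetime(2024, 3, 4, 9, 0), datetime(2024, 3, 4, 15, 0)),   # 6 hours
    (datetime(2024, 3, 4, 10, 0), datetime(2024, 3, 5, 16, 0)),  # 30 hours
    (datetime(2024, 3, 5, 11, 0), datetime(2024, 3, 6, 9, 0)),   # 22 hours
]


def cycle_times_hours(prs):
    """Cycle time per PR, in hours, from creation to merge."""
    return [(merged - created).total_seconds() / 3600 for created, merged in prs]


def share_over_target(prs, target_hours=24):
    """Fraction of PRs whose cycle time exceeds the target."""
    times = cycle_times_hours(prs)
    return sum(t > target_hours for t in times) / len(times)


def throughput(prs, window_start, window_end):
    """Number of PRs merged inside the half-open window [start, end)."""
    return sum(window_start <= merged < window_end for _, merged in prs)


week_start = datetime(2024, 3, 4)
print(median(cycle_times_hours(PRS)))                            # prints 22.0
print(throughput(PRS, week_start, week_start + timedelta(days=7)))  # prints 3
```

The median is used here instead of the mean because a single long-lived PR can otherwise dominate the average.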

Defect Escape Rate to Production

Defect escape rate tracks bugs found in production that reviews could have caught. Lower is better. This metric directly measures review effectiveness.

If your escape rate isn't improving, your reviews aren't working—regardless of how fast they are.
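One common way to express this metric, assumed here rather than prescribed by any particular tool, is the share of all known defects that were found in production instead of during review. The counts in the example are invented.

```python
def defect_escape_rate(caught_in_review, escaped_to_production):
    """Share of known defects that reached production (assumed definition)."""
    total = caught_in_review + escaped_to_production
    if total == 0:
        return 0.0  # No defects recorded, so nothing escaped.
    return escaped_to_production / total


# Hypothetical month: 45 defects caught in review, 5 found in production.
print(defect_escape_rate(caught_in_review=45, escaped_to_production=5))  # prints 0.1
```

Tracking the same ratio before and after adoption gives a like-for-like comparison, provided the way defects are counted does not change in between.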

Developer Satisfaction and Review Quality Scores

Quantitative metrics miss the human element. Survey your team periodically: Do reviews feel helpful? Is feedback actionable? Do reviewers feel overwhelmed?

CodeAnt AI provides built-in dashboards tracking review metrics automatically, so you don't need custom reporting.

Best Practices for Implementing Visual PR Artifacts

Adopting new tools requires more than installation. The following practices maximize value.

1. Keep Pull Requests Small and Focused

Visual summaries work best on focused changes. A 2,000-line PR overwhelms any artifact. Aim for PRs that address a single concern—one feature, one bug fix, one refactor.
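A size guideline like this can be checked mechanically. The sketch below counts added and removed lines in a unified diff and compares the total with a limit; the 400-line limit is an arbitrary placeholder, and the header check is a simplification that would miscount content lines that themselves begin with `--` or `++`.

```python
MAX_CHANGED_LINES = 400  # Arbitrary placeholder; tune to your team's norms.

SAMPLE_DIFF = """\
--- a/app.py
+++ b/app.py
@@ -1,3 +1,4 @@
 import os
-print("hi")
+print("hello")
+print("world")
"""


def changed_line_count(unified_diff):
    """Count added and removed lines, skipping the ---/+++ file headers."""
    count = 0
    for line in unified_diff.splitlines():
        if line.startswith(("+++", "---")):
            continue  # File header, not a content change.
        if line.startswith(("+", "-")):
            count += 1
    return count


def is_too_large(unified_diff, limit=MAX_CHANGED_LINES):
    """True when the diff exceeds the configured size limit."""
    return changed_line_count(unified_diff) > limit


print(changed_line_count(SAMPLE_DIFF))  # prints 3
print(is_too_large(SAMPLE_DIFF))        # prints False
```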

2. Integrate Visual Tools into Your CI/CD Pipeline

Artifact generation should run automatically on every PR, with no manual steps and no forgotten summaries. Configure the tool to trigger on PR creation and update as commits are added.

3. Customize Analysis Rules for Your Codebase

Default rules generate noise. Configure organization-specific standards, ignore patterns for generated code, and adjust severity levels to match your risk tolerance.
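To make the three kinds of customization concrete, here is a small sketch of how a rule configuration might filter findings. The `CONFIG` keys, rule names, and finding format are all hypothetical and do not correspond to any specific tool's schema.

```python
from fnmatch import fnmatch

SEVERITY_ORDER = {"info": 0, "low": 1, "medium": 2, "high": 3}

# Hypothetical configuration: ignore globs, a reporting threshold, and overrides.
CONFIG = {
    "ignore_paths": ["*_pb2.py", "migrations/*", "vendor/*"],
    "min_severity": "medium",
    "severity_overrides": {"hardcoded-credential": "high"},
}

FINDINGS = [
    {"path": "api/user_pb2.py", "rule": "naming", "severity": "high"},
    {"path": "app/auth.py", "rule": "hardcoded-credential", "severity": "low"},
    {"path": "app/views.py", "rule": "naming", "severity": "low"},
]


def filter_findings(findings, config):
    """Drop findings in ignored paths or below the severity threshold."""
    threshold = SEVERITY_ORDER[config["min_severity"]]
    kept = []
    for finding in findings:
        # Skip generated or vendored code that matches an ignore glob.
        if any(fnmatch(finding["path"], pattern) for pattern in config["ignore_paths"]):
            continue
        # Apply organization-specific severity overrides before thresholding.
        severity = config["severity_overrides"].get(finding["rule"], finding["severity"])
        if SEVERITY_ORDER[severity] >= threshold:
            kept.append({**finding, "severity": severity})
    return kept


for finding in filter_findings(FINDINGS, CONFIG):
    print(finding["path"], finding["rule"], finding["severity"])
# prints app/auth.py hardcoded-credential high
```

Applying overrides before the threshold means a rule the organization cares about is never silenced by a low default severity.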

4. Train Teams on Interpreting Visual Summaries

Reviewers trust AI-generated insights more after seeing how summaries map to actual code changes. A few walkthrough sessions build confidence through familiarity.

5. Iterate Based on Review Metrics and Feedback

Track your metrics monthly. If cycle times aren't improving, investigate why. Maybe reviewers aren't using the artifacts, or maybe the artifacts aren't surfacing the right information.

Security and Compliance Benefits of Automated PR Reviews

For regulated industries or security-conscious teams, visual artifacts provide documentation alongside detection.

Continuous Vulnerability Scanning in Every PR

SAST runs on every changeset—not just periodic scans. Vulnerabilities get caught when they're introduced, not weeks later during a scheduled audit.

Secrets Detection and Exposure Prevention

API keys, passwords, and tokens get flagged before they reach the main branch. A single leaked credential can be very costly, and automated detection catches many of these leaks before they merge.
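A simplified view of how pattern-based secrets detection can work is shown below. It scans only the added lines of a unified diff against a few regular expressions. The pattern set is a small illustrative assumption; production scanners use far larger rule sets plus entropy checks, and the key in the sample is the placeholder value AWS uses in its own documentation.

```python
import re

# Illustrative patterns only; real scanners ship many more rules.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    "generic_assignment": re.compile(
        r"(?i)\b(?:password|secret|api_key|token)\b\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}

SAMPLE_DIFF = """\
+++ b/settings.py
@@ -1,2 +1,4 @@
 DEBUG = False
+AWS_KEY = "AKIAIOSFODNN7EXAMPLE"
+password = "correct-horse-battery"
-old_line = 1
"""


def scan_added_lines(unified_diff):
    """Return (line number within the diff text, pattern name) for each match."""
    findings = []
    for number, line in enumerate(unified_diff.splitlines(), start=1):
        # Only added lines matter; skip the +++ file header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((number, name))
    return findings


print(scan_added_lines(SAMPLE_DIFF))
# prints [(4, 'aws_access_key_id'), (5, 'generic_assignment')]
```

Scanning only added lines keeps the check focused on what the PR introduces, so existing findings elsewhere in the file do not block unrelated changes.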

Audit Trails for Governance and Compliance

Visual artifacts create documentation for SOC 2, HIPAA, and other compliance frameworks. Every PR has a record of what was reviewed, what was flagged, and how issues were resolved.

CodeAnt AI provides unified security, quality, and compliance in a single platform—no juggling multiple point solutions.

Build a Faster and More Reliable Code Review Workflow

Traditional code reviews create bottlenecks through context switching, async delays, and inconsistent feedback. Visual PR artifacts solve these problems by giving reviewers instant context, catching bugs automatically, and creating documentation for compliance.

The organizational ROI is clear: faster cycle times, fewer escaped defects, and happier developers.

Ready to see visual PR artifacts in action? Try our self-hosted version today!
