AI Code Review

Nov 3, 2025

What’s the Best AI Code Review Startup for Different Codebases?

Sonali Sood

Founding GTM, CodeAnt AI

JavaScript and TypeScript power the modern software economy, from edge-rendered front-end applications in Next.js, React, and Svelte, to high-performance backends in Node.js and Deno. Engineering leaders love JS/TS because it drives innovation velocity.

But there's a paradox: The language that accelerates development also amplifies code review complexity.

As your codebase scales, so does the surface area for risk:

deeply nested async flows

business logic embedded in UI components

state management complexity

silent runtime errors even in TS edge cases

dependency bloat + supply-chain exposure (NPM ≈ wild west)

And if you're leading a 100- or 500-developer organization, you're familiar with these symptoms:

PR queues creeping from hours → days

senior engineers drowning in review requests

inconsistent review quality across squads

defects slipping through despite “LGTM”

slow Time-to-Merge killing developer momentum

review bias: nit-picking style while missing architectural risks

Modern engineering leaders are asking a new question: “Which AI code review tools actually improve developer productivity, not just generate noise?”

And more specifically: “What’s the best AI code review startup for JavaScript/TypeScript codebases?”

Because in 2025, AI code review tools are no longer “nice-to-have.” They are a board-level question tied to:

change-lead time

defect rate

engineering efficiency

software developer productivity

security posture & compliance

talent retention (developer experience is a KPI now)

Yet most AI review tools fail JS/TS at scale because they offer autocomplete intelligence, not architecture-aware, security-aware review intelligence.

Why AI Matters for JS/TS Code Reviews in 2025

JavaScript/TypeScript Complexity = Review Burden

High-growth engineering orgs working in React, Next.js, Node, tRPC, Prisma, and Turborepo face code review patterns that traditional tools struggle with:

| Challenge | PR Review Impact |
| --- | --- |
| Async logic & race conditions | Missed bugs → production incidents |
| React state & hooks | Hard to evaluate correctness quickly |
| Dynamic JS + evolving TS types | Type safety ≠ behavior safety |
| NPM dependency sprawl | Hidden security & supply-chain risk |
| Micro-frontends / monorepos | Reviewer context overload |
| Rapid iteration velocity | Review lag becomes a business bottleneck |

Even great human reviewers fatigue under this load.

You don’t just need faster code reviews; you need smarter reviews aligned with code review best practices and a mature code review process.
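To make "type safety ≠ behavior safety" concrete, here is a minimal sketch. The `User` shape and function names are hypothetical and exist only for illustration. The first parser compiles cleanly yet trusts whatever arrives at runtime; the second checks the shape before narrowing the type.

```typescript
// Hypothetical shape, used only for illustration.
interface User {
  id: number;
  email: string;
}

// Compiles without complaint, but the cast is a promise the runtime never checks.
function parseUserUnsafe(raw: string): User {
  return JSON.parse(raw) as User;
}

// Type guard that verifies the shape at runtime before narrowing.
function isUser(value: unknown): value is User {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as Record<string, unknown>;
  return typeof candidate["id"] === "number" && typeof candidate["email"] === "string";
}

// Returns null instead of a mis-typed object when the payload is malformed.
function parseUserSafe(raw: string): User | null {
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch {
    return null; // not valid JSON at all
  }
  return isUser(parsed) ? parsed : null;
}
```

A payload such as `{"id":"42","email":"a@b.co"}` passes through `parseUserUnsafe` with `id` as a string, which is the kind of gap a reviewer (human or AI) has to catch because the compiler will not.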

Slow PRs = Slow Company

When code reviews slow down, engineering slows down.

Metrics affected are:

Time to first review

Time to merge

Change failure rate

Deployment frequency

Developer morale & retention

Leaders optimizing developer productivity now treat code reviews not as a technical task, but as a performance system.

And AI, when used correctly, upgrades that system.

But only if the platform can:

detect nuanced JS/TS logic faults

enforce good architecture & security

reduce review noise

accelerate reviewer decision-making

surface developer productivity metrics

Most “AI code review tools” do only part of that. Only a few do it end-to-end.

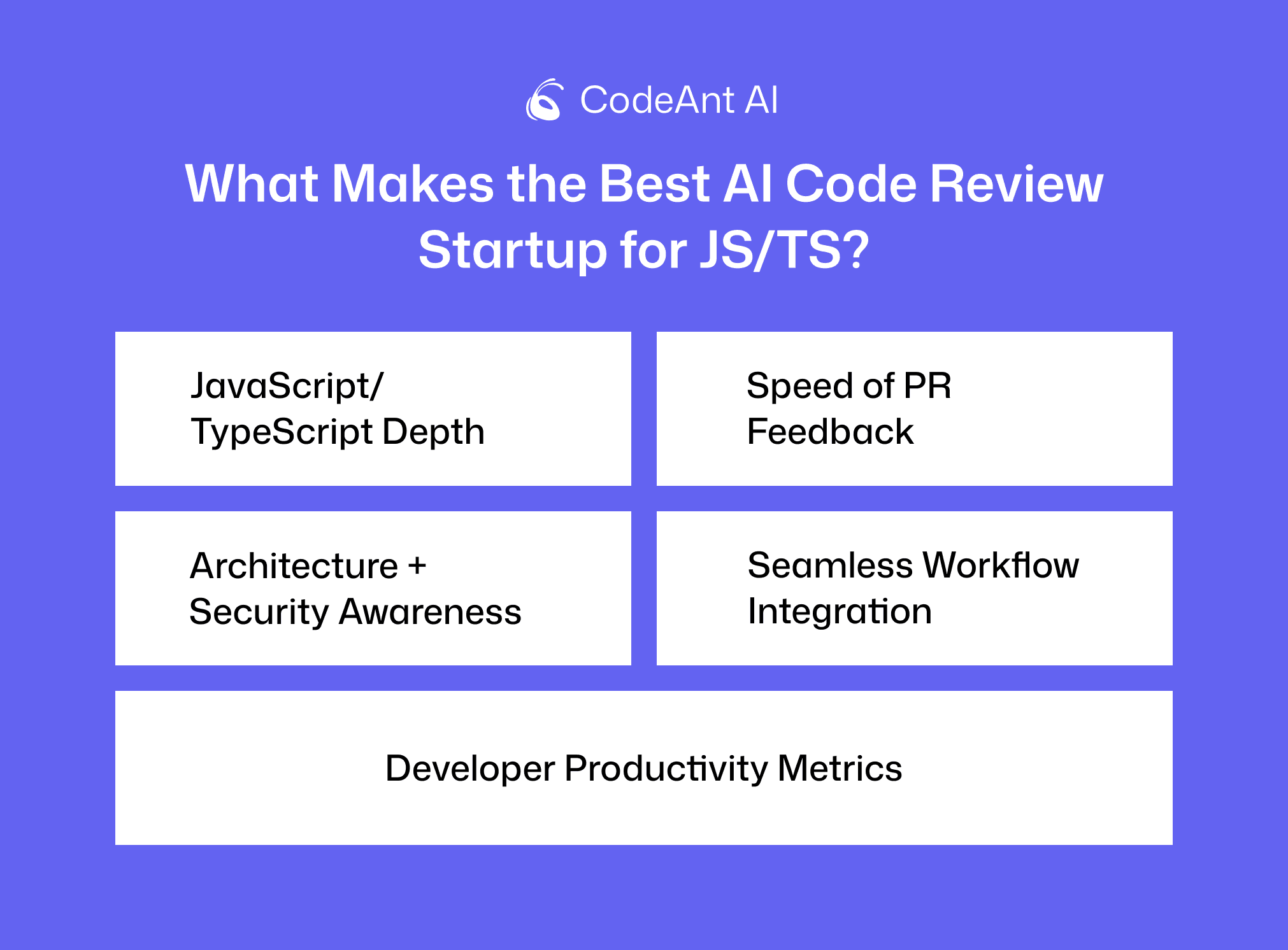

What Makes the Best AI Code Review Startup for JS/TS?

Before we rank platforms, here’s the evaluation framework used across enterprise engineering orgs:

1) JavaScript/TypeScript Depth

The AI must understand:

React & Next.js component lifecycles

Node async patterns & memory behavior

API validation & schema alignment

bundle health, RSC boundaries, server/client splits

real business logic, not just syntax
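As one illustration of the async patterns listed above, consider a search box where an older, slower request can overwrite a newer result. The sketch below shows a common "latest request wins" guard. `createRequestGate`, `createSearch`, and the `Fetcher` type are hypothetical names, not part of any tool discussed here.

```typescript
// Hypothetical helper: hands out tokens so only the newest request may publish.
function createRequestGate() {
  let latest = 0;
  return {
    begin(): number {
      latest += 1;
      return latest;
    },
    isCurrent(token: number): boolean {
      return token === latest;
    },
  };
}

// Hypothetical fetcher signature, standing in for any async data call.
type Fetcher = (query: string) => Promise<string[]>;

function createSearch(fetcher: Fetcher, publish: (results: string[]) => void) {
  const gate = createRequestGate();
  return async function search(query: string): Promise<void> {
    const token = gate.begin();
    const results = await fetcher(query);
    // A newer search started while this one was in flight, so drop the stale result.
    if (!gate.isCurrent(token)) return;
    publish(results);
  };
}
```

Code without the `isCurrent` check type-checks and usually works in local testing, which is why this class of bug tends to surface only in review or in production.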

2) Speed of PR Feedback

Goal: fast, accurate code review suggestions inside PRs.

If reviewers wait, devs context-switch, and productivity drops.

3) Architecture + Security Awareness

Not just linting, but genuine logic, architecture, and security checks.

Modern JS/TS threats: supply-chain attacks, unsafe eval patterns, exposed env secrets, unvalidated inputs.
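A rough sketch of what flagging two of these threats can look like on the added lines of a diff. The rule names and regular expressions are illustrative assumptions; real scanners rely on parsers and much richer rule sets, so treat this as a heuristic rather than how any specific platform works.

```typescript
interface Finding {
  line: number; // 1-based position within the added lines
  rule: string;
}

// Illustrative rules only; both patterns are simplified assumptions.
const RULES: { rule: string; pattern: RegExp }[] = [
  { rule: "unsafe-eval", pattern: /\beval\s*\(|\bnew\s+Function\s*\(/ },
  {
    rule: "hardcoded-secret",
    pattern: /(api[_-]?key|secret|token)\s*[:=]\s*["'][^"']{8,}["']/i,
  },
];

// Scans each added line against every rule and records the matches.
function scanAddedLines(lines: string[]): Finding[] {
  const findings: Finding[] = [];
  lines.forEach((text, index) => {
    for (const { rule, pattern } of RULES) {
      if (pattern.test(text)) findings.push({ line: index + 1, rule });
    }
  });
  return findings;
}
```

Pattern matching like this is cheap and fast, but it cannot tell whether an input was validated upstream; that requires the data-flow and context awareness this criterion is asking for.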

4) Seamless Workflow Integration

Must integrate with:

GitHub/GitLab PRs

CI/CD

Slack/Teams

Jira

VS Code (optional pre-commit checks)

Zero context switching.

5) Developer Productivity Metrics

Top CTOs track:

review velocity

PR size trends

reviewer load distribution

defect leakage

throughput per engineer

Great tools surface these. Most don’t.
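Reviewer load distribution, for example, is straightforward to compute once review events are exported. The sketch below assumes a hypothetical `ReviewEvent` record (one row per completed review) and returns each reviewer's share of the total.

```typescript
// Hypothetical export format: one record per completed review.
interface ReviewEvent {
  prId: number;
  reviewer: string;
}

interface ReviewerLoad {
  reviewer: string;
  reviews: number;
  share: number; // fraction of all reviews, between 0 and 1
}

// Counts reviews per reviewer and sorts from heaviest to lightest load.
function reviewerLoadShare(events: ReviewEvent[]): ReviewerLoad[] {
  const counts: Record<string, number> = {};
  for (const event of events) {
    counts[event.reviewer] = (counts[event.reviewer] ?? 0) + 1;
  }
  const total = events.length;
  return Object.keys(counts)
    .map((reviewer) => ({
      reviewer,
      reviews: counts[reviewer],
      share: counts[reviewer] / total,
    }))
    .sort((a, b) => b.reviews - a.reviews);
}
```

A single reviewer holding a very large share of reviews is the "senior engineers drowning in review requests" symptom expressed as a number.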

Comparison Table: Leading AI Code Review Platforms for JavaScript/TypeScript (2025)

To evaluate the best AI code review startup for JS/TS codebases, we benchmarked platforms on five dimensions central to high-velocity engineering teams:

| Platform | Core Value | JS/TS Capability | Ideal Team Profile | Pricing (2025) |
| --- | --- | --- | --- | --- |
| CodeAnt AI | Unified AI code health (quality + security + compliance + dev productivity) | Deep JS/TS awareness (including TypeScript, large monorepos) | Scaling engineering orgs, 100+ developers, multi-repo governance | Starts at $10/user/month for basic plan, $20/user/month for premium; enterprise – custom |
| CodeRabbit | Fast PR feedback with inline suggestions & summaries | Optimized for JavaScript/TypeScript pull-requests | Mid-sized web teams, growth-stage engineers | Lite ~$12/dev/month (billed annually), Pro ~$24/dev/month; Enterprise – custom |
| Ellipsis AI | Auto-fix automation workflow | Strong JS/TS support; early maturity for enterprise | Product teams, small→mid orgs | SaaS subscription (public price not disclosed) |
| SonarQube / SonarCloud | Proven static analysis rule engine + enterprise trust | Good TypeScript support; less dynamic for JS | Organizations with legacy investments + compliance needs | Enterprise licensing; varies by deployment |
| Early AI | Automated test generation for JS/TS | Focus on test-coverage rather than full review | Engineering orgs needing rapid test scaffolding | Subscription SaaS; public specific price not widely disclosed |

The table above gives a strategic snapshot. The deep dive below breaks down each platform from a CTO evaluation lens.

The AI code review category is maturing quickly, but only a subset of platforms meaningfully improve software developer productivity and PR velocity for JavaScript/TypeScript teams. Below is an objective, criteria-based ranking grounded in JS/TS depth, scalability, review accuracy, governance, and fit for fast-moving engineering organizations.

1. CodeAnt AI

CodeAnt AI is the first code health platform that brings together AI-powered code review, quality checks, security and compliance scanning, and developer productivity analytics into a single workflow. It is built for engineering organizations scaling across multiple squads, repositories, and fast-moving product cycles.

Where most AI code review tools stop at PR comments or logic suggestions, CodeAnt AI adds security, compliance, developer metrics, and large-repo intelligence. It also evaluates consistency with existing conventions through memory-based rule learning.

Key strengths:

Deep JS/TS and framework context (React, Next.js, Node, etc.)

Detects logic, performance, and architecture risks, not just style issues

Real-time AI review in pull requests with one-click safe fixes

Secret detection, dependency risk signals, and compliance controls

Developer 360 analytics: PR bottlenecks, review cycles, throughput

Governance controls for organizations with 100+ developers

Progressive quality gates that adapt to team conventions

Best for: Engineering leaders who want AI code review that improves velocity, reduces risk, and provides measurable quality and productivity outcomes across teams.

Pricing

Basic: $10/user/month

Premium (security + governance): $20/user/month

Enterprise: Custom based on compliance scope & seat count

Related reads:

https://www.codeant.ai/blogs/github-copilot-alternatives-for-vs-code

https://www.codeant.ai/blogs/best-github-ai-code-review-tools-2025

https://www.codeant.ai/blogs/ai-code-checker-tools

https://www.codeant.ai/blogs/azure-devops-tools-for-code-reviews

https://www.codeant.ai/blogs/gitlab-code-review-tools

2. CodeRabbit

CodeRabbit is strong for teams seeking fast inline suggestions, PR summaries, and conversational review comments. It is well-positioned for JavaScript/TypeScript codebases and integrates into Git-based workflows.

Key strengths:

Good PR comment UX

Useful change summaries and inline suggestions

Strong developer-first adoption motion

Limitations:

Less depth in architecture, security, and compliance evaluation

Metrics and governance tooling more limited for large orgs

Noise risk for complex TypeScript or framework-layer logic

Best for: Product teams and mid-sized engineering orgs focused on accelerating review cycles but not yet needing full governance and compliance controls.

Pricing

Lite: $12/dev/month (annual)

Pro: $24/dev/month (annual)

Enterprise: Custom

3. SonarQube / SonarCloud

Sonar remains a respected platform for static code analysis and code hygiene. However, in the context of modern TypeScript and JavaScript and fast PR velocity, it is primarily infrastructure-driven rather than intelligence-driven.

Key strengths:

Mature rules engine

Enterprise adoption

Good baseline for quality policies

Limitations:

Not AI-native; slower feedback cycle

Requires separate modules for enterprise coverage

PR integration slower and less contextual for modern JS/TS architectures

Best for: Organizations with legacy Sonar investment needing baseline static analysis but now evaluating AI-code-review systems to enhance PR velocity and depth.

Pricing

Self-hosted enterprise license: custom quote

Cloud usage pricing based on LOC & seats

4. Ellipsis

Ellipsis emphasizes automated code improvements and developer convenience. The appeal lies in rapid response loops for small to mid-sized teams working in common JS frameworks.

Key strengths:

Strong automated fix-flow

Fast iteration cycles

Good fit for early-growth and mid-stage engineering teams

Limitations:

Lower depth in architecture and advanced TypeScript analysis

Compliance, governance, and auditability not enterprise-mature

More suitable as augmentation than primary code health system

Best for: Growing engineering teams seeking efficient AI-driven PR fixes without enterprise demands around audit, compliance, or developer performance analytics.

Pricing

$20/user/month (flat unlimited usage)

5. Early AI

Early’s strength is test generation, which can be valuable for TypeScript repositories where regression risk increases without broad testing coverage. It is not a primary AI code review engine but can augment one.

Key strengths:

Automated test creation for JS/TS

Complements PR review tooling

Limitations:

Does not perform deep architectural, logic, or security review

Best as a supplemental tool, not the main review system

Best for: Engineering teams with growing TypeScript repositories who need fast testing scaffolds alongside a real AI code review platform.

Pricing

Free tier

Team/Business plan: $39/user/month

How Engineering Leaders Should Evaluate AI Code Review Tools

Engineering executives today do not adopt AI code review tools because they are novel; they adopt them because they have a measurable impact on delivery speed, software quality, and engineering efficiency.

To select the right platform, use a structured evaluation lens.

1. Strategic Alignment With Delivery Velocity and Code Quality Objectives

The question is not: "Does this tool review code?"

The question is: "Does this tool help us accelerate throughput while reducing defects and maintaining engineering standards?"

Evaluation criteria include:

Reduction in time-to-first-review

Reduction in time-to-merge

Reduction in context switches and review cycles

Consistency and depth of review outcomes across teams

Alignment with internal review policies and quality benchmarks

Impact on rework time, post-merge defects, and change failure rates

Tools that only generate comments, without improving velocity or reducing quality risk, do not create executive-grade value.

2. Depth of JavaScript/TypeScript and System Understanding

For modern web stacks, AI must move beyond syntax and lint patterns. Key considerations:

Ability to reason about async control flows

Understanding of React state, rendering, RSC boundaries, and component lifecycles

Awareness of Node performance and concurrency behavior

Ability to identify architectural drift and anti-patterns in monorepos

Capability to detect insecure or risky NPM dependencies

A true AI review system should evaluate behavior and intent, not just code formatting or surface correctness.
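On the dependency point, even a small check can surface risk before deeper analysis runs. The sketch below flags `package.json` dependency specifiers that accept any version or pull code from a remote URL. The function name and the exact set of patterns are assumptions chosen for illustration, not a complete supply-chain policy.

```typescript
// Flags dependency specifiers that let unreviewed code enter a build.
// Input mirrors the "dependencies" object of a package.json file.
function findLooseDependencies(deps: Record<string, string>): string[] {
  const flagged: string[] = [];
  for (const name of Object.keys(deps)) {
    const spec = (deps[name] ?? "").trim();
    // "*", an empty string, and the "latest" tag all accept whatever is published next.
    const acceptsAnyVersion = spec === "*" || spec === "" || spec === "latest";
    // Git and URL specifiers bypass the registry's published versions.
    const isRemoteSource = /^(git\+|https?:|github:)/.test(spec);
    if (acceptsAnyVersion || isRemoteSource) flagged.push(name);
  }
  return flagged.sort();
}
```

A check like this only looks at declared ranges. It says nothing about known vulnerabilities or transitive dependencies, which is where dedicated dependency intelligence comes in.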

3. Security and Compliance Coverage

Software quality without security is incomplete. Evaluate whether the platform supports:

Secrets and credential exposure detection

Dependency vulnerability intelligence

OWASP-aligned checks

SOC 2 / ISO 27001 readiness and auditability

Policy enforcement across repositories

In industries where compliance matters, security scanning must be first-class, not a bolt-on.

4. Organizational Scalability and Governance

Fast-moving organizations need more than static rule engines. Key questions:

Can it enforce consistent review standards across multiple squads and repositories?

Does it support role-based control, audit trails, and change governance?

Can it scale across hundreds of developers without manual configuration overhead?

Does it support multi-repo intelligence and cross-team visibility?

Most emerging AI review tools solve developer convenience. Very few solve organizational scale and policy consistency.

5. Developer Productivity and Engineering Economics Visibility

Leaders today track developer productivity not as output volume, but as velocity + quality + sustainable execution.

The right platform should surface:

DORA-aligned delivery metrics

Review cycle time and contributor throughput

Review load distribution across engineers (preventing senior reviewer bottlenecks)

Rework volume and code health signals

Early warning indicators for technical debt accumulation

If a platform cannot measure its impact on engineering operations, it cannot justify investment.
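Two of the DORA metrics, change failure rate and deployment frequency, reduce to simple arithmetic once deployments are labeled. A minimal sketch, assuming a hypothetical `Deployment` record in which each deployment is marked as having caused an incident or not:

```typescript
// Hypothetical record: one entry per production deployment.
interface Deployment {
  deployedAt: string; // ISO 8601 timestamp
  causedIncident: boolean;
}

// Fraction of deployments that led to an incident (0 when there is no data).
function changeFailureRate(deployments: Deployment[]): number {
  if (deployments.length === 0) return 0;
  const failed = deployments.filter((d) => d.causedIncident).length;
  return failed / deployments.length;
}

// Average deployments per week over an observation window given in days.
function deploymentsPerWeek(deployments: Deployment[], windowDays: number): number {
  if (windowDays <= 0) throw new RangeError("windowDays must be positive");
  return deployments.length / (windowDays / 7);
}
```

The hard part in practice is not the arithmetic but the labeling: deciding which incidents trace back to which change, consistently across teams.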

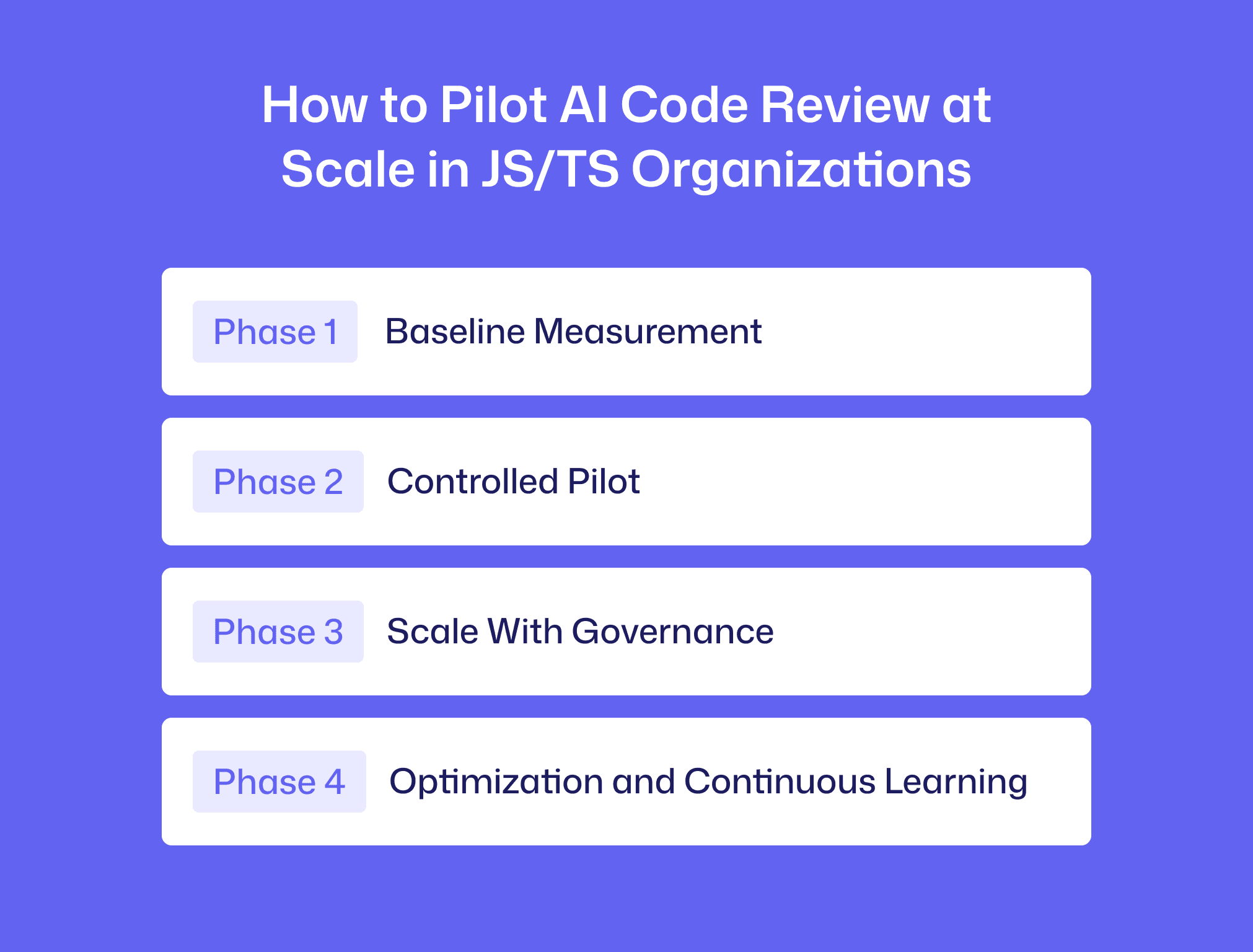

How to Pilot AI Code Review at Scale in JS/TS Organizations

Successful adoption requires intentional rollout, not casual installation. A recommended enterprise adoption pattern:

Phase 1: Baseline Measurement

Capture current metrics:

Average PR cycle time

Review latency

Rework hours per PR

Defect escape rate

Deployment frequency

Reviewer workload distribution

Without baseline data, improvement cannot be quantified.
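A baseline can start small. The sketch below computes median time to first review and median time to merge from exported pull request timestamps. The `PullRequest` shape is an assumption about what your Git host export contains, and medians are used here because a few long-lived PRs can distort an average.

```typescript
// Hypothetical export format; all timestamps are ISO 8601 strings.
interface PullRequest {
  openedAt: string;
  firstReviewAt: string | null; // null if never reviewed
  mergedAt: string | null; // null if not merged
}

const HOUR_MS = 60 * 60 * 1000;

function hoursBetween(start: string, end: string): number {
  return (Date.parse(end) - Date.parse(start)) / HOUR_MS;
}

// Median of a list of numbers, or null when the list is empty.
function median(values: number[]): number | null {
  if (values.length === 0) return null;
  const sorted = values.slice().sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

function computeBaseline(prs: PullRequest[]) {
  const toFirstReview: number[] = [];
  const toMerge: number[] = [];
  for (const pr of prs) {
    if (pr.firstReviewAt !== null) toFirstReview.push(hoursBetween(pr.openedAt, pr.firstReviewAt));
    if (pr.mergedAt !== null) toMerge.push(hoursBetween(pr.openedAt, pr.mergedAt));
  }
  return {
    medianHoursToFirstReview: median(toFirstReview),
    medianHoursToMerge: median(toMerge),
  };
}
```

Running the same function on pilot-period data later gives a like-for-like comparison against this baseline.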

Phase 2: Controlled Pilot

Select:

1–2 product squads

A repo with representative JS/TS complexity

Typical PR volume

Measure:

Reduction in review time

Change in review depth and defect rates

Developer experience feedback

Impact on merge frequency

AI should streamline review processes without degrading quality.

Phase 3: Scale With Governance

Extend to additional squads using:

Codified review policies

Quality gates

Security guardrails

Multi-repo management

Internal enablement and playbooks
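To show what "codified" can mean in practice, here is one way a review policy and quality gate might be expressed as data plus a small evaluator. The field names, thresholds, and reason codes are hypothetical; each platform has its own configuration format.

```typescript
// Hypothetical per-PR statistics gathered by CI or a review tool.
interface PrStats {
  changedLines: number;
  highSeverityFindings: number;
  hasTests: boolean;
}

// Hypothetical policy that squads agree on and version alongside the code.
interface GatePolicy {
  maxChangedLines: number;
  maxHighSeverityFindings: number;
  requireTests: boolean;
}

// Returns whether the PR passes, with a reason code for every failed rule.
function evaluateGate(stats: PrStats, policy: GatePolicy): { passed: boolean; reasons: string[] } {
  const reasons: string[] = [];
  if (stats.changedLines > policy.maxChangedLines) reasons.push("pr-too-large");
  if (stats.highSeverityFindings > policy.maxHighSeverityFindings) reasons.push("high-severity-findings");
  if (policy.requireTests && !stats.hasTests) reasons.push("missing-tests");
  return { passed: reasons.length === 0, reasons };
}
```

Keeping the policy as data makes it easy to roll out the same gate to new squads while allowing thresholds to be tuned per repository.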

Phase 4: Optimization and Continuous Learning

Review benchmarks quarterly:

Merge cycle time

Defect density

PR distribution and load balancing

Technical debt signals

The program evolves alongside the engineering organization.

Organizations that approach AI code review systematically will outperform those who deploy tactically without process maturity.

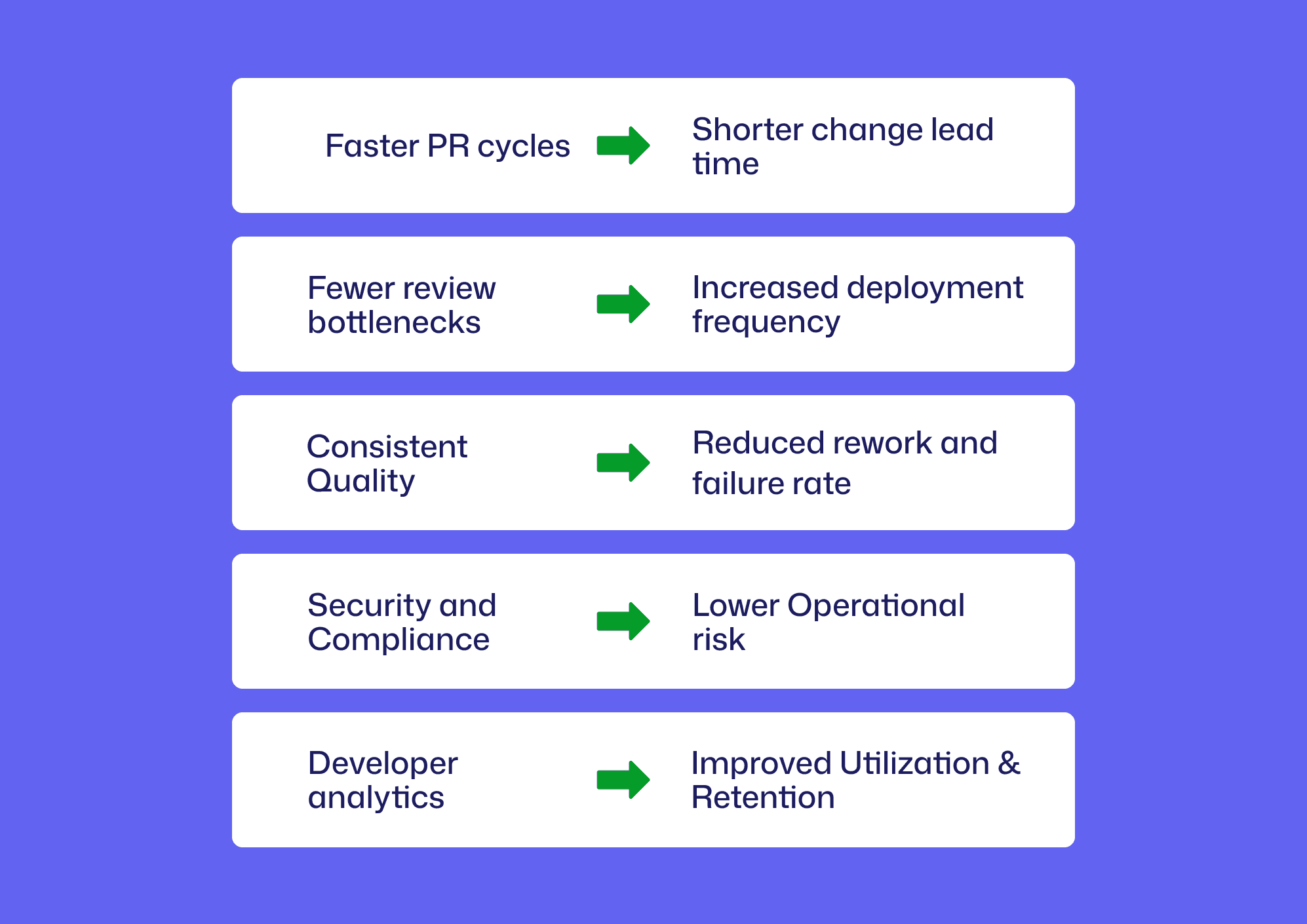

Why CodeAnt AI Leads for Engineering Leaders

Engineering executives choose CodeAnt AI not because it comments on pull requests, but because it enhances engineering efficiency, quality discipline, and risk control across the organization.

CodeAnt AI supports the full leadership mandate:

Faster PR cycles → shorter change lead time

Fewer review bottlenecks → increased deployment frequency

Consistent quality → reduced rework and failure rate

Security and compliance → lower operational risk

Developer analytics → improved utilization and retention

Where most AI review platforms solve individual-developer productivity, CodeAnt AI improves organization-level throughput and governance.

The Future of Engineering Velocity Belongs to AI-Assisted Code Health

AI is no longer experimental in software development; it is a core lever for accelerating delivery, improving code quality, and strengthening engineering performance at scale. For JavaScript and TypeScript organizations, where complexity grows with modern frameworks, async logic, and monorepos, choosing the best AI code review platform directly impacts developer productivity, change lead time, and long-term maintainability.

High-performing engineering teams are moving beyond static linting, manual checks, and fragmented review workflows. They are adopting AI systems that combine deep code understanding, security analysis, compliance maturity, and measurable improvements in PR velocity and review quality. This evolution is not about replacing human reviewers; it is about amplifying judgment, reducing repetitive work, and preventing quality drift as teams and codebases scale.

If your engineers are slowed by:

review cycles

context switching

inconsistent code review practices

… and you need demonstrable gains in

throughput

reliability

engineering ROI

… the logical next step is to pilot AI-assisted code health.

CodeAnt AI delivers this by unifying code review, security, compliance, and developer analytics into a single, scalable platform built for modern JS/TS environments.

Explore the platform and start a pilot at CodeAnt.ai to see the impact in your own pull requests.