AI Code Review

Feb 8, 2026

How AI Code Review Affects Code Quality and Developer Productivity

Sonali Sood

Founding GTM, CodeAnt AI

You've seen the conflicting headlines: AI code review cuts review time by 55%. Or AI slows developers down by 19%. Both are true, and understanding why reveals everything about choosing the right tool.

The answer isn't yes or no. It's "it depends on which AI." Pattern-matching tools like SonarQube flag hundreds of issues per PR, creating alert fatigue while missing architectural problems. Generic AI assistants speed up surface-level tasks but let critical vulnerabilities slip through. The result? Teams face a false choice between velocity and quality.

This article shows how context-aware AI code review delivers both, reducing review time 40-60% while catching security and maintainability issues that pattern-matching misses. You'll learn when AI fails, which metrics prove real impact, and how to run a 30-day pilot that produces clear ROI data.

The Productivity-Quality Paradox: Why Research Contradicts Itself

GitHub's Copilot study showed developers completing tasks 26% faster. METR's research found AI tools slowed developers by 19% on complex work. Both studies are correct. The difference is what the AI understands.

Pattern-matching tools analyze code diffs in isolation, lacking architectural context. Context-aware platforms understand your full codebase, organizational standards, and security posture. This distinction determines everything.

What the Data Actually Shows

Productivity evidence:

GitHub Copilot: 26% faster for code generation tasks

Microsoft internal: 10-20% faster PR completion with context-aware AI

METR study: 19% slowdown for experienced developers using AI tools on complex work in mature codebases

Quality evidence:

ACM research: 15-30% more security vulnerabilities caught pre-production

Microsoft Engineering: 84% reduction in MTTR when AI flagged architectural issues during review

Industry surveys: 70%+ of developers ignore pattern-matching findings due to false positives

The pattern is clear: AI improves both outcomes when it understands context, but degrades both when it doesn't.

Why Pattern-Matching Creates False Trade-Offs

Traditional static analysis operates on a simple model: scan code against pattern databases, flag everything that matches. On a typical enterprise PR:

SonarQube output:

847 issues found

├─ 412 Code Smells (complexity, duplication)

├─ 289 Minor Issues (formatting, naming)

├─ 124 Security Hotspots (potential vulnerabilities)

└─ 22 Bugs (null pointer risks, type mismatches)

The problem? Most findings lack context:

False positives: Flagging intentional complexity in performance-critical code

Irrelevant noise: Style violations in generated code

Missing severity: Treating minor issues like critical vulnerabilities

The result is alert fatigue. Developers learn to ignore noise, which means they miss real issues. You get slower reviews (triaging false positives) and lower quality (critical bugs buried in noise).

The Hidden Cost of "Faster" Reviews

Some AI tools accelerate reviews by auto-approving PRs that pass basic checks. This creates dangerous illusions:

What gets missed without architectural context:

Authentication bypass only visible across multiple services

Performance regression invisible in the diff but catastrophic at scale

Security misconfiguration compliant with generic rules but violating your zero-trust model

Microsoft research shows catching architectural issues during review reduces MTTR by 84% versus finding them in production. Speed without context doesn't improve productivity; it defers work to the worst possible moment.

How Context-Aware AI Delivers Both Quality and Productivity

The AI code review landscape has evolved through three generations:

First generation: Manual review

Senior engineers spend 4-6 hours weekly reviewing code. Quality depends on reviewer availability. Bottlenecks are inevitable.

Second generation: Pattern-matching AI

Tools apply predefined rules across your codebase. They flag eval() usage and SQL concatenation, but don't understand your architecture. SonarQube flags 847 issues; your team ignores 600+ as false positives.

Third generation: Context-aware AI

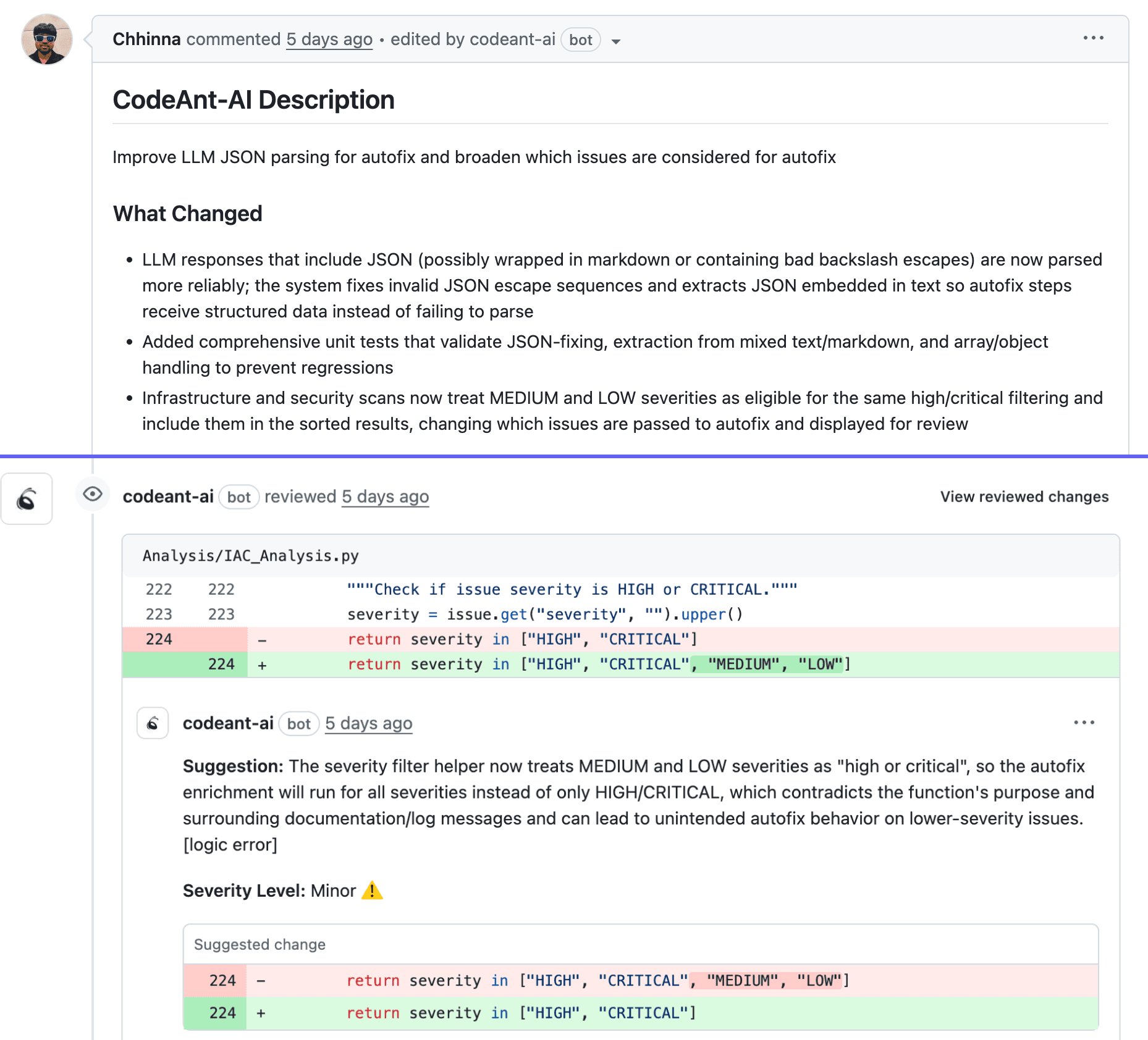

Platforms like CodeAnt AI build complete understanding of your repository: dependency graphs, historical patterns, organizational standards, and risk profiles. They know a SQL query in your ORM layer is safe because they've traced parameterization through three abstraction layers.

What Context-Awareness Means in Practice

Repository-wide understanding

Context-aware AI analyzes how changes interact with existing code across the entire codebase. When you modify authentication middleware, it traces impact through every dependent route.

Dependency graph awareness

Your codebase is a network of dependencies. Context-aware systems map this network and understand blast radius. They know changing a core data model affects 47 downstream services and prioritize based on critical path impact.
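To make "blast radius" concrete, here is a minimal sketch of how it could be computed from a reverse-dependency map. The component names and the `DEPENDENTS` map are hypothetical, and this illustrates the general idea only; it is not a description of how any particular tool implements it.

```python
from collections import deque

def blast_radius(dependents: dict[str, list[str]], changed: str) -> set[str]:
    """Return every component that directly or transitively depends on `changed`."""
    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for dep in dependents.get(node, []):
            if dep not in seen and dep != changed:
                seen.add(dep)
                queue.append(dep)
    return seen

# Hypothetical reverse-dependency map: component -> components that depend on it.
DEPENDENTS = {
    "user_model": ["auth_service", "billing_service"],
    "auth_service": ["api_gateway"],
    "billing_service": ["api_gateway", "invoice_worker"],
}

affected = blast_radius(DEPENDENTS, "user_model")
print(sorted(affected))
# ['api_gateway', 'auth_service', 'billing_service', 'invoice_worker']
```

A change to a leaf component such as `invoice_worker` returns an empty set, which is one simple signal that a finding there deserves lower priority than one in a core model.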

Risk-based prioritization

Not all issues are equal. CodeAnt AI's prioritization considers:

Exposure: Is this code path reachable from external requests?

Sensitivity: Does it handle PII, credentials, or financial data?

Blast radius: How many users or services depend on this component?

Change frequency: Is this stable core or rapidly evolving code?

On the same PR where SonarQube flagged 847 issues, CodeAnt AI flags 63, all critical, all actionable, all relevant to your architecture.
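The four factors above can be combined into a single ranking score. The sketch below is an illustration only: the `Finding` fields, the weights, and the caps are assumptions chosen for readability, not CodeAnt AI's actual model.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    title: str
    externally_reachable: bool    # exposure
    handles_sensitive_data: bool  # sensitivity
    dependents: int               # blast radius
    changes_last_90_days: int     # change frequency

def risk_score(f: Finding) -> float:
    """Hypothetical weighting: exposure and sensitivity dominate the score."""
    score = 4.0 if f.externally_reachable else 0.0
    score += 3.0 if f.handles_sensitive_data else 0.0
    score += min(f.dependents, 20) / 10            # contributes at most 2.0
    score += min(f.changes_last_90_days, 10) / 10  # contributes at most 1.0
    return score

findings = [
    Finding("Long method in report script", False, False, 0, 1),
    Finding("Unvalidated redirect in login", True, True, 12, 8),
]

# Highest-risk findings first.
ranked = sorted(findings, key=risk_score, reverse=True)
for f in ranked:
    print(f"{risk_score(f):.1f}  {f.title}")
```

Whatever the exact weights, the design point is that two findings with the same rule ID can land at opposite ends of the list once reachability and data sensitivity are taken into account.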

Measurable Outcomes

| Metric | Pattern-Matching | Context-Aware (CodeAnt AI) |
|---|---|---|
| Review time reduction | 15-25% (offset by triage) | 40-60% (clean signal) |
| Critical issues caught | 60-70% (high false negatives) | 95%+ (architectural understanding) |
| Alert fatigue | 70%+ ignored | <10% dismissal rate |
| Developer satisfaction | Mixed (tool fights workflow) | High (enhances workflow) |

Review time: 40-60% faster

CodeAnt's AI-generated PR summaries give reviewers instant context. Instead of reading 300 lines to understand intent, they get: "Refactor authentication to support OAuth2, maintains backward compatibility, adds rate limiting."

Noise reduction: 80%+

By understanding your architecture, CodeAnt AI eliminates false positives. Teams go from 40 minutes per PR spent on "is this real?" triage to under 5 minutes.

Critical issue catch rate: 95%+

Context-awareness means catching issues that matter: authentication bypasses, data leaks, architectural violations. CodeAnt understands your security model well enough to flag when you've accidentally exposed internal APIs.

The Three Quality Dimensions AI Must Address

Effective code quality AI operates across three dimensions: security, maintainability, and standards compliance. Pattern-matching tools treat these as independent checklists, but context-aware platforms understand how they intersect within your architecture.

Security: Beyond Patterns to Contextual Threat Analysis

Why context matters:

Supply chain risk: A vulnerable lodash version buried three levels deep poses real risk, but pattern matchers only flag direct imports. CodeAnt traces the full dependency graph.

Secrets detection: Finding API_KEY = "abc123" in a test fixture differs from production config. Context-aware analysis understands your repository structure and deployment patterns.
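As a rough illustration of the secrets-detection point, the sketch below rates the same hardcoded value differently depending on where the file lives. The regex, the `TEST_DIRS` names, and the two severity labels are assumptions for this example; a real analysis would draw on far more repository context than a path check.

```python
import re
from pathlib import PurePosixPath
from typing import Optional

# Simplified pattern for hardcoded credentials (illustrative, not exhaustive).
SECRET_PATTERN = re.compile(r"""(?i)\b(api_key|secret|token)\s*=\s*["'][^"']+["']""")

# Hypothetical directory names that mark test-only code in this repository.
TEST_DIRS = {"tests", "test", "fixtures", "__mocks__"}

def classify_secret(path: str, line: str) -> Optional[str]:
    """Return a severity for a hardcoded secret, or None if the line has none."""
    if not SECRET_PATTERN.search(line):
        return None
    parts = set(PurePosixPath(path).parts)
    return "low" if parts & TEST_DIRS else "critical"

line = 'API_KEY = "abc123"'
print(classify_secret("tests/fixtures/client.py", line))  # low
print(classify_secret("config/production.py", line))      # critical
```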

Concrete example: A fintech team using SonarQube received 847 security findings on a PR introducing OAuth integration. 812 were false positives. CodeAnt analyzed the same PR and surfaced 9 critical issues, including an exposed client secret, missing token expiration validation, and a race condition in the session handler. The difference? Understanding the authentication flow architecture.

Maintainability: Identifying Architectural Debt

Why codebase-aware analysis matters:

Complexity hotspots: A function with complexity 15 isn't problematic if called once. But in your critical path, invoked 10,000 times per request, it's a maintenance liability. CodeAnt maps call graphs to identify high-impact complexity.

Duplication detection: Copy-pasting validation across three microservices creates debt, but pattern matchers can't distinguish this from legitimate similar code.

Example: An e-commerce checkout service had 3,200 lines with 47% coverage. SonarQube flagged 156 maintainability issues across the codebase. CodeAnt identified the real problem: a 400-line processPayment() function handling five providers with nested conditionals, called by every checkout flow. Refactoring this one function eliminated 23 downstream bugs.
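The complexity-hotspot idea can be sketched by weighting each function's complexity by how often it runs. The function names and numbers below are hypothetical, and multiplying the two values is just one simple way to express "high-impact complexity".

```python
# (function name, cyclomatic complexity, calls per request) - hypothetical data
functions = [
    ("export_report", 15, 1),
    ("price_line_item", 15, 10_000),
    ("parse_flags", 30, 2),
]

def impact(entry: tuple[str, int, int]) -> int:
    """Weight complexity by call frequency so hot paths rise to the top."""
    _, complexity, calls_per_request = entry
    return complexity * calls_per_request

hotspots = sorted(functions, key=impact, reverse=True)
for name, complexity, calls in hotspots:
    print(f"{name}: complexity={complexity}, calls/request={calls}")
```

Note that `parse_flags` has the highest raw complexity but ranks below `price_line_item`, which is the behavior a complexity-only threshold cannot reproduce.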

Standards: Enforcing Organization-Specific Rules

Why customization is critical:

Compliance controls: Your SOC2 audit requires all API endpoints log authentication attempts with specific fields. Pattern matchers check for logging statements; only context-aware AI verifies log format matches your compliance schema.

Architectural conventions: Your team requires all feature flags registered in a central service. This isn't universal—it's your architectural decision. CodeAnt learns these patterns and enforces them automatically.

Measuring Real Productivity Beyond Review Speed

Review velocity means nothing if deployment frequency drops, change failure rate climbs, or engineers spend more time fixing escaped defects. Real productivity requires connecting AI review to DORA metrics and hidden costs.

Instrument What Drives Business Outcomes

DORA metrics AI should improve:

Deployment frequency: Context-aware AI reduces "fix-retest-redeploy" cycles. CodeAnt customers see 15-25% increases because PRs pass with fewer iterations.

Lead time for changes: Measure commit to production, not just PR to merge. If AI speeds PR but introduces defects requiring hotfixes, lead time increases.

Change failure rate: Pattern-matching tools flag 800+ issues but miss architectural flaws causing incidents. CodeAnt's context-aware filtering reduces noise 80% while maintaining security coverage.

MTTR: AI-generated PR summaries and architectural context accelerate incident response. CodeAnt customers report 84% MTTR reductions.

PR-specific analytics:

PR cycle time: Median time open to merge should decrease 30-50%

Review depth: Ratio of strategic comments to nitpicks should shift toward architecture

Rework rate: Percentage of PRs requiring multiple cycles should drop

Escaped defects: Production issues that should've been caught in review
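Two of these PR metrics, cycle time and rework rate, are straightforward to compute once you export PR records. This is a minimal sketch; the record fields (`opened`, `merged`, `review_cycles`) are assumed names, and real data would come from your Git host.

```python
from datetime import datetime
from statistics import median

# Hypothetical PR records for illustration.
prs = [
    {"opened": datetime(2026, 1, 5, 9), "merged": datetime(2026, 1, 5, 15), "review_cycles": 1},
    {"opened": datetime(2026, 1, 6, 10), "merged": datetime(2026, 1, 7, 10), "review_cycles": 3},
    {"opened": datetime(2026, 1, 7, 8), "merged": datetime(2026, 1, 7, 20), "review_cycles": 2},
]

def cycle_hours(pr: dict) -> float:
    """Hours from PR open to merge."""
    return (pr["merged"] - pr["opened"]).total_seconds() / 3600

# Median is less sensitive than the mean to one long-lived PR.
median_cycle_hours = median(cycle_hours(pr) for pr in prs)

# Share of PRs that needed more than one review cycle.
rework_rate = sum(pr["review_cycles"] > 1 for pr in prs) / len(prs)

print(f"median cycle time: {median_cycle_hours:.1f} h")
print(f"rework rate: {rework_rate:.0%}")
```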

Avoid the False Precision Trap

Connect metrics to decisions:

The Compounding Value of Reduced Context-Switching

Multi-tool workflows tax productivity through cognitive overhead. Every switch from GitHub to SonarQube to Snyk costs 5-10 minutes. Across a 100-person org doing 50 PRs daily, a single switch per PR adds up to 250-500 minutes a day, or roughly 21-42 hours per five-day week, and the total grows with every additional tool and reviewer involved.

CodeAnt's single-pane-of-glass eliminates this tax. Security findings, quality metrics, and productivity analytics live where engineers review code. The gain isn't just faster reviews; it's the removal of workflow friction that compounds across every PR.
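The context-switching cost is sensitive to its inputs, so it is worth recomputing with your own numbers. The sketch below assumes one tool switch per PR and a five-day week by default; both are assumptions you should adjust, since several switches and several reviewers per PR multiply the result.

```python
def weekly_hours_lost(prs_per_day: int, minutes_per_switch: float,
                      switches_per_pr: int = 1, workdays: int = 5) -> float:
    """Estimate hours per week spent switching between review tools."""
    total_minutes = prs_per_day * minutes_per_switch * switches_per_pr * workdays
    return total_minutes / 60

low = weekly_hours_lost(prs_per_day=50, minutes_per_switch=5)
high = weekly_hours_lost(prs_per_day=50, minutes_per_switch=10)
print(f"{low:.1f} to {high:.1f} hours per week")  # 20.8 to 41.7 hours per week
```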

When AI Code Review Fails (And How to Avoid It)

Even promising tools backfire without understanding failure modes. Here's where things go wrong and how to prevent it.

The Alert Fatigue Trap

Symptom: Your team ignores 70%+ of automated findings, PRs accumulate unresolved comments, developers bypass the tool.

Pattern-matching tools generate noise at scale. Typical enterprise PR:

SonarQube: 847 flagged issues

CodeAnt AI: 63 critical issues

The fix:

Demand context-aware filtering: Tools should analyze data flow, not just syntax. CodeAnt tracks whether user input reaches that SQL query or if validation happens upstream.

Measure precision, not just recall: Ask vendors for false positive rate on your codebase during the pilot. A tool catching 100% of issues but flagging 800 false positives is worse than one catching 95% with 10 false positives.

Configure severity thresholds: Start by auto-blocking only P0/P1 issues. Expand as the team builds trust.
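The precision-versus-recall comparison above is easier to see with numbers. This sketch assumes a hypothetical set of 100 real issues, so that "100%" and "95%" caught translate into true-positive counts.

```python
def precision(true_pos: int, false_pos: int) -> float:
    """Share of flagged findings that are real issues."""
    return true_pos / (true_pos + false_pos)

def recall(true_pos: int, false_neg: int) -> float:
    """Share of real issues that the tool flagged."""
    return true_pos / (true_pos + false_neg)

# Assumption: 100 real issues exist in the code under review.
noisy = {"tp": 100, "fp": 800, "fn": 0}   # catches everything, 800 false positives
focused = {"tp": 95, "fp": 10, "fn": 5}   # catches 95%, 10 false positives

for name, t in (("noisy", noisy), ("focused", focused)):
    p = precision(t["tp"], t["fp"])
    r = recall(t["tp"], t["fn"])
    print(f"{name}: precision={p:.3f} recall={r:.2f}")
```

Under these assumptions, roughly one in nine findings from the noisy tool is real, while about nine in ten from the focused tool are, which is why developers stop reading the former.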


Why Generic AI Can't Enforce Your Standards

Symptom: AI suggestions conflict with team patterns, fail to catch org-specific anti-patterns, can't enforce compliance like SOC2.

Off-the-shelf models trained on public repos don't know that your team:

Requires all database queries use your internal ORM wrapper

Has custom authentication flow generic AI flags as "insecure"

Must log all PII access for compliance

Follows specific error-handling patterns for microservices

The fix:

Choose tools that learn from your codebase: CodeAnt analyzes repository history to understand team-specific patterns, naming conventions, architectural decisions.

Define custom rules with business context: Map compliance requirements to code patterns.

Validate during pilot: Test on 20-30 historical PRs. If it flags accepted code or misses known issues, the tool lacks context-awareness.

The Integration Overhead That Kills Gains

Symptom: Your team spends more time managing tools than writing code.

Typical enterprise stack:

| Tool | Purpose | Context Switching Cost |
|---|---|---|
| SonarQube | Quality & tech debt | 5-10 min per PR |
| Snyk | Dependency security | 3-5 min per PR |
| GitHub Advanced Security | Secrets, CodeQL | 2-4 min per PR |
| Custom dashboards | DORA metrics | 15-20 min weekly |
| Total | Fragmented | 10-19 min per PR, plus 15-20 min weekly |

The fix:

Consolidate to a unified platform: CodeAnt AI provides security, quality, compliance, and DORA metrics in one interface.

Calculate true TCO: Factor in engineering time on tool management, context-switching overhead, opportunity cost of fragmented insights.

Pilot with integration metrics: Track time-to-first-review and time-to-merge before and after consolidation.

Evaluation Framework: What to Test in Your Repos

Don't evaluate on vendor demos. Run structured pilots on your actual codebase.

Build Your Scorecard

Context-awareness

Test: Introduce a change that violates an existing architectural pattern (e.g., bypassing auth middleware). Context-aware AI should flag it; diff-only tools miss it.

CodeAnt analyzes the full repository graph to catch issues requiring broader context.

Customization

Test: Define a custom rule ("all database queries must use parameterized statements in our ORM"). See if the AI enforces it consistently.

Generic AI can't adapt; CodeAnt ingests existing guidelines and enforces automatically.

Integration depth

Test: Count the tools needed to understand a single PR's health. Track tabs, logins, and context switches.

CodeAnt unifies vulnerabilities, code smells, complexity, and DORA metrics in one interface.

Precision and recall

Test: Run the tool on a recent PR where you already know the real issues. Calculate precision as (true positives) / (true positives + false positives) and recall as (true positives) / (true positives + false negatives).

Pattern-matching flags 800+ per PR; CodeAnt's context-awareness reduces noise 80% while maintaining coverage.

Run an A/B Pilot

Week 1: Baseline

Select 2-3 representative repositories

Install CodeAnt and comparison tool(s)

Collect baseline: median PR review time, security incidents, friction points

Weeks 2-3: Active comparison

Both tools run in parallel on new PRs

Track scorecard metrics

Survey developers weekly on usefulness

Document specific successes and failures

Week 4: Analysis

Compare final metrics against baseline

Calculate ROI: (time saved + incidents prevented + tools consolidated) - (setup + subscription)

Review qualitative feedback

Make data-backed decision
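The ROI formula from the Week 4 analysis can be turned into a small calculation once every term is expressed in the same currency. All figures below are hypothetical placeholders; substitute your own pilot data.

```python
def pilot_roi(time_saved: float, incidents_prevented: float,
              tools_consolidated: float, setup: float, subscription: float) -> float:
    """(time saved + incidents prevented + tools consolidated) - (setup + subscription)."""
    return (time_saved + incidents_prevented + tools_consolidated) - (setup + subscription)

# Hypothetical pilot numbers, all converted to dollars.
hours_saved, hourly_rate = 120, 90
roi = pilot_roi(
    time_saved=hours_saved * hourly_rate,  # 10,800
    incidents_prevented=2 * 5_000,         # two avoided incidents at an assumed cost each
    tools_consolidated=1_500,              # licenses retired during the pilot
    setup=3_000,
    subscription=2_000,
)
print(roi)  # 17300
```

The hardest term to defend is usually incidents prevented, so it helps to report ROI with and without it.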

Getting Started: Your 30-Day Pilot Plan

Phase 1: Pick Your Repository

Choose a high-PR-volume repository where review bottlenecks are visible:

20+ PRs per week for statistical significance

Clear ownership for fast feedback

Representative complexity reflecting broader codebase

Existing pain points: long cycles, security escapes, inconsistent standards

Phase 2: Define Acceptance Criteria

Set measurable 30-day targets:

Reduce PR cycle time by 30-40%

Reduce critical security/quality escapes by 50%+

Maintain or improve review thoroughness

Achieve <10% false positive rate

Document baseline before enabling CodeAnt.
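Writing the acceptance criteria as code before the pilot starts keeps the targets from drifting afterward. This is a minimal sketch using the lower bounds of the targets above; the metric names and sample values are assumptions, and review thoroughness is left out because it needs a qualitative measure.

```python
def evaluate_pilot(baseline: dict, pilot: dict) -> dict:
    """Check pilot results against the 30-day targets.

    Assumes the baseline values are non-zero.
    """
    cycle_reduction = 1 - pilot["median_cycle_hours"] / baseline["median_cycle_hours"]
    escape_reduction = 1 - pilot["critical_escapes"] / baseline["critical_escapes"]
    fp_rate = pilot["false_positives"] / pilot["findings"]
    return {
        "cycle_time": cycle_reduction >= 0.30,      # reduce PR cycle time by 30%+
        "escapes": escape_reduction >= 0.50,        # reduce critical escapes by 50%+
        "false_positive_rate": fp_rate < 0.10,      # keep false positives under 10%
    }

# Hypothetical measurements.
baseline = {"median_cycle_hours": 20, "critical_escapes": 4}
pilot = {"median_cycle_hours": 13, "critical_escapes": 2,
         "findings": 200, "false_positives": 14}

results = evaluate_pilot(baseline, pilot)
print(results)
```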

Phase 3: Configure Thresholds

Start with CodeAnt's default severity model, then tune:

Define org-specific rules:

Compliance: PCI-DSS handling, SOC2 logging, GDPR retention

Architecture: Required auth libraries, approved database patterns

Performance: Query complexity, payload size, rate limiting

Phase 4: Start Comment-Only Mode

Enable CodeAnt to comment without blocking merges. Build trust first:

Weeks 1-2: AI comments on all findings, developers provide feedback

Review feedback daily: Mark false positives, confirm true positives

Track engagement: Are developers reading and acting?

Phase 5: Gate Critical Findings

Once false positives drop below 10%, enable merge blocking for critical severity:

Security vulnerabilities: SQL injection, XSS, auth bypasses, exposed secrets

Compliance violations: Regulatory requirements

Breaking changes: API contract violations

Keep medium and low in comment-only mode.
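The gating policy in Phases 4 and 5 amounts to a small decision rule. The sketch below is a generic illustration, not CodeAnt configuration syntax; the severity labels and the finding dictionary shape are assumptions.

```python
BLOCKING_SEVERITIES = {"critical"}
FALSE_POSITIVE_THRESHOLD = 0.10  # gate only once false positives drop below 10%

def merge_decision(findings: list[dict], false_positive_rate: float) -> str:
    """Return "block" or "comment" for a PR under the phased rollout policy."""
    gating_enabled = false_positive_rate < FALSE_POSITIVE_THRESHOLD
    has_blocker = any(f["severity"] in BLOCKING_SEVERITIES for f in findings)
    if gating_enabled and has_blocker:
        return "block"
    # Medium and low findings, and everything before trust is built, stay comment-only.
    return "comment"

findings = [
    {"rule": "sql-injection", "severity": "critical"},
    {"rule": "long-function", "severity": "low"},
]

print(merge_decision(findings, false_positive_rate=0.25))  # comment
print(merge_decision(findings, false_positive_rate=0.06))  # block
```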

Measuring Success

After 30 days, review results. Typical CodeAnt pilots show:

40-60% reduction in PR review time

80%+ reduction in alert fatigue versus pattern-matching

95%+ catch rate on critical security issues

10-20% improvement in overall PR completion time

The Verdict: Context Beats Patterns Every Time

AI code review improves both quality and productivity when it understands your codebase deeply enough to reduce rework, not just flag issues. The difference between tools that slow teams down and those delivering 40-60% faster reviews comes down to codebase context over generic patterns, measuring outcomes instead of activity, and adopting through low-friction pilots that prove value.

Your Measurement Checklist

Run this on your next 20 PRs:

Baseline current state: Track review time, rework cycles, critical issues missed

Pilot context-aware AI: Choose 2-3 active repositories

Measure signal-to-noise: Count actionable findings vs. false positives

Track rework reduction: Monitor pre-merge vs. post-deployment issues

Survey your team: Are reviewers focusing on what matters?

Start Your Pilot This Week

Most teams see measurable impact within 10 days when piloting AI code review on repositories with clear quality standards and active PRs.

CodeAnt AI automatically reviews pull requests with full repository context, catches security vulnerabilities that pattern-matching tools miss, and enforces your team's specific standards, cutting review time while improving code health. Start your 14-day free trial to run the pilot framework on your repositories, or book a 1:1 with our team to tailor the evaluation to your specific codebase and quality goals.