
Dec 23, 2025

How to Enhance Code Review Effectiveness for Better Team Collaboration

Sonali Sood

Founding GTM, CodeAnt AI

Modern engineering organizations rarely work in isolated silos. Features cut across multiple services, backend teams collaborate with frontend teams, platform teams coordinate with product teams, and infrastructure changes affect every downstream consumer. In this environment, cross-team code reviews are unavoidable—and increasingly critical.

But cross-team reviews are also where friction is highest. Context gaps, mismatched priorities, and inconsistent standards slow PRs to a crawl. What should be a routine collaboration can easily become a source of delay, frustration, and strained relationships.

This guide shows how to turn cross-team code reviews into a collaboration advantage, not a bottleneck.

Why Cross-Team Code Reviews Create Bottlenecks

Cross-team reviews add complexity because reviewers are no longer operating within their familiar environment. They are required to evaluate code written in a domain, architecture, or service they may not know deeply. The lack of shared assumptions and context introduces friction that same-team reviews rarely face.

One major bottleneck is the lack of shared context

Reviewers from other teams may not fully understand architectural constraints, edge-case history, legacy decisions, or domain-specific logic. This creates hesitation, second-guessing, over-questioning, or surface-level reviews.

Another challenge is competing priorities

Each team is running its own sprint, roadmap, and deadlines. A PR coming from another team often slips in priority because reviewing it doesn’t directly contribute to their committed goals. This mismatch creates delays that compound over time.

Inconsistent standards also slow down reviews

If two teams follow different style guides, different definitions of “clean code,” or different testing expectations, then the same PR may be interpreted entirely differently depending on who reviews it.

Cross-team communication increases the time to completion

Async clarification, questions, misunderstandings, and missing details create long back-and-forth loops. What should take hours can stretch into days simply because reviewers need more context to give meaningful feedback.

How to Build a Structured Cross-Team Review Process

Cross-team review friction almost always comes from unclear structure. Before optimizing speed or tooling, teams need to define how the process should work and who is responsible for what.

Define Clear Roles and Responsibilities

Roles create clarity. Clarity creates speed.

The code owner is the ultimate authority for merge decisions related to their domain. Their approval ensures domain correctness and architectural alignment.

The primary reviewer provides technical depth—logic validation, maintainability concerns, performance evaluations, and design alignment.

A secondary reviewer adds cross-functional perspective, ensuring integrations align with shared standards, contracts, and architectural expectations.

When each PR clearly indicates these roles, ambiguity disappears and review queues move faster.

Keep Pull Requests Small and Focused

Cross-team reviews require reviewers to ramp into unfamiliar code quickly. Small PRs significantly reduce cognitive load. A small, focused PR touching only a few files can be understood by someone outside the team far more easily than a large, multi-feature diff.

The best cross-team PRs tackle one bug fix, one change, or one feature slice at a time. Anything large should be broken down into a series of incremental steps to reduce review overhead.
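One way to keep PRs small is a size check that runs in CI and warns when a change exceeds an agreed budget. The sketch below assumes you can obtain per-file addition and deletion counts from your Git host. The `FileChange` type, the `size_warnings` function, and the 400-line / 10-file limits are all hypothetical choices for illustration, not an established standard.

```python
from dataclasses import dataclass

# Hypothetical size budget; tune these limits to your organization.
MAX_CHANGED_LINES = 400
MAX_CHANGED_FILES = 10


@dataclass
class FileChange:
    path: str
    additions: int
    deletions: int


def size_warnings(changes: list[FileChange],
                  max_lines: int = MAX_CHANGED_LINES,
                  max_files: int = MAX_CHANGED_FILES) -> list[str]:
    """Return human-readable warnings when a PR exceeds the size budget."""
    warnings = []
    total_lines = sum(c.additions + c.deletions for c in changes)
    if total_lines > max_lines:
        warnings.append(f"{total_lines} changed lines exceeds limit of {max_lines}")
    if len(changes) > max_files:
        warnings.append(f"{len(changes)} changed files exceeds limit of {max_files}")
    return warnings


if __name__ == "__main__":
    pr = [FileChange("api/handlers.py", 120, 30),
          FileChange("api/tests/test_handlers.py", 80, 0)]
    print(size_warnings(pr) or "PR is within the size budget")  # PR is within the size budget
```

Treating the result as a warning rather than a hard failure leaves room for legitimately large changes such as generated code or vendored dependencies.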

Use Checklists for Review Consistency

Checklists ensure every reviewer, on every team, evaluates the PR against the same criteria. This reduces the subjective variation that arises when each team applies its own review heuristics.

A good checklist ensures the reviewer verifies alignment with the organization’s style guide, tests cover new logic, secrets aren’t exposed, and documentation is updated when public APIs change.

Checklists reduce friction, increase consistency, and accelerate cross-team collaboration.
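If the checklist lives in the PR template as task-list items, a small script can report which items were left unticked. This is a minimal sketch assuming GitHub-style `- [x]` checkboxes in the PR body; the `REQUIRED_ITEMS` wording and the `unchecked_items` helper are hypothetical and simply mirror the criteria listed above.

```python
import re

# Matches task-list items such as "- [x] Tests cover new logic".
CHECKBOX = re.compile(r"^\s*[-*]\s+\[( |x|X)\]\s+(.+?)\s*$", re.MULTILINE)

# Hypothetical org-wide checklist mirroring the criteria described in the text.
REQUIRED_ITEMS = [
    "Follows the organization style guide",
    "Tests cover new logic",
    "No secrets exposed",
    "Docs updated for public API changes",
]


def unchecked_items(pr_body: str, required: list[str] = REQUIRED_ITEMS) -> list[str]:
    """Return required checklist items that are missing or left unticked."""
    ticked = {text for mark, text in CHECKBOX.findall(pr_body) if mark.lower() == "x"}
    return [item for item in required if item not in ticked]


body = """## Checklist
- [x] Follows the organization style guide
- [x] Tests cover new logic
- [ ] No secrets exposed
"""
print(unchecked_items(body))  # ['No secrets exposed', 'Docs updated for public API changes']
```

Exact string matching is deliberately strict: it only works if every team uses the same shared template, which is the consistency the checklist is meant to enforce.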

Set Time Limits for Review Turnaround

When multiple teams rely on each other, review latency compounds. Setting clear SLAs—such as initial response within one business day—ensures no PR gets stuck indefinitely.

Strong SLAs create shared expectations, mutual accountability, and predictable delivery.
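A "one business day" SLA needs a precise definition of when it is breached. The sketch below assumes weekends are non-working days and ignores public holidays; `add_business_days` and `sla_breached` are hypothetical helpers, not part of any particular tool.

```python
from datetime import datetime, timedelta
from typing import Optional


def add_business_days(start: datetime, days: int) -> datetime:
    """Advance `start` by `days` business days, skipping Saturdays and Sundays.

    Public holidays are ignored in this sketch.
    """
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday=0 ... Friday=4
            remaining -= 1
    return current


def sla_breached(opened_at: datetime,
                 first_response_at: Optional[datetime],
                 now: datetime,
                 sla_business_days: int = 1) -> bool:
    """True if the first review response came after the deadline,
    or is still missing once the deadline has passed."""
    deadline = add_business_days(opened_at, sla_business_days)
    checkpoint = first_response_at if first_response_at is not None else now
    return checkpoint > deadline


# A PR opened Friday evening is due for a first response by Monday evening.
opened = datetime(2025, 12, 19, 17, 0)
print(add_business_days(opened, 1))                                   # 2025-12-22 17:00:00
print(sla_breached(opened, None, now=datetime(2025, 12, 23, 9, 0)))   # True
```

Measuring against business days rather than wall-clock hours avoids penalizing reviewers for PRs opened just before a weekend.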

Cross-Team Code Review Guidelines and Agreements

Documentation is essential for collaboration. Without shared agreements, cross-team reviews devolve into friction, preference battles, and inconsistent decisions.

Align on a Shared Definition of Done

Cross-team reviews fail when teams operate with different definitions of what “done” means. A shared DoD ensures that everyone evaluates PR completeness using the same checklist.

A strong cross-team DoD includes passing tests, clean static analysis results, security scans free of critical findings, and at least one code owner approval. With a shared DoD, disputes about readiness decrease dramatically.
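A shared DoD is easiest to apply consistently when it is encoded as a single check every team runs. This is a minimal sketch assuming the PR's CI and review status has already been collected into a `PullRequestStatus` record; that type, the `dod_failures` function, and the reading of "clean static analysis" as zero open issues are all assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequestStatus:
    tests_passed: bool
    static_analysis_issues: int        # open findings from the static analyzer
    critical_security_findings: int    # critical-severity findings from security scans
    approvers: set[str] = field(default_factory=set)


def dod_failures(pr: PullRequestStatus, code_owners: set[str]) -> list[str]:
    """Return the Definition-of-Done criteria this PR does not yet meet."""
    failures = []
    if not pr.tests_passed:
        failures.append("tests are failing")
    if pr.static_analysis_issues > 0:
        failures.append(f"{pr.static_analysis_issues} static analysis issue(s) open")
    if pr.critical_security_findings > 0:
        failures.append(f"{pr.critical_security_findings} critical security finding(s)")
    if not pr.approvers & code_owners:  # at least one approver must be a code owner
        failures.append("no code owner approval")
    return failures


ready = PullRequestStatus(True, 0, 0, {"alice"})
print(dod_failures(ready, code_owners={"alice", "bob"}))  # []
```

Returning the list of unmet criteria, rather than a bare boolean, tells the author exactly what remains before the PR counts as done.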

Establish Organization-Wide Coding Standards

Unified standards prevent arguments that stem from stylistic preferences rather than correctness. Linter configurations and formatters ensure consistency across teams, freeing reviewers to focus on deeper concerns such as logic, architecture, and risk.

Document Escalation Paths for Disputes

Even well-structured teams will occasionally disagree. Without a clear escalation path, disagreements turn into delays or strained relationships. A documented ladder—such as escalating from reviewer → tech lead → engineering manager → architecture council—allows teams to resolve disputes respectfully and consistently.
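An escalation ladder can also be written down as data so that tooling and people read the same rules. The sketch below uses the ladder from the text and assumes a hypothetical two-day time box per rung; `escalation_owner` is an illustrative helper, and time is only one possible trigger for escalation.

```python
from datetime import timedelta

# Ladder taken from the text; the two-day time box per rung is a hypothetical choice.
ESCALATION_LADDER = ("reviewer", "tech lead", "engineering manager", "architecture council")
TIME_BOX = timedelta(days=2)


def escalation_owner(unresolved_for: timedelta,
                     ladder: tuple[str, ...] = ESCALATION_LADDER,
                     time_box: timedelta = TIME_BOX) -> str:
    """Return who should own a dispute that has been open for `unresolved_for`.

    Each full time box without resolution moves the dispute one rung up;
    it stops at the top rung.
    """
    level = int(unresolved_for / time_box)  # timedelta / timedelta yields a float
    return ladder[min(level, len(ladder) - 1)]


print(escalation_owner(timedelta(hours=5)))  # reviewer
print(escalation_owner(timedelta(days=3)))   # tech lead
```

Since the text asks teams to escalate only after good-faith attempts, some organizations may prefer to trigger escalation on the number of unresolved discussion rounds rather than elapsed time.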

Communication Patterns That Improve Cross-Team Reviews

Cross-team reviews succeed or fail based on communication quality. Misunderstandings, more often than problems in the code itself, are what slow things down.

Write Effective Pull Request Descriptions

A strong PR description removes much of the unnecessary back-and-forth. Cross-team reviewers cannot rely on tribal knowledge, so PRs must describe context clearly.

A clear description explains what changed, why it changed, how it was implemented, and how the behavior was tested. When the PR is self-contained, reviewers need fewer clarifications and respond faster.
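A PR template with one heading per question makes this expectation checkable. The sketch below assumes the template uses level-two Markdown headings named `What`, `Why`, `How`, and `Testing`; those names and the `missing_sections` helper are hypothetical.

```python
import re

# Hypothetical template headings matching the four questions in the text.
REQUIRED_SECTIONS = ("What", "Why", "How", "Testing")


def missing_sections(description: str,
                     required: tuple[str, ...] = REQUIRED_SECTIONS) -> list[str]:
    """Return required headings that are absent or have no body text."""
    missing = []
    for name in required:
        # Capture everything after "## <name>" up to the next "## " heading or end of text.
        match = re.search(rf"^##\s+{re.escape(name)}\s*$(.*?)(?=^##\s|\Z)",
                          description, re.MULTILINE | re.DOTALL)
        if match is None or not match.group(1).strip():
            missing.append(name)
    return missing


desc = ("## What\nAdds retry logic.\n\n## Why\nUpstream is flaky.\n\n"
        "## How\n\n## Testing\nUnit tests added.\n")
print(missing_sections(desc))  # ['How']
```

Flagging empty sections, not only absent ones, catches the common case where a template is left in place but never filled in.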

Use Standardized Comment Templates

Labeling comments reduces misinterpretation. When reviewers clarify intent—whether blocking, suggestion, or question—authors know exactly how to prioritize responses.

Clear labeling reduces emotional friction, accelerates decision-making, and sets expectations for merge readiness.
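Labels are most useful when tooling can read them, for example to count what still blocks a merge. This sketch assumes a simple `label:` prefix convention (similar in spirit to the Conventional Comments format, but not identical to it); `label_of` and `merge_blockers` are hypothetical helpers.

```python
import re
from collections import Counter

# Assumed convention: a comment starts with "blocking:", "suggestion:", or "question:".
LABEL_PREFIX = re.compile(r"^\s*(blocking|suggestion|question)\s*:", re.IGNORECASE)


def label_of(comment: str) -> str:
    """Return the comment's label, or 'unlabeled' if no known prefix is present."""
    match = LABEL_PREFIX.match(comment)
    return match.group(1).lower() if match else "unlabeled"


def merge_blockers(comments: list[str]) -> int:
    """Count comments that must be resolved before merge."""
    return Counter(label_of(c) for c in comments)["blocking"]


comments = [
    "blocking: this path skips the auth check",
    "suggestion: extract this into a helper",
    "question: why is the timeout 30s?",
]
print(merge_blockers(comments))  # 1
```

Surfacing unlabeled comments separately can also prompt reviewers to state their intent explicitly.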

Adopt Asynchronous Review Protocols for Distributed Teams

Teams spread across time zones benefit from async review cultures. Video walkthroughs, Loom recordings, screenshots, and detailed PR write-ups help reviewers understand the context without requiring synchronous discussions.

These async-first practices turn time zone differences into an advantage rather than an obstacle.

How to Handle Disagreements and Escalations Across Teams

Cross-team disagreements are inevitable—but they don’t need to become conflicts.

One effective approach is the “pause, pivot, positive” method. Pause to avoid emotional responses; pivot to the shared objective, such as system reliability or performance; and frame feedback positively to keep the discussion collaborative.

Teams should restate each other’s concerns to confirm understanding, keep discussions focused on code behavior rather than personal preferences, reference documented standards, and escalate only after good-faith attempts to resolve concerns.

Written guidelines keep individual disagreements from defining the relationship between teams.

How Automation and AI Tools Streamline Cross-Team Code Reviews

Cross-team reviews benefit significantly from automation because automation removes ambiguity, enforces consistency, and speeds up early-stage validation.

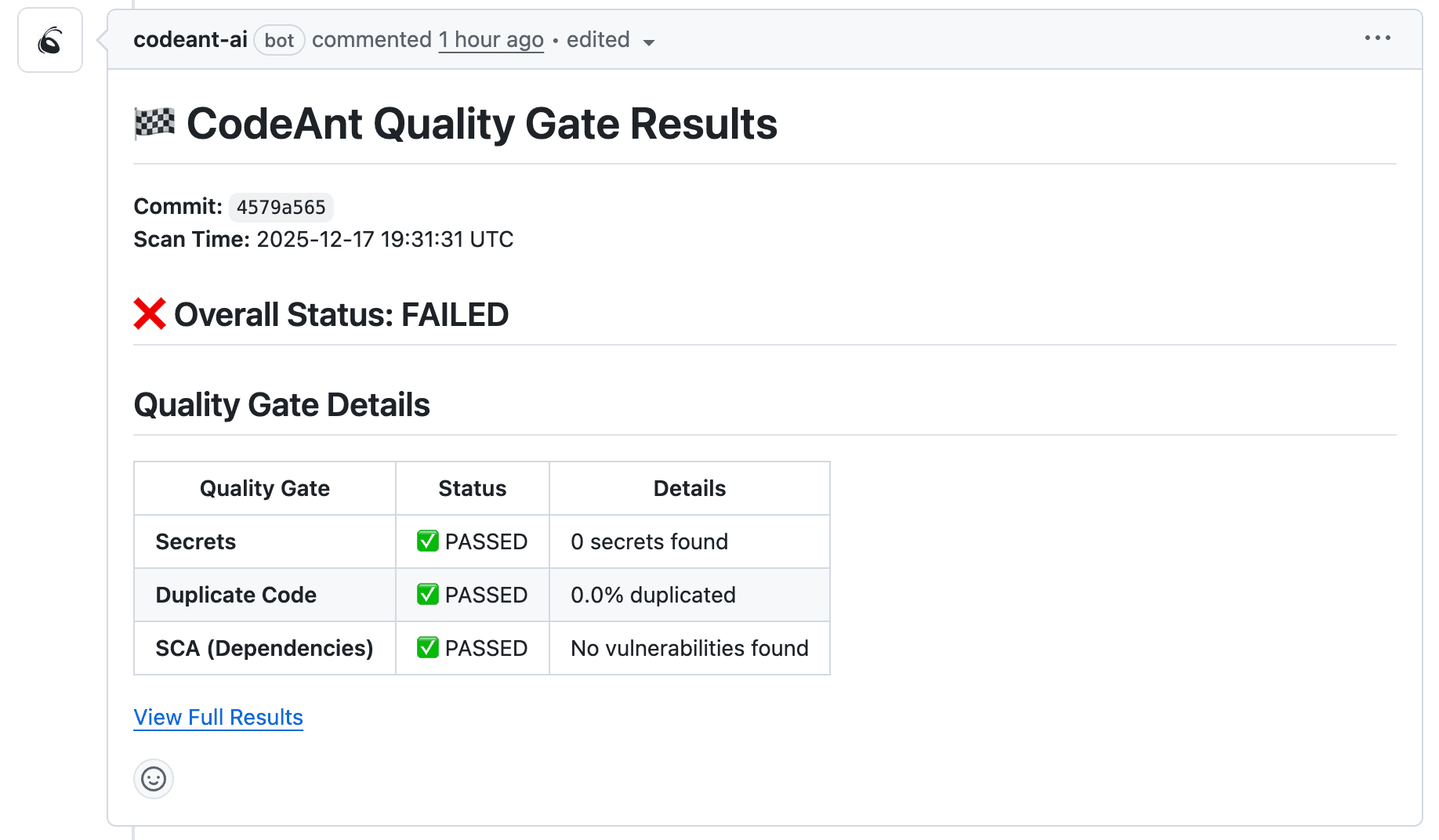

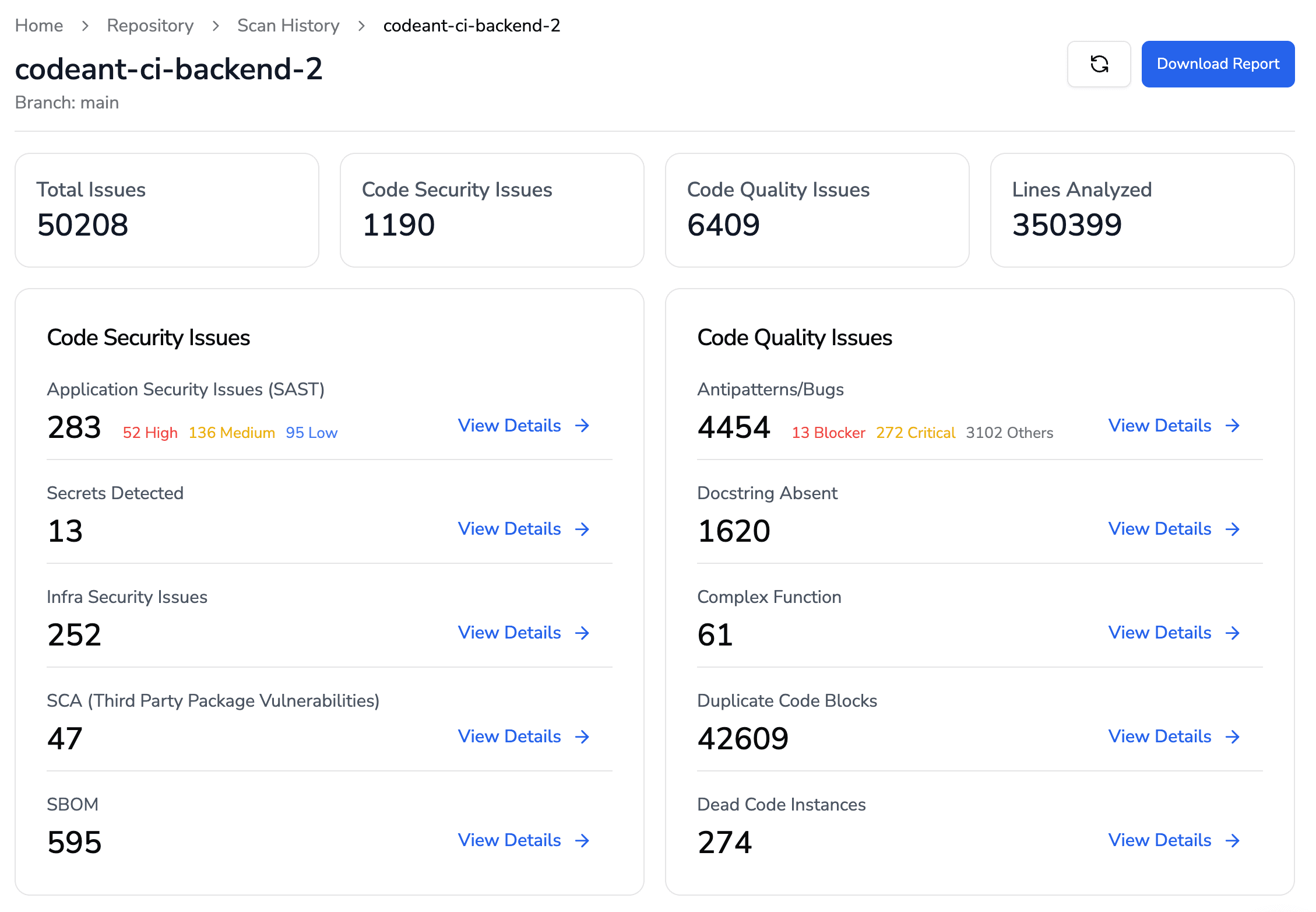

Automated Quality Gates and Static Analysis

Static analysis detects code smells, complexity hotspots, unused logic, and unsafe patterns before humans touch the PR.

Quality gates ensure no PR proceeds without meeting baseline standards.

CodeAnt AI centralizes these rules across all teams, enforcing organization-wide consistency and removing friction caused by stylistic or subjective debate.
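To make the idea of a quality gate concrete, here is a minimal complexity gate for Python sources built on the standard `ast` module. It approximates McCabe complexity by counting decision points, which is simpler than what production analyzers do. The threshold of 10 and the `quality_gate` and `complexity` helpers are assumptions for illustration, not how any particular platform implements its rules.

```python
import ast

# Node types treated as decision points in this approximation.
BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp, ast.ExceptHandler)


def complexity(func: ast.AST) -> int:
    """Approximate McCabe complexity: 1 + decision points inside the node.

    Nested functions are also counted toward their enclosing function.
    """
    score = 1
    for node in ast.walk(func):
        if isinstance(node, BRANCH_NODES):
            score += 1
        elif isinstance(node, ast.BoolOp):  # each extra `and`/`or` operand is a branch
            score += len(node.values) - 1
    return score


def quality_gate(source: str, max_complexity: int = 10) -> list[str]:
    """Return one violation per function whose complexity exceeds the threshold."""
    violations = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = complexity(node)
            if score > max_complexity:
                violations.append(
                    f"{node.name} (line {node.lineno}): complexity {score} > {max_complexity}")
    return violations


sample = "def f(x):\n    if x and x > 1:\n        return 1\n    return 0\n"
print(quality_gate(sample, max_complexity=2))  # ['f (line 1): complexity 3 > 2']
```

In CI, a non-empty result would fail the check, so every team's PRs meet the same baseline before a human reviewer looks at them.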

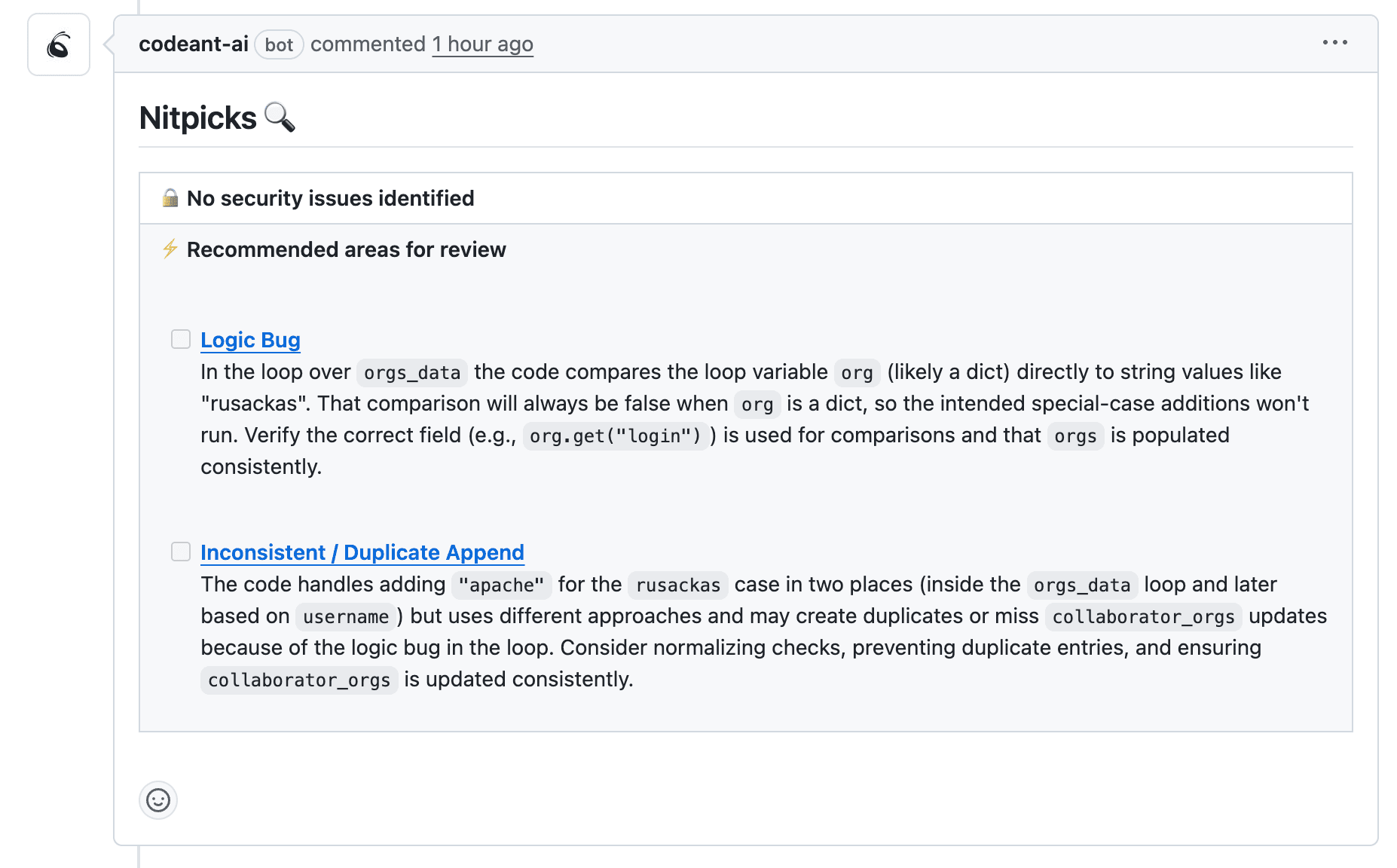

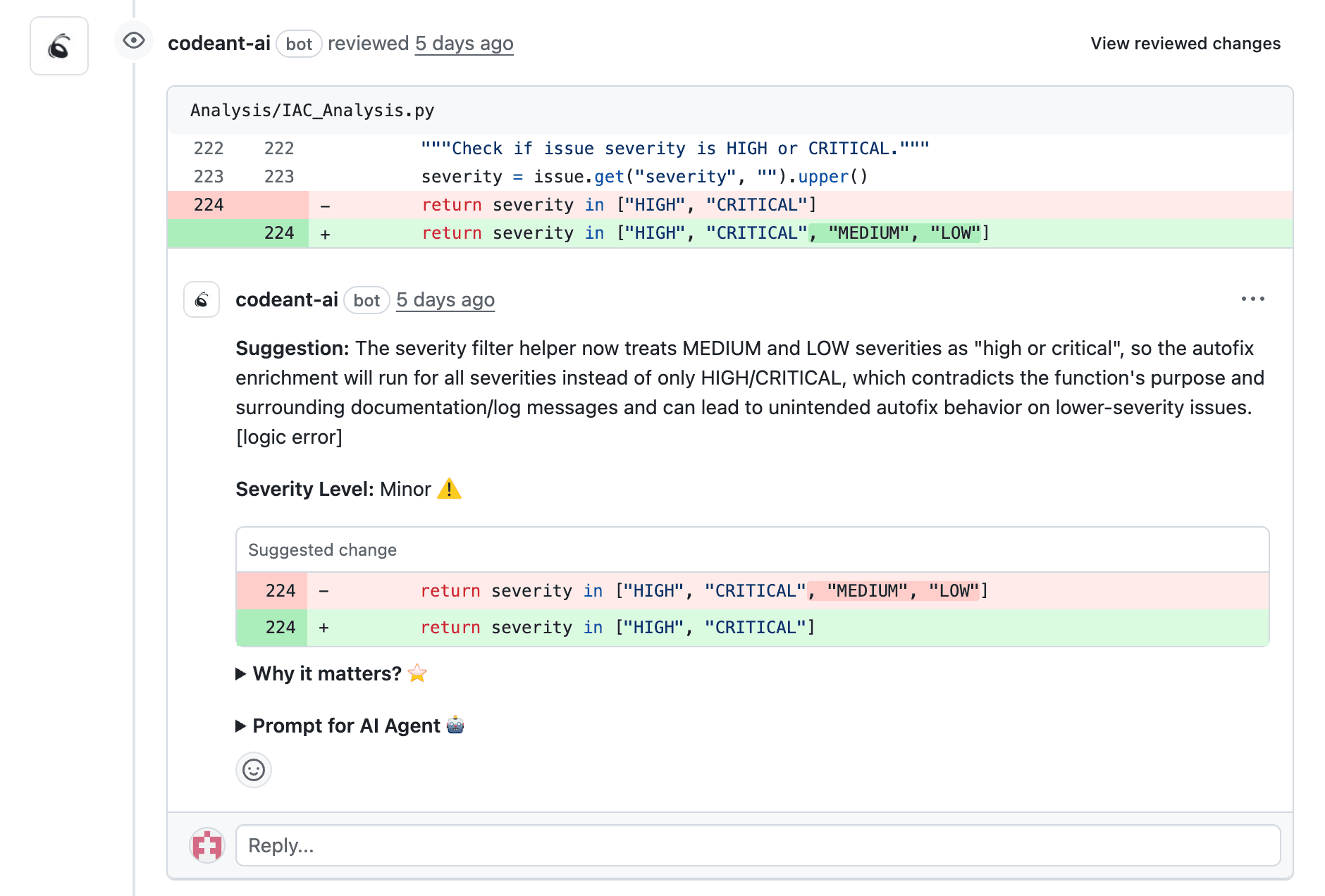

AI-Powered Code Review Suggestions

AI tools can substantially reduce review time by performing a first-pass evaluation. They provide summaries, identify risk areas, detect logic flaws, and propose suggested fixes. Human reviewers can then focus on architecture, domain correctness, and integration concerns.

CodeAnt AI’s multi-language, context-aware AI reviewer is especially effective in cross-team scenarios where domain gaps are wide.

Security and Compliance Scanning

Security scanning must be consistent across teams. Automated tools detect secrets, vulnerabilities, dependency issues, and misconfigurations long before code owner review.

Platforms like CodeAnt AI unify SAST and compliance across all services, ensuring teams follow the same security standards regardless of tech stack or domain.
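Secret detection is the simplest of these scans to illustrate. The sketch below checks only the added lines of a unified diff against three well-known secret shapes; real scanners ship far larger, tuned rule sets with entropy checks. The rule names, the generic-assignment pattern, and `scan_added_lines` are hypothetical.

```python
import re

# A few illustrative secret shapes; not an exhaustive or production rule set.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "Private key block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "Generic API key assignment": re.compile(
        r"""(?i)\b(?:api[_-]?key|secret|token)\s*[:=]\s*['"][^'"]{16,}['"]"""),
}


def scan_added_lines(diff: str) -> list[tuple[int, str]]:
    """Scan '+' lines of a unified diff; return (diff line number, rule name) hits."""
    findings = []
    for lineno, line in enumerate(diff.splitlines(), start=1):
        if not line.startswith("+") or line.startswith("+++"):
            continue  # only newly added content matters; skip file headers
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


diff = "+++ b/config.py\n+AWS_KEY = 'AKIAIOSFODNN7EXAMPLE'\n-old = 1\n+debug = True\n"
print(scan_added_lines(diff))  # [(2, 'AWS access key ID')]
```

Scanning only added lines keeps the check fast and focused on what the PR introduces, though secrets already in history need a separate full-repository scan.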

Comparison Table:

| Capability | Manual Review | Automated / AI Review |
|---|---|---|
| Style and formatting | Inconsistent | Consistent enforcement |
| Security vulnerabilities | Easy to miss | Known patterns detected automatically |
| Review turnaround | Hours to days | Near-instant first pass |
| Scalability | Limited by reviewer headcount | Not limited by headcount |

Metrics to Measure Cross-Team Code Review Effectiveness

Cross-team improvement requires data. Without metrics, teams rely on anecdotal observations rather than actionable insights.

Review Cycle Time and Turnaround

Long cycle times indicate bottlenecks in collaboration, unclear ownership, or prioritization issues. This metric is often the clearest single indicator of cross-team inefficiency.
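Cycle time is usually split into time to first review and time to merge, because the two point to different problems (attention versus agreement). This minimal sketch assumes you have exported PR timestamps from your Git host into a hypothetical `PullRequest` record; medians are used because review times tend to be skewed by a few very slow PRs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class PullRequest:
    opened_at: datetime
    first_review_at: Optional[datetime]
    merged_at: Optional[datetime]


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def review_metrics(prs: list[PullRequest]) -> dict[str, float]:
    """Median hours to first review and to merge, over PRs that reached each stage."""
    to_first_review = [hours(p.first_review_at - p.opened_at) for p in prs if p.first_review_at]
    to_merge = [hours(p.merged_at - p.opened_at) for p in prs if p.merged_at]
    return {
        "median_hours_to_first_review": median(to_first_review) if to_first_review else float("nan"),
        "median_hours_to_merge": median(to_merge) if to_merge else float("nan"),
    }


t0 = datetime(2025, 12, 1, 9)
prs = [
    PullRequest(t0, datetime(2025, 12, 1, 11), datetime(2025, 12, 2, 9)),
    PullRequest(t0, datetime(2025, 12, 1, 13), datetime(2025, 12, 3, 9)),
    PullRequest(t0, None, None),  # still waiting for review
]
print(review_metrics(prs))
```

Note that PRs still waiting for review are excluded from the medians, so stuck PRs should be tracked separately, for example as a count of PRs past the review SLA.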

Rework Rate and Defect Density

High rework suggests misalignment: unclear requirements, inconsistent expectations, or varying definitions of acceptable quality. Defect density post-merge reveals the quality of cross-team review decisions.
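Both metrics need an agreed definition before they can be compared across teams. This sketch assumes one possible pair of definitions: rework rate as the share of merged PRs that needed more than one round of requested changes, and defect density as post-merge defects per 1,000 changed lines. The `MergedPR` record and both functions are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MergedPR:
    changed_lines: int
    change_request_rounds: int  # number of "changes requested" review rounds
    post_merge_defects: int     # bugs later traced back to this PR


def rework_rate(prs: list[MergedPR], threshold: int = 1) -> float:
    """Share of PRs that needed more than `threshold` rounds of requested changes."""
    if not prs:
        return 0.0
    return sum(p.change_request_rounds > threshold for p in prs) / len(prs)


def defect_density(prs: list[MergedPR]) -> float:
    """Post-merge defects per 1,000 changed lines."""
    total_lines = sum(p.changed_lines for p in prs)
    if total_lines == 0:
        return 0.0
    return sum(p.post_merge_defects for p in prs) / (total_lines / 1000)


prs = [MergedPR(500, 0, 1), MergedPR(1500, 2, 1), MergedPR(2000, 3, 0)]
print(rework_rate(prs), defect_density(prs))
```

Comparing these numbers for same-team and cross-team PRs is one way to see whether cross-team reviews are the source of misalignment.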

DORA Metrics and Developer Productivity

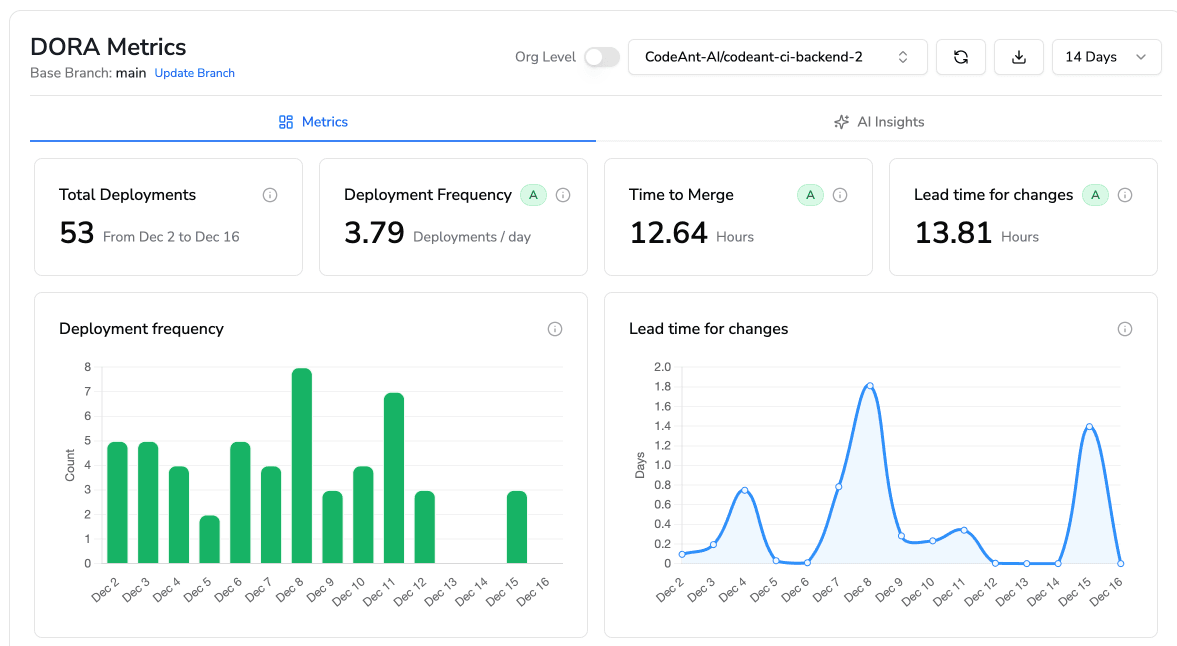

DORA metrics—deployment frequency, lead time, change failure rate, and MTTR—connect code review efficiency directly to delivery performance. CodeAnt AI provides these metrics in a unified dashboard across repositories.
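The four DORA metrics can be approximated from deployment records. This minimal sketch assumes a hypothetical `Deployment` record listing the commit times each deployment shipped, plus a failure flag and restore time. As a simplification, time to restore is measured from the failing deployment rather than from incident detection, which is an assumption rather than the official definition.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    deployed_at: datetime
    commit_times: list[datetime]            # when each shipped change was committed
    failed: bool = False
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict[str, float]:
    """Approximate the four DORA metrics over a window of `window_days` days."""
    lead_times = [(d.deployed_at - c).total_seconds() / 3600
                  for d in deploys for c in d.commit_times]
    failures = [d for d in deploys if d.failed]
    restore_times = [(d.restored_at - d.deployed_at).total_seconds() / 3600
                     for d in failures if d.restored_at]
    return {
        "deployments_per_day": len(deploys) / window_days,
        "median_lead_time_hours": median(lead_times) if lead_times else float("nan"),
        "change_failure_rate": len(failures) / len(deploys) if deploys else 0.0,
        "mean_time_to_restore_hours": (sum(restore_times) / len(restore_times)
                                       if restore_times else float("nan")),
    }


base = datetime(2025, 12, 1, 12)
deploys = [
    Deployment(base, [base - timedelta(hours=4), base - timedelta(hours=2)]),
    Deployment(base + timedelta(days=1), [base + timedelta(hours=18)],
               failed=True, restored_at=base + timedelta(days=1, hours=1)),
]
print(dora_metrics(deploys, window_days=2))
```

Lead time here starts at commit, so long review waits show up directly in it, which is how review efficiency connects to delivery performance.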

Building a Culture of Collaborative Code Reviews

Culture is the ultimate multiplier. Tooling, SLAs, and checklists matter—but they cannot replace strong collaboration habits.

Onboard New Team Members on Review Practices

New engineers must understand the organization’s review expectations early. Pairing new reviewers with experienced ones builds shared intuition and reduces friction.

Make Code Reviews a Learning Opportunity

Cross-team reviews expose engineers to design patterns and architectures beyond their silo. Teams that treat reviews as learning channels create stronger shared ownership and reduce the bus factor.

Recognize and Reward Cross-Team Collaboration

Celebrate engineers who consistently contribute to cross-team review quality. Recognition reinforces behaviors that elevate the entire engineering org.

Turn Cross-Team Code Reviews into a Competitive Advantage

When done well, cross-team code reviews accelerate collaboration, reduce defects, and strengthen engineering culture. They transform isolated teams into a unified engineering organization.

A platform like CodeAnt AI streamlines this end-to-end by providing AI-powered review, static analysis, security scanning, and unified metrics—removing friction across every team.

Ready to streamline cross-team code reviews? Try our 14-day free trial, and book a free session with our experts to learn how we can help you further.