
Dec 21, 2025

How Development Teams Can Adopt AI-Assisted Code Review Workflows

Sonali Sood

Founding GTM, CodeAnt AI

Code reviews aren’t going away; they’re the backbone of engineering quality. But they are changing. With rising PR throughput, more complex architectures, distributed teams, and tighter release cycles, a human-only review model struggles to scale. That’s where AI-assisted code review workflows come in.

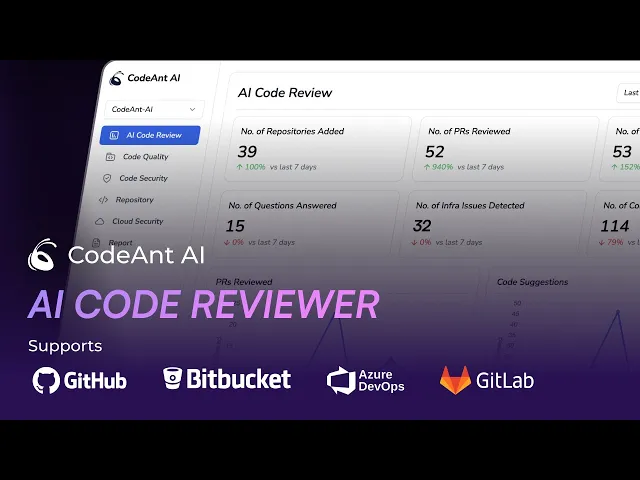

AI is not replacing reviewers; it’s eliminating the repetitive, mechanical parts of review so engineers can focus on logic, architecture, and business impact. This guide explains what AI-assisted code review workflows are, why teams adopt them, how they work technically, and how to integrate them across GitHub, GitLab, Azure DevOps, and Bitbucket.

What Are AI-Assisted Code Review Workflows?

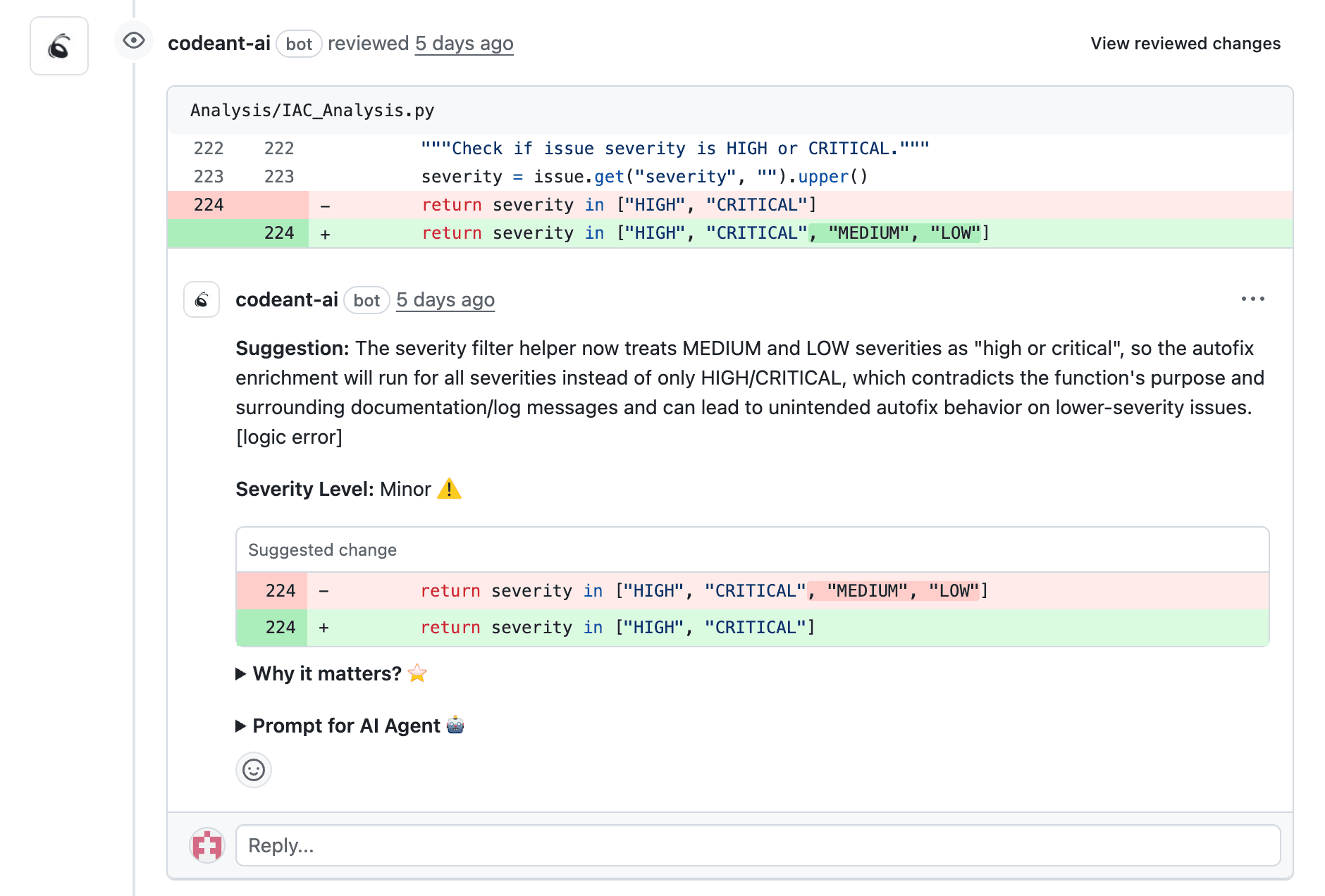

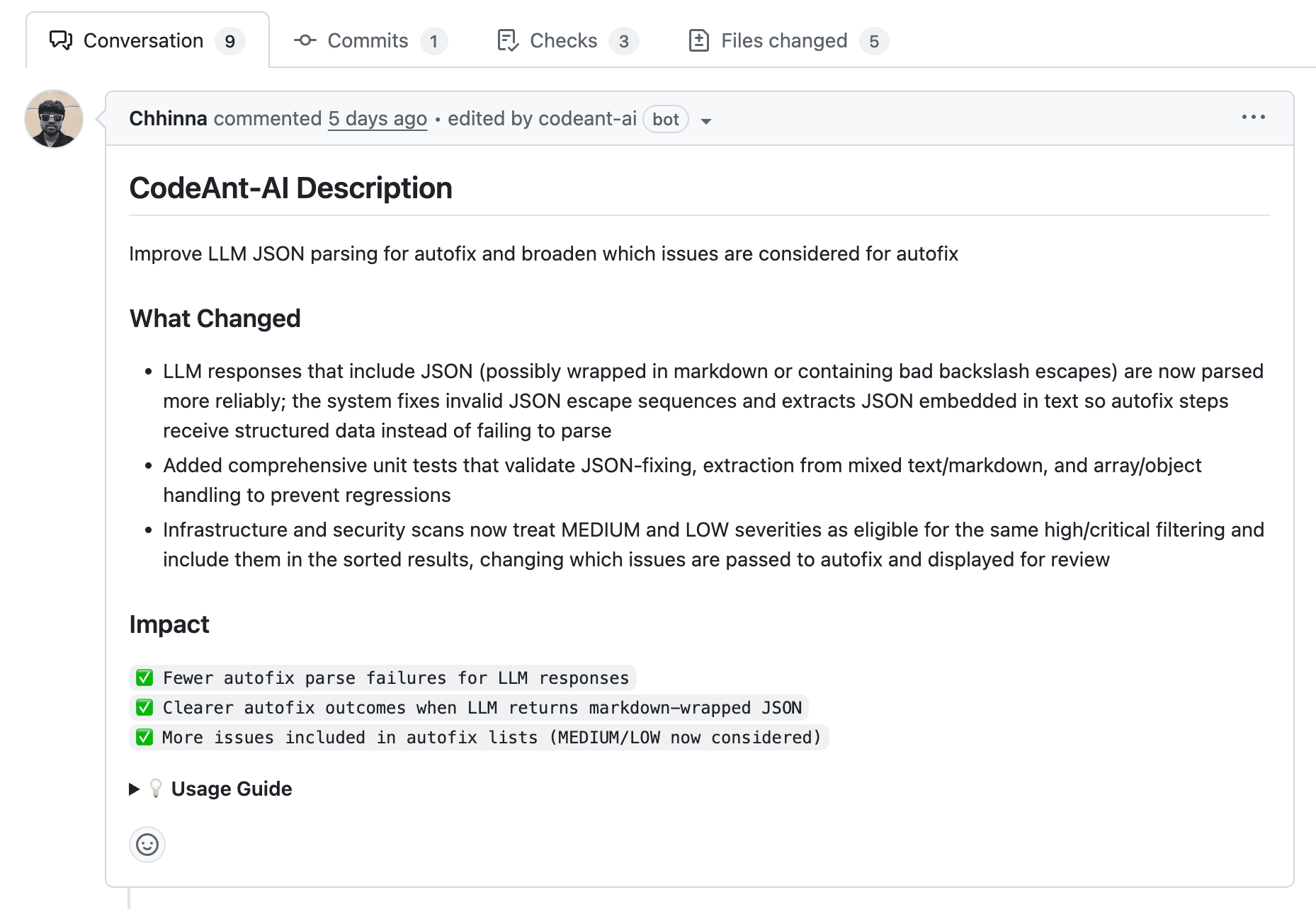

AI-assisted code review workflows use machine learning models, static analysis engines, and security scanners to evaluate pull requests automatically as soon as they are opened. Instead of waiting for a human to notice problems, the AI flags issues proactively and posts inline suggestions directly in the PR view.

These workflows plug directly into GitHub, GitLab, Azure DevOps, and Bitbucket so teams don't need to change how they work. They simply get smarter, faster feedback with every PR.

Typical capabilities include:

Automated PR analysis: AI scans diffs as soon as a pull request opens

Inline suggestions: Comments appear on specific problematic lines

Security + quality checks: Vulnerabilities, code smells, and complexity flagged automatically

Human review handoff: Developers validate or dismiss AI findings before merge

AI becomes the first reviewer; humans remain the final reviewer.

Why Development Teams Need AI-Powered Code Reviews

Slow Review Cycles Create Bottlenecks

Growing teams see PRs sitting idle for hours or days. Authors lose context, reviewers become overloaded, and release cycles slow down. Most delays aren’t technical—they’re bandwidth and attention issues.

Inconsistent Quality Across Reviewers

One reviewer flags security issues, another focuses on naming, and another looks only at logic. AI reduces this inconsistency by applying the same checks to every PR.

Security Vulnerabilities Slip Through Manual Review

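Even experienced reviewers miss hardcoded secrets, risky dependencies, and OWASP-pattern vulnerabilities, especially when reviewing under time pressure. AI scanners check every PR for these patterns automatically, without depending on how much attention a reviewer has that day.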

Difficulty Scaling as Teams Grow

As engineering orgs expand, senior reviewers become bottlenecks. AI absorbs the repetitive checks so senior engineers can focus on architectural and high-risk areas.

How AI Code Review Tools Work

AI-assisted code review looks simple from the outside (“comments magically appear”), but under the hood, the pipeline is structured and deliberate.

1. Code Parsing and Context Extraction

AI parses diffs, identifies languages, understands file types, and loads relevant code context to avoid shallow pattern matching.
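To make the parsing step concrete, here is a minimal Python sketch that reads a unified diff (the format `git diff` emits) and records which lines a PR adds, keyed by their line numbers in the new version of each file. Those added lines are what later stages analyze and comment on. The helper name `added_lines` is hypothetical, and the parser is deliberately simplified: it ignores renames, binary files, and any context loading beyond the diff itself.

```python
import re

# Matches a unified-diff hunk header such as "@@ -10,7 +10,8 @@" and captures
# the starting line number in the new file.
HUNK_HEADER = re.compile(r"^@@ -\d+(?:,\d+)? \+(\d+)(?:,\d+)? @@")


def added_lines(diff_text: str) -> dict[str, list[tuple[int, str]]]:
    """Map each file in a unified diff to its added lines as (new_line_number, text)."""
    result: dict[str, list[tuple[int, str]]] = {}
    current_file = None
    new_line = 0
    for raw in diff_text.splitlines():
        if raw.startswith("+++ "):
            # "+++ b/path/to/file" names the post-change file; drop the "b/" prefix.
            path = raw[4:]
            current_file = path[2:] if path.startswith("b/") else path
            result.setdefault(current_file, [])
            continue
        match = HUNK_HEADER.match(raw)
        if match:
            new_line = int(match.group(1))
            continue
        if current_file is None or raw.startswith("--- "):
            continue  # file headers and "diff --git" preamble
        if raw.startswith("+"):
            result[current_file].append((new_line, raw[1:]))
            new_line += 1
        elif raw.startswith(" "):
            new_line += 1  # context line: present in both versions
        # "-" lines exist only in the old file, so they don't advance new_line.
    return result
```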

2. Pattern Recognition and Static Analysis

ML models and static analyzers detect:

logic bugs

unreachable code

anti-patterns

dangerous coding constructs

potential regressions
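As an illustration of the static-analysis side (the ML side is not shown), the sketch below uses Python's standard `ast` module to flag one item from the list, unreachable code: any statement that follows a `return`, `raise`, `break`, or `continue` in the same block. `unreachable_statements` is a hypothetical helper and only understands Python source.

```python
import ast

# Statements after which nothing else in the same block can run.
TERMINATORS = (ast.Return, ast.Raise, ast.Break, ast.Continue)


def unreachable_statements(source: str) -> list[int]:
    """Return the line numbers of statements that can never execute."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Statement lists live in these fields (if/else, loops, try/finally, defs).
        for field in ("body", "orelse", "finalbody"):
            block = getattr(node, field, None)
            if not isinstance(block, list):
                continue  # e.g. IfExp/Lambda hold a single expression, not a list
            for i, stmt in enumerate(block[:-1]):
                if isinstance(stmt, TERMINATORS):
                    # Report the first dead statement once per block.
                    findings.append(block[i + 1].lineno)
                    break
    return sorted(findings)
```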

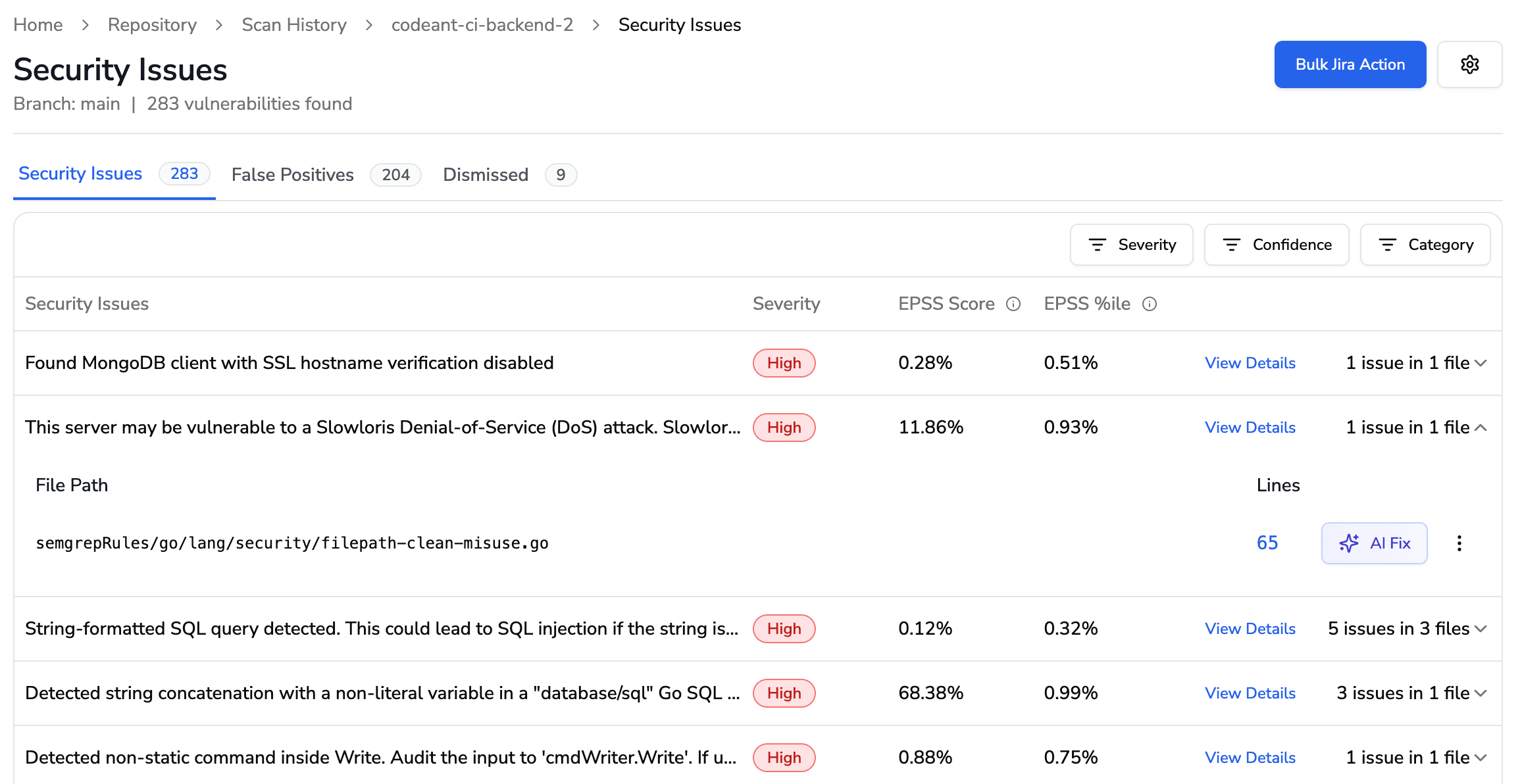

3. Security and Vulnerability Scanning

This stage delivers much of the security value. The AI performs:

hardcoded secret detection

dependency CVE checks

SAST scanning for injection vectors (SQLi, XSS, command injection)

insecure API usage detection
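A heavily simplified sketch of hardcoded-secret detection follows. `AKIA` followed by 16 uppercase letters or digits is the well-known shape of an AWS access key ID; the generic credential pattern and the `find_secrets` helper are illustrative assumptions. Production scanners typically combine much larger rule sets with entropy checks and secret verification to keep false positives down.

```python
import re

# Illustrative patterns only; real scanners ship large, tuned rule sets.
SECRET_PATTERNS = {
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Hypothetical heuristic: a credential-like name assigned a quoted literal
    # of 8+ characters.
    "generic-credential": re.compile(
        r"""(?i)\b(password|passwd|secret|api_key|token)\s*[:=]\s*["'][^"']{8,}["']"""
    ),
}


def find_secrets(lines: list[tuple[int, str]]) -> list[tuple[int, str]]:
    """Scan (line_number, text) pairs, such as a PR's added lines, for likely secrets."""
    hits = []
    for line_no, text in lines:
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(text):
                hits.append((line_no, rule))
    return hits
```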


4. Suggestion Generation and Prioritization

Not every issue matters equally. AI prioritizes:

critical security risks

crash-level bugs

high-complexity sections

maintainability issues
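One simple way to express that ordering in code, assuming each finding carries a category label: sort by a rank table that mirrors the list above, then by file and line so the output stays stable between runs. The category names, the `Finding` type, and `prioritize` are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical category labels, ranked in the same order as the list above.
PRIORITY = {"security": 0, "crash": 1, "complexity": 2, "maintainability": 3}


@dataclass
class Finding:
    category: str
    path: str
    line: int
    message: str


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Sort by category rank, then file and line; unknown categories go last."""
    return sorted(
        findings,
        key=lambda f: (PRIORITY.get(f.category, len(PRIORITY)), f.path, f.line),
    )
```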

5. Inline Comment Delivery on Pull Requests

AI posts suggestions directly inside GitHub, GitLab, Azure DevOps, and Bitbucket PRs. Developers can accept, dismiss, or discuss each finding without switching tools.
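On GitHub, inline comments like these can be posted through the REST API endpoint for pull request review comments (`POST /repos/{owner}/{repo}/pulls/{pull_number}/comments`). The sketch below only builds the request with the standard library and does not send it; `build_review_comment_request` is a hypothetical helper name, and GitLab, Azure DevOps, and Bitbucket each expose their own, different APIs for the same job.

```python
import json
import urllib.request


def build_review_comment_request(
    owner: str, repo: str, pull_number: int, token: str,
    commit_id: str, path: str, line: int, body: str,
) -> urllib.request.Request:
    """Build (but do not send) a request that comments on one line of a PR diff."""
    url = f"https://api.github.com/repos/{owner}/{repo}/pulls/{pull_number}/comments"
    payload = {
        "body": body,
        "commit_id": commit_id,  # SHA of the PR commit the comment refers to
        "path": path,            # file path relative to the repository root
        "line": line,            # line number in the new version of the file
        "side": "RIGHT",         # RIGHT = the post-change side of the diff
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
        },
        method="POST",
    )
```

Passing the returned request to `urllib.request.urlopen` would send it; the token needs permission to write pull request comments.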

Platforms like CodeAnt AI automate this entire pipeline while integrating into existing Git workflows.

Essential Capabilities to Look for in AI Code Review Tools

Below is a simplified version of what teams should evaluate when comparing AI tools for code review:

Capability | Why It Matters |

Line-by-line code analysis | Helps devs fix exactly the right line quickly |

Security vulnerability detection | Prevents high-risk issues from reaching production |

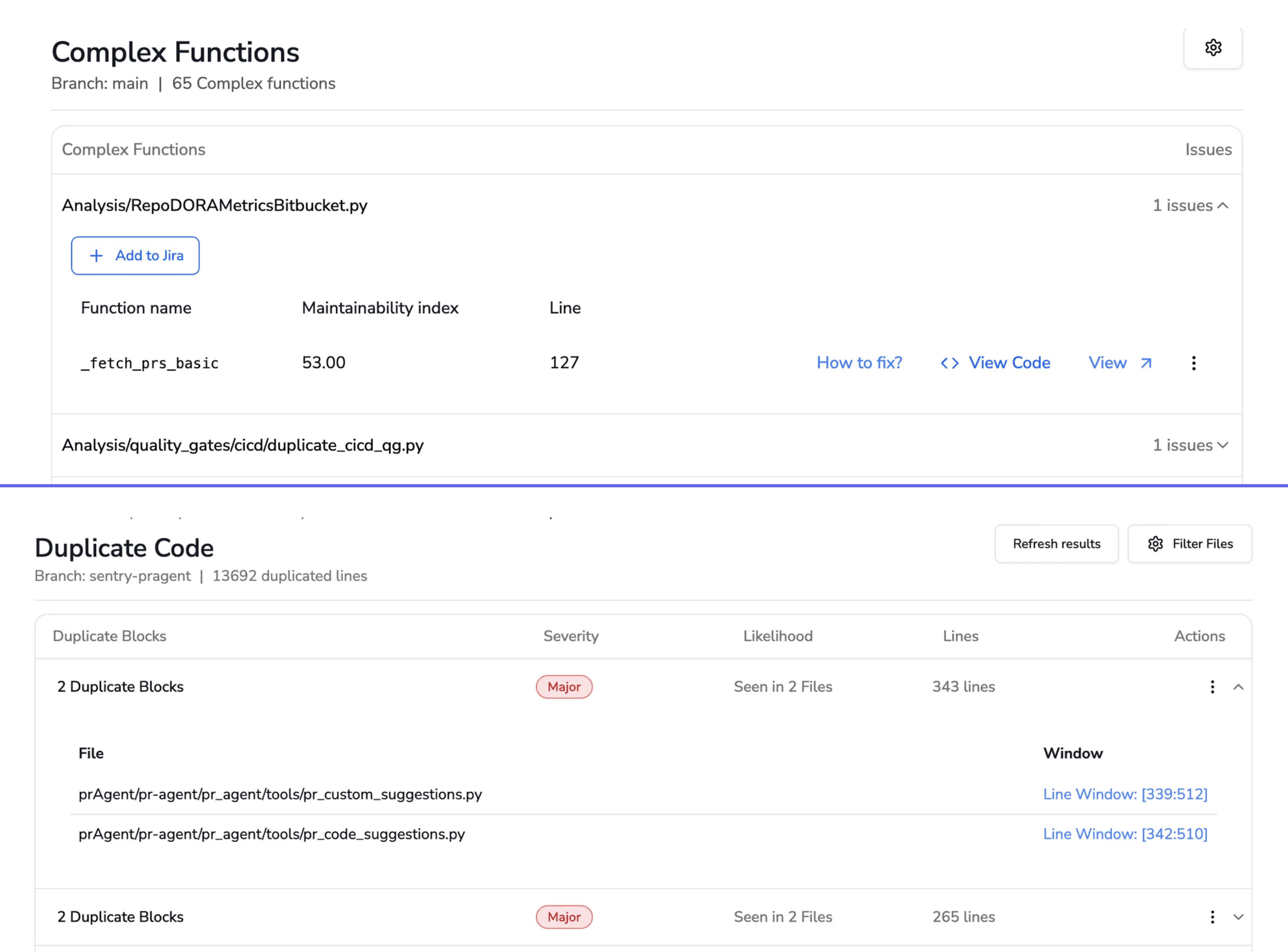

Code quality & complexity scoring | Surfaces maintainability and tech-debt hotspots |

Automated PR summaries | Saves reviewers time understanding changes |

Custom rule configuration | Enforces org-specific standards |

CI/CD + Git platform integration | Ensures AI reviews run automatically |

Line-by-Line Code Analysis

Precise suggestions reduce noise and guesswork during reviews.

Security Vulnerability & Secrets Detection

AI needs to catch secrets and CVEs—this is table stakes for modern pipelines. CodeAnt AI includes native SAST + dependency scanning.

Code Quality and Complexity Assessment

Cyclomatic complexity, duplication, and readability scores help teams understand long-term quality impact.
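Cyclomatic complexity counts the independent paths through a function, roughly one plus the number of decision points. The sketch below approximates it for Python functions with the standard `ast` module; `cyclomatic_complexity` is a hypothetical helper, and the choice of which node types count as branches is a simplifying assumption (dedicated tools handle more constructs, such as comprehension filters).

```python
import ast

# Node types that each add one independent path through the code.
BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp, ast.ExceptHandler)


def cyclomatic_complexity(source: str) -> dict[str, int]:
    """Approximate McCabe complexity per function: 1 + number of decision points.

    Nested functions are also counted inside their enclosing function.
    """
    scores = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = 1
            for child in ast.walk(node):
                if isinstance(child, BRANCH_NODES):
                    score += 1
                elif isinstance(child, ast.BoolOp):
                    # "a and b and c" adds two extra short-circuit branches.
                    score += len(child.values) - 1
            scores[node.name] = score
    return scores
```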

Automated PR Summaries

AI-generated summaries help reviewers get context quickly without reading full diffs.

Custom Rule Configuration

Teams define patterns that matter to them (naming, architecture, style).
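A custom rule can be as small as a pattern, a message, and the set of files it applies to. The sketch below assumes that shape; the `CustomRule` fields, the example rule, and `apply_rules` are all hypothetical, since each tool defines its own rule format.

```python
import fnmatch
import re
from dataclasses import dataclass


@dataclass
class CustomRule:
    rule_id: str
    pattern: str    # regular expression matched against each added line
    message: str
    path_glob: str  # which files the rule applies to


# Hypothetical example: keep stray print() calls out of application code.
NO_PRINT = CustomRule(
    rule_id="no-print-in-src",
    pattern=r"\bprint\(",
    message="Use the project logger instead of print().",
    path_glob="src/*.py",
)


def apply_rules(
    rules: list[CustomRule], path: str, lines: list[tuple[int, str]]
) -> list[tuple[str, int, str]]:
    """Return (rule_id, line_number, message) for every rule match in one file."""
    findings = []
    for rule in rules:
        if not fnmatch.fnmatch(path, rule.path_glob):
            continue  # rule does not cover this file
        regex = re.compile(rule.pattern)
        for line_no, text in lines:
            if regex.search(text):
                findings.append((rule.rule_id, line_no, rule.message))
    return findings
```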

CI/CD & Git Integration

AI must plug into existing GitHub, GitLab, Azure DevOps, and Bitbucket pipelines without friction.

How to Integrate AI Code Reviews Into Your Workflow

With a modern platform, initial setup shouldn’t take more than 10–30 minutes.

1. Connect Your Repository to the AI Review Tool

Authorize the tool through OAuth or install its app to link it to GitHub, GitLab, Bitbucket, or Azure DevOps (ADO).

2. Configure Review Rules & Quality Standards

Set:

severity thresholds

rule categories (security, style, complexity)

org-wide coding standards
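The first two settings can be pictured as a filter applied to every finding before it is posted. The configuration keys, severity names, and `should_report` below are hypothetical, chosen only to illustrate a threshold plus per-category toggles; org-wide coding standards would usually be layered on top as custom rules.

```python
# Hypothetical settings; each tool defines its own configuration schema.
SEVERITY_ORDER = ["info", "minor", "major", "critical"]

REVIEW_CONFIG = {
    "min_severity": "major",  # severity threshold: suppress anything below this
    "categories": {"security": True, "complexity": True, "style": False},
}


def should_report(category: str, severity: str, config: dict = REVIEW_CONFIG) -> bool:
    """Report a finding only if its category is enabled and it meets the threshold."""
    if not config["categories"].get(category, False):
        return False  # category disabled or unknown
    threshold = SEVERITY_ORDER.index(config["min_severity"])
    return SEVERITY_ORDER.index(severity) >= threshold
```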

3. Set Up Automated Triggers on Pull Requests

Choose when AI should run:

on PR open

on PR update

on PRs targeting specific branches (for example, main)

on merge requests
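Behind these options, the tool typically reacts to webhook events from the Git platform. This sketch decides whether to run a review from a GitHub `pull_request` payload (actions `opened`, `synchronize` for newly pushed commits, and `reopened`) or a GitLab merge request payload (actions `open`, `update`, `reopen`), limited to certain target branches. `REVIEWED_BRANCHES` and `should_trigger_review` are hypothetical.

```python
# Target branches whose PRs get an AI review (a hypothetical team choice).
REVIEWED_BRANCHES = {"main", "release"}

GITHUB_ACTIONS = {"opened", "synchronize", "reopened"}
GITLAB_ACTIONS = {"open", "update", "reopen"}


def should_trigger_review(payload: dict) -> bool:
    """Decide from a GitHub or GitLab webhook payload whether to run a review."""
    if payload.get("object_kind") == "merge_request":  # GitLab merge request event
        attrs = payload["object_attributes"]
        return (
            attrs.get("action") in GITLAB_ACTIONS
            and attrs.get("target_branch") in REVIEWED_BRANCHES
        )
    pr = payload.get("pull_request")  # GitHub pull_request event
    if pr is None:
        return False  # some other event type
    return payload.get("action") in GITHUB_ACTIONS and pr["base"]["ref"] in REVIEWED_BRANCHES
```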

4. Establish Quality Gates Before Merge

Block merges while critical issues remain unresolved. This enforces a minimum quality bar on every merge without relying on reviewers to catch everything.
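A common way to wire this up is a CI step whose exit code reflects the gate, marked as a required status check (on GitHub, through branch protection) so that a failing run blocks the merge. The sketch assumes findings arrive as dictionaries with `severity`, `resolved`, `path`, `line`, and `message` fields; that format and `quality_gate` are hypothetical.

```python
import sys


def quality_gate(findings: list[dict], blocking: frozenset = frozenset({"critical"})) -> int:
    """Return 1 (fail) if any blocking-severity finding is still unresolved, else 0."""
    unresolved = [f for f in findings if f["severity"] in blocking and not f["resolved"]]
    for f in unresolved:
        # Print each blocker so the CI log explains why the merge is blocked.
        print(f"BLOCKING {f['path']}:{f['line']}: {f['message']}", file=sys.stderr)
    return 1 if unresolved else 0
```

In a CI job, `sys.exit(quality_gate(findings))` turns the result into the job's pass or fail status.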

5. Monitor Feedback & Iterate

Fine-tune rules, adjust noise levels, and add custom patterns based on team feedback.

Platforms like CodeAnt AI make this mostly one-click.

Best Practices for Team Adoption of AI Code Reviews

Adopting AI is as much about change management as tooling.

Start with a Pilot Team Before Org-Wide Rollout

Begin with one repo or team, collect feedback, tune configurations, then expand.

Define Clear Responsibilities for AI vs Human Reviewers

AI handles:

style

secrets

complexity

known bug patterns

Humans handle:

architecture

logic

business correctness

edge cases

trade-offs

Train Developers to Act on AI Suggestions

Teach devs how to accept, dismiss, or discuss suggestions.

Create a Feedback Loop to Improve Accuracy

Log false positives and misses. Adjust rules accordingly.
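Logging each developer verdict makes this loop measurable. Assuming verdicts are recorded as `(rule_id, verdict)` pairs, where the verdict is `"accepted"` or `"false_positive"` (a hypothetical format), the per-rule false-positive rate shows which rules create noise.

```python
from collections import Counter


def false_positive_rates(outcomes: list[tuple[str, str]]) -> dict[str, float]:
    """Share of each rule's findings that developers marked as false positives."""
    totals = Counter(rule for rule, _ in outcomes)
    false_pos = Counter(rule for rule, verdict in outcomes if verdict == "false_positive")
    # Counter returns 0 for rules with no false positives.
    return {rule: false_pos[rule] / totals[rule] for rule in totals}
```

Rules whose rate stays above a threshold the team chooses are candidates for tuning or disabling.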

Celebrate Early Wins

Share examples of critical bugs caught by AI to build internal momentum.

Balancing Human Expertise with AI Automation

AI augments developers—it doesn’t replace them.

Where AI Excels

catching vulnerability patterns instantly

enforcing style rules consistently

scanning every line of a PR without fatigue

flagging complexity and duplication

providing “always-on” consistency

Where Humans Excel

judging whether code solves the right problem

evaluating architectural implications

mentoring junior developers

reasoning about trade-offs

interpreting business context

Structuring Human-AI Collaboration

A healthy workflow looks like this:

AI reviews first

Humans review the AI findings, then the logic and architecture

Both approvals required before merge

This creates a high-confidence, low-friction system.

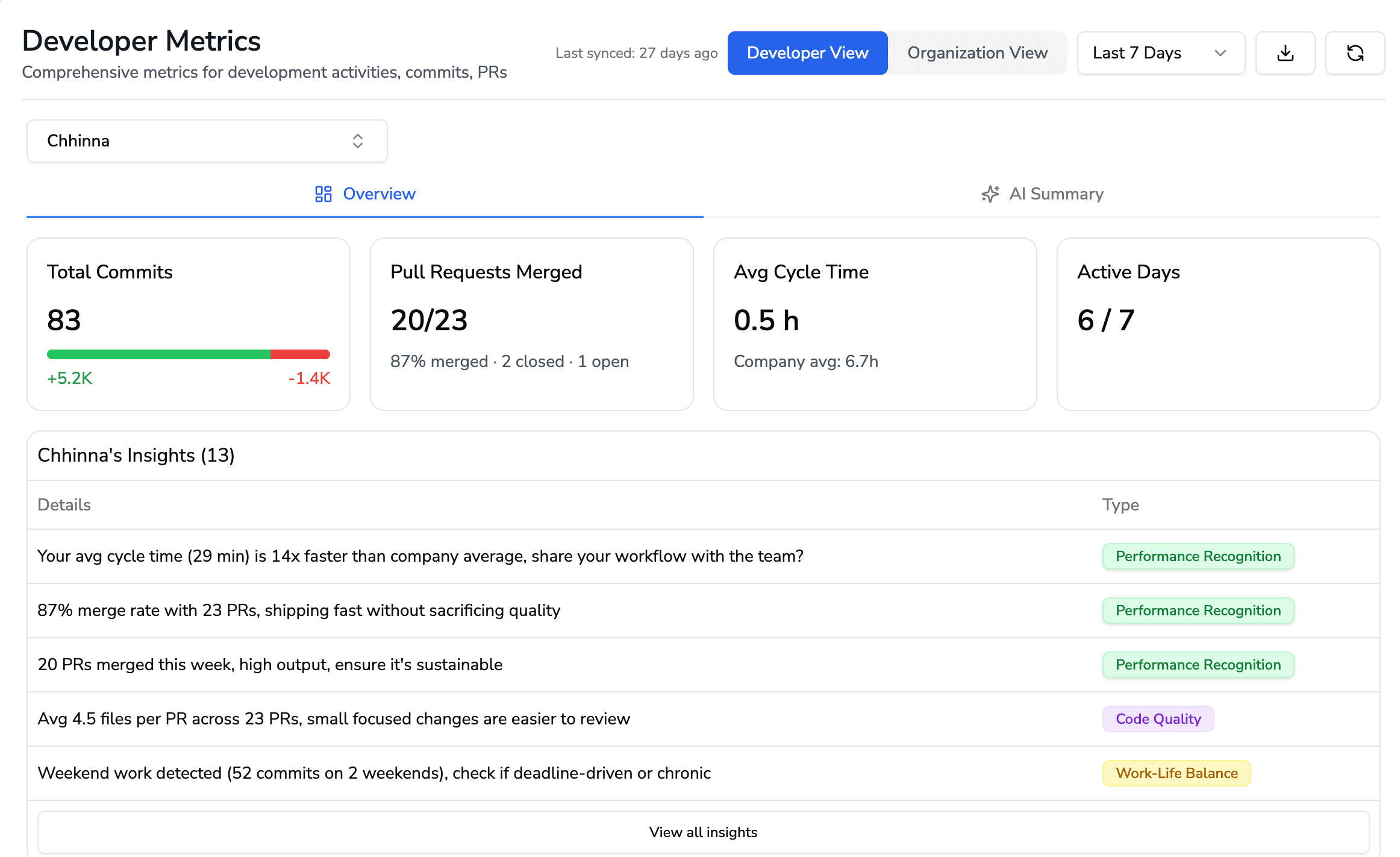

How to Measure the Impact of AI-Assisted Code Reviews

Engineering leaders need data—not opinions—to justify adoption.

Review Cycle Time Reduction

Measures how much faster PRs move from open → merge.
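A minimal sketch of the calculation, assuming PR records with ISO 8601 `created_at` and `merged_at` timestamps like those in GitHub's pull request API responses. The median is used rather than the mean so a few long-lived PRs don't dominate; comparing the value before and after adoption gives the reduction. `median_cycle_time_hours` is a hypothetical helper.

```python
from datetime import datetime
from statistics import median


def _parse(ts: str) -> datetime:
    # GitHub-style timestamps end in "Z"; fromisoformat only accepts "Z" from Python 3.11.
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def median_cycle_time_hours(prs: list[dict]) -> float:
    """Median hours from PR open to merge; unmerged PRs are skipped.

    Raises statistics.StatisticsError if no PR in the list was merged.
    """
    durations = [
        (_parse(pr["merged_at"]) - _parse(pr["created_at"])).total_seconds() / 3600
        for pr in prs
        if pr.get("merged_at")
    ]
    return median(durations)
```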

Defect Escape Rate

Tracks bugs reaching production after review. Lower means higher review effectiveness.
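One common way to express this is the share of all known defects that were found only after release: escaped / (caught before merge + escaped). The sketch assumes the team already counts both numbers, for example from review findings and production incident tickets; the function name is hypothetical.

```python
def defect_escape_rate(caught_before_merge: int, found_in_production: int) -> float:
    """Fraction of known defects that escaped review and surfaced in production."""
    total = caught_before_merge + found_in_production
    if total == 0:
        return 0.0  # no defects recorded in the period
    return found_in_production / total
```

For example, 45 defects caught in review and 5 found in production give 5 / 50 = 0.1, a 10% escape rate.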

Developer Productivity Metrics

Look at:

PR throughput

time spent waiting for reviews

reduction in reviewer load

Code Quality & Technical Debt Trends

Monitor complexity, duplication, and maintainability scores.

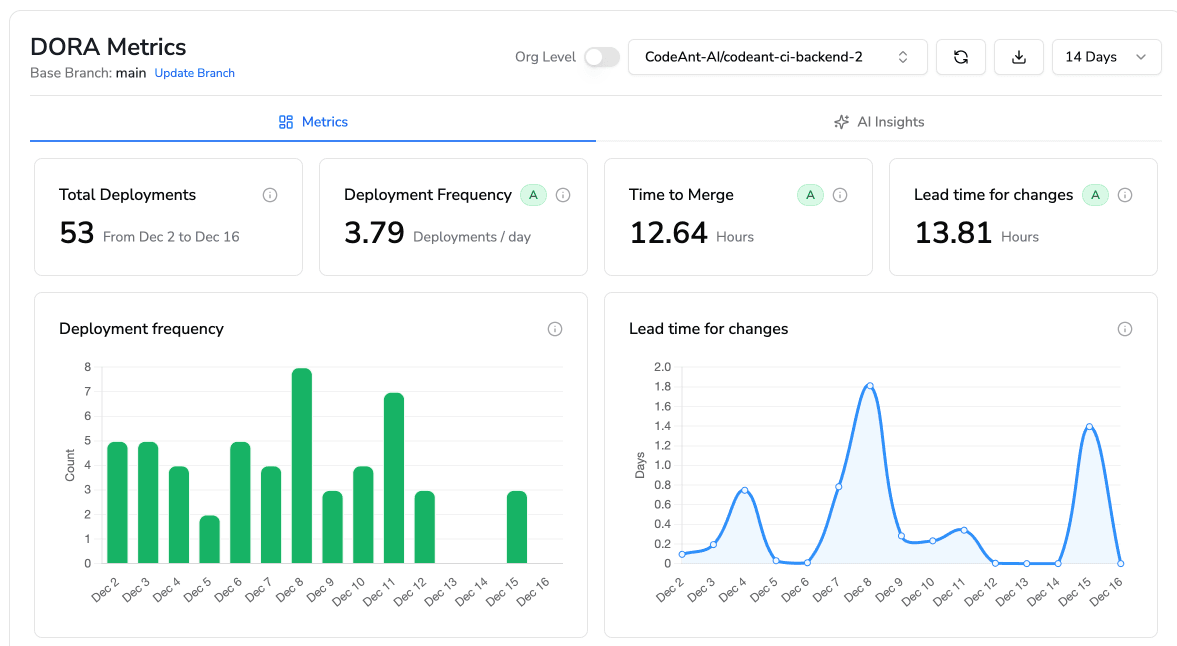

DORA Metrics

AI-assisted reviews can improve:

deployment frequency

lead time

change failure rate

mean time to recovery
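Checking whether these metrics actually move after adoption requires a baseline. The sketch below computes all four from a list of deployment records; the `Deployment` fields and `dora_summary` are assumptions, and recovery time is simplified to the time from a failed deployment to restoration rather than from the start of an incident. A before/after comparison shows correlation, not proof that AI review caused the change.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    deployed_at: datetime
    first_commit_at: datetime               # earliest commit shipped in this deployment
    failed: bool = False                    # caused an incident, rollback, or hotfix
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_summary(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Compute the four DORA metrics for a non-empty list of deployments."""
    failures = [d for d in deploys if d.failed]
    restore_times = [hours(d.restored_at - d.deployed_at) for d in failures if d.restored_at]
    return {
        "deployments_per_day": len(deploys) / period_days,
        "median_lead_time_hours": median(hours(d.deployed_at - d.first_commit_at) for d in deploys),
        "change_failure_rate": len(failures) / len(deploys),
        "mean_time_to_recovery_hours": (
            sum(restore_times) / len(restore_times) if restore_times else 0.0
        ),
    }
```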

These metrics show organizational-level ROI.

Building a Scalable AI-Powered Code Review Culture

AI-assisted code review is not a feature—it’s a cultural shift. Teams that embrace automation ship faster, catch more issues early, and maintain higher code quality over time.

Platforms like CodeAnt AI unify:

security

quality

developer productivity

PR review automation

DORA metrics

code health analytics

…into one end-to-end view of engineering performance.

Ready to bring AI-assisted code reviews to your team? Book a 1:1 with our experts today.