AI Code Review

Jan 30, 2026

12 Best AI Code Review Tools for Backend API Dev Teams in 2026

Sonali Sood

Founding GTM, CodeAnt AI

Backend API teams are drowning in pull requests and missing critical security vulnerabilities and performance issues that derail deployments. Manual reviews steal hours from innovation and slow your 2026 roadmap. This guide ranks the 12 best AI code review tools that can cut review time by up to 80%.

Why Do Backend API Teams Need AI Code Review in 2026?

In 2026, backend API development is faster and more complex than ever. With microservices sprawling and deployment cycles shrinking to hours, manual code review has become a dangerous bottleneck. You can't afford to have senior engineers spend half their week hunting for missing error handlers or debating variable names in pull requests.

That’s where AI code review tools come in. They aren't just spellcheckers anymore; they are intelligent assistants that understand context, security, and architectural patterns. For backend teams, this means catching critical API issues, such as broken object level authorization (BOLA) vulnerabilities or N+1 query problems, before they merge. This guide covers the best tools available right now to help you ship cleaner, safer APIs without slowing down.

What Are AI Code Review Tools for Backend API Development?

AI code review tools are automated systems that integrate directly into your version control workflow to analyze code changes. Unlike traditional static analysis, these tools use Large Language Models (LLMs) to understand the intent behind a code change, not just the syntax. They provide conversational feedback, suggest specific fixes, and can even generate summaries of complex pull requests.

One working definition: "AI code review is an automated review layer that evaluates code changes for correctness, safety, scope alignment, and architectural impact before a human reviewer ever opens the PR."

For backend APIs, these tools specifically look for issues that break contracts, introduce latency, or expose data. They act as a first line of defense, handling the tedious parts of review so humans can focus on logic and design.

Interesting reads:

Roll Out New LLMs Safely using Shadow Testing

Learn If You Need to Replace or Optimize Your LLMs

Self-Evolving LLM Evaluation Pipelines

Hidden Cost of Cheap LLM Models

Will Chasing Every New LLM Hurt Engineering

Why Backend API Teams Need AI Code Review in 2026

Backend teams face unique challenges that frontend teams often don't. A mistake in a backend API doesn't just look bad; it can take down other services or leak sensitive user data. Manual reviews often miss subtle issues like inconsistent API contracts across microservices or complex dependency chains that ripple through a system.

Recent data shows that enterprise review systems need five key capabilities for large, distributed engineering organizations to function effectively. Without automation, maintaining consistency across dozens of repositories is nearly impossible. AI tools solve this by enforcing standards automatically. They catch endpoint security gaps, such as authentication flaws, and ensure schema drift doesn't break downstream consumers. This reduces the cognitive load on reviewers and prevents "rubber stamp" approvals.

Key Features to Prioritize for Backend APIs

Security and Vulnerability Scanning

For API development, security is the top priority. Your tool must go beyond basic syntax checks and perform deep SAST (Static Application Security Testing). It needs to identify the OWASP API Security Top 10 vulnerabilities, such as broken user authentication, excessive data exposure, and mass assignment.
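To make one of these categories concrete, here is a minimal sketch of mass assignment and a common fix. The handler names, the profile fields, and the allowlist are all hypothetical; real frameworks usually offer their own serializers or schema validators for this.

```python
# Hypothetical allowlist: the only fields a client may change on its own profile.
ALLOWED_PROFILE_FIELDS = {"display_name", "email"}


def update_profile_vulnerable(user: dict, payload: dict) -> dict:
    """Mass assignment: every client-supplied key is copied onto the record,
    including privileged ones such as "is_admin"."""
    return {**user, **payload}


def update_profile_safe(user: dict, payload: dict) -> dict:
    """Reject any key that is not explicitly allowed before merging."""
    unexpected = set(payload) - ALLOWED_PROFILE_FIELDS
    if unexpected:
        raise ValueError(f"unexpected fields: {sorted(unexpected)}")
    return {**user, **payload}
```

A reviewer, human or AI, would flag the first function because nothing stops a request body from carrying a field the client should never control.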

Look for tools that also include secrets detection. Backend code often interacts with databases and third-party services, making accidental commits of API keys or credentials a massive risk. The best tools block these commits before they enter the repository.
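As a rough illustration of what secrets detection does under the hood, the sketch below scans added lines against two patterns. The pattern set is deliberately tiny and illustrative; production scanners use far larger rule sets plus entropy checks, and the function name is an assumption rather than any tool's API.

```python
import re

# Illustrative patterns only. The first matches the well-known shape of an
# AWS access key ID; the second is a loose heuristic for hardcoded credentials.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_credential": re.compile(
        r"(?i)\b(api[_-]?key|secret|password|token)\b\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_added_lines(lines: list[str]) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for every suspicious line."""
    findings = []
    for number, line in enumerate(lines, start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((number, name))
    return findings
```

Run as a pre-commit hook or a CI step, a check like this would fail the commit whenever the returned list is non-empty.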

API-Specific Quality Checks

Backend code requires strict adherence to contracts and standards. A generic code reviewer might miss that you changed a public endpoint's response format, breaking mobile clients.

Effective tools prioritize:

Context-aware checks: Analyzing imports, related modules, and shared libraries.

Service boundaries: Ensuring data flows respect architectural limits.

Test conventions: Verifying that new endpoints include proper integration tests.

Lifecycle behavior: Checking initialization sequences and cleanup routines.
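The broken-response-format scenario above can be sketched as a simple contract comparison. This assumes each response schema is reduced to a flat mapping of field name to type name, which is a simplification; real contract checks typically work on OpenAPI or JSON Schema documents.

```python
def breaking_changes(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """Compare two flat response schemas (field name -> type name).

    Removed fields and changed types break existing clients; newly added
    fields are treated as non-breaking and are not reported.
    """
    problems = []
    for field, old_type in old.items():
        if field not in new:
            problems.append(f"removed field: {field}")
        elif new[field] != old_type:
            problems.append(f"type changed: {field} ({old_type} -> {new[field]})")
    return problems
```

For example, renaming `created` to `created_at` in a public response would surface as a removed field, which is exactly the kind of change a mobile client cannot absorb silently.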

Performance and Scalability Analysis

Performance issues in backend APIs compound quickly. A loop that looks fine in a small PR might cause a timeout when processing thousands of requests in production.

AI tools for backend teams should detect complexity spikes and inefficient database queries. Look for features that flag N+1 problems (fetching data in a loop) or expensive operations inside critical paths. The tool should help you maintain low latency by identifying code that will degrade scalability before it merges.
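Here is a small, self-contained sketch of the N+1 pattern and its usual fix, using Python's built-in sqlite3 module. The `users` and `orders` tables are invented for illustration.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Build a tiny in-memory database; the schema is invented for this example."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, total REAL);
        INSERT INTO users VALUES (1, 'ada'), (2, 'lin');
        INSERT INTO orders VALUES (1, 1, 10.0), (2, 1, 5.0), (3, 2, 7.5);
        """
    )
    return conn


def totals_n_plus_one(conn: sqlite3.Connection) -> dict[str, float]:
    """Anti-pattern: one query for the users, then one extra query per user."""
    result = {}
    users = conn.execute("SELECT id, name FROM users").fetchall()
    for user_id, name in users:
        row = conn.execute(
            "SELECT COALESCE(SUM(total), 0) FROM orders WHERE user_id = ?",
            (user_id,),
        ).fetchone()
        result[name] = row[0]
    return result


def totals_single_query(conn: sqlite3.Connection) -> dict[str, float]:
    """Fix: let the database join and aggregate in a single round trip."""
    rows = conn.execute(
        """
        SELECT u.name, COALESCE(SUM(o.total), 0)
        FROM users AS u
        LEFT JOIN orders AS o ON o.user_id = u.id
        GROUP BY u.id, u.name
        """
    ).fetchall()
    return dict(rows)
```

Both functions return the same totals, but the first issues one query per user, so its cost grows with the size of the table while the second stays at a single query.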

The 12 Best AI Code Review Tools for 2026

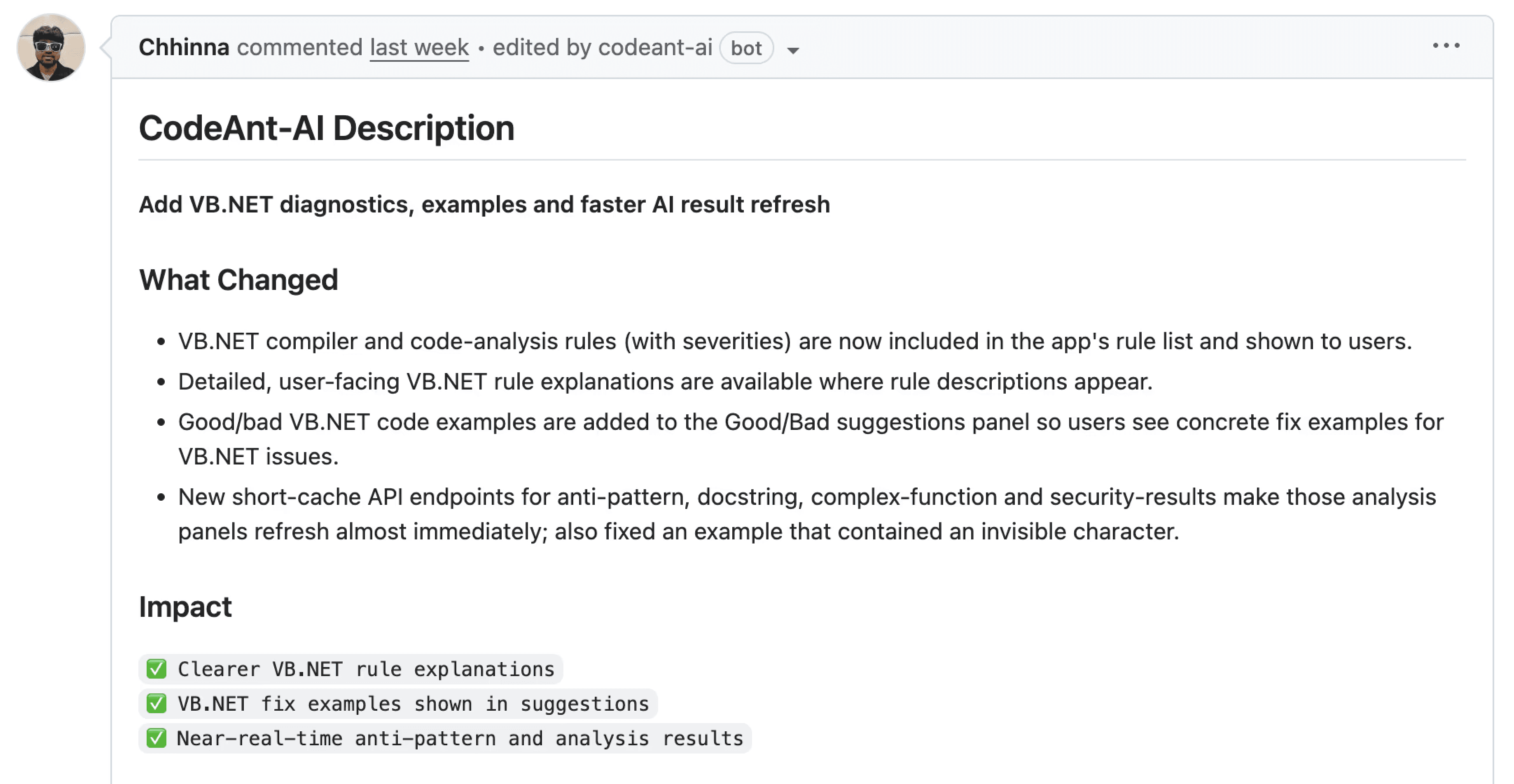

1. CodeAnt AI

CodeAnt AI is a unified code health platform designed for enterprise teams. It combines AI-powered code reviews, security scanning, and quality metrics into a single view. Instead of juggling separate tools for SAST, linting, and PR summaries, CodeAnt AI handles it all, scanning both new PRs and existing repositories to provide a 360° view of engineering health.

Key Features:

Line-by-line AI reviews with context-aware feedback.

One-click auto-fix suggestions for common issues.

Integrated SAST, secrets detection, and dependency checks.

DORA metrics tracking to measure engineering velocity.

Support for 30+ languages including Python, Java, and Go.

Best For: Enterprise teams (100+ developers) wanting a complete platform for security and code quality.

Pricing: Per-user pricing with a 14-day free trial.

Limitations: May be more robust than small startups need for simple linting.

2. CodeRabbit

CodeRabbit focuses heavily on the pull request experience. It uses AI to generate detailed summaries of changes, making it easier for human reviewers to understand the context of a PR quickly. It excels at providing conversational feedback directly in the code comments.

Key Features:

Automated PR summaries and walkthroughs.

Line-by-line code suggestions.

Chat interface to ask questions about the code.

Integration with major issue trackers like Jira.

Best For: Teams that want to speed up the manual review process with better context.

Pricing: Free tier for open source; Pro and Enterprise plans available.

Limitations: Focuses more on the review workflow than deep historical code analysis.

Check out this CodeRabbit alternative.

3. Qodo

Qodo (formerly CodiumAI) distinguishes itself by focusing on test generation and behavior analysis. It doesn't just review code; it helps developers write tests to verify that their code works as intended. This is critical for backend APIs where regression testing is mandatory.

Key Features:

AI-powered test generation for backend logic.

Automated code review agents.

Behavior analysis to predict how changes affect the system.

IDE plugins for early feedback.

Best For: Teams that struggle with test coverage and regression bugs.

Pricing: Teams and Enterprise tiers.

Limitations: The interface can be complex for users only looking for simple syntax checking.

Check out this Qodo alternative.

4. Greptile

Greptile is built to understand the entire codebase context. Unlike tools that only look at the "diff" (the changes), Greptile indexes your whole repository. This allows it to answer complex questions about how a change in one API endpoint might break a service defined in a completely different file.

Key Features:

Full codebase semantic search and understanding.

"Review this PR" functionality that checks against repo-wide patterns.

Natural language query interface for the codebase.

Custom rule instruction via plain English.

Best For: Complex monorepos where changes have far-reaching side effects.

Pricing: Usage-based pricing model.

Limitations: Initial indexing of very large repositories can take time.

5. Codacy

Codacy is a veteran in the code quality space that has integrated AI features. It provides a comprehensive dashboard for tracking technical debt, code coverage, and duplication. It's excellent for engineering managers who need high-level visibility into code health trends over time.

Key Features:

Automated code patterns and style enforcement.

Security and performance checks.

Time-to-merge and other productivity metrics.

Integration with GitHub, GitLab, and Bitbucket.

Best For: Teams that need strict enforcement of coding standards and style guidelines.

Pricing: Open source is free; Pro and Enterprise paid plans.

Limitations: Can be noisy with false positives if not tuned correctly.

Check out this Codacy alternative.

6. Snyk Code

Snyk Code is primarily a security tool that fits into the code review process. It is famous for its speed and developer-friendly focus. It scans for vulnerabilities in real-time as you code and during the PR process, focusing on open source dependencies and proprietary code flaws.

Key Features:

Real-time SAST scanning.

Deep dependency vulnerability analysis.

Fix suggestions for security flaws.

IDE integration for "shift left" security.

Best For: Backend teams where security compliance is the number one requirement.

Pricing: Free for individual devs; Team and Enterprise plans.

Limitations: Less focused on general code style or logic bugs compared to generalist tools.

Check out this Snyk alternative.

7. SonarQube

SonarQube is the industry standard for static analysis. While less "AI-native" than newer tools, it has robust rules for backend languages like Java and C#. It provides a massive library of static analysis rules and is often self-hosted by enterprises with strict data requirements.

Key Features:

Extensive rule library for bugs and vulnerabilities.

"Quality Gates" to block PRs that fail standards.

Technical debt calculation.

On-premise hosting options.

Best For: Large enterprises requiring self-hosted infrastructure and established compliance gates.

Pricing: Community edition is free; Developer and Enterprise editions are paid.

Limitations: Setup and maintenance can be heavy; UI is less modern than AI-first tools.

Check out this SonarQube alternative.

8. DeepSource

DeepSource emphasizes "autofixing" problems. It detects issues and creates commits to fix them automatically, reducing the friction of correcting minor linting errors. It supports all major backend languages and focuses on keeping configuration simple.

Key Features:

Automated pull requests to fix issues.

Low false-positive rate by design.

Custom transformers for code formatting.

Security analysis (SAST) included.

Best For: Teams that want to automate the cleanup of code style and simple bugs.

Pricing: Free for open source and small teams; Enterprise for larger orgs.

Limitations: Deep logical analysis is less advanced than LLM-based competitors.

Check out this DeepSource alternative.

9. CodeScene

CodeScene takes a unique behavioral approach. It analyzes version control history to find "hotspots," files that are changed frequently and have high complexity. It helps backend teams prioritize technical debt based on which code is actually being touched, rather than just static metrics.
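The hotspot idea can be approximated in a few lines. To be clear, this is not CodeScene's actual algorithm; it is a simplified sketch that multiplies how often a file changes by a complexity number you supply, and both the scoring formula and the function name are assumptions.

```python
from collections import Counter


def rank_hotspots(
    commits: list[list[str]],
    complexity: dict[str, int],
    top: int = 3,
) -> list[tuple[str, int]]:
    """Rank files by (number of commits touching the file) x (complexity).

    `commits` holds the file paths changed in each commit, and `complexity`
    maps a path to any complexity proxy, such as lines of code.
    """
    changes = Counter(path for files in commits for path in set(files))
    scored = [
        (path, count * complexity.get(path, 0)) for path, count in changes.items()
    ]
    # Highest score first; ties are broken alphabetically for stable output.
    return sorted(scored, key=lambda item: (-item[1], item[0]))[:top]
```

A file that is complex but never touched scores low here, which matches the point above: prioritize the debt your team actually keeps running into.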

Key Features:

Behavioral code analysis (hotspot detection).

Technical debt prioritization based on business impact.

Team coupling analysis (Conway's Law).

Cost of change estimation.

Best For: Strategic planning and prioritizing refactoring work in legacy backends.

Pricing: Standard and Enterprise licenses.

Limitations: It is an analysis tool, not a "fixer" or traditional reviewer.

10. LinearB

LinearB is a software delivery management platform that includes gitStream for programmable code reviews. It connects code signals to project management data. It focuses on workflow automation, routing PRs to the right people and auto-approving safe changes to improve DORA metrics.

Key Features:

gitStream for automating PR routing and merging.

Correlation of code activity with project issues (Jira).

Detailed DORA metrics and cycle time tracking.

Resource allocation visibility.

Best For: Engineering leaders optimizing team workflow and delivery efficiency.

Pricing: Free tier available; Business and Enterprise plans.

Limitations: Focuses on process and metrics rather than line-by-line code logic analysis.

11. Cursor Bugbot

Cursor is an AI-powered code editor, and its Bugbot feature is a specialized agent designed to find bugs. Unlike CI tools, this runs closer to the developer's workflow. It scans code to find subtle logical errors that traditional linters miss, acting like an automated QA engineer inside the IDE.

Key Features:

Agentic bug hunting capabilities.

Deep integration with the Cursor editor.

Understanding of project-wide context.

Fix generation directly in the editor buffer.

Best For: Individual developers wanting immediate feedback while writing code.

Pricing: Part of the Cursor subscription (Pro/Business).

Limitations: Requires using the Cursor editor; not a server-side CI/CD gate.

12. Aikido Security

Aikido Security is a developer-first security platform that aggregates various scanners and filters out the noise. For backend teams tired of thousands of false alerts, Aikido focuses on what's actually exploitable. It combines SAST, DAST, and secrets detection into a clean UI.

Key Features:

Unified security scanning (SAST, SCA, Secrets).

"Reachability analysis" to reduce false positives.

Fast scanning integrated into CI/CD.

Simple, no-nonsense dashboard.

Best For: SaaS startups needing robust security without hiring a dedicated security team.

Pricing: Free tier for startups; paid plans for growth.

Limitations: Primarily a security tool, lacks general code style or logic review features.

How AI Code Review Tools Work

Analyzing Code Changes with LLMs

Modern tools don't just run regex scripts. They use Large Language Models trained on billions of lines of code. When a developer opens a PR, the tool extracts the "diff" (the changes) along with surrounding context files. It feeds this data into the model, which analyzes the logic against known patterns, security vulnerabilities, and the specific language's best practices.
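The diff-extraction step might look roughly like the sketch below, which pulls the added lines and their new line numbers out of a unified diff for a single file. The prompt layout in `build_review_input` is purely hypothetical; each vendor structures its model input differently, and no model call is made here.

```python
import re

# Matches a unified-diff hunk header such as "@@ -10,4 +12,6 @@".
HUNK_HEADER = re.compile(r"^@@ -\d+(?:,\d+)? \+(\d+)(?:,\d+)? @@")


def added_lines(diff_text: str) -> dict[int, str]:
    """Map new-file line numbers to the text of each added line.

    Simplification: assumes the diff covers a single file.
    """
    added: dict[int, str] = {}
    new_line = None  # stays None until the first hunk header is seen
    for line in diff_text.splitlines():
        match = HUNK_HEADER.match(line)
        if match:
            new_line = int(match.group(1))
            continue
        if new_line is None:
            continue  # file headers ("---", "+++") appear before the first hunk
        if line.startswith("+"):
            added[new_line] = line[1:]
            new_line += 1
        elif line.startswith("-") or line.startswith("\\"):
            continue  # removed lines and "\ No newline" markers do not advance
        else:
            new_line += 1  # unchanged context line
    return added


def build_review_input(path: str, diff_text: str) -> str:
    """Assemble a plain-text review request (hypothetical format)."""
    lines = [f"File: {path}", "Added lines:"]
    lines += [f"{number}: {text}" for number, text in added_lines(diff_text).items()]
    return "\n".join(lines)
```

Keeping the line numbers matters because the tool later needs them to attach each comment to the right place in the PR.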

Generating Suggestions and Summaries

Once analyzed, the AI formulates a response. For simple errors, it generates a code snippet that fixes the issue. For complex architectural concerns, it writes a comment explaining why the code might be problematic (e.g., "This query might cause a deadlock under load"). Many tools also generate a high-level summary of the PR, explaining to human reviewers what changed and why, which saves massive amounts of time.

Integrating with CI/CD Pipelines

These tools live inside your workflow. They connect via GitHub Apps, GitLab Webhooks, or Azure DevOps integrations.

Trigger: Developer pushes code.

Scan: Tool runs analysis in the background.

Feedback: Comments appear directly on the PR lines.

Gate: If critical issues (like secrets or severe bugs) are found, the tool can block the merge button until they are resolved.
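The feedback and gate steps above can be sketched as a small decision function. The `Finding` shape, the severity levels, and the returned dictionary are assumptions made for illustration; real tools report results through their platform's own status-check mechanism.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    INFO = 1
    WARNING = 2
    CRITICAL = 3


@dataclass(frozen=True)
class Finding:
    path: str
    line: int
    severity: Severity
    message: str


def review_gate(findings: list[Finding], block_at: Severity = Severity.CRITICAL) -> dict:
    """Turn scan findings into PR comments and a pass/block decision."""
    blocking = [f for f in findings if f.severity >= block_at]
    return {
        "status": "blocked" if blocking else "passed",
        "blocking": len(blocking),
        # Every finding becomes a comment; only severe ones block the merge.
        "comments": [
            f"{f.path}:{f.line} [{f.severity.name}] {f.message}" for f in findings
        ],
    }
```

Keeping the threshold configurable lets a team start with a lenient gate during a pilot and tighten it once the noise level is under control.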

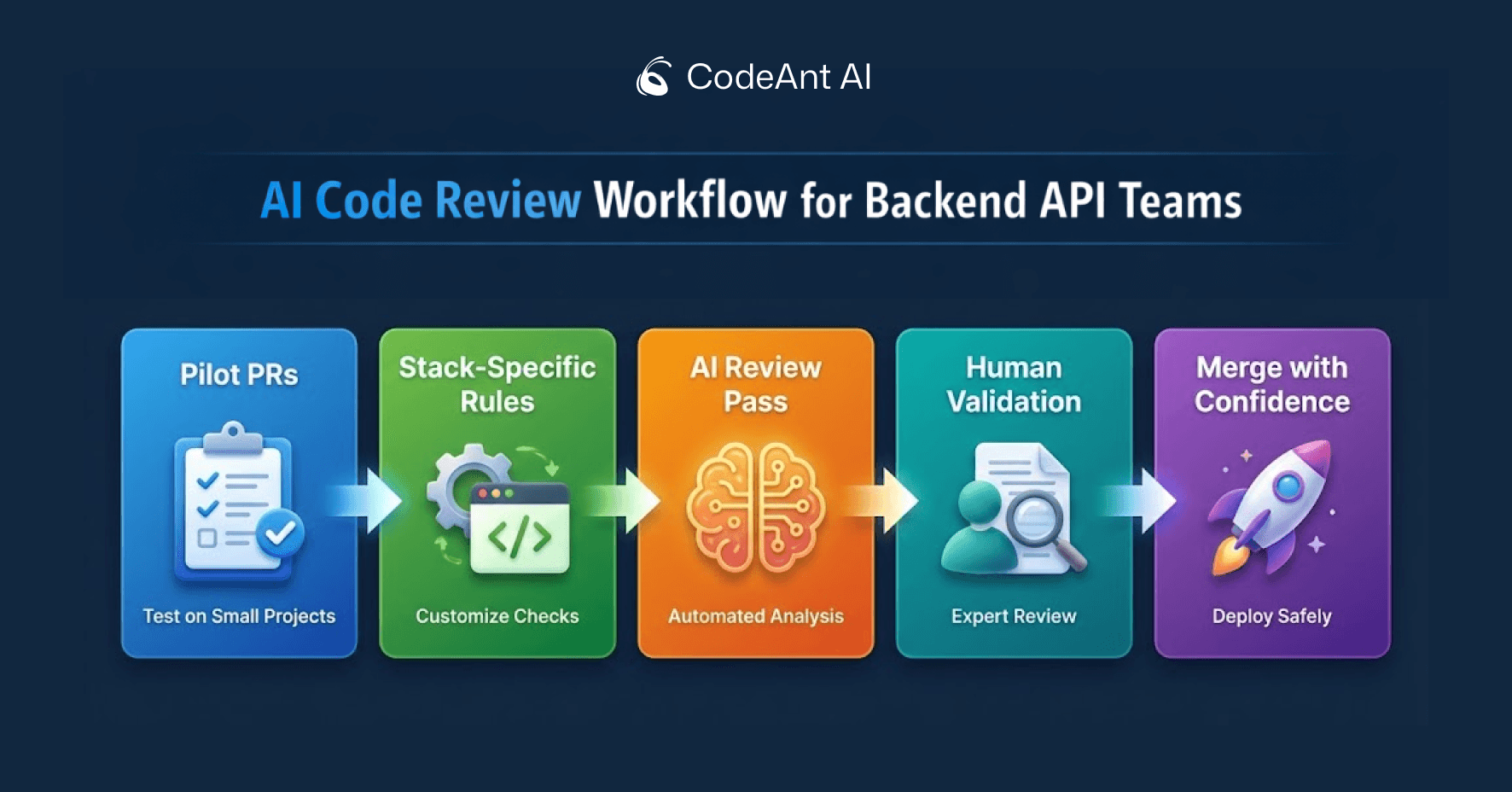

Best Practices for Backend API Teams

Start with Pilot PRs

Don't turn on every rule for every repository at once. Pick a non-critical microservice or a small team to pilot the tool. This allows you to calibrate the "noise" level. You want the AI to be helpful, not annoying. If the tool flags every single missing docstring, developers will learn to ignore it.

Customize Rules for Your Stack

Generic rules aren't enough for specialized backends. If you use Django, configure the tool to look for Django-specific security flaws. If you have an internal naming convention for API endpoints (e.g., /api/v1/resource), add custom instructions to enforce it. The best tools allow you to write custom rules in plain English or YAML to match your organization's standards.
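A custom endpoint-naming rule like the one described could be expressed as a short check. The exact convention encoded here (a numeric version, lowercase kebab-case segments, and `{param}` placeholders) is an assumed example, so adjust the pattern to your own standard.

```python
import re

# Assumed convention: /api/v<number>/ followed by lowercase kebab-case
# segments or {snake_case} path parameters.
ROUTE_RULE = re.compile(r"^/api/v\d+(/([a-z][a-z0-9-]*|\{[a-z_]+\}))+$")


def naming_violations(routes: list[str]) -> list[str]:
    """Return the routes that do not follow the naming convention."""
    return [route for route in routes if not ROUTE_RULE.fullmatch(route)]
```

Given `/api/v1/orders`, `/api/v1/getOrders`, and `/orders`, this flags the last two: one uses camelCase and the other skips the version prefix.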

Combine AI with Human Oversight

AI is a tool, not a replacement. AI excels at catching patterns, syntax errors, and known vulnerabilities. Humans excel at understanding business logic, architectural fit, and user intent. Use AI to handle the "grunt work" of review, checking for null pointers and style violations, so your senior engineers can focus on the system design and long-term maintainability.

Common Mistakes in AI Code Review Adoption

One of the biggest mistakes teams make is ignoring context window constraints. AI models have a limit on how much text they can process. If you dump a massive PR with 50 changed files into the tool, it might hallucinate or miss critical context. Encourage smaller, atomic PRs, which is a good practice anyway.
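If a large PR can't be avoided, one workaround is to review it in batches that fit the model's budget. The sketch below assumes a crude estimate of about four characters per token, which is only a rule of thumb, and the function names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Very rough estimate: about four characters per token (an assumption)."""
    return max(1, len(text) // 4)


def batch_files(files: dict[str, str], budget: int) -> list[list[str]]:
    """Greedily group changed files so each batch stays within the token budget.

    `files` maps a path to its diff text. A single file that exceeds the
    budget still gets a batch of its own and would need further splitting.
    """
    batches: list[list[str]] = []
    current: list[str] = []
    used = 0
    for path, diff in files.items():
        cost = estimate_tokens(diff)
        if current and used + cost > budget:
            batches.append(current)
            current, used = [], 0
        current.append(path)
        used += cost
    if current:
        batches.append(current)
    return batches
```

Batching loses cross-file context between groups, which is another reason smaller PRs remain the better fix.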

Another pitfall is alert fatigue. If a tool reports 100 "issues" on a legacy codebase, the team will disable it. Configure the tool to only report on new code (the diff) rather than the entire history. This ensures developers are only responsible for fixing what they just touched, making adoption smoother.
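Restricting reports to new code comes down to a filter over the lines a PR actually changed. This is a minimal sketch; the tuple layout for findings and the `changed_lines` mapping are assumptions, and most tools expose this as a configuration setting rather than code you write yourself.

```python
def only_new_code(
    findings: list[tuple[str, int, str]],
    changed_lines: dict[str, set[int]],
) -> list[tuple[str, int, str]]:
    """Keep findings whose (path, line) falls on a line changed in this PR.

    Each finding is (path, line_number, message); `changed_lines` maps a
    path to the set of new-file line numbers touched by the diff.
    """
    return [
        finding
        for finding in findings
        if finding[1] in changed_lines.get(finding[0], set())
    ]
```

Issues in untouched legacy code still exist, of course; they are simply tracked elsewhere instead of being attached to an unrelated PR.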

Conclusion

In 2026, relying solely on human review for backend APIs is a risk you don't need to take. The right AI code review tool acts as a force multiplier, catching security flaws and enforcing consistency while freeing your team to build features.

Whether you choose a unified platform like CodeAnt AI for complete visibility, or a specialized tool for security or workflow automation, the goal is the same: shipping secure, high-quality code faster. Start by evaluating your team's biggest pain points, be it security gaps, slow review cycles, or technical debt, and select the tool that solves them best.

Ready to ship secure, high-quality API code faster? Book your 1:1 with our experts today at CodeAnt AI.