
Jan 31, 2026

Top 12 AI Code Review Tools to Speed Up Full-Stack Development

Sonali Sood

Founding GTM, CodeAnt AI

Struggling with endless pull request backlogs that slow your full-stack team's releases and drain developer productivity? Manual code reviews often miss subtle bugs while consuming, by some estimates, 30-50% of engineering hours. This article ranks the 12 best AI code review tools for 2026, with actionable picks for automating reviews and reclaiming time; the top tools can cut review cycles by as much as 70%.

Why AI Code Review Is Now Table Stakes for Full-Stack Teams

Code review bottlenecks are the silent killers of product velocity. In 2026, engineering teams aren't just looking for tools to catch syntax errors; they need intelligent systems that understand context, security, and architectural intent. The days of waiting 48 hours for a simple peer review are fading.

AI code review tools have shifted from experimental novelties to essential infrastructure for full-stack teams. They don't replace human engineers but act as a first line of defense, filtering out noise and catching complex issues before a human ever looks at the PR. For product engineering teams, this means shipping features faster without accumulating technical debt. Here is how the top tools stack up this year.

What Are AI Code Review Tools?

AI code review tools are specialized software agents that analyze source code using Large Language Models (LLMs) and static analysis. Unlike traditional linters that strictly follow rule sets, these tools understand the intent behind a code change. They can suggest refactoring, detect logic bugs, and even explain complex diffs in plain English.

The goal is to automate the tedious parts of the review process. As noted by industry experts:

"AI code review tools are designed to make reviews faster, easier and more secure. It delivers AI-driven insights and integrates seamlessly into your existing workflows."

In practice, they act as an always-on senior engineer, providing immediate feedback on every commit.


How AI Code Review Tools Work

Modern AI review tools go beyond simple pattern matching. They use advanced techniques to understand your specific codebase rather than just generic coding standards.

Here is how they operate under the hood:

Retrieval-Augmented Generation (RAG): The tool indexes your entire repository to understand how a new change affects existing architecture.

Multi-Agent Frameworks: Distinct AI agents handle different tasks; for example, one agent generates tests while another reviews logic.

Automated Test Generation: Some tools actively write unit tests and suggest coverage improvements based on the new code.

This multi-layered approach helps keep feedback relevant to your specific project structure.
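The retrieval step in a RAG pipeline can be illustrated with a toy example. This is a minimal sketch, assuming a bag-of-words cosine similarity as a stand-in for the learned embeddings and vector stores that production tools use; the chunk names and the `retrieve_context` helper are hypothetical. It ranks indexed repository snippets by similarity to a diff so the most related code can be handed to the model alongside the change.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Split code into lowercase identifier-like tokens and count them."""
    return Counter(re.findall(r"[a-z_][a-z0-9_]*", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_context(diff: str, index: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of the k indexed chunks most similar to the diff.

    Hypothetical helper: real tools embed chunks with a model instead of counting tokens.
    """
    query = tokenize(diff)
    ranked = sorted(index, key=lambda name: cosine(query, tokenize(index[name])), reverse=True)
    return ranked[:k]


# Hypothetical repository chunks, keyed by "file:function".
repo_index = {
    "billing.py:charge_customer": "def charge_customer(customer_id, amount): invoice = create_invoice(customer_id, amount)",
    "auth.py:login": "def login(username, password): session = create_session(username)",
    "billing.py:create_invoice": "def create_invoice(customer_id, amount): return Invoice(customer_id, amount)",
}

diff = "+ invoice = create_invoice(customer_id, amount * tax_rate)"
print(retrieve_context(diff, repo_index))
```

The unrelated `auth.py:login` chunk shares no tokens with the diff, so it is left out of the context; a real index would hold thousands of chunks and use semantic rather than lexical similarity.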


Key Benefits for Full-Stack Product Engineering Teams

For full-stack teams juggling frontend state management and backend database migrations, context is everything. AI tools provide a unified view of code health across the stack. The primary benefit is speed, but the secondary benefit, consistency, is arguably more valuable.

By automating the initial pass, teams reduce context switching: developers get instant feedback while the code is still fresh in their minds. These tools can also reduce alert fatigue. Some recent reports suggest that context-aware AI produces approximately 50% fewer false positives than traditional static analysis tools. Fewer false alarms means engineers stop ignoring warnings and start fixing actual problems.
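Figures like this are worth checking against your own codebase before you rely on them. A minimal sketch, assuming you have triaged a sample of each tool's alerts on the same pull requests and labeled each alert as a true or false positive; the counts below are hypothetical placeholders, not measured results. It computes each tool's false-positive rate and the relative reduction between them.

```python
def false_positive_rate(true_positives: int, false_positives: int) -> float:
    """Share of raised alerts that turned out to be false positives."""
    total = true_positives + false_positives
    if total == 0:
        raise ValueError("no alerts to evaluate")
    return false_positives / total


def relative_reduction(baseline: float, candidate: float) -> float:
    """How much lower the candidate rate is, as a fraction of the baseline rate."""
    if baseline == 0:
        raise ValueError("baseline rate must be non-zero")
    return (baseline - candidate) / baseline


# Hypothetical triage results on the same sample of pull requests.
static_fp = false_positive_rate(true_positives=55, false_positives=45)  # 0.45
ai_fp = false_positive_rate(true_positives=75, false_positives=25)      # 0.25
print(f"Relative reduction: {relative_reduction(static_fp, ai_fp):.0%}")
```

Measuring on your own repositories matters because false-positive rates depend heavily on language, framework, and how strictly each tool is configured.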

How to Choose the Best AI Code Review Tool

Selecting the right tool depends on your team's specific bottlenecks. A team struggling with security vulnerabilities needs a different tool than a team slowing down due to lack of documentation.

Critical Features for 2026

When evaluating tools this year, look for capabilities that go beyond simple syntax checking. The best tools offer:

AI-Powered Semantic Analysis: Models trained on large open-source codebases can flag unusual or previously unknown bug patterns.

Context Visualization: Tools like CodeRabbit can generate sequence diagrams or flow explanations for complex code changes.

Learning Capability: Advanced platforms like Greptile learn from your feedback and adapt to your project's specific style over time.

Pricing, Integration, and Scalability Factors

Integration support is non-negotiable. If a tool doesn't slot into your existing Git workflow, it won't get used.

Here is a comparison of top contenders based on current data:

| Tool | Noise Reduction | Git Integration | Compliance Automation | AI Insights | Pricing |
|---|---|---|---|---|---|
| Aikido Security | ✅ Up to 95% | ✅ Easy setup (GitHub, GitLab, Azure DevOps, CircleCI) | ✅ Full support (SOC 2, GDPR, ISO) | ✅ Advanced (contextual) | ✅ Predictable |
| CodeRabbit | ✅ High | ✅ Supported (GitHub, GitLab, Azure DevOps) | ✅ Supported (SOC 2, GDPR) | ✅ Advanced (learning) | ❌ High |
| CodeAnt AI | ✅ High | ✅ Supported (GitHub, GitLab, Bitbucket, Azure DevOps) | ✅ Full support (SOC 2, ISO 27001) | ✅ Advanced (AST-based) | ✅ Moderate |

1. CodeAnt AI

CodeAnt AI is a unified code health platform that combines AI code review, security scanning, and quality metrics in one tool. It scans both new code and existing repositories, understanding context rather than just diffs. CodeAnt AI stands out as the most comprehensive platform for teams that want to manage code review, security, and quality in a single place. While many tools focus solely on the PR comment stream, CodeAnt AI looks at the broader health of the application.

It excels at reducing technical debt by enforcing consistency across the entire SDLC.

CI/CD Integration: Supports common CI/CD tools to catch issues before deployment.

Automated Documentation: It can automatically generate documentation for the entire codebase, keeping wikis up to date.

Line-by-line AI reviews: Context-aware feedback on every PR.

One-click auto-fixes: Instantly resolve common issues and style violations.

Comprehensive scanning: Includes SAST, secrets detection, and dependency vulnerability checks.

DORA metrics: Tracks engineering velocity and deployment frequency.

Custom Rules: Allows teams to define and enforce custom coding standards specific to their organization.
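To make "custom rules" concrete, here is a minimal sketch of how team-defined rules might be applied to the added lines of a unified diff. The rule names, patterns, and the `check_diff` helper are hypothetical and are not CodeAnt AI's rule format; production tools typically match on syntax trees rather than raw regular expressions. One rule doubles as a crude secrets check for AWS-style access key IDs.

```python
import re
from dataclasses import dataclass


@dataclass
class Rule:
    name: str
    pattern: re.Pattern
    message: str


# Hypothetical organization-specific rules.
RULES = [
    Rule("no-console-log", re.compile(r"\bconsole\.log\("), "Remove debug logging before merge."),
    Rule("no-hardcoded-aws-key", re.compile(r"AKIA[0-9A-Z]{16}"), "Possible hardcoded AWS access key."),
]


def check_diff(diff: str) -> list[tuple[int, str, str]]:
    """Return (diff line number, rule name, message) for each violation on an added line."""
    findings = []
    for lineno, line in enumerate(diff.splitlines(), start=1):
        # Only inspect lines the PR adds; skip the "+++" file header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for rule in RULES:
            if rule.pattern.search(line):
                findings.append((lineno, rule.name, rule.message))
    return findings


sample_diff = """\
+++ b/src/api.ts
+const key = "AKIAABCDEFGHIJKLMNOP";
-console.log("old");
+console.log("debug", user);
"""
for finding in check_diff(sample_diff):
    print(finding)
```

Restricting checks to added lines keeps the rule from re-flagging legacy code on every PR, which is one common way to introduce new standards without a flood of alerts.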

For teams wanting a unified solution that fixes issues rather than just flagging them, CodeAnt AI is the top choice.

2. CodeRabbit

CodeRabbit has become a favorite for its ability to "read" code like a human. It focuses heavily on the pull request experience, providing detailed summaries that help human reviewers get up to speed quickly.

Its strength lies in explanation and visualization.

PR Summaries: Provides natural language summaries of code changes, saving reviewers from reading every line of diff.

Context Visualization: Can generate sequence diagrams or flow explanations for complex code changes.

Chat Interface: Developers can chat with the bot directly in the PR comments to clarify suggestions.

If your primary pain point is the time it takes for senior engineers to understand a PR, CodeRabbit is a strong contender.

Check out this CodeRabbit alternative.

3. Qodo

Formerly known as CodiumAI, Qodo focuses on test integrity and enterprise governance. It is designed for large organizations where code quality must be managed across hundreds of repositories and thousands of developers.

Qodo excels at generating test suites to ensure that new code doesn't break existing functionality.

AI-Generated Test Suggestions: Automatically proposes unit and integration tests based on code changes to catch regressions before merge.

Quality & Complexity-Aware Code Review: Analyzes logic, maintainability, and complexity to flag risky code paths and technical debt early.

IDE & GitHub Integration: Delivers instant feedback directly inside IDEs and pull requests, without breaking developer workflow.

It integrates deeply with enterprise workflows to enforce strict quality gates.

Check out this Qodo alternative.

4. Greptile

Greptile differentiates itself by understanding the entire repository context better than most. It acts as an expert system that knows how Module A interacts with Module B, even if they are in different folders.

This tool is particularly useful for onboarding new developers who need to understand the codebase quickly.

AI Code Review: Automatically reviews PRs with full codebase context, reducing "missing context" errors.

Learning Capability: Greptile learns from your feedback and adapts to your project's specific conventions.

Contextual Assistance: Developers can ask Greptile natural language questions about the codebase to find logic paths.

5. Snyk Code

Snyk remains the heavyweight champion for security-focused code analysis. While it has expanded its AI capabilities, its core strength is still its massive database of vulnerabilities.

Snyk is less about style and more about risk. It scans open-source dependencies for known vulnerabilities and checks your proprietary code for security flaws like SQL injection or cross-site scripting.
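For readers less familiar with these flaw classes, here is the kind of SQL injection pattern a SAST tool is designed to flag. This sketch uses Python's built-in `sqlite3` module with a hypothetical `users` table; it contrasts a query built by string concatenation, which an attacker can manipulate, with a parameterized query, which treats input strictly as data.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 1), ("bob", 0)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is concatenated directly into the SQL text.
    return conn.execute("SELECT name FROM users WHERE name = '" + name + "'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safe: the "?" placeholder sends input as a bound parameter, never as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


conn = setup()
payload = "x' OR '1'='1"
print(find_user_unsafe(conn, payload))  # returns every row
print(find_user_safe(conn, payload))    # returns no rows
```

The injected `OR '1'='1'` turns the unsafe query's filter into a condition that is always true, which is exactly the data-flow pattern (untrusted input reaching a query string) that security scanners trace.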

Check out this Snyk alternative.

6. Codacy

Codacy is ideal for engineering managers who need to enforce standardization across a large team. It focuses on code patterns, complexity, and style consistency.

It provides a dashboard that gives a high-level view of project health.

Customizable Quality Gates: Teams can set minimum criteria for merging code, like coverage percentages or linting thresholds.

Real-Time Feedback: As soon as code is pushed, it provides automated insights, speeding up iteration cycles.

Multiple Language Support: Works well for diverse stacks, enforcing standards consistently across backend and frontend.
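A quality gate is essentially a set of thresholds evaluated before merge. The sketch below assumes hypothetical metric names and threshold values (80% coverage, no new critical issues, at most five new minor issues); it illustrates the idea and is not Codacy's configuration format.

```python
from dataclasses import dataclass


@dataclass
class PrMetrics:
    coverage_pct: float
    new_critical_issues: int
    new_minor_issues: int


@dataclass
class Gate:
    # Hypothetical thresholds; teams tune these per repository.
    min_coverage_pct: float = 80.0
    max_new_critical: int = 0
    max_new_minor: int = 5


def evaluate_gate(metrics: PrMetrics, gate: Gate) -> list[str]:
    """Return a list of failure reasons; an empty list means the PR may merge."""
    failures = []
    if metrics.coverage_pct < gate.min_coverage_pct:
        failures.append(f"coverage {metrics.coverage_pct:.1f}% < {gate.min_coverage_pct:.1f}%")
    if metrics.new_critical_issues > gate.max_new_critical:
        failures.append(f"{metrics.new_critical_issues} new critical issue(s)")
    if metrics.new_minor_issues > gate.max_new_minor:
        failures.append(f"{metrics.new_minor_issues} new minor issue(s) > {gate.max_new_minor}")
    return failures


print(evaluate_gate(PrMetrics(coverage_pct=76.5, new_critical_issues=0, new_minor_issues=2), Gate()))
```

Returning every failure reason, rather than stopping at the first, gives the author a complete list to fix in one pass.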

Check out this Codacy alternative.

7. DeepSource

DeepSource is built for teams that want granular control over their static analysis. It is famous for its "autofix" capability, where it not only finds issues but creates a commit to fix them.

The platform shines in its configurability.

Real-Time Commit Analysis: Scans every commit instantly to catch bugs and quality issues before they reach pull requests.

One-Click Autofix: Automatically fixes common code issues and style violations with a single action.

OWASP Top 10 Security Scanning: Detects critical API and application vulnerabilities aligned with OWASP Top 10 standards.

Code Coverage Tracking: Monitors test coverage changes to ensure new code is properly validated before merge.

Check out this DeepSource alternative.

8. CodeScene

CodeScene takes a different approach by analyzing the behavior of the team alongside the code. It uses "forensic code analysis" to identify hotspots: files that are both frequently changed and frequently buggy. It also visualizes coupling between modules and identifies "knowledge silos" where only one developer understands a critical part of the system.

Hotspot Analysis: Identifies high-risk files that are frequently changed and most likely to cause production issues.

Code Health Trends: Tracks how maintainability, complexity, and risk evolve across the codebase over time.

Team Coordination Metrics: Surfaces ownership gaps and collaboration bottlenecks that slow development velocity.

Pull Request Risk Assessment: Scores PRs based on change size, complexity, and historical failure patterns before merge.
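The hotspot idea can be approximated with nothing more than version-control history. A minimal sketch, assuming change frequency is counted from the output of `git log --format=format: --name-only` and that lines of code serve as a crude complexity proxy; CodeScene's actual scoring uses its own code health metrics, so treat this only as an illustration of the concept. The file names and line counts are hypothetical.

```python
from collections import Counter


def change_frequencies(git_log_output: str) -> Counter:
    """Count how many commits touched each file (blank lines separate commits)."""
    return Counter(line.strip() for line in git_log_output.splitlines() if line.strip())


def hotspots(freqs: Counter, loc: dict[str, int], top: int = 3) -> list[tuple[str, int]]:
    """Rank files by change count x lines of code, a crude hotspot score."""
    scores = {path: freqs[path] * loc.get(path, 0) for path in freqs}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:top]


# Hypothetical output of: git log --format=format: --name-only
log_output = """
src/checkout.ts
src/cart.ts

src/checkout.ts

src/checkout.ts
README.md
"""
# Hypothetical line counts per file.
lines_of_code = {"src/checkout.ts": 900, "src/cart.ts": 300, "README.md": 40}

print(hotspots(change_frequencies(log_output), lines_of_code))
```

Multiplying the two signals means a large file nobody touches, or a tiny file that changes constantly, ranks below a large file that changes constantly, which is where review attention usually pays off most.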

9. LinearB

LinearB is a software delivery and productivity analytics platform that ties code review activity directly to engineering outcomes. It connects pull request behavior to DORA metrics such as deployment frequency and lead time, and its gitStream feature automates review workflows through programmable rules. The focus is less on line-by-line code critique and more on accelerating safe delivery at scale.
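Deployment frequency and lead time are simple to compute once commit and deployment timestamps are available. This sketch assumes a hypothetical list of (commit time, deploy time) pairs for changes shipped during one week and uses the median commit-to-production time as lead time for changes; LinearB's own definitions and data sources may differ.

```python
from datetime import datetime, timedelta
from statistics import median

# Hypothetical (committed_at, deployed_at) pairs for changes shipped in one week.
changes = [
    (datetime(2026, 1, 5, 9, 0), datetime(2026, 1, 5, 15, 0)),
    (datetime(2026, 1, 6, 10, 0), datetime(2026, 1, 7, 10, 0)),
    (datetime(2026, 1, 8, 8, 0), datetime(2026, 1, 8, 12, 0)),
]
deployments = {deployed for _, deployed in changes}
period_days = 7


def deployment_frequency(deploys: set[datetime], days: int) -> float:
    """Average number of production deployments per day over the period."""
    return len(deploys) / days


def median_lead_time(pairs: list[tuple[datetime, datetime]]) -> timedelta:
    """Median time from commit to running in production."""
    return median(deployed - committed for committed, deployed in pairs)


print(f"Deploys/day: {deployment_frequency(deployments, period_days):.2f}")
print(f"Median lead time: {median_lead_time(changes)}")
```

The median is used instead of the mean so that one slow change (the 24-hour one here) does not dominate the figure.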

Key Capabilities

DORA metrics integration: Maps PR activity to deployment frequency, lead time, and cycle time.

Programmable review workflows: Define rules like auto-approving docs changes while enforcing senior review for risky code.

Policy-as-code: Encodes review and merge policies directly into workflows for consistent enforcement.

Risk-aware automation: Speeds up low-risk changes while adding guardrails around high-impact modifications.
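A programmable review workflow like the ones in the list above can be sketched as a small rule function. This is a hypothetical Python illustration, not gitStream's configuration syntax: the path prefixes, outcome labels, and the `review_policy` function are assumptions chosen to mirror the listed examples (auto-approve documentation-only changes, require senior review for risky code).

```python
# Hypothetical path conventions; adjust to your repository layout.
DOC_SUFFIXES = (".md", ".rst", ".txt")
SENSITIVE_PREFIXES = ("src/payments/", "src/auth/", "migrations/")


def review_policy(changed_files: list[str]) -> str:
    """Decide how a PR should be reviewed based only on the files it touches."""
    if not changed_files:
        return "no-op"
    # Check risky paths first so a docs file cannot mask a sensitive change.
    if any(path.startswith(SENSITIVE_PREFIXES) for path in changed_files):
        return "require-senior-review"
    if all(path.endswith(DOC_SUFFIXES) for path in changed_files):
        return "auto-approve"
    return "standard-review"


print(review_policy(["docs/setup.md", "README.md"]))         # auto-approve
print(review_policy(["src/payments/refund.ts", "notes.md"]))  # require-senior-review
print(review_policy(["src/ui/button.tsx"]))                   # standard-review
```

Ordering the checks from most to least restrictive is the key design choice: a mixed PR always falls into the stricter bucket.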

10. Aikido Security

Aikido Security is a developer-first application security platform built to reduce alert fatigue. It unifies SAST, DAST, and dependency scanning into a single workflow while aggressively filtering out low-signal findings. The result is security feedback developers actually trust and act on.

Key Capabilities

Unified security scanning: Combines SAST, DAST, and dependency vulnerability checks in one platform.

Noise reduction up to 95%: Filters out non-exploitable and irrelevant alerts to prevent alert fatigue.

Developer-first remediation: Prioritizes clear, actionable fixes instead of long security reports.

CI/CD-friendly integration: Delivers security feedback directly inside PRs and pipelines.
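Noise reduction of this kind usually comes down to triage rules applied before findings reach developers. The sketch below assumes hypothetical finding fields (`reachable`, `severity`, `in_test_code`), drops findings that are unreachable, below a severity threshold, or in test-only code, and then de-duplicates by rule and location; it illustrates the general idea and is not Aikido's actual filtering logic.

```python
from dataclasses import dataclass

SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass(frozen=True)
class Finding:
    rule_id: str
    path: str
    line: int
    severity: str
    reachable: bool      # hypothetical: is the vulnerable code path actually called?
    in_test_code: bool


def triage(findings: list[Finding], min_severity: str = "medium") -> list[Finding]:
    """Keep reachable, non-test findings at or above min_severity, de-duplicated."""
    threshold = SEVERITY_RANK[min_severity]
    seen = set()
    kept = []
    for f in findings:
        if not f.reachable or f.in_test_code or SEVERITY_RANK[f.severity] < threshold:
            continue
        key = (f.rule_id, f.path, f.line)
        if key in seen:  # same issue reported twice, e.g. by two scanners
            continue
        seen.add(key)
        kept.append(f)
    return kept


raw = [
    Finding("sql-injection", "api/users.py", 42, "high", True, False),
    Finding("sql-injection", "api/users.py", 42, "high", True, False),
    Finding("weak-hash", "tests/fixtures.py", 10, "medium", True, True),
    Finding("prototype-pollution", "node_modules/lib.js", 7, "critical", False, False),
    Finding("missing-header", "app.py", 3, "low", True, False),
]
print(f"{len(raw)} raw findings -> {len(triage(raw))} kept")
```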

11. Bito

Bito brings AI-powered code review directly into the IDE, allowing developers to catch issues before code ever reaches a pull request. It focuses on real-time feedback inside editors like VS Code and JetBrains, reducing noisy PRs and speeding up team reviews.

Key Capabilities

IDE-native code review: Analyzes code in real time inside VS Code and JetBrains.

Pre-PR issue detection: Flags bugs, logic errors, and improvements before pushing to GitHub.

Multi-purpose AI assistant: Supports code generation, test creation, and review in one tool.

Lightweight and fast: Designed for low-latency feedback without interrupting developer flow.

12. Augment Code

Augment Code is a fast-rising AI coding assistant known for high-speed code completion and strong large-context understanding. While its primary strength is generation, its code review capabilities are evolving and already effective at catching real errors.

Key Capabilities

Large-context code understanding: Analyzes broader code scopes to predict intent and surface issues.

Error detection during development: Identifies logical and syntactic problems as code is written.

Copilot-class completion speed: Competes directly with GitHub Copilot on responsiveness.

Early-stage review UX: Strong detection accuracy, but review workflows are still maturing.

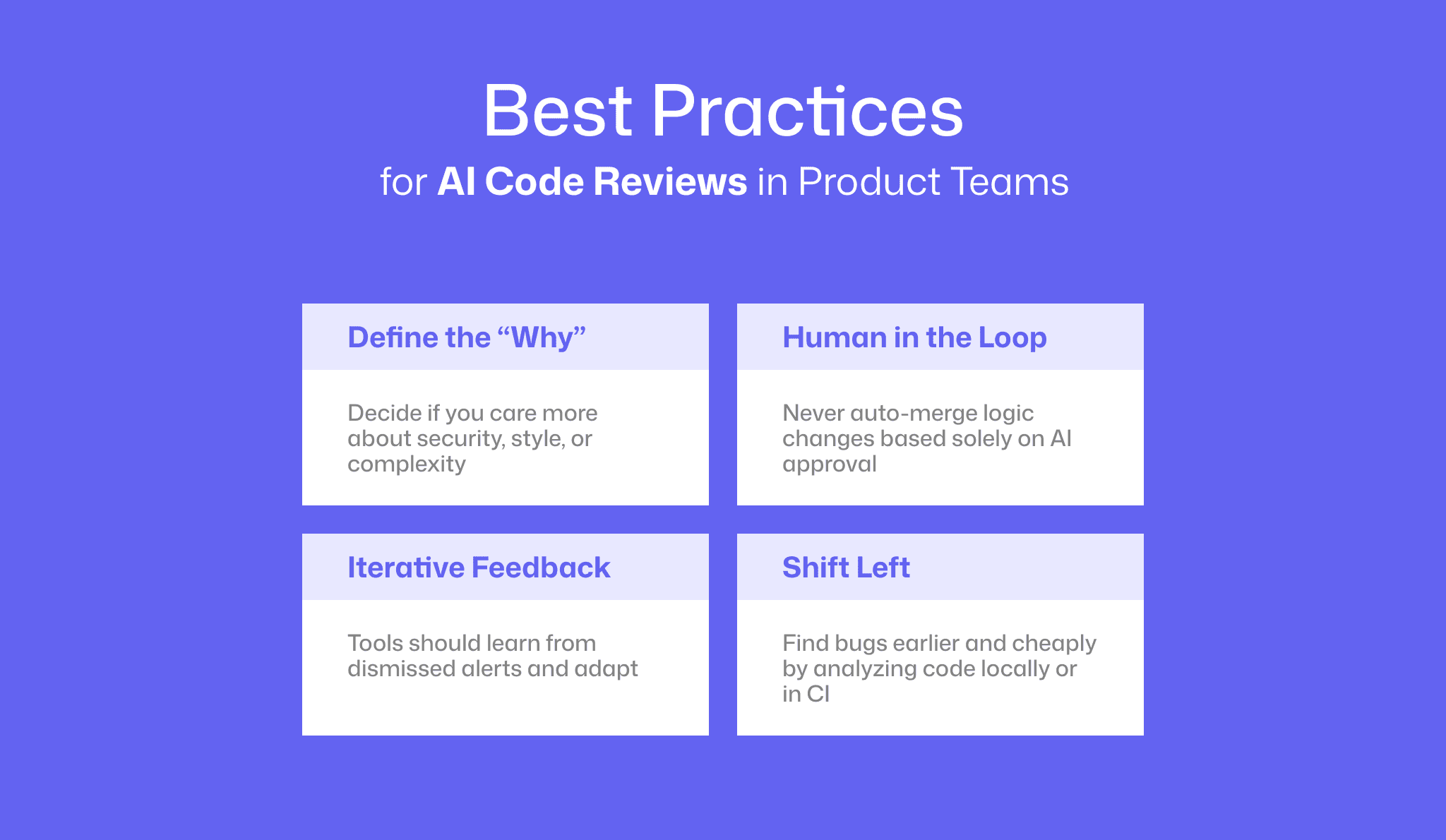

Best Practices for AI Code Reviews in Product Teams

To get the most out of these tools, teams need to integrate them into their culture, not just their pipeline.

Define the "Why": Don't just turn on every rule. Decide if you care more about security, style, or complexity.

Human in the Loop: AI should propose changes, but a human must accept them. Never auto-merge logic changes based solely on AI approval.

Iterative Feedback: Use tools that learn. If you dismiss an alert, the tool should learn not to show it again.

Shift Left: Run these tools locally or in CI, not just at the PR stage. The earlier you catch a bug, the cheaper it is to fix.
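The "Iterative Feedback" practice can be approximated even when a tool lacks built-in learning. A minimal sketch, assuming each alert is identified by a fingerprint of its rule ID, file path, and the flagged line's text rather than its line number, so the fingerprint survives unrelated edits elsewhere in the file; the `SuppressionList` class and the rule name are hypothetical.

```python
import hashlib


def fingerprint(rule_id: str, path: str, line_text: str) -> str:
    """Stable alert ID that ignores line-number shifts and leading/trailing whitespace."""
    raw = f"{rule_id}|{path}|{line_text.strip()}"
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()[:16]


class SuppressionList:
    """Remembers alerts a reviewer dismissed so they are not raised again."""

    def __init__(self) -> None:
        self._dismissed: set[str] = set()

    def dismiss(self, rule_id: str, path: str, line_text: str) -> None:
        self._dismissed.add(fingerprint(rule_id, path, line_text))

    def should_report(self, rule_id: str, path: str, line_text: str) -> bool:
        return fingerprint(rule_id, path, line_text) not in self._dismissed


suppressions = SuppressionList()
suppressions.dismiss("no-magic-number", "src/config.ts", "const RETRIES = 3;")
# The same line, now indented differently after a refactor, stays suppressed.
print(suppressions.should_report("no-magic-number", "src/config.ts", "    const RETRIES = 3;"))  # False
```

If the flagged line's content changes, the fingerprint changes too and the alert resurfaces, which is usually the desired behavior: the reviewer dismissed that specific code, not the rule.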

Choosing the Best AI Code Review Tool: Our Takeaway

The landscape of AI code review in 2026 offers a tool for every specific need. Whether you prioritize security, context-aware summaries, or a comprehensive code health platform, the technology is ready for prime time.

For full-stack product teams, the goal is clear: reduce the friction of shipping code. By choosing a tool that fits your workflow and treating it as a teammate rather than a policeman, you can significantly improve both developer happiness and product quality. Start with a trial, measure the impact on your cycle time, and iterate.